Remi Desmartin

A Certified Proof Checker for Deep Neural Network Verification

May 17, 2024Abstract:Recent advances in the verification of deep neural networks (DNNs) have opened the way for broader usage of DNN verification technology in many application areas, including safety-critical ones. DNN verifiers are themselves complex programs that have been shown to be susceptible to errors and imprecisions; this in turn has raised the question of trust in DNN verifiers. One prominent attempt to address this issue is enhancing DNN verifiers with the capability of producing proofs of their results that are subject to independent algorithmic certification (proof checking). Formulations of proof production and proof checking already exist on top of the state-of-the-art Marabou DNN verifier. The native implementation of the proof checking algorithm for Marabou was done in C++ and itself raised the question of trust in the code (e.g., in the precision of floating point calculations or guarantees for implementation soundness). Here, we present an alternative implementation of the Marabou proof checking algorithm in Imandra -- an industrial functional programming language and prover -- that allows us to obtain an implementation with formal guarantees, including proofs of mathematical results underlying the algorithm, such as the use of the Farkas lemma.

Towards a Certified Proof Checker for Deep Neural Network Verification

Jul 12, 2023Abstract:Recent developments in deep neural networks (DNNs) have led to their adoption in safety-critical systems, which in turn has heightened the need for guaranteeing their safety. These safety properties of DNNs can be proven using tools developed by the verification community. However, these tools are themselves prone to implementation bugs and numerical stability problems, which make their reliability questionable. To overcome this, some verifiers produce proofs of their results which can be checked by a trusted checker. In this work, we present a novel implementation of a proof checker for DNN verification. It improves on existing implementations by offering numerical stability and greater verifiability. To achieve this, we leverage two key capabilities of Imandra, an industrial theorem prover: its support of infinite precision real arithmetic and its formal verification infrastructure. So far, we have implemented a proof checker in Imandra, specified its correctness properties and started to verify the checker's compliance with them. Our ongoing work focuses on completing the formal verification of the checker and further optimizing its performance.

CheckINN: Wide Range Neural Network Verification in Imandra (Extended)

Jul 28, 2022

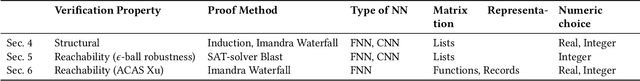

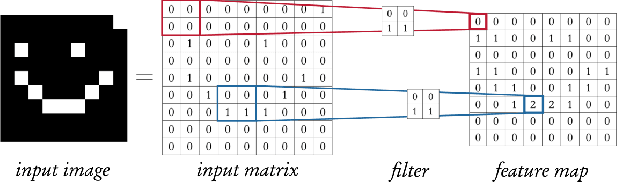

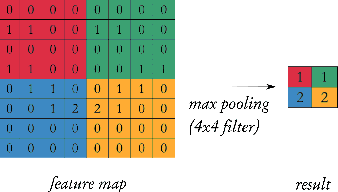

Abstract:Neural networks are increasingly relied upon as components of complex safety-critical systems such as autonomous vehicles. There is high demand for tools and methods that embed neural network verification in a larger verification cycle. However, neural network verification is difficult due to a wide range of verification properties of interest, each typically only amenable to verification in specialised solvers. In this paper, we show how Imandra, a functional programming language and a theorem prover originally designed for verification, validation and simulation of financial infrastructure can offer a holistic infrastructure for neural network verification. We develop a novel library CheckINN that formalises neural networks in Imandra, and covers different important facets of neural network verification.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge