Rob Stewart

Property-driven Training: All You Ever Wanted to Know About

Apr 03, 2021

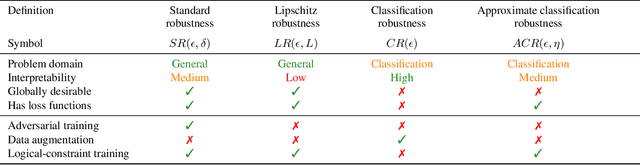

Abstract:Neural networks are known for their ability to detect general patterns in noisy data. This makes them a popular tool for perception components in complex AI systems. Paradoxically, they are also known for being vulnerable to adversarial attacks. In response, various methods such as adversarial training, data-augmentation and Lipschitz robustness training have been proposed as means of improving their robustness. However, as this paper explores, these training methods each optimise for a different definition of robustness. We perform an in-depth comparison of these different definitions, including their relationship, assumptions, interpretability and verifiability after training. We also look at constraint-driven training, a general approach designed to encode arbitrary constraints, and show that not all of these definitions are directly encodable. Finally we perform experiments to compare the applicability and efficacy of the training methods at ensuring the network obeys these different definitions. These results highlight that even the encoding of such a simple piece of knowledge such as robustness in neural network training is fraught with difficult choices and pitfalls.

Neural Network Verification for the Masses (of AI graduates)

Jul 02, 2019

Abstract:Rapid development of AI applications has stimulated demand for, and has given rise to, the rapidly growing number and diversity of AI MSc degrees. AI and Robotics research communities, industries and students are becoming increasingly aware of the problems caused by unsafe or insecure AI applications. Among them, perhaps the most famous example is vulnerability of deep neural networks to ``adversarial attacks''. Owing to wide-spread use of neural networks in all areas of AI, this problem is seen as particularly acute and pervasive. Despite of the growing number of research papers about safety and security vulnerabilities of AI applications, there is a noticeable shortage of accessible tools, methods and teaching materials for incorporating verification into AI programs. LAIV -- the Lab for AI and Verification -- is a newly opened research lab at Heriot-Watt university that engages AI and Robotics MSc students in verification projects, as part of their MSc dissertation work. In this paper, we will report on successes and unexpected difficulties LAIV faces, many of which arise from limitations of existing programming languages used for verification. We will discuss future directions for incorporating verification into AI degrees.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge