Sangjae Bae

Occupancy-aware Trajectory Planning for Autonomous Valet Parking in Uncertain Dynamic Environments

Sep 11, 2025

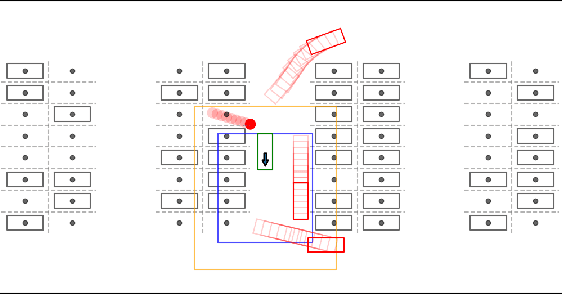

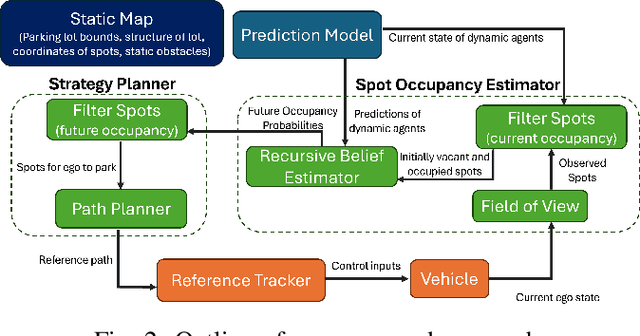

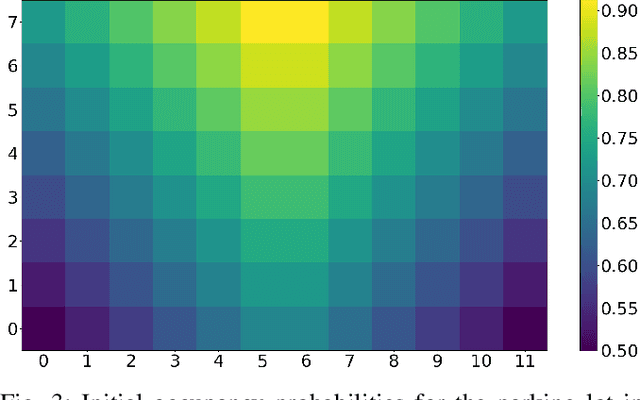

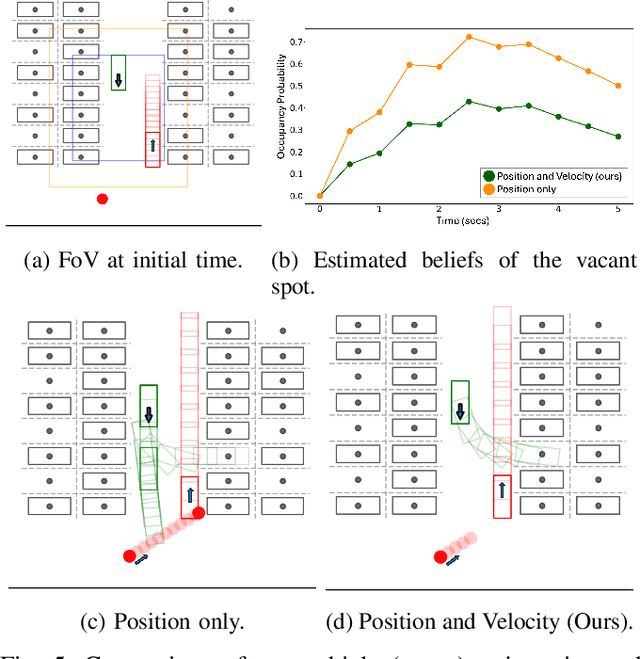

Abstract: Accurately reasoning about future parking spot availability and integrated planning is critical for enabling safe and efficient autonomous valet parking in dynamic, uncertain environments. Unlike existing methods that rely solely on instantaneous observations or static assumptions, we present an approach that predicts future parking spot occupancy by explicitly distinguishing between initially vacant and occupied spots, and by leveraging the predicted motion of dynamic agents. We introduce a probabilistic spot occupancy estimator that incorporates partial and noisy observations within a limited Field-of-View (FoV) model and accounts for the evolving uncertainty of unobserved regions. Coupled with this, we design a strategy planner that adaptively balances goal-directed parking maneuvers with exploratory navigation based on information gain, and intelligently incorporates wait-and-go behaviors at promising spots. Through randomized simulations emulating large parking lots, we demonstrate that our framework significantly improves parking efficiency, safety margins, and trajectory smoothness compared to existing approaches.

IANN-MPPI: Interaction-Aware Neural Network-Enhanced Model Predictive Path Integral Approach for Autonomous Driving

Jul 16, 2025

Abstract: Motion planning for autonomous vehicles (AVs) in dense traffic is challenging, often leading to overly conservative behavior and unmet planning objectives. This challenge stems from the AVs' limited ability to anticipate and respond to the interactive behavior of surrounding agents. Traditional decoupled prediction and planning pipelines rely on non-interactive predictions that overlook the fact that agents often adapt their behavior in response to the AV's actions. To address this, we propose Interaction-Aware Neural Network-Enhanced Model Predictive Path Integral (IANN-MPPI) control, which enables interactive trajectory planning by predicting how surrounding agents may react to each control sequence sampled by MPPI. To improve performance in structured lane environments, we introduce a spline-based prior for the MPPI sampling distribution, enabling efficient lane-changing behavior. We evaluate IANN-MPPI in a dense traffic merging scenario, demonstrating its ability to perform efficient merging maneuvers. Our project website is available at https://sites.google.com/berkeley.edu/iann-mppi
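For readers unfamiliar with MPPI, the following is a minimal sketch of the generic importance-weighted update that MPPI-style controllers apply to sampled control sequences. It is not the IANN-MPPI implementation: the function names, the `temperature` parameter, and the list-based data layout are assumptions made for illustration, and the neural-network prediction of agent reactions and the spline-based sampling prior described in the abstract are omitted. In an interaction-aware variant, each entry of `costs` would presumably be evaluated against the predicted reaction of surrounding agents to that sampled sequence.

```python
import math


def mppi_weights(costs, temperature=1.0):
    """Softmin weights over rollout costs (generic MPPI importance weights)."""
    best = min(costs)  # subtract the minimum cost for numerical stability
    unnormalized = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def mppi_update(nominal, perturbations, costs, temperature=1.0):
    """Shift the nominal control sequence by the cost-weighted perturbations.

    nominal:       list of controls over the horizon (hypothetical 1-D input)
    perturbations: one sampled noise sequence per rollout, same length as nominal
    costs:         total cost of each rollout
    """
    weights = mppi_weights(costs, temperature)
    return [
        nominal[t] + sum(w * eps[t] for w, eps in zip(weights, perturbations))
        for t in range(len(nominal))
    ]


# Two rollouts: the first is far cheaper, so the update follows its perturbation.
updated = mppi_update([0.0, 0.0], [[1.0, 0.5], [-1.0, -0.5]], [0.0, 1000.0])
```

A lower `temperature` concentrates the weight on the cheapest rollouts, while a higher one averages more broadly across samples.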

Frenet Corridor Planner: An Optimal Local Path Planning Framework for Autonomous Driving

May 06, 2025

Abstract: Motivated by the requirements for effectiveness and efficiency, path-speed decomposition-based trajectory planning methods have widely been adopted for autonomous driving applications. While a global route can be pre-computed offline, real-time generation of adaptive local paths remains crucial. Therefore, we present the Frenet Corridor Planner (FCP), an optimization-based local path planning strategy for autonomous driving that ensures smooth and safe navigation around obstacles. Modeling the vehicles as safety-augmented bounding boxes and pedestrians as convex hulls in the Frenet space, our approach defines a drivable corridor by determining the appropriate deviation side for static obstacles. Thereafter, a modified space-domain bicycle kinematics model enables path optimization for smoothness, boundary clearance, and dynamic obstacle risk minimization. The optimized path is then passed to a speed planner to generate the final trajectory. We validate FCP through extensive simulations and real-world hardware experiments, demonstrating its efficiency and effectiveness.

Graph-based Path Planning with Dynamic Obstacle Avoidance for Autonomous Parking

Apr 17, 2025

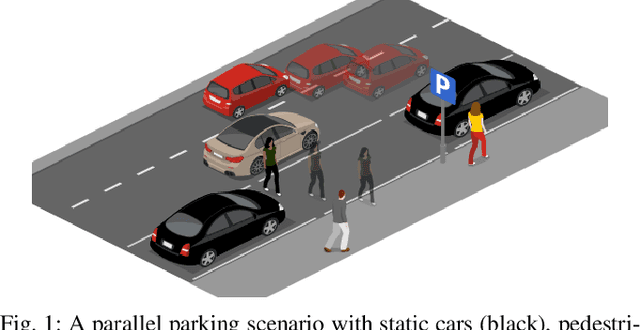

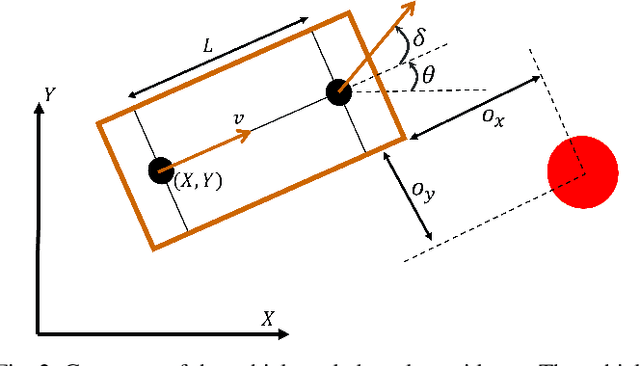

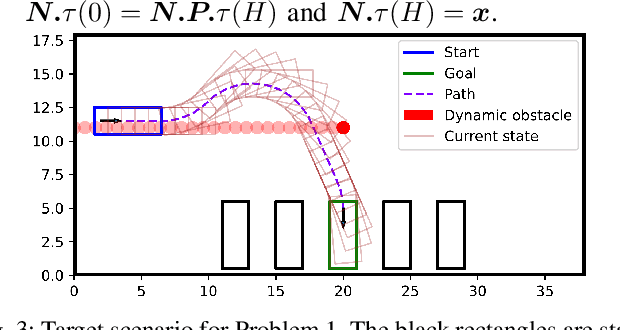

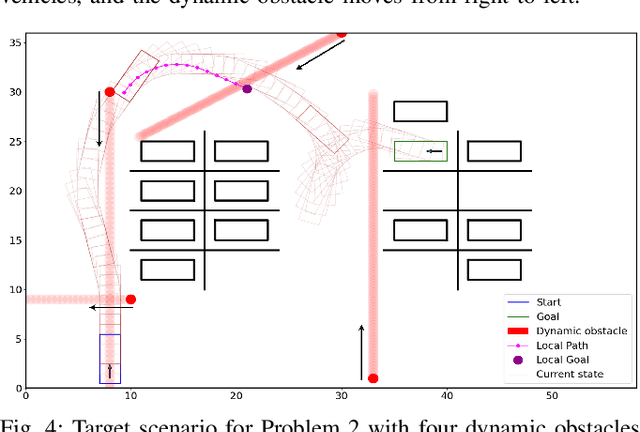

Abstract: Safe and efficient path planning in parking scenarios presents a significant challenge due to the presence of cluttered environments filled with static and dynamic obstacles. To address this, we propose a novel and computationally efficient planning strategy that seamlessly integrates the predictions of dynamic obstacles into the planning process, ensuring the generation of collision-free paths. Our approach builds upon the conventional Hybrid A star algorithm by introducing a time-indexed variant that explicitly accounts for the predictions of dynamic obstacles during node exploration in the graph, thus enabling dynamic obstacle avoidance. We integrate the time-indexed Hybrid A star algorithm within an online planning framework to compute local paths at each planning step, guided by an adaptively chosen intermediate goal. The proposed method is validated in diverse parking scenarios, including perpendicular, angled, and parallel parking. Through simulations, we showcase our approach's potential to greatly improve efficiency and safety compared to the state-of-the-art spline-based planning method for parking situations.
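The idea of time-indexed node exploration can be illustrated with a small sketch. The code below is not the paper's planner: it swaps Hybrid A star's continuous, kinematically feasible expansion for a plain 4-connected grid A star, purely to show how adding a time index to each node lets the search reject cells that a dynamic obstacle is predicted to occupy at that step. The function name, the `predicted` dictionary format (time step mapped to a set of occupied cells), and the wait action are assumptions, and edge conflicts such as two agents swapping cells are not checked.

```python
import heapq


def time_indexed_astar(start, goal, static_obstacles, predicted,
                       width, height, max_t=50):
    """Grid A* over (cell, t) states with unit cost per time step.

    predicted[t] is the set of cells that dynamic obstacles are predicted
    to occupy at time step t (hypothetical input format).
    """
    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    moves = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]  # (0, 0) waits in place
    frontier = [(heuristic(start), 0, start, [start])]
    visited = set()
    while frontier:
        _, t, cell, path = heapq.heappop(frontier)
        if cell == goal:
            return path
        if (cell, t) in visited or t >= max_t:
            continue
        visited.add((cell, t))
        for dx, dy in moves:
            nxt = (cell[0] + dx, cell[1] + dy)
            if not (0 <= nxt[0] < width and 0 <= nxt[1] < height):
                continue  # outside the map
            if nxt in static_obstacles:
                continue  # blocked at every time step
            if nxt in predicted.get(t + 1, set()):
                continue  # predicted to be occupied when the ego would arrive
            heapq.heappush(
                frontier, (t + 1 + heuristic(nxt), t + 1, nxt, path + [nxt])
            )
    return None


# A 3x1 corridor where a moving obstacle is predicted at (1, 0) at t = 1.
path = time_indexed_astar((0, 0), (2, 0), set(), {1: {(1, 0)}}, 3, 1)
```

In this toy corridor the search waits one step at the start cell and then proceeds, which a planner without the time index could not represent.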

Graph-Grounded LLMs: Leveraging Graphical Function Calling to Minimize LLM Hallucinations

Mar 13, 2025

Abstract: The adoption of Large Language Models (LLMs) is rapidly expanding across various tasks that involve inherent graphical structures. Graphs are integral to a wide range of applications, including motion planning for autonomous vehicles, social networks, scene understanding, and knowledge graphs. Many problems, even those not initially perceived as graph-based, can be effectively addressed through graph theory. However, when applied to these tasks, LLMs often encounter challenges, such as hallucinations and mathematical inaccuracies. To overcome these limitations, we propose Graph-Grounded LLMs, a system that improves LLM performance on graph-related tasks by integrating a graph library through function calls. By grounding LLMs in this manner, we demonstrate significant reductions in hallucinations and improved mathematical accuracy in solving graph-based problems, as evidenced by the performance on the NLGraph benchmark. Finally, we showcase a disaster rescue application where the Graph-Grounded LLM acts as a decision-support system.
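To make the function-calling idea concrete, here is a minimal sketch in which a model's structured output is dispatched to a graph routine instead of the model computing the answer in free text. Everything here is hypothetical: the abstract does not name the graph library or the call format, so the `TOOLS` registry, the JSON schema, the `dispatch` helper, and the hand-written `model_output` string (standing in for what an LLM might emit) are all illustrative assumptions.

```python
import json
from collections import deque


def shortest_path(edges, source, target):
    """Breadth-first shortest path on an undirected, unweighted graph."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, []).append(v)
        adjacency.setdefault(v, []).append(u)
    parents = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # walk parent links back to the source
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in adjacency.get(node, []):
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None  # target is unreachable


# Hypothetical registry of graph functions exposed to the model.
TOOLS = {"shortest_path": shortest_path}


def dispatch(call_json):
    """Parse a JSON function call and run the matching graph routine."""
    call = json.loads(call_json)
    return TOOLS[call["name"]](**call["arguments"])


# Stand-in for a structured call emitted by an LLM (written by hand here).
model_output = (
    '{"name": "shortest_path", "arguments": {"edges": '
    '[[0, 1], [1, 2], [0, 3], [3, 4], [4, 2]], "source": 0, "target": 2}}'
)
result = dispatch(model_output)
```

The design point is that the numerical answer comes from deterministic graph code, so the model only has to choose the right function and arguments.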

GFlowVLM: Enhancing Multi-step Reasoning in Vision-Language Models with Generative Flow Networks

Mar 09, 2025

Abstract: Vision-Language Models (VLMs) have recently shown promising advancements in sequential decision-making tasks through task-specific fine-tuning. However, common fine-tuning methods, such as Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) techniques like Proximal Policy Optimization (PPO), present notable limitations: SFT assumes Independent and Identically Distributed (IID) data, while PPO focuses on maximizing cumulative rewards. These limitations often restrict solution diversity and hinder generalization in multi-step reasoning tasks. To address these challenges, we introduce GFlowVLM, a novel framework that fine-tunes VLMs using Generative Flow Networks (GFlowNets) to promote generation of diverse solutions for complex reasoning tasks. GFlowVLM models the environment as a non-Markovian decision process, allowing it to capture long-term dependencies essential for real-world applications. It takes observations and task descriptions as inputs to prompt chain-of-thought (CoT) reasoning, which subsequently guides action selection. We use task-based rewards to fine-tune the VLM with GFlowNets. This approach enables VLMs to outperform prior fine-tuning methods, including SFT and RL. Empirical results demonstrate the effectiveness of GFlowVLM on complex tasks such as card games (NumberLine, BlackJack) and embodied planning tasks (ALFWorld), showing enhanced training efficiency, solution diversity, and stronger generalization capabilities across both in-distribution and out-of-distribution scenarios.

Delayed-Decision Motion Planning in the Presence of Multiple Predictions

Feb 28, 2025

Abstract: Reliable automated driving technology is challenged by various sources of uncertainties, in particular, behavioral uncertainties of traffic agents. It is common for traffic agents to have intentions that are unknown to others, leaving an automated driving car to reason over multiple possible behaviors. This paper formalizes a behavior planning scheme in the presence of multiple possible futures with corresponding probabilities. We present a maximum entropy formulation and show how, under certain assumptions, this allows delayed decision-making to improve safety. The general formulation is then turned into a model predictive control formulation, which is solved as a quadratic program or a set of quadratic programs. We discuss implementation details for improving computation and verify operation in simulation and on a mobile robot.

End-to-End Predictive Planner for Autonomous Driving with Consistency Models

Feb 12, 2025

Abstract: Trajectory prediction and planning are fundamental components for autonomous vehicles to navigate safely and efficiently in dynamic environments. Traditionally, these components have often been treated as separate modules, limiting the ability to perform interactive planning and leading to computational inefficiency in multi-agent scenarios. In this paper, we present a novel unified and data-driven framework that integrates prediction and planning with a single consistency model. Trained on real-world human driving datasets, our consistency model generates samples from high-dimensional, multimodal joint trajectory distributions of the ego and multiple surrounding agents, enabling end-to-end predictive planning. It effectively produces interactive behaviors, such as proactive nudging and yielding to ensure both safe and efficient interactions with other road users. To incorporate additional planning constraints on the ego vehicle, we propose an alternating direction method for multi-objective guidance in online guided sampling. Compared to diffusion models, our consistency model achieves better performance with fewer sampling steps, making it more suitable for real-time deployment. Experimental results on Waymo Open Motion Dataset (WOMD) demonstrate our method's superiority in trajectory quality, constraint satisfaction, and interactive behavior compared to various existing approaches.

Generalized Mission Planning for Heterogeneous Multi-Robot Teams via LLM-constructed Hierarchical Trees

Jan 27, 2025

Abstract: We present a novel mission-planning strategy for heterogeneous multi-robot teams, taking into account the specific constraints and capabilities of each robot. Our approach employs hierarchical trees to systematically break down complex missions into manageable sub-tasks. We develop specialized APIs and tools, which are utilized by Large Language Models (LLMs) to efficiently construct these hierarchical trees. Once the hierarchical tree is generated, it is further decomposed to create optimized schedules for each robot, ensuring adherence to their individual constraints and capabilities. We demonstrate the effectiveness of our framework through detailed examples covering a wide range of missions, showcasing its flexibility and scalability.

Solving Reach-Avoid-Stay Problems Using Deep Deterministic Policy Gradients

Oct 03, 2024

Abstract: Reach-Avoid-Stay (RAS) optimal control enables systems such as robots and air taxis to reach their targets, avoid obstacles, and stay near the target. However, current methods for RAS often struggle with handling complex, dynamic environments and scaling to high-dimensional systems. While reinforcement learning (RL)-based reachability analysis addresses these challenges, it has yet to tackle the RAS problem. In this paper, we propose a two-step deep deterministic policy gradient (DDPG) method that extends the RL-based reachability method to solve RAS problems. First, we train a function that characterizes the maximal robust control invariant set within the target set, where the system can safely stay, along with its corresponding policy. Second, we train a function that defines the set of states capable of safely reaching the robust control invariant set, along with its corresponding policy. We prove that this method results in the maximal robust RAS set in the absence of training errors and demonstrate that it enables RAS in complex environments, scales to high-dimensional systems, and achieves higher success rates for the RAS task compared to previous methods, validated through one simulation and two high-dimensional experiments.
