Minjun Sung

IANN-MPPI: Interaction-Aware Neural Network-Enhanced Model Predictive Path Integral Approach for Autonomous Driving

Jul 16, 2025

Abstract:Motion planning for autonomous vehicles (AVs) in dense traffic is challenging, often leading to overly conservative behavior and unmet planning objectives. This challenge stems from the AVs' limited ability to anticipate and respond to the interactive behavior of surrounding agents. Traditional decoupled prediction and planning pipelines rely on non-interactive predictions that overlook the fact that agents often adapt their behavior in response to the AV's actions. To address this, we propose Interaction-Aware Neural Network-Enhanced Model Predictive Path Integral (IANN-MPPI) control, which enables interactive trajectory planning by predicting how surrounding agents may react to each control sequence sampled by MPPI. To improve performance in structured lane environments, we introduce a spline-based prior for the MPPI sampling distribution, enabling efficient lane-changing behavior. We evaluate IANN-MPPI in a dense traffic merging scenario, demonstrating its ability to perform efficient merging maneuvers. Our project website is available at https://sites.google.com/berkeley.edu/iann-mppi
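To make the sampling idea concrete, the following is a minimal sketch of a generic MPPI update, not the authors' implementation. It assumes a user-supplied `rollout_cost` callable (a hypothetical name) that scores one sampled control sequence; in an interaction-aware setting that callable would be the place to query a learned predictor for how other agents react to that particular sequence. The Gaussian sampling here stands in for the spline-based prior, and `mppi_weights`, `mppi_step`, `sigma`, and `temperature` are illustrative names and parameters.

```python
import math
import random


def mppi_weights(costs, temperature=1.0):
    """Softmax-style weights over sampled rollouts: lower cost, higher weight."""
    c_min = min(costs)  # subtract the minimum for numerical stability
    unnorm = [math.exp(-(c - c_min) / temperature) for c in costs]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def mppi_step(nominal, rollout_cost, num_samples=256, sigma=0.5,
              temperature=1.0, rng=None):
    """One MPPI update of a nominal control sequence (list of floats).

    rollout_cost(controls) -> float is assumed to simulate the ego vehicle
    and, if desired, the predicted reactions of surrounding agents.
    """
    rng = rng or random.Random(0)
    # Perturb the nominal sequence with Gaussian noise (a plain prior,
    # used here in place of a spline-based sampling distribution).
    samples = [[u + rng.gauss(0.0, sigma) for u in nominal]
               for _ in range(num_samples)]
    costs = [rollout_cost(s) for s in samples]
    weights = mppi_weights(costs, temperature)
    # The new nominal sequence is the cost-weighted average of the samples.
    return [sum(w * s[t] for w, s in zip(weights, samples))
            for t in range(len(nominal))]


def toy_cost(controls):
    """Toy objective: prefer control values close to 1.0 at every step."""
    return sum((u - 1.0) ** 2 for u in controls)


updated = mppi_step([0.0, 0.0, 0.0], toy_cost)
```

Because every sample is scored independently, conditioning the prediction on each sampled sequence fits naturally inside `rollout_cost` without changing the weighting step.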

Graph-based Path Planning with Dynamic Obstacle Avoidance for Autonomous Parking

Apr 17, 2025

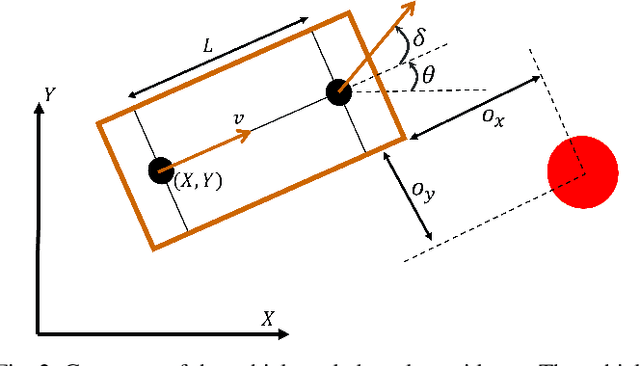

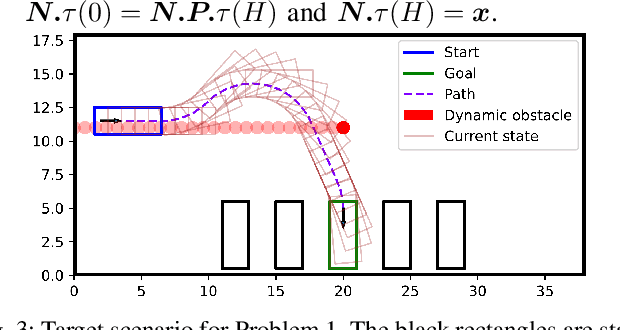

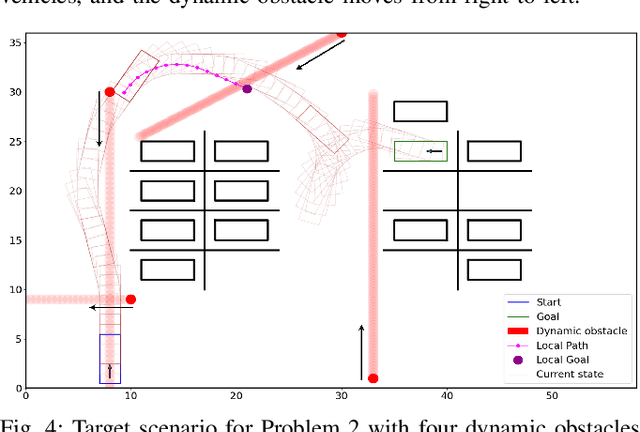

Abstract:Safe and efficient path planning in parking scenarios presents a significant challenge due to cluttered environments filled with static and dynamic obstacles. To address this, we propose a novel and computationally efficient planning strategy that integrates the predictions of dynamic obstacles into the planning process to generate collision-free paths. Our approach builds upon the conventional Hybrid A* algorithm by introducing a time-indexed variant that explicitly accounts for the predictions of dynamic obstacles during node exploration in the graph, thus enabling dynamic obstacle avoidance. We integrate the time-indexed Hybrid A* algorithm within an online planning framework to compute local paths at each planning step, guided by an adaptively chosen intermediate goal. The proposed method is validated in diverse parking scenarios, including perpendicular, angled, and parallel parking. Through simulations, we showcase our approach's potential to substantially improve efficiency and safety compared with the state-of-the-art spline-based planning method for parking situations.
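The time-indexing idea can be illustrated with a deliberately simplified sketch. It uses plain grid A* over (cell, time step) states rather than Hybrid A*, which searches over continuous poses with kinematic motion primitives, so it should be read as an analogy and not as the paper's planner. The function name `time_indexed_astar`, the `predicted` dictionary (time step to occupied cells), and the wait action are all assumptions made for illustration; swap conflicts between the ego and an obstacle are not checked.

```python
import heapq


def time_indexed_astar(start, goal, width, height, static_obstacles,
                       predicted, max_t=50):
    """Grid A* over (cell, time) states.

    predicted maps a time step t to the set of cells that dynamic
    obstacles are predicted to occupy at t. Returns a list of cells,
    one per time step, or None if no path is found within max_t steps.
    """
    def heuristic(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    moves = [(1, 0), (-1, 0), (0, 1), (0, -1), (0, 0)]  # (0, 0) = wait
    open_heap = [(heuristic(start), 0, start, [start])]
    visited = set()
    while open_heap:
        _, t, cell, path = heapq.heappop(open_heap)
        if cell == goal:
            return path
        if (cell, t) in visited or t >= max_t:
            continue
        visited.add((cell, t))
        for dx, dy in moves:
            nxt = (cell[0] + dx, cell[1] + dy)
            if not (0 <= nxt[0] < width and 0 <= nxt[1] < height):
                continue
            if nxt in static_obstacles:
                continue
            # Time-indexed check: is the cell predicted occupied at t + 1?
            if nxt in predicted.get(t + 1, set()):
                continue
            heapq.heappush(
                open_heap,
                (t + 1 + heuristic(nxt), t + 1, nxt, path + [nxt]),
            )
    return None


# A 3x1 corridor where a moving obstacle blocks the middle cell at t = 1.
path = time_indexed_astar((0, 0), (2, 0), 3, 1, set(), {1: {(1, 0)}})
```

In this corridor the planner waits one step at the start cell and then proceeds, which a purely static collision check could not express.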

Addressing Behavior Model Inaccuracies for Safe Motion Control in Uncertain Dynamic Environments

Jul 26, 2024

Abstract:Uncertainties in the environment and behavior model inaccuracies compromise the state estimation of a dynamic obstacle and its trajectory predictions, introducing biases in estimation and shifts in predictive distributions. Addressing these challenges is crucial to safely control an autonomous system. In this paper, we propose a novel algorithm SIED-MPC, which synergistically integrates Simultaneous State and Input Estimation (SSIE) and Distributionally Robust Model Predictive Control (DR-MPC) using model confidence evaluation. The SSIE process produces unbiased state estimates and optimal input gap estimates to assess the confidence of the behavior model, defining the ambiguity radius for DR-MPC to handle predictive distribution shifts. This systematic confidence evaluation leads to producing safe inputs with an adequate level of conservatism. Our algorithm demonstrated a reduced collision rate in autonomous driving simulations through improved state estimation, with a 54% shorter average computation time.

Robust Model Based Reinforcement Learning Using $\mathcal{L}_1$ Adaptive Control

Mar 21, 2024

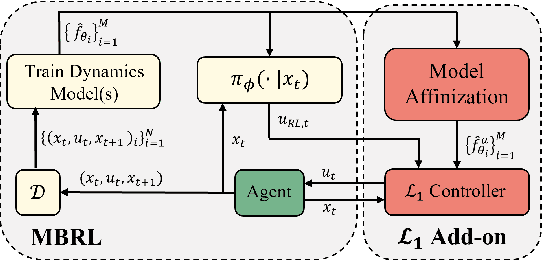

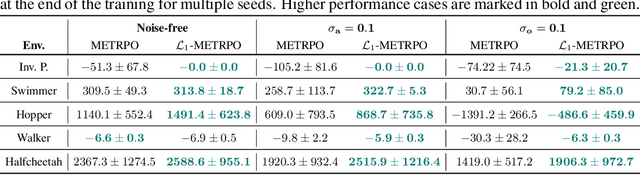

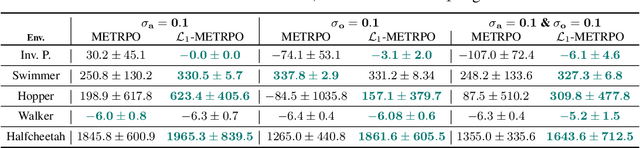

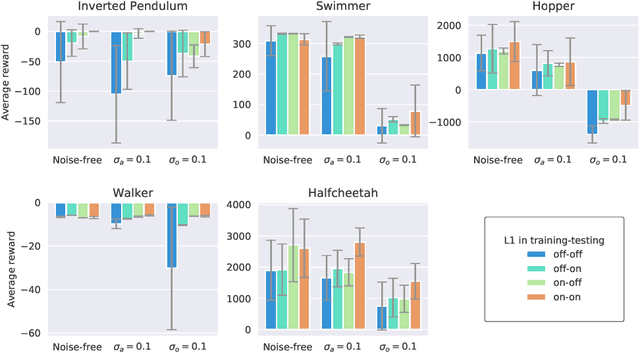

Abstract:We introduce $\mathcal{L}_1$-MBRL, a control-theoretic augmentation scheme for Model-Based Reinforcement Learning (MBRL) algorithms. Unlike model-free approaches, MBRL algorithms learn a model of the transition function using data and use it to design a control input. Our approach generates a series of approximate control-affine models of the learned transition function according to the proposed switching law. Using the approximate model, control input produced by the underlying MBRL is perturbed by the $\mathcal{L}_1$ adaptive control, which is designed to enhance the robustness of the system against uncertainties. Importantly, this approach is agnostic to the choice of MBRL algorithm, enabling the use of the scheme with various MBRL algorithms. MBRL algorithms with $\mathcal{L}_1$ augmentation exhibit enhanced performance and sample efficiency across multiple MuJoCo environments, outperforming the original MBRL algorithms, both with and without system noise.
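One common way to obtain an approximate control-affine model from a learned transition function is a first-order Taylor expansion in the control input. The abstract does not state the construction used, so the expression below is an illustrative assumption, with $\hat{f}$ denoting the learned model and $\bar{u}$ a hypothetical expansion point:

$$
\hat{f}(x, u) \approx \underbrace{\hat{f}(x, \bar{u}) - \frac{\partial \hat{f}}{\partial u}(x, \bar{u})\,\bar{u}}_{f_a(x)} + \underbrace{\frac{\partial \hat{f}}{\partial u}(x, \bar{u})}_{g_a(x)}\,u
$$

Under this assumption, a switching law would decide when to move $\bar{u}$ to a new expansion point, which yields a series of control-affine models $f_a(x) + g_a(x)\,u$ rather than a single one.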
