Darshan Gadginmath

Graph-based Path Planning with Dynamic Obstacle Avoidance for Autonomous Parking

Apr 17, 2025

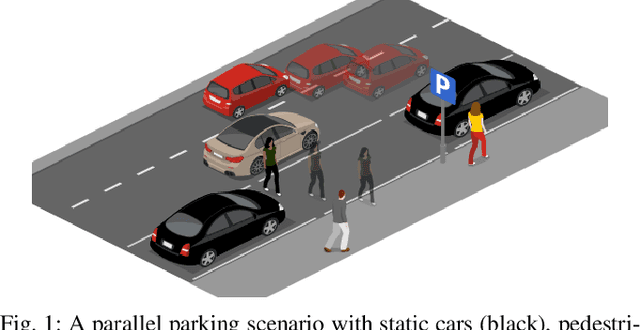

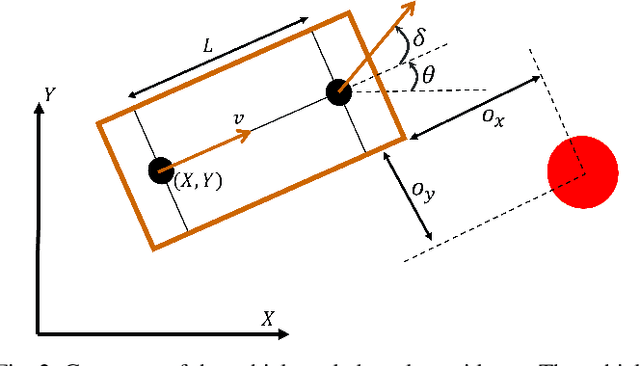

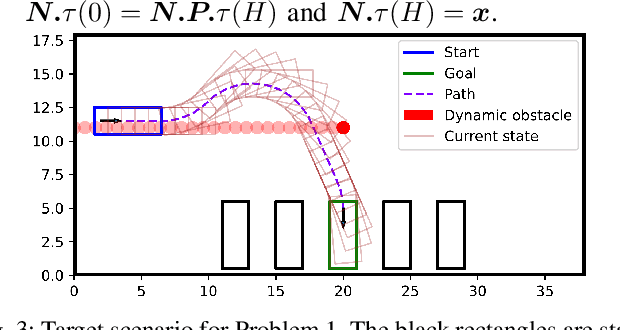

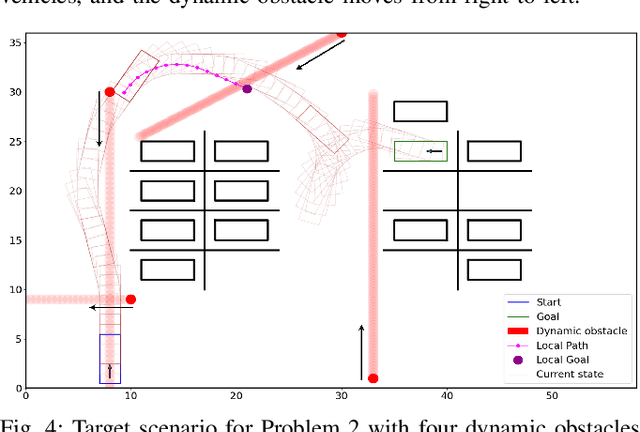

Abstract: Safe and efficient path planning in parking scenarios presents a significant challenge due to the presence of cluttered environments filled with static and dynamic obstacles. To address this, we propose a novel and computationally efficient planning strategy that seamlessly integrates the predictions of dynamic obstacles into the planning process, ensuring the generation of collision-free paths. Our approach builds upon the conventional Hybrid A star algorithm by introducing a time-indexed variant that explicitly accounts for the predictions of dynamic obstacles during node exploration in the graph, thus enabling dynamic obstacle avoidance. We integrate the time-indexed Hybrid A star algorithm within an online planning framework to compute local paths at each planning step, guided by an adaptively chosen intermediate goal. The proposed method is validated in diverse parking scenarios, including perpendicular, angled, and parallel parking. Through simulations, we showcase our approach's potential to greatly improve efficiency and safety compared to the state-of-the-art spline-based planning method for parking situations.
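To make the idea of time-indexed node exploration concrete, here is a minimal sketch, not the authors' implementation. It assumes each search node carries a discrete time step and that a predictor returns circular obstacle footprints for that step. The names `Node`, `predicted_obstacles`, `is_collision_free`, and `expand`, the circular robot footprint, and the simple heading-increment motion model are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    x: float
    y: float
    heading: float
    step: int  # discrete time index attached to the node (assumption)


def predicted_obstacles(step):
    """Hypothetical predictor: one obstacle moving along +x at 1 m per step.

    Returns a list of (center_x, center_y, radius) tuples for the given step.
    """
    return [(float(step), 0.0, 0.5)]


def is_collision_free(node, predictor, robot_radius=0.5):
    """Check the node against obstacle predictions at the node's own time step."""
    for cx, cy, radius in predictor(node.step):
        if math.hypot(node.x - cx, node.y - cy) < radius + robot_radius:
            return False
    return True


def expand(node, predictor, speed=1.0, heading_changes=(-0.3, 0.0, 0.3)):
    """Generate successors one time step ahead and keep the collision-free ones."""
    children = []
    for delta in heading_changes:
        heading = node.heading + delta
        child = Node(
            x=node.x + speed * math.cos(heading),
            y=node.y + speed * math.sin(heading),
            heading=heading,
            step=node.step + 1,
        )
        if is_collision_free(child, predictor):
            children.append(child)
    return children
```

The point of the sketch is that the same position can be blocked at one time step and free at another, so the collision check depends on `node.step` rather than on a single static obstacle map.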

Score Matching Diffusion Based Feedback Control and Planning of Nonlinear Systems

Apr 14, 2025

Abstract: We propose a novel control-theoretic framework that leverages principles from generative modeling -- specifically, Denoising Diffusion Probabilistic Models (DDPMs) -- to stabilize control-affine systems with nonholonomic constraints. Unlike traditional stochastic approaches, which rely on noise-driven dynamics in both forward and reverse processes, our method crucially eliminates the need for noise in the reverse phase, making it particularly relevant for control applications. We introduce two formulations: one where noise perturbs all state dimensions during the forward phase while the control system enforces time reversal deterministically, and another where noise is restricted to the control channels, embedding system constraints directly into the forward process. For controllable nonlinear drift-free systems, we prove that deterministic feedback laws can exactly reverse the forward process, ensuring that the system's probability density evolves correctly without requiring artificial diffusion in the reverse phase. Furthermore, for linear time-invariant systems, we establish a time-reversal result under the second formulation. By eliminating noise in the backward process, our approach provides a more practical alternative to machine learning-based denoising methods, which are unsuitable for control applications due to the presence of stochasticity. We validate our results through numerical simulations on benchmark systems, including a unicycle model in a domain with obstacles, a driftless five-dimensional system, and a four-dimensional linear system, demonstrating the potential for applying diffusion-inspired techniques in linear settings, nonlinear settings, and settings with state-space constraints.

Dynamics-aware Diffusion Models for Planning and Control

Apr 02, 2025

Abstract: This paper addresses the problem of generating dynamically admissible trajectories for control tasks using diffusion models, particularly in scenarios where the environment is complex and system dynamics are crucial for practical application. We propose a novel framework that integrates system dynamics directly into the diffusion model's denoising process through a sequential prediction and projection mechanism. This mechanism, aligned with the diffusion model's noising schedule, ensures generated trajectories are both consistent with expert demonstrations and adhere to underlying physical constraints. Notably, our approach can generate maximum likelihood trajectories and accurately recover trajectories generated by linear feedback controllers, even when explicit dynamics knowledge is unavailable. We validate the effectiveness of our method through experiments on standard control tasks and a complex non-convex optimal control problem involving waypoint tracking and collision avoidance, demonstrating its potential for efficient trajectory generation in practical applications.

Predicting AI Agent Behavior through Approximation of the Perron-Frobenius Operator

Jun 04, 2024

Abstract: Predicting the behavior of AI-driven agents is particularly challenging without a preexisting model. In our paper, we address this by treating AI agents as nonlinear dynamical systems and adopting a probabilistic perspective to predict their statistical behavior using the Perron-Frobenius (PF) operator. We formulate the approximation of the PF operator as an entropy minimization problem, which can be solved by leveraging the Markovian property of the operator and decomposing its spectrum. Our data-driven methodology approximates the PF operator to predict the evolution of the agents and, at the same time, predicts the terminal probability density of AI agents, such as robotic systems and generative models. We demonstrate the effectiveness of our prediction model through extensive experiments on practical systems driven by AI algorithms.

Denoising Diffusion-Based Control of Nonlinear Systems

Feb 03, 2024

Abstract: We propose a novel approach based on Denoising Diffusion Probabilistic Models (DDPMs) to control nonlinear dynamical systems. DDPMs are state-of-the-art generative models that have achieved success in a wide variety of sampling tasks. In our framework, we pose the feedback control problem as a generative task of drawing samples from a target set under control system constraints. The forward process of DDPMs constructs trajectories originating from a target set by adding noise. We learn to control a dynamical system in reverse such that the terminal state belongs to the target set. For control-affine systems without drift, we prove that the control system can exactly track the trajectory of the forward process in reverse, whenever the Lie bracket based condition for controllability holds. We numerically study our approach on various nonlinear systems and verify our theoretical results. We also conduct numerical experiments for cases beyond our theoretical results in a physics engine.
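As a rough illustration of the forward process mentioned in the abstract, the sketch below applies the standard DDPM noising step, x_{k+1} = sqrt(1 - beta_k) x_k + sqrt(beta_k) eps_k with eps_k drawn from a standard normal, starting from a point in the target set. This is the generic DDPM update, not necessarily the forward process used in the paper, which is posed under control system constraints. The function name `forward_process` and the noise schedule `betas` are assumptions made for this example.

```python
import math
import random


def forward_process(x0, betas, rng):
    """Noise a starting state with the standard DDPM forward step.

    x0    : list of floats, a sample from the target set (assumption)
    betas : iterable of noise levels in [0, 1], one per step (assumption)
    rng   : a random.Random instance, passed in for reproducibility
    Returns the full trajectory, including the starting state.
    """
    trajectory = [list(x0)]
    for beta in betas:
        previous = trajectory[-1]
        # Shrink the state and add independent Gaussian noise to each coordinate.
        current = [
            math.sqrt(1.0 - beta) * xi + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for xi in previous
        ]
        trajectory.append(current)
    return trajectory


if __name__ == "__main__":
    # Ten steps with a constant, illustrative noise level.
    path = forward_process([1.0, -1.0], [0.1] * 10, random.Random(0))
    print(len(path), path[-1])
```

Read backwards, such a trajectory runs from a noisy state to the target set, which is the direction in which the abstract describes the controller being learned.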

Fusing Multiple Algorithms for Heterogeneous Online Learning

Dec 09, 2023

Abstract: This study addresses the challenge of online learning in contexts where agents accumulate disparate data, face resource constraints, and use different local algorithms. This paper introduces the Switched Online Learning Algorithm (SOLA), designed to solve the heterogeneous online learning problem by amalgamating updates from diverse agents through a dynamic switching mechanism contingent upon their respective performance and available resources. We theoretically analyze the design of the selection mechanism to ensure that the regret of SOLA is bounded. Our findings show that the number of changes in selection needs to be bounded by a parameter dependent on the performance of the different local algorithms. Additionally, two test cases are presented to emphasize the effectiveness of SOLA, first on an online linear regression problem and then on an online classification problem with the MNIST dataset.
