Samuel Budd

Whole-examination AI estimation of fetal biometrics from 20-week ultrasound scans

Jan 02, 2024

Abstract:The current approach to fetal anomaly screening is based on biometric measurements derived from individually selected ultrasound images. In this paper, we introduce a paradigm shift that attains human-level performance in biometric measurement by aggregating automatically extracted biometrics from every frame across an entire scan, with no need for operator intervention. We use a convolutional neural network to classify each frame of an ultrasound video recording. We then measure fetal biometrics in every frame where appropriate anatomy is visible. We use a Bayesian method to estimate the true value of each biometric from a large number of measurements and probabilistically reject outliers. We performed a retrospective experiment on 1457 recordings (comprising 48 million frames) of 20-week ultrasound scans, estimated fetal biometrics in those scans and compared our estimates to the measurements sonographers took during the scan. Our method achieves human-level performance in estimating fetal biometrics and estimates well-calibrated credible intervals in which the true biometric value is expected to lie.
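To illustrate the idea of aggregating many per-frame measurements while probabilistically down-weighting outliers, here is a minimal sketch. It is not the authors' method: it assumes a Gaussian inlier model with a known noise level `sigma`, a uniform outlier model over a plausible range `[lo, hi]`, and a fixed prior outlier probability. The function name `robust_estimate` and all parameters are hypothetical.

```python
import math

def robust_estimate(measurements, sigma, lo, hi, outlier_prior=0.1, iters=50):
    """Estimate a biometric from noisy per-frame measurements.

    Assumed model (illustrative only): each measurement is either an inlier
    drawn from N(mu, sigma^2) or an outlier drawn uniformly from [lo, hi].
    """
    uniform_density = 1.0 / (hi - lo)
    norm = sigma * math.sqrt(2.0 * math.pi)
    # Start from the (upper) median, which is robust to gross outliers.
    mu = sorted(measurements)[len(measurements) // 2]
    weights = [1.0] * len(measurements)
    for _ in range(iters):
        # Posterior probability that each measurement is an inlier.
        weights = []
        for x in measurements:
            inlier = (1.0 - outlier_prior) * math.exp(-0.5 * ((x - mu) / sigma) ** 2) / norm
            outlier = outlier_prior * uniform_density
            weights.append(inlier / (inlier + outlier))
        # Re-estimate the mean using the inlier probabilities as weights.
        mu = sum(w * x for w, x in zip(weights, measurements)) / sum(weights)
    # Approximate 95% interval, treating the summed weights as an effective sample size.
    half_width = 1.96 * sigma / math.sqrt(sum(weights))
    return mu, (mu - half_width, mu + half_width), weights

# Hypothetical head-circumference readings in mm, with two gross outliers.
frames = [175.0, 176.0, 174.5, 175.5, 300.0, 90.0]
estimate, interval, inlier_probs = robust_estimate(frames, sigma=1.0, lo=50.0, hi=400.0)
```

In this toy setup the two implausible readings receive inlier probabilities near zero, so the estimate is driven by the four consistent frames.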

Invariant Risk Minimisation for Cross-Organism Inference: Substituting Mouse Data for Human Data in Human Risk Factor Discovery

Nov 14, 2021

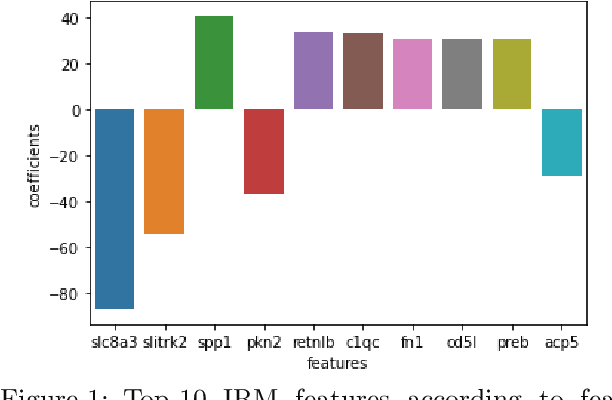

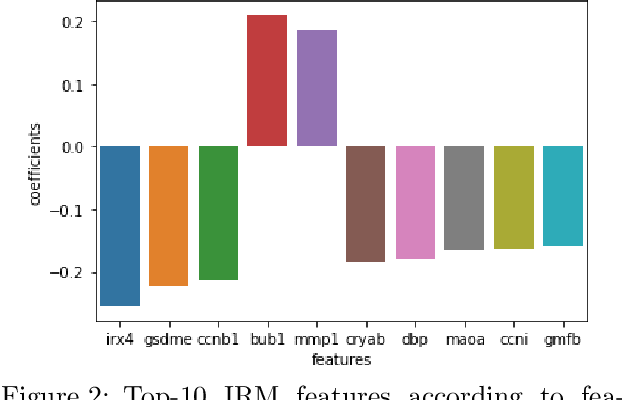

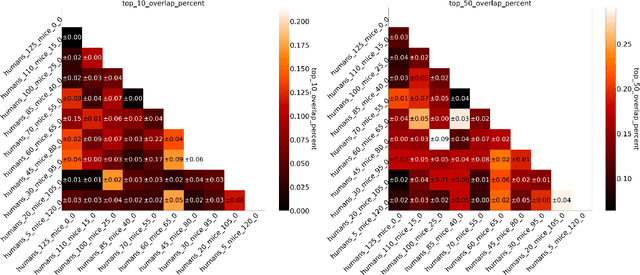

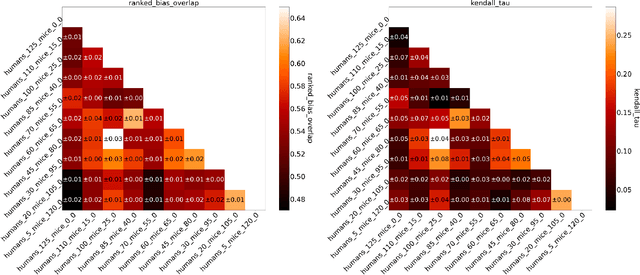

Abstract:Human medical data can be challenging to obtain due to data privacy concerns, difficulties conducting certain types of experiments, or prohibitive associated costs. In many settings, data from animal models or in-vitro cell lines are available to help augment our understanding of human data. However, these data are known to have low etiological validity in comparison to human data. In this work, we augment small human medical datasets with in-vitro data and animal models. We use Invariant Risk Minimisation (IRM) to elucidate invariant features by considering cross-organism data as belonging to different data-generating environments. Our models identify genes of relevance to human cancer development. We observe a degree of consistency as the amounts of human and mouse data used are varied; however, further work is required to obtain conclusive insights. As a secondary contribution, we enhance existing open source datasets and provide two uniformly processed, cross-organism, homologue gene-matched datasets to the community.

Can non-specialists provide high quality gold standard labels in challenging modalities?

Jul 30, 2021

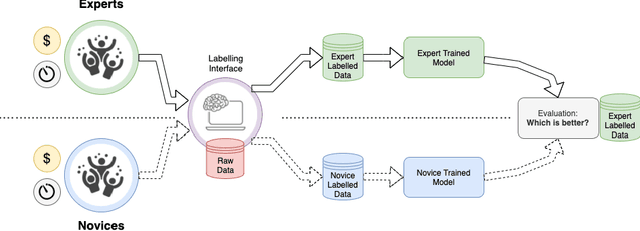

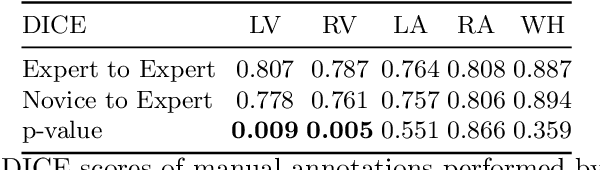

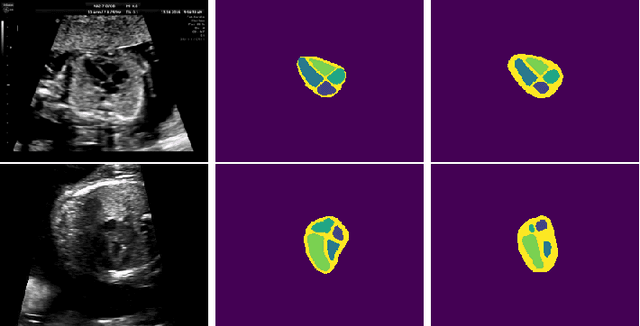

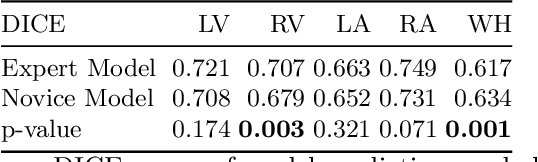

Abstract:Probably yes. -- Supervised Deep Learning dominates performance scores for many computer vision tasks and defines the state-of-the-art. However, medical image analysis lags behind natural image applications. One of the many reasons is the lack of well annotated medical image data available to researchers. One of the first things researchers are told is that we require significant expertise to reliably and accurately interpret and label such data. We see significant inter- and intra-observer variability between expert annotations of medical images. Still, it is a widely held assumption that novice annotators are unable to provide useful annotations for use by clinical Deep Learning models. In this work we challenge this assumption and examine the implications of using a minimally trained novice labelling workforce to acquire annotations for a complex medical image dataset. We study the time and cost implications of using novice annotators, the raw performance of novice annotators compared to gold-standard expert annotators, and the downstream effects on a trained Deep Learning segmentation model's performance for detecting a specific congenital heart disease (hypoplastic left heart syndrome) in fetal ultrasound imaging.

Detecting Hypo-plastic Left Heart Syndrome in Fetal Ultrasound via Disease-specific Atlas Maps

Jul 06, 2021

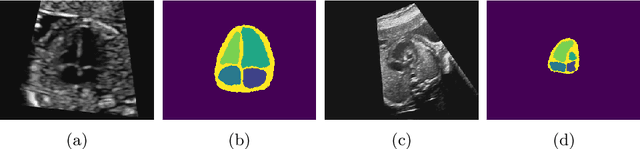

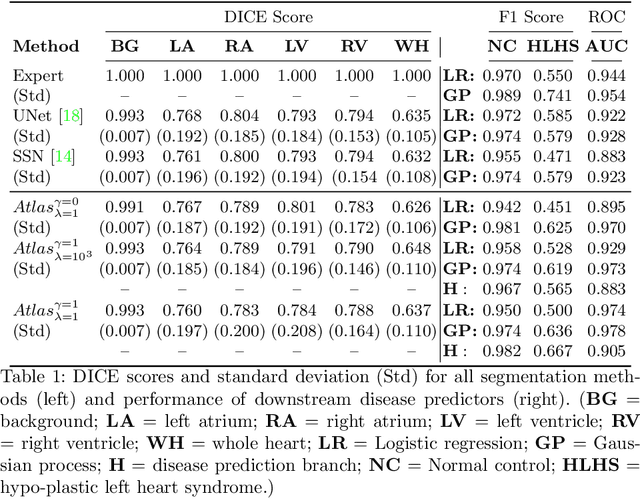

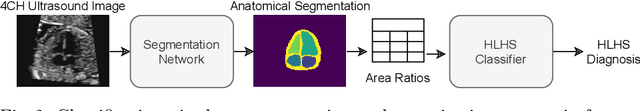

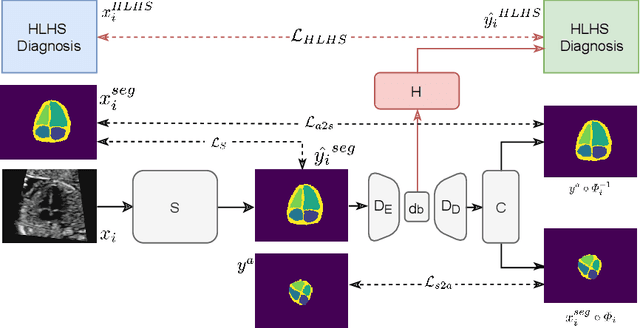

Abstract:Fetal ultrasound screening during pregnancy plays a vital role in the early detection of fetal malformations which have potential long-term health impacts. The level of skill required to diagnose such malformations from live ultrasound during examination is high and resources for screening are often limited. We present an interpretable, atlas-learning segmentation method for automatic diagnosis of Hypo-plastic Left Heart Syndrome (HLHS) from a single '4 Chamber Heart' view image. We propose to extend the recently introduced Image-and-Spatial Transformer Networks (Atlas-ISTN) into a framework that enables sensitising atlas generation to disease. In this framework we can jointly learn image segmentation, registration, atlas construction and disease prediction while providing a maximum level of clinical interpretability compared to direct image classification methods. As a result our segmentation allows diagnoses competitive with expert-derived manual diagnosis and yields an AUC-ROC of 0.978 (1043 cases for training, 260 for validation and 325 for testing).
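For readers unfamiliar with the AUC-ROC metric quoted in this abstract, the following sketch shows one standard way to compute it: as the fraction of (positive, negative) case pairs in which the positive case receives the higher score, with ties counted as half. The function name `auc_roc` and the scores are illustrative assumptions and have no connection to the paper's data.

```python
def auc_roc(positive_scores, negative_scores):
    """Pairwise (Mann-Whitney) estimate of the area under the ROC curve."""
    wins = 0.0
    for p in positive_scores:
        for n in negative_scores:
            if p > n:
                wins += 1.0   # positive case ranked above negative case
            elif p == n:
                wins += 0.5   # ties count as half a win
    return wins / (len(positive_scores) * len(negative_scores))

# Hypothetical classifier scores for diseased and healthy cases.
diseased = [0.9, 0.8]
healthy = [0.7, 0.85]
auc = auc_roc(diseased, healthy)
```

The quadratic loop is fine for a few hundred test cases; a rank-based formulation would be preferable for much larger sets.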

Surface Agnostic Metrics for Cortical Volume Segmentation and Regression

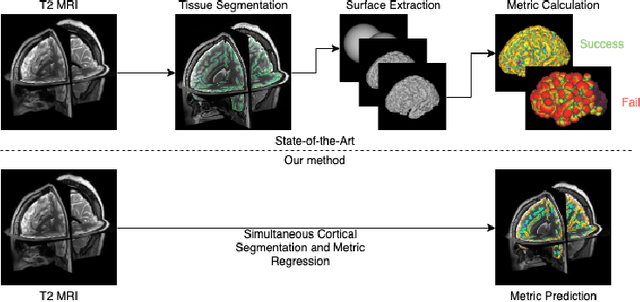

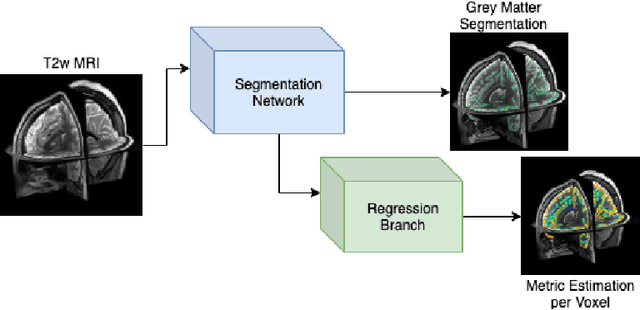

Oct 04, 2020

Abstract:The cerebral cortex performs higher-order brain functions and is thus implicated in a range of cognitive disorders. Current analysis of cortical variation is typically performed by fitting surface mesh models to inner and outer cortical boundaries and investigating metrics such as surface area and cortical curvature or thickness. These, however, take a long time to run, and are sensitive to motion and image and surface resolution, which can prohibit their use in clinical settings. In this paper, we instead propose a machine learning solution, training a novel architecture to predict cortical thickness and curvature metrics from T2 MRI images, while additionally returning metrics of prediction uncertainty. Our proposed model is tested on a clinical cohort (Down Syndrome) for which surface-based modelling often fails. Results suggest that deep convolutional neural networks are a viable option to predict cortical metrics across a range of brain development stages and pathologies.

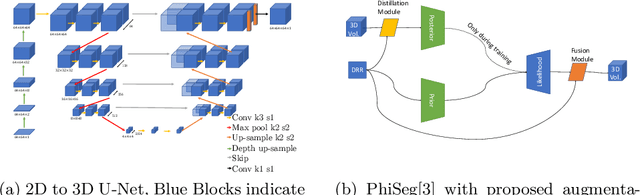

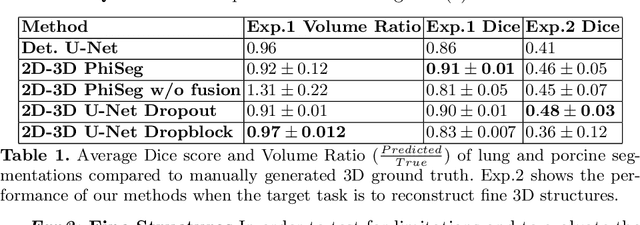

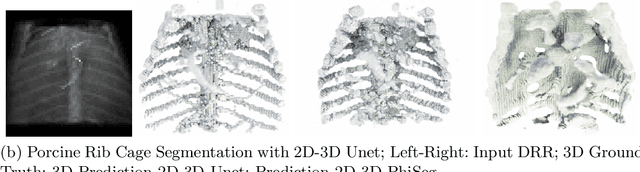

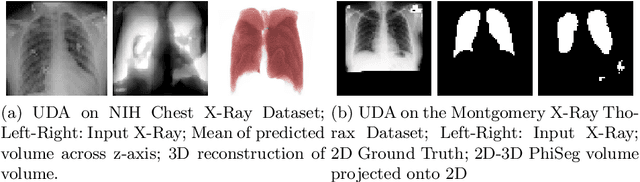

3D Probabilistic Segmentation and Volumetry from 2D projection images

Jun 23, 2020

Abstract:X-Ray imaging is quick, cheap and useful for front-line care assessment and intra-operative real-time imaging (e.g., C-Arm Fluoroscopy). However, it suffers from projective information loss and lacks vital volumetric information on which many essential diagnostic biomarkers are based. In this paper we explore probabilistic methods to reconstruct 3D volumetric images from 2D imaging modalities and measure the models' performance and confidence. We show our models' performance on large connected structures and we test for limitations regarding fine structures and image domain sensitivity. We utilize fast end-to-end training of 2D-3D convolutional networks and evaluate our method on 117 CT scans, segmenting 3D structures from digitally reconstructed radiographs (DRRs) with a Dice score of $0.91 \pm 0.0013$. Source code will be made available by the time of the conference.
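The Dice score used to report segmentation quality here measures the overlap between a predicted and a reference mask as twice the intersection divided by the sum of the two mask sizes. A minimal sketch on flattened binary masks follows; the function name `dice_score` and the toy masks are assumptions for illustration only.

```python
def dice_score(pred, target):
    """Dice overlap between two equal-length binary masks (values 0 or 1)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * intersection / total

# Toy flattened masks: one voxel overlaps, the prediction has one extra voxel.
predicted_mask = [1, 1, 0, 0]
reference_mask = [1, 0, 0, 0]
score = dice_score(predicted_mask, reference_mask)
```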

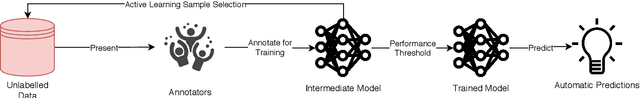

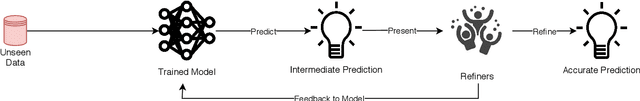

A Survey on Active Learning and Human-in-the-Loop Deep Learning for Medical Image Analysis

Oct 07, 2019

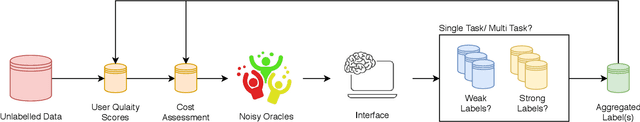

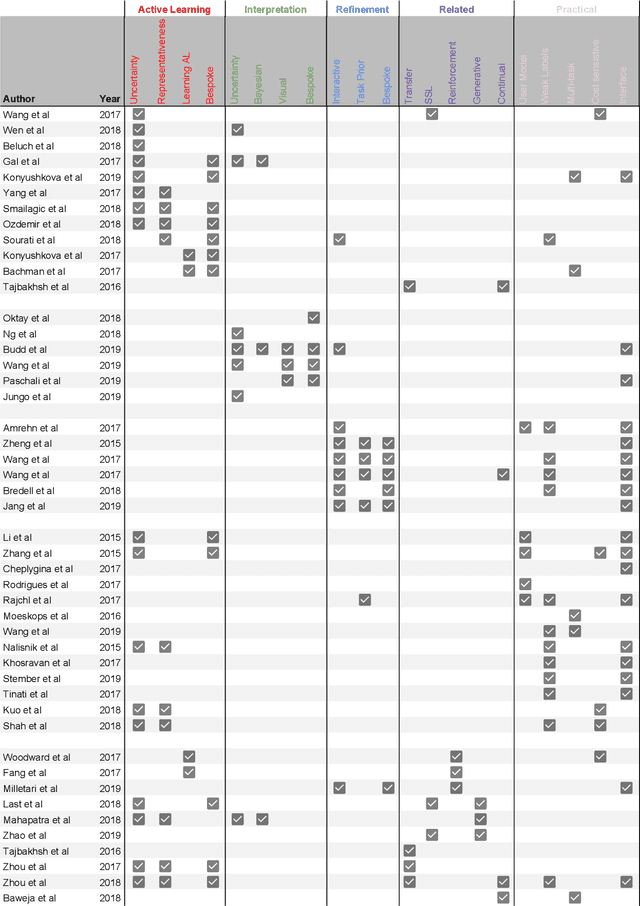

Abstract:Fully automatic deep learning has become the state-of-the-art technique for many tasks including image acquisition, analysis and interpretation, and for the extraction of clinically useful information for computer-aided detection, diagnosis, treatment planning, intervention and therapy. However, the unique challenges posed by medical image analysis suggest that retaining a human end-user in any deep learning enabled system will be beneficial. In this review we investigate the role that humans might play in the development and deployment of deep learning enabled diagnostic applications and focus on techniques that will retain a significant input from a human end user. Human-in-the-Loop computing is an area that we see as increasingly important in future research due to the safety-critical nature of working in the medical domain. We evaluate four key areas that we consider vital for deep learning in clinical practice: (1) Active Learning - to choose the best data to annotate for optimal model performance; (2) Interpretation and Refinement - using iterative feedback to steer models to optima for a given prediction and offering meaningful ways to interpret and respond to predictions; (3) Practical considerations - developing full scale applications and the key considerations that need to be made before deployment; (4) Related Areas - research fields that will benefit human-in-the-loop computing as they evolve. We offer our opinions on the most promising directions of research and how various aspects of each area might be unified towards common goals.
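As one concrete instance of the Active Learning area named in this abstract, the sketch below shows entropy-based uncertainty sampling: the samples whose predicted class distribution is most uncertain are sent for annotation first. This is a common baseline rather than a technique specifically attributed to the survey, and the names `entropy` and `select_for_annotation` are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` most uncertain unlabelled samples.

    `predictions` is a list of (sample_id, class_probabilities) pairs.
    """
    ranked = sorted(predictions, key=lambda item: entropy(item[1]), reverse=True)
    return [sample_id for sample_id, _ in ranked[:budget]]

# Hypothetical model outputs on three unlabelled images.
unlabelled = [
    ("scan_a", [0.99, 0.01]),
    ("scan_b", [0.50, 0.50]),
    ("scan_c", [0.80, 0.20]),
]
to_label = select_for_annotation(unlabelled, budget=2)
```

Pure uncertainty sampling can repeatedly pick near-duplicate samples, so it is often combined with a diversity criterion in practice.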

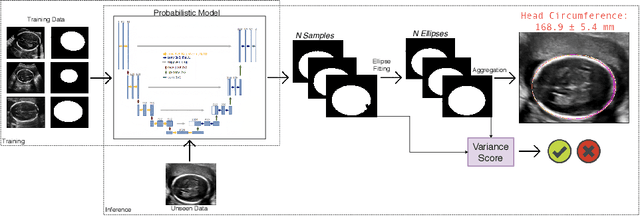

Confident Head Circumference Measurement from Ultrasound with Real-time Feedback for Sonographers

Aug 07, 2019

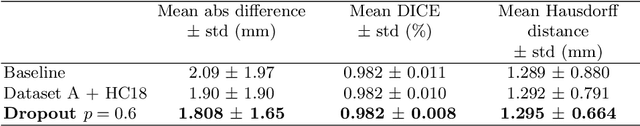

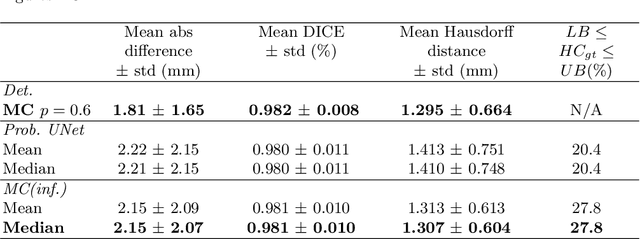

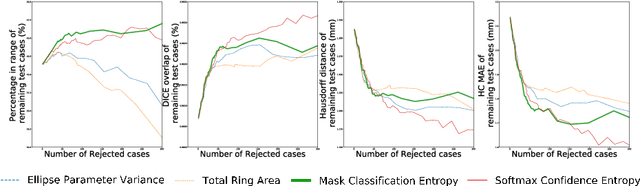

Abstract:Manual estimation of fetal Head Circumference (HC) from Ultrasound (US) is a key biometric for monitoring the healthy development of fetuses. Unfortunately, such measurements are subject to large inter-observer variability, resulting in low early-detection rates of fetal abnormalities. To address this issue, we propose a novel probabilistic Deep Learning approach for real-time automated estimation of fetal HC. This system feeds back statistics on measurement robustness to inform users how confident a deep neural network is in evaluating suitable views acquired during free-hand ultrasound examination. In real-time scenarios, this approach may be exploited to guide operators to scan planes that are as close as possible to the underlying distribution of training images, for the purpose of improving inter-operator consistency. We train on free-hand ultrasound data from over 2000 subjects (2848 training/540 test) and show that our method is able to predict HC measurements within 1.81$\pm$1.65mm deviation from the ground truth, with 50% of the test images fully contained within the predicted confidence margins, and an average of 1.82$\pm$1.78mm deviation from the margin for the remaining cases that are not fully contained.
