Emma C Robinson

Surface Masked AutoEncoder: Self-Supervision for Cortical Imaging Data

Aug 10, 2023

Abstract: Self-supervision has been widely explored as a means of addressing the lack of inductive biases in vision transformer architectures, which limits generalisation when networks are trained on small datasets. This is crucial in the context of cortical imaging, where phenotypes are complex and heterogeneous, but the available datasets are limited in size. This paper builds upon recent advancements in translating vision transformers to surface meshes and investigates the potential of Masked AutoEncoder (MAE) self-supervision for cortical surface learning. By reconstructing surface data from a masked version of the input, the proposed method effectively models cortical structure to learn strong representations that translate to improved performance in downstream tasks. We evaluate our approach on cortical phenotype regression using the developing Human Connectome Project (dHCP) and demonstrate that pre-training leads to a 26% improvement in performance, with 80% faster convergence, compared to models trained from scratch. Furthermore, we establish that pre-training vision transformer models on large datasets, such as the UK Biobank (UKB), enables the acquisition of robust representations for finetuning in low-data scenarios. Our code and pre-trained models are publicly available at https://github.com/metrics-lab/surface-vision-transformers.
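The masking-and-reconstruction idea in the abstract above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the patch count, the feature size, the 0.75 mask ratio, and the all-zeros stand-in for a decoder output are all assumptions made for illustration. It only shows how a random subset of surface patches might be hidden and how a reconstruction loss could be restricted to the hidden patches.

```python
import numpy as np

def random_patch_mask(num_patches, mask_ratio, rng):
    """Randomly split patch indices into visible and masked sets (hypothetical helper)."""
    num_masked = int(round(num_patches * mask_ratio))
    perm = rng.permutation(num_patches)
    masked_idx = np.sort(perm[:num_masked])
    visible_idx = np.sort(perm[num_masked:])
    return visible_idx, masked_idx

def masked_mse(target, prediction, masked_idx):
    """Mean squared error computed over the masked patches only."""
    diff = prediction[masked_idx] - target[masked_idx]
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
# Assumed toy data: 320 surface patches with 64 features each.
patches = rng.normal(size=(320, 64))
visible_idx, masked_idx = random_patch_mask(len(patches), mask_ratio=0.75, rng=rng)

# An encoder would see only patches[visible_idx]; a decoder would then predict
# every patch. Here an all-zeros array stands in for the decoder output.
prediction = np.zeros_like(patches)
loss = masked_mse(patches, prediction, masked_idx)
```

In a real pipeline the zeros would be replaced by the output of a trained encoder-decoder, and the loss would drive pre-training before finetuning on a downstream task.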

A Survey on Active Learning and Human-in-the-Loop Deep Learning for Medical Image Analysis

Oct 07, 2019

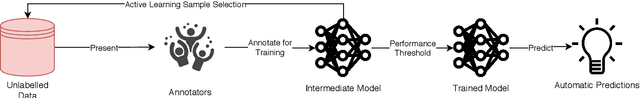

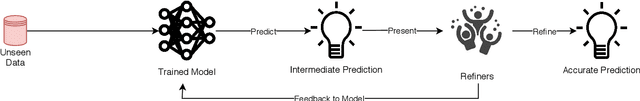

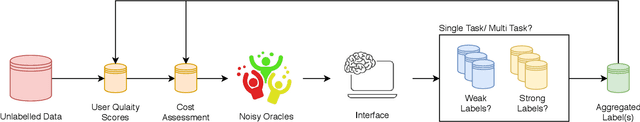

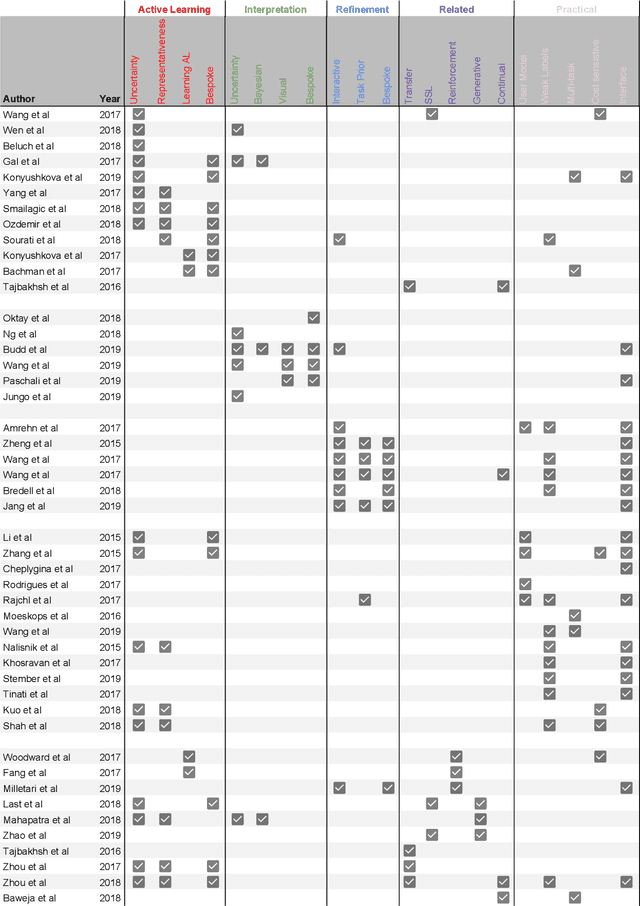

Abstract: Fully automatic deep learning has become the state-of-the-art technique for many tasks including image acquisition, analysis and interpretation, and for the extraction of clinically useful information for computer-aided detection, diagnosis, treatment planning, intervention and therapy. However, the unique challenges posed by medical image analysis suggest that retaining a human end user in any deep learning enabled system will be beneficial. In this review we investigate the role that humans might play in the development and deployment of deep learning enabled diagnostic applications and focus on techniques that will retain a significant input from a human end user. Human-in-the-Loop computing is an area that we see as increasingly important in future research due to the safety-critical nature of working in the medical domain. We evaluate four key areas that we consider vital for deep learning in clinical practice: (1) Active Learning - to choose the best data to annotate for optimal model performance; (2) Interpretation and Refinement - using iterative feedback to steer models to optima for a given prediction and offering meaningful ways to interpret and respond to predictions; (3) Practical considerations - developing full scale applications and the key considerations that need to be made before deployment; (4) Related Areas - research fields that will benefit human-in-the-loop computing as they evolve. We offer our opinions on the most promising directions of research and how various aspects of each area might be unified towards common goals.
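As an illustration of area (1), the sketch below shows entropy-based uncertainty sampling, one common active learning strategy. It is not presented as the method recommended by the review: the function names, the toy probabilities, and the annotation budget are assumptions chosen for the example.

```python
import numpy as np

def predictive_entropy(probs):
    """Shannon entropy of each row of class probabilities."""
    eps = 1e-12  # avoids log(0)
    return -np.sum(probs * np.log(probs + eps), axis=1)

def select_for_annotation(probs, budget):
    """Return indices of the `budget` most uncertain samples (hypothetical helper)."""
    entropy = predictive_entropy(probs)
    return np.argsort(-entropy)[:budget]

# Assumed toy model outputs for four unlabelled images, two classes each.
probs = np.array([
    [0.98, 0.02],
    [0.55, 0.45],
    [0.80, 0.20],
    [0.50, 0.50],
])
chosen = select_for_annotation(probs, budget=2)
```

Here the two samples whose predictions are closest to uniform are the ones sent to a human annotator; other acquisition functions could be swapped in without changing the surrounding loop.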
