Sai-Kit Yeung

360DVO: Deep Visual Odometry for Monocular 360-Degree Camera

Jan 05, 2026

Abstract:Monocular omnidirectional visual odometry (OVO) systems leverage 360-degree cameras to overcome field-of-view limitations of perspective VO systems. However, existing methods, reliant on handcrafted features or photometric objectives, often lack robustness in challenging scenarios, such as aggressive motion and varying illumination. To address this, we present 360DVO, the first deep learning-based OVO framework. Our approach introduces a distortion-aware spherical feature extractor (DAS-Feat) that adaptively learns distortion-resistant features from 360-degree images. These sparse feature patches are then used to establish constraints for effective pose estimation within a novel omnidirectional differentiable bundle adjustment (ODBA) module. To facilitate evaluation in realistic settings, we also contribute a new real-world OVO benchmark. Extensive experiments on this benchmark and public synthetic datasets (TartanAir V2 and 360VO) demonstrate that 360DVO surpasses state-of-the-art baselines (including 360VO and OpenVSLAM), improving robustness by 50% and accuracy by 37.5%. Homepage: https://chris1004336379.github.io/360DVO-homepage

MarineEval: Assessing the Marine Intelligence of Vision-Language Models

Dec 24, 2025

Abstract:We have witnessed promising progress led by large language models (LLMs) and, further, vision-language models (VLMs) in handling various queries as general-purpose assistants. VLMs, as a bridge connecting the visual world and language corpora, receive both visual content and various text-only user instructions to generate corresponding responses. Though VLMs have achieved great success in various fields, in this work we ask whether existing VLMs can act as domain experts, accurately answering marine questions, which require significant domain expertise and address special domain challenges and requirements. To comprehensively evaluate the effectiveness and explore the boundary of existing VLMs, we construct the first large-scale marine VLM dataset and benchmark, called MarineEval, with 2,000 image-based question-answering pairs. During dataset construction, we ensure the diversity and coverage of the constructed data: 7 task dimensions and 20 capacity dimensions. The domain requirements are specially integrated into the data construction and further verified by the corresponding marine domain experts. We comprehensively benchmark 17 existing VLMs on MarineEval and also investigate the limitations of existing models in answering marine research questions. The experimental results reveal that existing VLMs cannot effectively answer the domain-specific questions, and there is still substantial room for further performance improvements. We hope our new benchmark and observations will facilitate future research. Project Page: http://marineeval.hkustvgd.com/

ORCA: Object Recognition and Comprehension for Archiving Marine Species

Dec 24, 2025

Abstract:Marine visual understanding is essential for monitoring and protecting marine ecosystems, enabling automatic and scalable biological surveys. However, progress is hindered by limited training data and the lack of a systematic task formulation that aligns domain-specific marine challenges with well-defined computer vision tasks, thereby limiting effective model application. To address this gap, we present ORCA, a multi-modal benchmark for marine research comprising 14,647 images from 478 species, with 42,217 bounding box annotations and 22,321 expert-verified instance captions. The dataset provides fine-grained visual and textual annotations that capture morphology-oriented attributes across diverse marine species. To catalyze methodological advances, we evaluate 18 state-of-the-art models on three tasks: object detection (closed-set and open-vocabulary), instance captioning, and visual grounding. Results highlight key challenges, including species diversity, morphological overlap, and specialized domain demands, underscoring the difficulty of marine understanding. ORCA thus establishes a comprehensive benchmark to advance research in the marine domain. Project Page: http://orca.hkustvgd.com/

TrackingWorld: World-centric Monocular 3D Tracking of Almost All Pixels

Dec 09, 2025

Abstract:Monocular 3D tracking aims to capture the long-term motion of pixels in 3D space from a single monocular video and has witnessed rapid progress in recent years. However, we argue that the existing monocular 3D tracking methods still fall short in separating the camera motion from foreground dynamic motion and cannot densely track newly emerging dynamic subjects in the videos. To address these two limitations, we propose TrackingWorld, a novel pipeline for dense 3D tracking of almost all pixels within a world-centric 3D coordinate system. First, we introduce a tracking upsampler that efficiently lifts the arbitrary sparse 2D tracks into dense 2D tracks. Then, to generalize the current tracking methods to newly emerging objects, we apply the upsampler to all frames and reduce the redundancy of 2D tracks by eliminating the tracks in overlapped regions. Finally, we present an efficient optimization-based framework to back-project dense 2D tracks into world-centric 3D trajectories by estimating the camera poses and the 3D coordinates of these 2D tracks. Extensive evaluations on both synthetic and real-world datasets demonstrate that our system achieves accurate and dense 3D tracking in a world-centric coordinate frame.

MSC: A Marine Wildlife Video Dataset with Grounded Segmentation and Clip-Level Captioning

Aug 06, 2025

Abstract:Marine videos present significant challenges for video understanding due to the dynamics of marine objects and the surrounding environment, camera motion, and the complexity of underwater scenes. Existing video captioning datasets, typically focused on generic or human-centric domains, often fail to generalize to the complexities of the marine environment or to yield insights about marine life. To address these limitations, we propose a two-stage marine object-oriented video captioning pipeline. We introduce a comprehensive video understanding benchmark that leverages triplets of video, text, and segmentation masks to facilitate visual grounding and captioning, leading to improved marine video understanding and analysis, and marine video generation. Additionally, we highlight the effectiveness of video splitting for detecting salient object transitions in scene changes, which significantly enriches the semantics of the captioning content. Our dataset and code have been released at https://msc.hkustvgd.com.

AUTV: Creating Underwater Video Datasets with Pixel-wise Annotations

Mar 17, 2025

Abstract:Underwater video analysis, hampered by the dynamic marine environment and camera motion, remains a challenging task in computer vision. Existing training-free video generation techniques, learning motion dynamics on a frame-by-frame basis, often produce poor results with noticeable motion interruptions and misalignments. To address these issues, we propose AUTV, a framework for synthesizing marine video data with pixel-wise annotations. We demonstrate the effectiveness of this framework by constructing two video datasets, namely UTV, a real-world dataset comprising 2,000 video-text pairs, and SUTV, a synthetic video dataset including 10,000 videos with segmentation masks for marine objects. UTV provides diverse underwater videos with comprehensive annotations including appearance, texture, camera intrinsics, lighting, and animal behavior. SUTV can be used to improve underwater downstream tasks, as demonstrated in video inpainting and video object segmentation.

Color Alignment in Diffusion

Mar 09, 2025

Abstract:Diffusion models have shown great promise in synthesizing visually appealing images. However, it remains challenging to condition the synthesis at a fine-grained level, for instance, synthesizing image pixels following some generic color pattern. Existing image synthesis methods often produce contents that fall outside the desired pixel conditions. To address this, we introduce a novel color alignment algorithm that confines the generative process in diffusion models within a given color pattern. Specifically, we project diffusion terms, either imagery samples or latent representations, into a conditional color space to align with the input color distribution. This strategy simplifies the prediction in diffusion models within a color manifold while still allowing plausible structures in generated contents, thus enabling the generation of diverse contents that comply with the target color pattern. Experimental results demonstrate our state-of-the-art performance in conditioning and controlling of color pixels, while maintaining on-par generation quality and diversity in comparison with regular diffusion models.

SC-OmniGS: Self-Calibrating Omnidirectional Gaussian Splatting

Feb 07, 2025

Abstract:360-degree cameras streamline data collection for radiance field 3D reconstruction by capturing comprehensive scene data. However, traditional radiance field methods do not address the specific challenges inherent to 360-degree images. We present SC-OmniGS, a novel self-calibrating omnidirectional Gaussian splatting system for fast and accurate omnidirectional radiance field reconstruction using 360-degree images. Rather than converting 360-degree images to cube maps and performing perspective image calibration, we treat 360-degree images as a whole sphere and derive a mathematical framework that enables direct omnidirectional camera pose calibration accompanied by 3D Gaussians optimization. Furthermore, we introduce a differentiable omnidirectional camera model in order to rectify the distortion of real-world data for performance enhancement. Overall, the omnidirectional camera intrinsic model, extrinsic poses, and 3D Gaussians are jointly optimized by minimizing weighted spherical photometric loss. Extensive experiments have demonstrated that our proposed SC-OmniGS is able to recover a high-quality radiance field from noisy camera poses or even no pose prior in challenging scenarios characterized by wide baselines and non-object-centric configurations. The noticeable performance gain in the real-world dataset captured by consumer-grade omnidirectional cameras verifies the effectiveness of our general omnidirectional camera model in reducing the distortion of 360-degree images.

Align3R: Aligned Monocular Depth Estimation for Dynamic Videos

Dec 05, 2024

Abstract:Recent developments in monocular depth estimation methods enable high-quality depth estimation of single-view images but fail to estimate consistent video depth across different frames. Recent works address this problem by applying a video diffusion model to generate video depth conditioned on the input video, which is expensive to train and can only produce scale-invariant depth values without camera poses. In this paper, we propose a novel video-depth estimation method called Align3R to estimate temporally consistent depth maps for a dynamic video. Our key idea is to utilize the recent DUSt3R model to align estimated monocular depth maps of different timesteps. First, we fine-tune the DUSt3R model with additional estimated monocular depth as inputs for the dynamic scenes. Then, we apply optimization to reconstruct both depth maps and camera poses. Extensive experiments demonstrate that Align3R estimates consistent video depth and camera poses for a monocular video with performance superior to that of baseline methods.

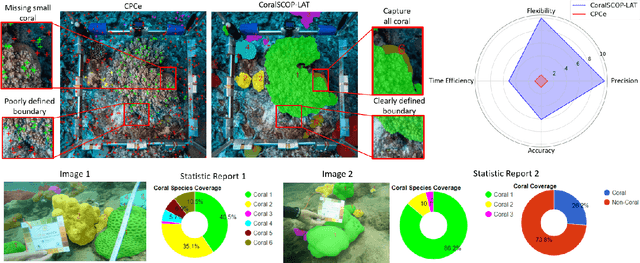

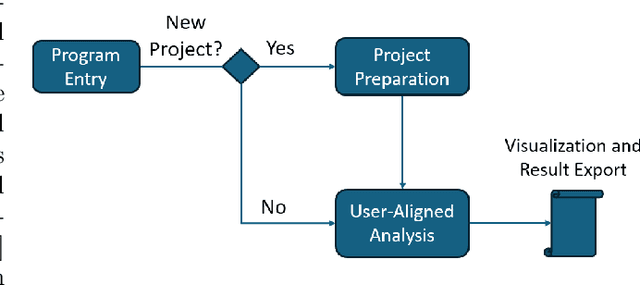

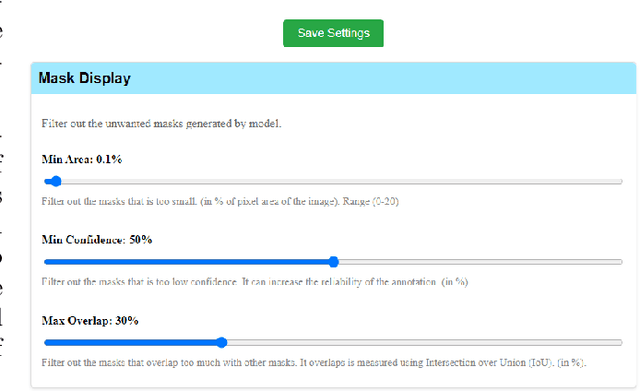

CoralSCOP-LAT: Labeling and Analyzing Tool for Coral Reef Images with Dense Mask

Oct 27, 2024

Abstract:Images of coral reefs provide invaluable information, which is critical for surveying and monitoring coral reef ecosystems. Robust and precise identification of coral reef regions within surveying imagery is paramount for assessing coral coverage, spatial distribution, and other statistical analyses. However, existing coral reef analytical approaches mainly focus on sparse points sampled from the whole imagery, which are highly subject to the sampling density and cannot accurately express the coral abundance. Meanwhile, the analysis is both time-consuming and labor-intensive, and it is also limited to coral biologists. In this work, we propose CoralSCOP-LAT, an automatic and semi-automatic coral reef labeling and analysis tool, specially designed to segment coral reef regions (dense pixel masks) in coral reef images, significantly promoting analysis proficiency and accuracy. CoralSCOP-LAT leverages the advanced coral reef foundation model to accurately delineate coral regions, supporting dense coral reef analysis and reducing the dependency on manual annotation. The proposed CoralSCOP-LAT surpasses the existing tools by a large margin in analysis efficiency, accuracy, and flexibility. We perform comprehensive evaluations from various perspectives, and the comparison demonstrates that CoralSCOP-LAT not only accelerates coral reef analysis but also improves accuracy in coral segmentation and analysis. Our CoralSCOP-LAT, as the first dense coral reef analysis tool on the market, facilitates repeated large-scale coral reef monitoring analysis, contributing to more informed conservation efforts and sustainable management of coral reef ecosystems. Our tool will be available at https://coralscop.hkustvgd.com/.
