Ruchao Fan

RLBR: Reinforcement Learning with Biasing Rewards for Contextual Speech Large Language Models

Jan 19, 2026

Abstract:Speech large language models (LLMs) have driven significant progress in end-to-end speech understanding and recognition, yet they continue to struggle with accurately recognizing rare words and domain-specific terminology. This paper presents a novel fine-tuning method, Reinforcement Learning with Biasing Rewards (RLBR), which employs a specialized biasing words preferred reward to explicitly emphasize biasing words in the reward calculation. In addition, we introduce reference-aware mechanisms that extend the reinforcement learning algorithm with reference transcription to strengthen the potential trajectory exploration space. Experiments on the LibriSpeech corpus across various biasing list sizes demonstrate that RLBR delivers substantial performance improvements over a strong supervised fine-tuning (SFT) baseline and consistently outperforms several recently published methods. The proposed approach achieves excellent performance on the LibriSpeech test-clean and test-other sets, reaching Biasing Word Error Rates (BWERs) of 0.59% / 2.11%, 1.09% / 3.24%, and 1.36% / 4.04% for biasing list sizes of 100, 500, and 1000, respectively, without compromising the overall WERs.
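The abstract does not spell out the reward formula, so the following is only a minimal sketch of one way a reward could emphasize biasing words: a negative word-level edit cost in which any error touching a biasing word is weighted more heavily. The function names `align` and `biasing_reward`, the `bias_weight` parameter, and the weighting rule itself are illustrative assumptions, not the RLBR reward.

```python
from typing import List, Optional, Set, Tuple


def align(ref: List[str], hyp: List[str]) -> List[Tuple[Optional[str], Optional[str]]]:
    """Levenshtein-align two word lists; None marks an insertion or deletion gap."""
    n, m = len(ref), len(hyp)
    # dist[i][j] = edit distance between ref[:i] and hyp[:j]
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dist[i][0] = i
    for j in range(1, m + 1):
        dist[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = dist[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            dist[i][j] = min(sub, dist[i - 1][j] + 1, dist[i][j - 1] + 1)

    # Backtrace from the bottom-right corner to recover aligned word pairs.
    pairs: List[Tuple[Optional[str], Optional[str]]] = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and dist[i][j] == dist[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1]):
            pairs.append((ref[i - 1], hyp[j - 1]))  # match or substitution
            i, j = i - 1, j - 1
        elif i > 0 and dist[i][j] == dist[i - 1][j] + 1:
            pairs.append((ref[i - 1], None))  # deletion
            i -= 1
        else:
            pairs.append((None, hyp[j - 1]))  # insertion
            j -= 1
    pairs.reverse()
    return pairs


def biasing_reward(ref: str, hyp: str, biasing: Set[str], bias_weight: float = 2.0) -> float:
    """Hypothetical reward: negative edit cost, with errors on biasing words up-weighted."""
    ref_words, hyp_words = ref.split(), hyp.split()
    cost = 0.0
    for r, h in align(ref_words, hyp_words):
        if r == h:
            continue
        # Assumption: an error counts as a biasing error if either side is a biasing word.
        touches_bias = (r in biasing) or (h in biasing)
        cost += bias_weight if touches_bias else 1.0
    return 0.0 - cost / max(len(ref_words), 1)
```

Under this sketch, a hypothesis that misrecognizes a biasing word receives a lower reward than one that makes the same number of errors on ordinary words, which is the qualitative behavior the abstract describes.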

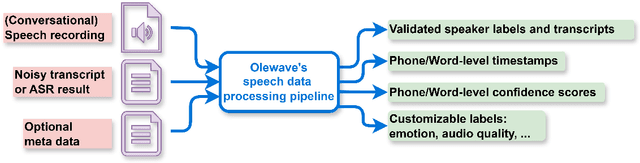

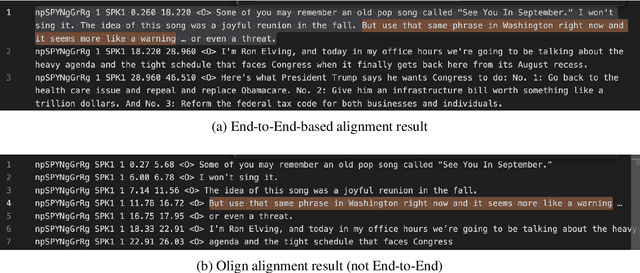

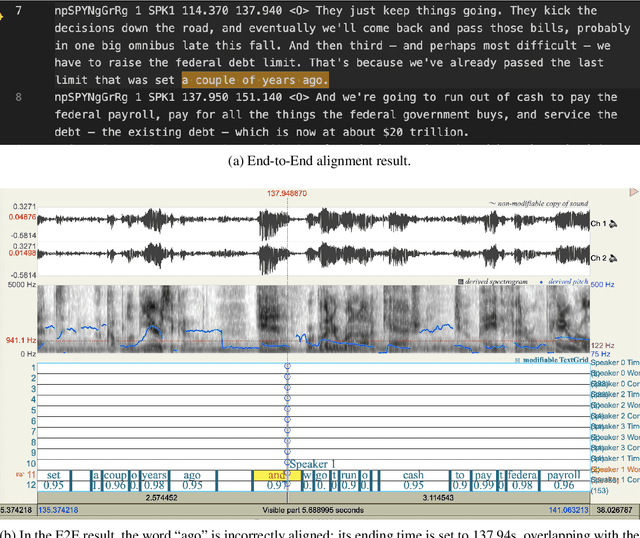

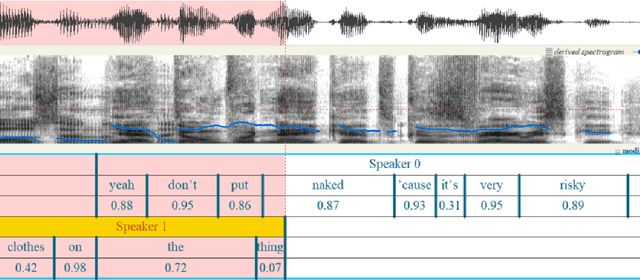

OleSpeech-IV: A Large-Scale Multispeaker and Multilingual Conversational Speech Dataset with Diverse Topics

Sep 04, 2025

Abstract:The OleSpeech-IV dataset is a large-scale multispeaker and multilingual conversational speech dataset with diverse topics. The audio content comes from publicly available English podcasts, talk shows, teleconferences, and other conversations. Speaker names, turns, and transcripts are human-sourced and refined by a proprietary pipeline, while additional information such as timestamps and confidence scores is derived from the pipeline. The IV denotes its position as Tier IV in the Olewave dataset series. In addition, we have open-sourced a subset, OleSpeech-IV-2025-EN-AR-100, for non-commercial research use.

Phi-Omni-ST: A multimodal language model for direct speech-to-speech translation

Jun 04, 2025

Abstract:Speech-aware language models (LMs) have demonstrated capabilities in understanding spoken language while generating text-based responses. However, enabling them to produce speech output efficiently and effectively remains a challenge. In this paper, we present Phi-Omni-ST, a multimodal LM for direct speech-to-speech translation (ST), built on the open-source Phi-4 MM model. Phi-Omni-ST extends its predecessor by generating translated speech using an audio transformer head that predicts audio tokens with a delay relative to text tokens, followed by a streaming vocoder for waveform synthesis. Our experimental results on the CVSS-C dataset demonstrate Phi-Omni-ST's superior performance, significantly surpassing existing baseline models trained on the same dataset. Furthermore, when we scale up the training data and the model size, Phi-Omni-ST reaches on-par performance with the current SOTA model.

Towards Efficient Speech-Text Jointly Decoding within One Speech Language Model

Jun 04, 2025

Abstract:Speech language models (Speech LMs) enable end-to-end speech-text modelling within a single model, offering a promising direction for spoken dialogue systems. The choice of joint speech-text decoding paradigm plays a critical role in performance, efficiency, and alignment quality. In this work, we systematically compare representative joint speech-text decoding strategies, including the interleaved and parallel generation paradigms, under a controlled experimental setup using the same base language model, speech tokenizer, and training data. Our results show that the interleaved approach achieves the best alignment. However, it suffers from slow inference due to its long token sequences. To address this, we propose a novel early-stop interleaved (ESI) pattern that not only significantly accelerates decoding but also yields slightly better performance. Additionally, we curate high-quality question answering (QA) datasets to further improve speech QA performance.

Phi-4-Mini Technical Report: Compact yet Powerful Multimodal Language Models via Mixture-of-LoRAs

Mar 03, 2025

Abstract:We introduce Phi-4-Mini and Phi-4-Multimodal, compact yet highly capable language and multimodal models. Phi-4-Mini is a 3.8-billion-parameter language model trained on high-quality web and synthetic data, significantly outperforming recent open-source models of similar size and matching the performance of models twice its size on math and coding tasks requiring complex reasoning. This achievement is driven by a carefully curated synthetic data recipe emphasizing high-quality math and coding datasets. Compared to its predecessor, Phi-3.5-Mini, Phi-4-Mini features an expanded vocabulary size of 200K tokens to better support multilingual applications, as well as group query attention for more efficient long-sequence generation. Phi-4-Multimodal is a multimodal model that integrates text, vision, and speech/audio input modalities into a single model. Its novel modality extension approach leverages LoRA adapters and modality-specific routers to allow multiple inference modes combining various modalities without interference. For example, it now ranks first on the OpenASR leaderboard to date, although the LoRA component of the speech/audio modality has just 460 million parameters. Phi-4-Multimodal supports scenarios involving (vision + language), (vision + speech), and (speech/audio) inputs, outperforming larger vision-language and speech-language models on a wide range of tasks. Additionally, we experiment with further training Phi-4-Mini to enhance its reasoning capabilities. Despite its compact 3.8-billion-parameter size, this experimental version achieves reasoning performance on par with or surpassing significantly larger models, including DeepSeek-R1-Distill-Qwen-7B and DeepSeek-R1-Distill-Llama-8B.

AlignFormer: Modality Matching Can Achieve Better Zero-shot Instruction-Following Speech-LLM

Dec 02, 2024

Abstract:Integrating speech into LLMs (speech-LLM) has gained increasing attention recently. The mainstream solution is to connect a well-trained speech encoder and LLM with a neural adapter. However, the length mismatch between the speech and text sequences is not well handled, leading to imperfect modality matching between the speech and text. In this work, we propose a novel neural adapter, AlignFormer, to reduce the length gap between the two modalities. AlignFormer consists of CTC and dynamic-window QFormer layers, where the CTC alignment provides the dynamic window information for the QFormer layers. The LLM backbone is frozen in training to preserve its text capability, especially the instruction following capability. When training with only the ASR data, the proposed AlignFormer unlocks the instruction following capability for speech-LLM and the model can perform zero-shot speech translation (ST) and speech question answering (SQA) tasks. In fact, speech-LLM with AlignFormer can theoretically perform the speech version of any task that the LLM backbone can handle. To evaluate the effectiveness of the instruction-following speech-LLM, we propose to use instruction following rate (IFR) and offer a systematic perspective for the IFR evaluation. In addition, we find that the audio position in training affects the instruction following capability of speech-LLM and conduct an in-depth study on it. Our findings show that audio-first training achieves higher IFR than instruction-first training. AlignFormer can achieve a near 100% IFR with audio-first training and game-changing improvements from zero to non-zero IFR on some evaluation data with instruction-first training. We believe that this study is a big step towards perfect speech and text modality matching in the LLM embedding space.

CTC-GMM: CTC guided modality matching for fast and accurate streaming speech translation

Oct 07, 2024

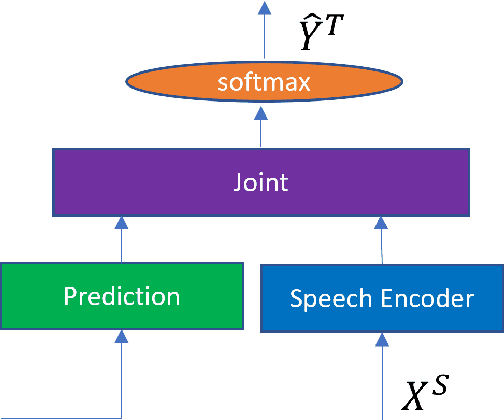

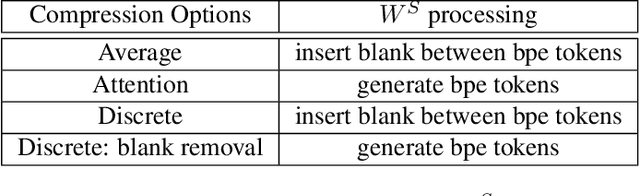

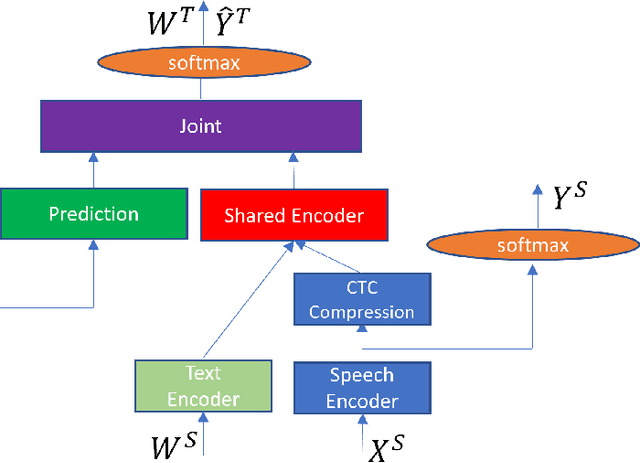

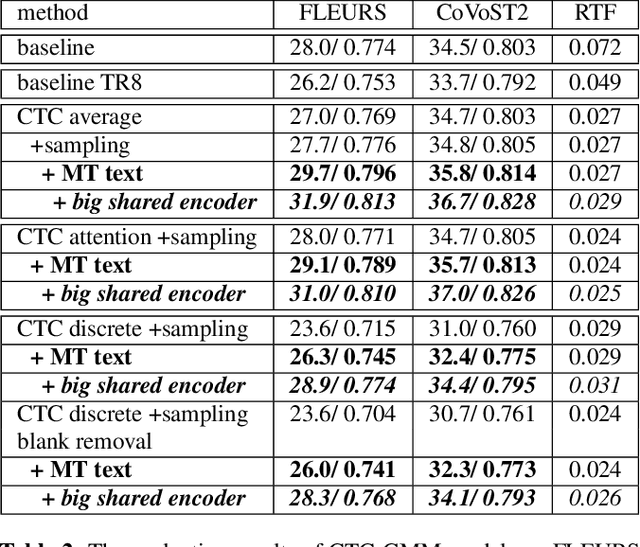

Abstract:Models for streaming speech translation (ST) can achieve high accuracy and low latency if they're developed with vast amounts of paired audio in the source language and written text in the target language. Yet, these text labels for the target language are often pseudo labels due to the prohibitive cost of manual ST data labeling. In this paper, we introduce a methodology named Connectionist Temporal Classification guided modality matching (CTC-GMM) that enhances the streaming ST model by leveraging extensive machine translation (MT) text data. This technique employs CTC to compress the speech sequence into a compact embedding sequence that matches the corresponding text sequence, allowing us to utilize matched {source-target} language text pairs from the MT corpora to refine the streaming ST model further. Our evaluations with FLEURS and CoVoST2 show that the CTC-GMM approach can increase translation accuracy relatively by 13.9% and 6.4% respectively, while also boosting decoding speed by 59.7% on GPU.
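As a rough illustration of how CTC can compress a frame-level speech sequence to roughly text length, the sketch below averages consecutive frames that share the same non-blank CTC label and drops blank frames. The abstract does not say how CTC-GMM pools frames, so the averaging rule, the function name `ctc_compress`, and the use of blank index 0 are assumptions made for illustration.

```python
from typing import List


def ctc_compress(
    frames: List[List[float]], labels: List[int], blank: int = 0
) -> List[List[float]]:
    """Collapse frame embeddings to one vector per run of identical non-blank labels.

    frames: per-frame embedding vectors (all the same dimension).
    labels: per-frame CTC argmax labels, same length as frames.
    """
    compressed: List[List[float]] = []
    group: List[List[float]] = []  # frames in the current run

    def flush() -> None:
        # Emit the mean of the current run, if any, then reset it.
        if group:
            dim = len(group[0])
            compressed.append([sum(f[d] for f in group) / len(group) for d in range(dim)])
            group.clear()

    prev = None
    for frame, label in zip(frames, labels):
        if label != prev:
            flush()  # a label change closes the previous run
        if label != blank:
            group.append(frame)  # blank frames are dropped
        prev = label
    flush()
    return compressed
```

For example, eight frames labeled `[0, 3, 3, 0, 5, 5, 5, 0]` compress to two vectors, one per emitted token, so the output length tracks the token sequence rather than the frame count.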

Benchmarking Children's ASR with Supervised and Self-supervised Speech Foundation Models

Jun 15, 2024

Abstract:Speech foundation models (SFMs) have achieved state-of-the-art results for various speech tasks in supervised (e.g. Whisper) or self-supervised systems (e.g. WavLM). However, the performance of SFMs for child ASR has not been systematically studied. In addition, there is no benchmark for child ASR with standard evaluations, making comparisons of novel ideas difficult. In this paper, we initiate and present a comprehensive benchmark on several child speech databases based on various SFMs (Whisper, Wav2vec2.0, HuBERT, and WavLM). Moreover, we investigate finetuning strategies by comparing various data augmentation and parameter-efficient finetuning (PEFT) methods. We observe that the behaviors of these methods differ as the model size increases. For example, PEFT matches the performance of full finetuning for large models but performs worse for small models. To stabilize finetuning using augmented data, we propose a perturbation invariant finetuning (PIF) loss as a regularization.

SOA: Reducing Domain Mismatch in SSL Pipeline by Speech Only Adaptation for Low Resource ASR

Jun 15, 2024

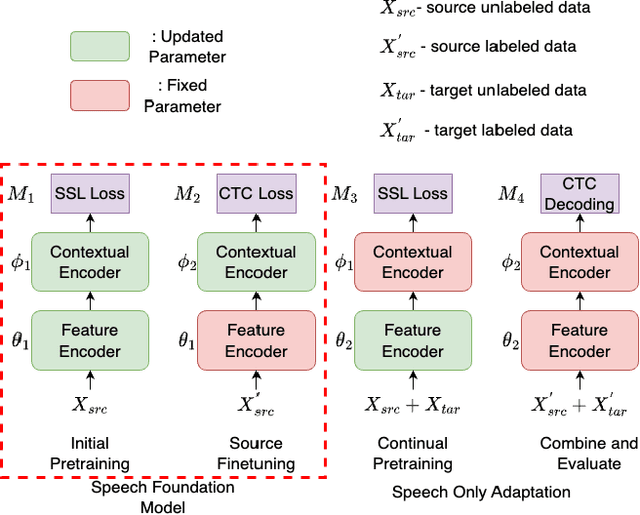

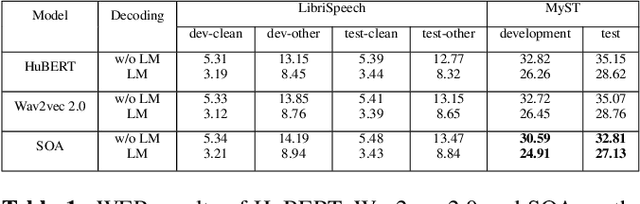

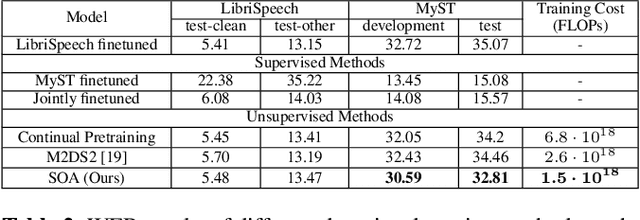

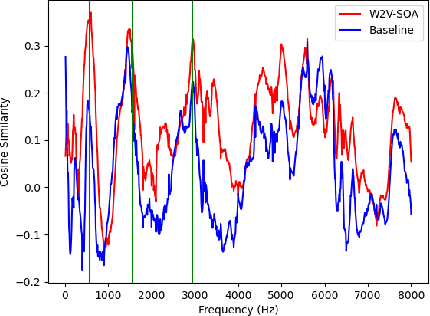

Abstract:Recently, speech foundation models have gained popularity due to their superiority in finetuning downstream ASR tasks. However, models finetuned on certain domains, such as LibriSpeech (adult read speech), behave poorly on other domains (child or noisy speech). One solution could be collecting as much labeled and diverse data as possible for joint finetuning on various domains. However, collecting target domain speech-text paired data and retraining the model is often costly and computationally expensive. In this paper, we introduce a simple yet effective method, speech only adaptation (SOA), based on speech foundation models (Wav2vec 2.0), which requires only speech input data from the target domain. Specifically, the Wav2vec 2.0 feature encoder is continually pretrained with the Wav2vec 2.0 loss on both the source and target domain data for domain adaptation, while the contextual encoder is frozen. Compared to a source domain finetuned model with the feature encoder being frozen during training, we find that replacing the frozen feature encoder with the adapted one provides significant WER improvements to the target domain while preserving the performance of the source domain. The effectiveness of SOA is examined on various low resource or domain mismatched ASR settings, including adult-child and clean-noisy speech.

UniEnc-CASSNAT: An Encoder-only Non-autoregressive ASR for Speech SSL Models

Feb 14, 2024

Abstract:Non-autoregressive automatic speech recognition (NASR) models have gained attention due to their parallelism and fast inference. The encoder-based NASR, e.g. connectionist temporal classification (CTC), can be initialized from speech foundation models (SFMs) but does not account for any dependencies among intermediate tokens. The encoder-decoder-based NASR, like CTC alignment-based single-step non-autoregressive transformer (CASS-NAT), can mitigate the dependency problem but is not able to efficiently integrate an SFM. Inspired by the success of recent work on speech-text joint pre-training with a shared transformer encoder, we propose a new encoder-based NASR, UniEnc-CASSNAT, to combine the advantages of CTC and CASS-NAT. UniEnc-CASSNAT consists of only an encoder as the major module, which can be the SFM. The encoder plays the role of both the CASS-NAT encoder and decoder by two forward passes. The first pass of the encoder accepts the speech signal as input, while the concatenation of the speech signal and the token-level acoustic embedding is used as the input for the second pass. Examined on the LibriSpeech 100h, MyST, and Aishell1 datasets, the proposed UniEnc-CASSNAT achieves state-of-the-art NASR results and is better than or comparable to CASS-NAT with only an encoder and hence fewer model parameters. Our code is publicly available.
