Riku Murai

Imperial College London

MASt3R-SLAM: Real-Time Dense SLAM with 3D Reconstruction Priors

Dec 16, 2024

Abstract:We present a real-time monocular dense SLAM system designed bottom-up from MASt3R, a two-view 3D reconstruction and matching prior. Equipped with this strong prior, our system is robust on in-the-wild video sequences despite making no assumption on a fixed or parametric camera model beyond a unique camera centre. We introduce efficient methods for pointmap matching, camera tracking and local fusion, graph construction and loop closure, and second-order global optimisation. With known calibration, a simple modification to the system achieves state-of-the-art performance across various benchmarks. Altogether, we propose a plug-and-play monocular SLAM system capable of producing globally-consistent poses and dense geometry while operating at 15 FPS.

SAFER-Splat: A Control Barrier Function for Safe Navigation with Online Gaussian Splatting Maps

Sep 15, 2024Abstract:SAFER-Splat (Simultaneous Action Filtering and Environment Reconstruction) is a real-time, scalable, and minimally invasive action filter, based on control barrier functions, for safe robotic navigation in a detailed map constructed at runtime using Gaussian Splatting (GSplat). We propose a novel Control Barrier Function (CBF) that not only induces safety with respect to all Gaussian primitives in the scene, but when synthesized into a controller, is capable of processing hundreds of thousands of Gaussians while maintaining a minimal memory footprint and operating at 15 Hz during online Splat training. Of the total compute time, a small fraction of it consumes GPU resources, enabling uninterrupted training. The safety layer is minimally invasive, correcting robot actions only when they are unsafe. To showcase the safety filter, we also introduce SplatBridge, an open-source software package built with ROS for real-time GSplat mapping for robots. We demonstrate the safety and robustness of our pipeline first in simulation, where our method is 20-50x faster, safer, and less conservative than competing methods based on neural radiance fields. Further, we demonstrate simultaneous GSplat mapping and safety filtering on a drone hardware platform using only on-board perception. We verify that under teleoperation a human pilot cannot invoke a collision. Our videos and codebase can be found at https://chengine.github.io/safer-splat.

PixRO: Pixel-Distributed Rotational Odometry with Gaussian Belief Propagation

Jun 14, 2024

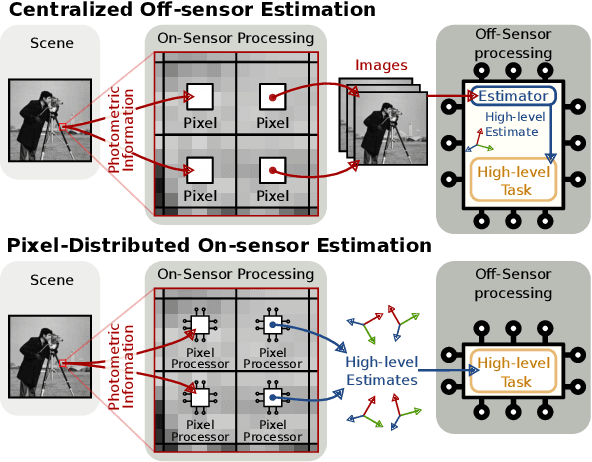

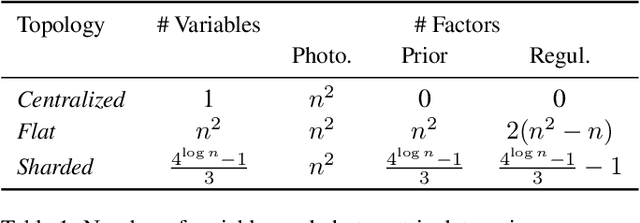

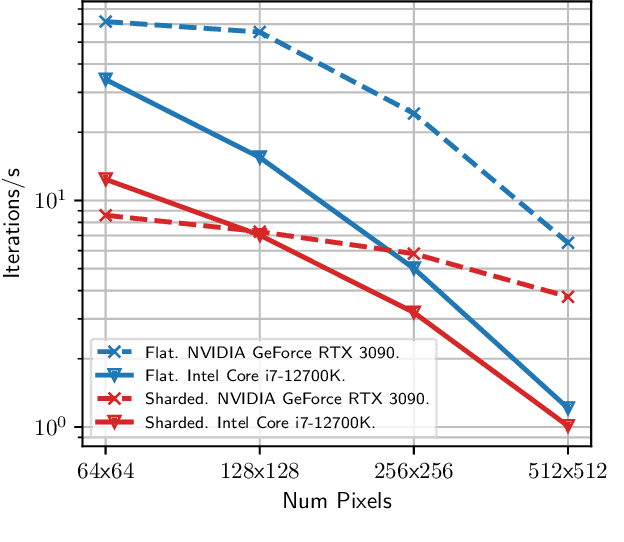

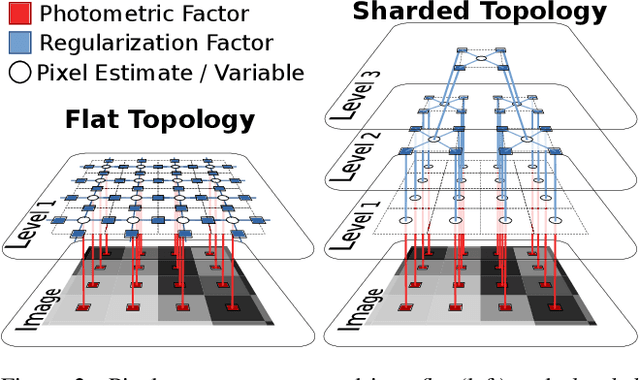

Abstract:Visual sensors are not only becoming better at capturing high-quality images but also they have steadily increased their capabilities in processing data on their own on-chip. Yet the majority of VO pipelines rely on the transmission and processing of full images in a centralized unit (e.g. CPU or GPU), which often contain much redundant and low-quality information for the task. In this paper, we address the task of frame-to-frame rotational estimation but, instead of reasoning about relative motion between frames using the full images, distribute the estimation at pixel-level. In this paradigm, each pixel produces an estimate of the global motion by only relying on local information and local message-passing with neighbouring pixels. The resulting per-pixel estimates can then be communicated to downstream tasks, yielding higher-level, informative cues instead of the original raw pixel-readings. We evaluate the proposed approach on real public datasets, where we offer detailed insights about this novel technique and open-source our implementation for the future benefit of the community.

Visual Inertial Odometry using Focal Plane Binary Features (BIT-VIO)

Mar 14, 2024

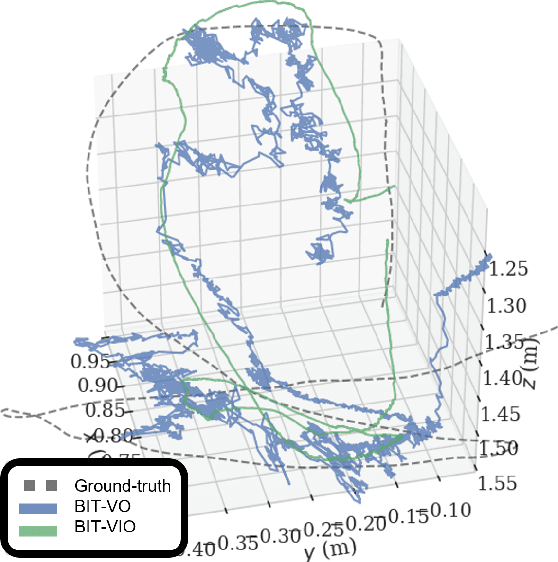

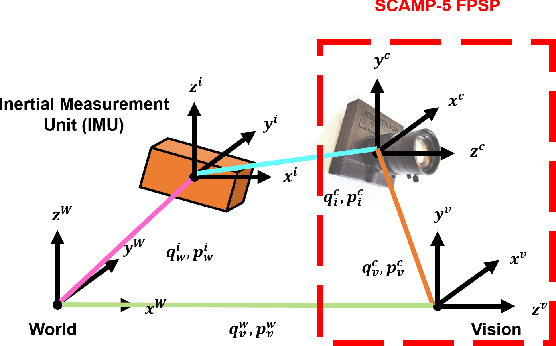

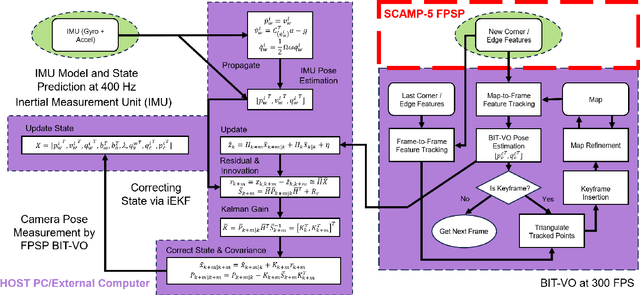

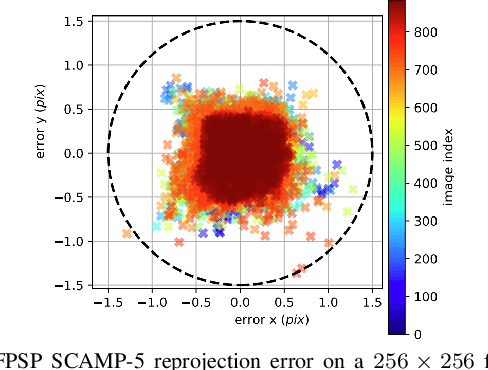

Abstract:Focal-Plane Sensor-Processor Arrays (FPSP)s are an emerging technology that can execute vision algorithms directly on the image sensor. Unlike conventional cameras, FPSPs perform computation on the image plane -- at individual pixels -- enabling high frame rate image processing while consuming low power, making them ideal for mobile robotics. FPSPs, such as the SCAMP-5, use parallel processing and are based on the Single Instruction Multiple Data (SIMD) paradigm. In this paper, we present BIT-VIO, the first Visual Inertial Odometry (VIO) which utilises SCAMP-5.BIT-VIO is a loosely-coupled iterated Extended Kalman Filter (iEKF) which fuses together the visual odometry running fast at 300 FPS with predictions from 400 Hz IMU measurements to provide accurate and smooth trajectories.

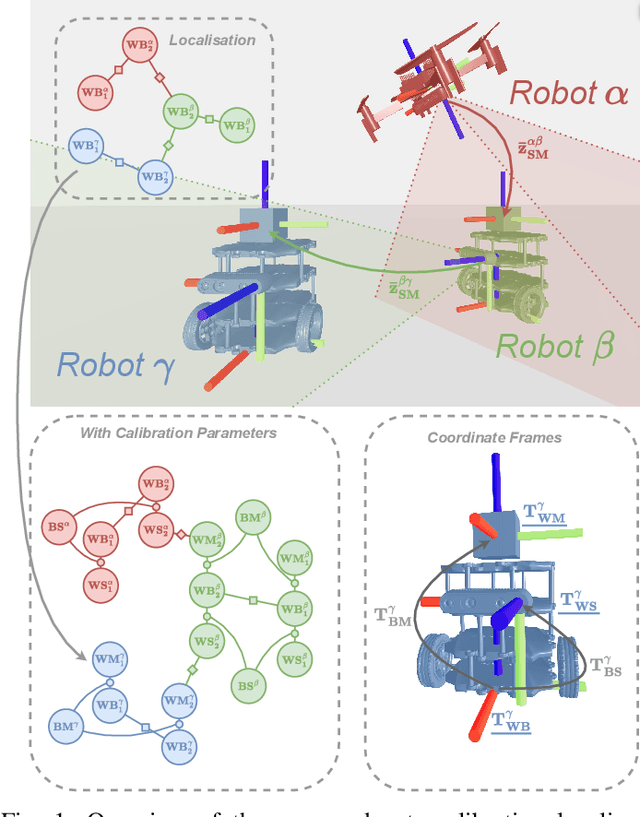

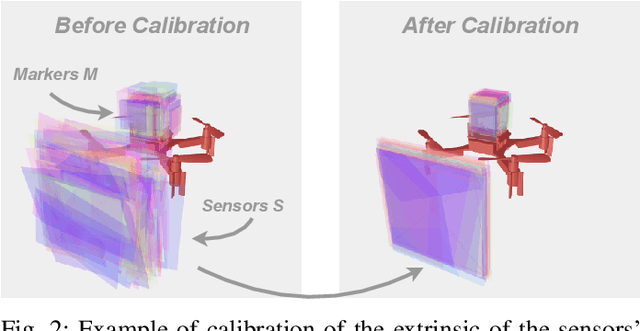

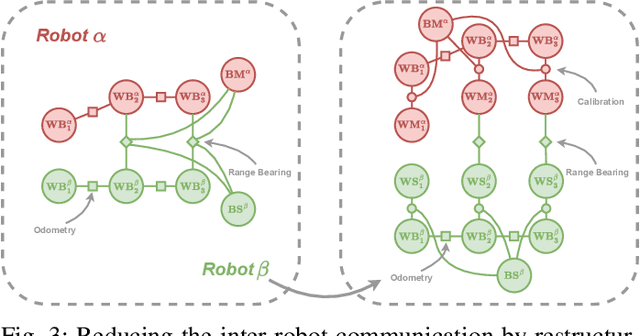

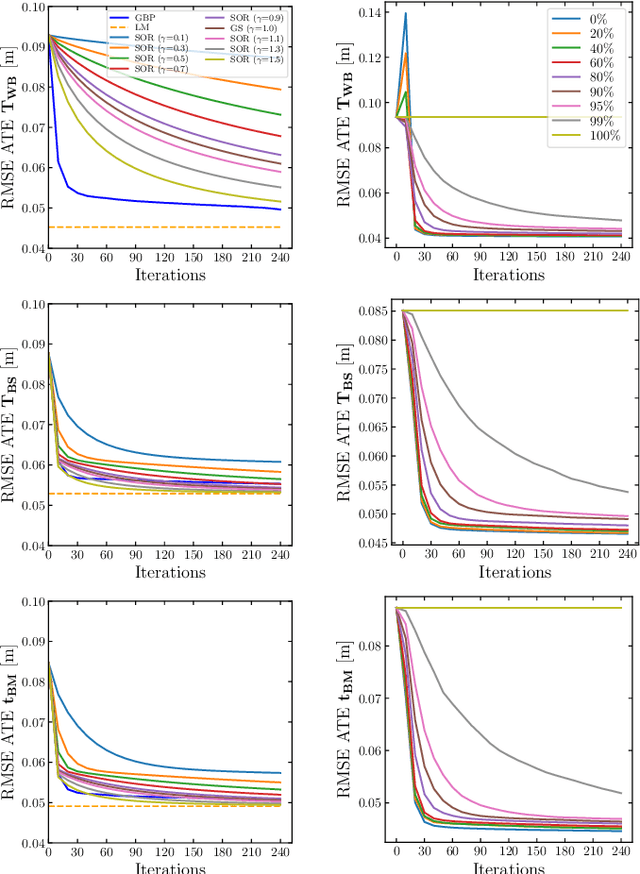

Distributed Simultaneous Localisation and Auto-Calibration using Gaussian Belief Propagation

Jan 26, 2024

Abstract:We present a novel scalable, fully distributed, and online method for simultaneous localisation and extrinsic calibration for multi-robot setups. Individual a priori unknown robot poses are probabilistically inferred as robots sense each other while simultaneously calibrating their sensors and markers extrinsic using Gaussian Belief Propagation. In the presented experiments, we show how our method not only yields accurate robot localisation and auto-calibration but also is able to perform under challenging circumstances such as highly noisy measurements, significant communication failures or limited communication range.

* Published in IEEE Robotics and Automation Letters (RA-L) 2024

Gaussian Splatting SLAM

Dec 11, 2023

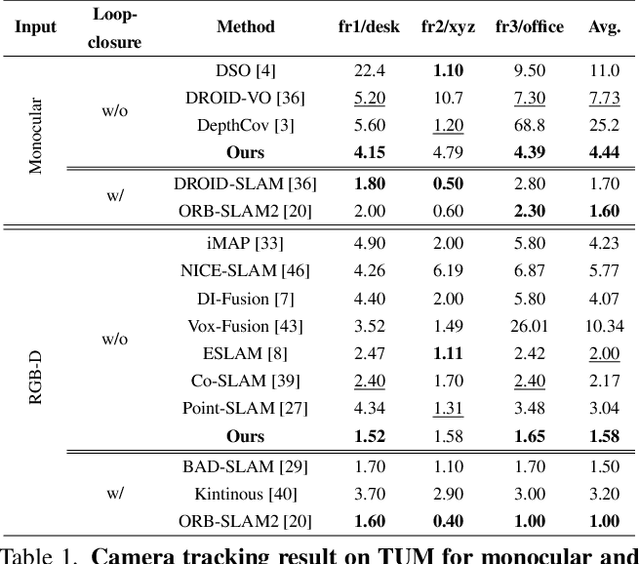

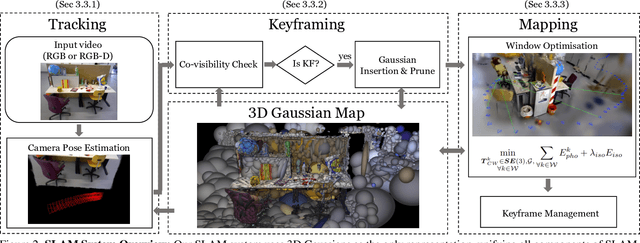

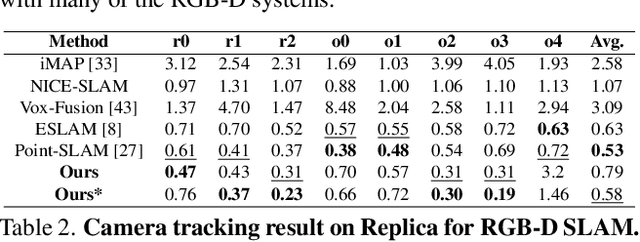

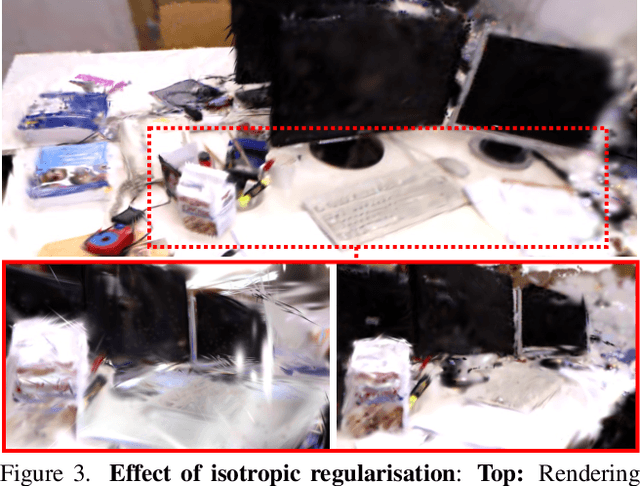

Abstract:We present the first application of 3D Gaussian Splatting to incremental 3D reconstruction using a single moving monocular or RGB-D camera. Our Simultaneous Localisation and Mapping (SLAM) method, which runs live at 3fps, utilises Gaussians as the only 3D representation, unifying the required representation for accurate, efficient tracking, mapping, and high-quality rendering. Several innovations are required to continuously reconstruct 3D scenes with high fidelity from a live camera. First, to move beyond the original 3DGS algorithm, which requires accurate poses from an offline Structure from Motion (SfM) system, we formulate camera tracking for 3DGS using direct optimisation against the 3D Gaussians, and show that this enables fast and robust tracking with a wide basin of convergence. Second, by utilising the explicit nature of the Gaussians, we introduce geometric verification and regularisation to handle the ambiguities occurring in incremental 3D dense reconstruction. Finally, we introduce a full SLAM system which not only achieves state-of-the-art results in novel view synthesis and trajectory estimation, but also reconstruction of tiny and even transparent objects.

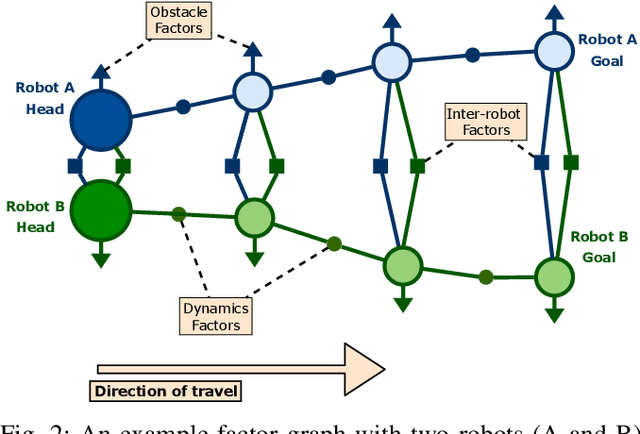

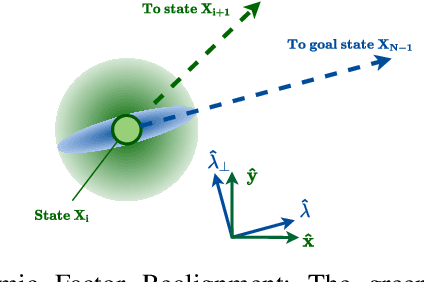

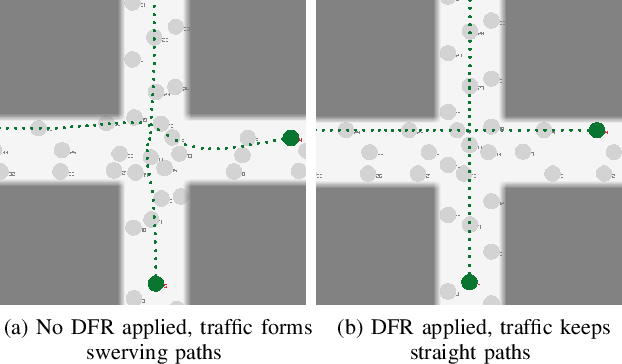

Distributing Collaborative Multi-Robot Planning with Gaussian Belief Propagation

Mar 22, 2022

Abstract:Precise coordinated planning enables safe and highly efficient motion when many robots must work together in tight spaces, but this would normally require centralised control of all devices which is difficult to scale. We demonstrate a new purely distributed technique based on Gaussian Belief Propagation on multi-robot planning problems formulated by a generic factor graph defining dynamics and collision constraints. We show that our method allows extremely high performance collaborative planning in a simulated road traffic scenario, where vehicles are able to cross each other at a busy multi-lane junction while maintaining much higher average speeds than alternative distributed planning techniques. We encourage the reader to view the accompanying video demonstration to this work at https://youtu.be/5d4LXbxgxaY.

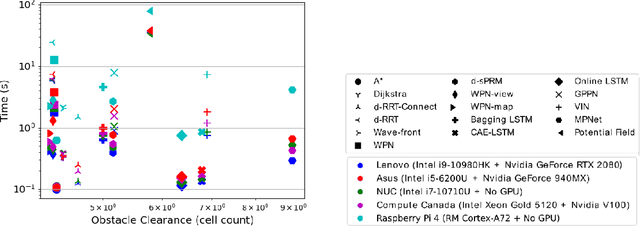

Systematic Comparison of Path Planning Algorithms using PathBench

Mar 07, 2022

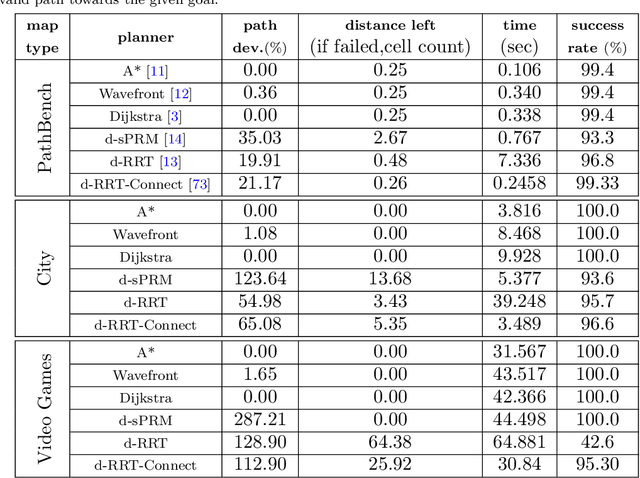

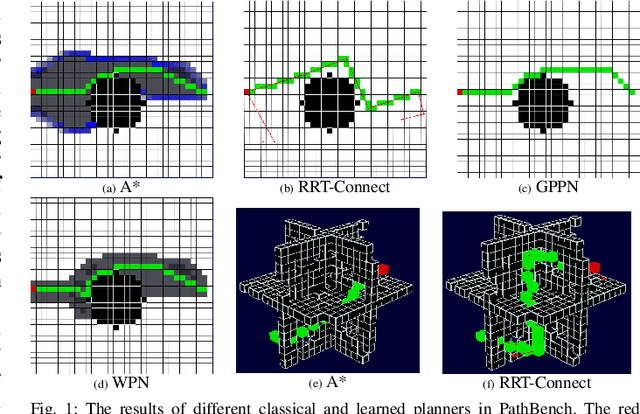

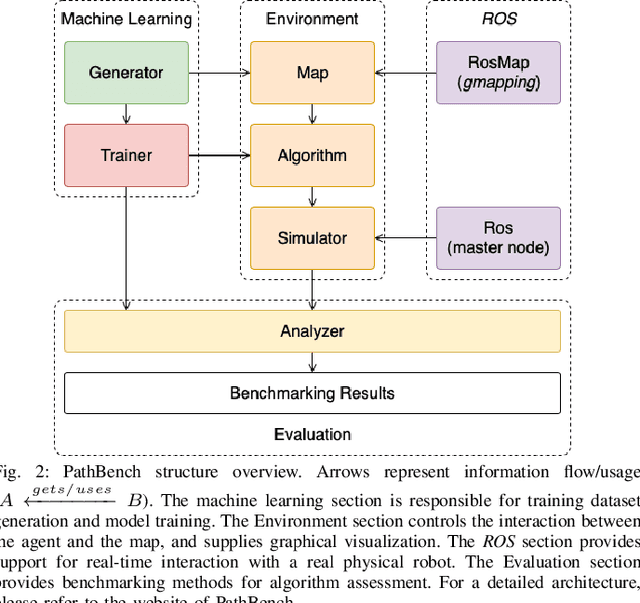

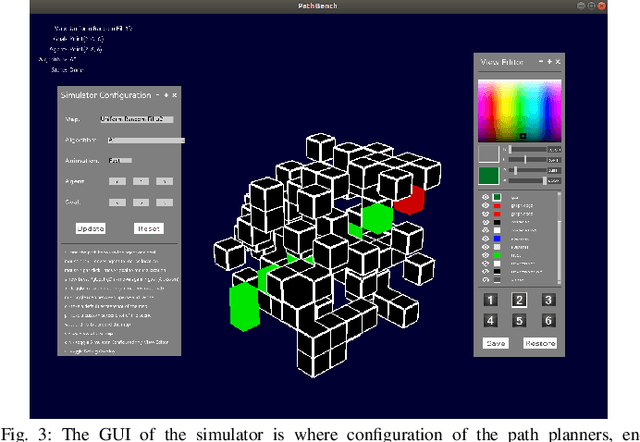

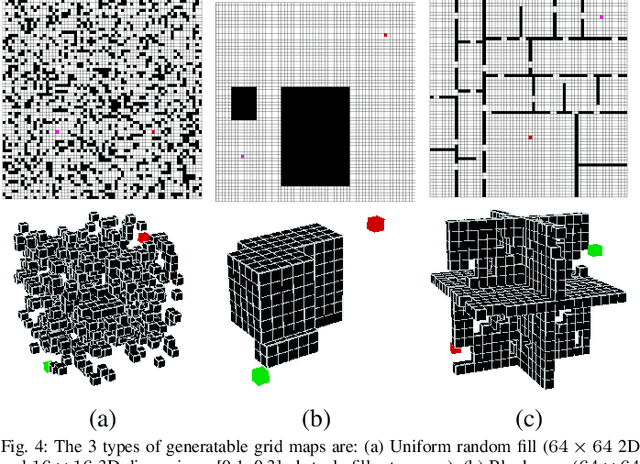

Abstract:Path planning is an essential component of mobile robotics. Classical path planning algorithms, such as wavefront and rapidly-exploring random tree (RRT) are used heavily in autonomous robots. With the recent advances in machine learning, development of learning-based path planning algorithms has been experiencing rapid growth. An unified path planning interface that facilitates the development and benchmarking of existing and new algorithms is needed. This paper presents PathBench, a platform for developing, visualizing, training, testing, and benchmarking of existing and future, classical and learning-based path planning algorithms in 2D and 3D grid world environments. Many existing path planning algorithms are supported; e.g. A*, Dijkstra, waypoint planning networks, value iteration networks, gated path planning networks; and integrating new algorithms is easy and clearly specified. The benchmarking ability of PathBench is explored in this paper by comparing algorithms across five different hardware systems and three different map types, including built-in PathBench maps, video game maps, and maps from real world databases. Metrics, such as path length, success rate, and computational time, were used to evaluate algorithms. Algorithmic analysis was also performed on a real world robot to demonstrate PathBench's support for Robot Operating System (ROS). PathBench is open source.

A Robot Web for Distributed Many-Device Localisation

Feb 07, 2022Abstract:We show that a distributed network of robots or other devices which make measurements of each other can collaborate to globally localise via efficient ad-hoc peer to peer communication. Our Robot Web solution is based on Gaussian Belief Propagation on the fundamental non-linear factor graph describing the probabilistic structure of all of the observations robots make internally or of each other, and is flexible for any type of robot, motion or sensor. We define a simple and efficient communication protocol which can be implemented by the publishing and reading of web pages or other asynchronous communication technologies. We show in simulations with up to 1000 robots interacting in arbitrary patterns that our solution convergently achieves global accuracy as accurate as a centralised non-linear factor graph solver while operating with high distributed efficiency of computation and communication. Via the use of robust factors in GBP, our method is tolerant to a high percentage of faults in sensor measurements or dropped communication packets.

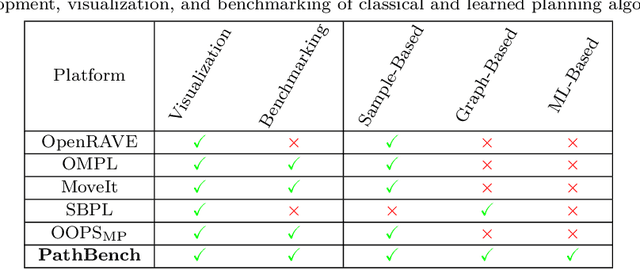

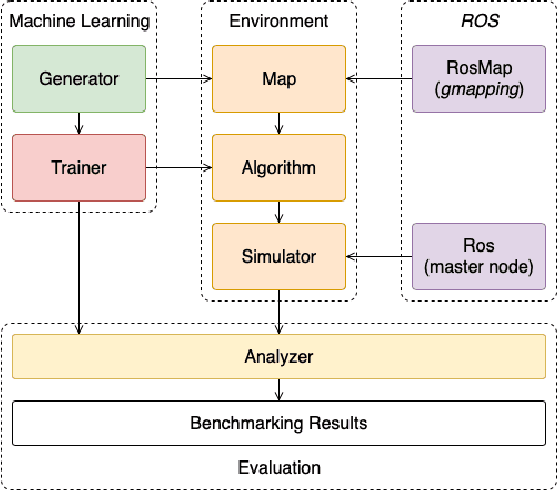

PathBench: A Benchmarking Platform for Classical and Learned Path Planning Algorithms

May 04, 2021

Abstract:Path planning is a key component in mobile robotics. A wide range of path planning algorithms exist, but few attempts have been made to benchmark the algorithms holistically or unify their interface. Moreover, with the recent advances in deep neural networks, there is an urgent need to facilitate the development and benchmarking of such learning-based planning algorithms. This paper presents PathBench, a platform for developing, visualizing, training, testing, and benchmarking of existing and future, classical and learned 2D and 3D path planning algorithms, while offering support for Robot Oper-ating System (ROS). Many existing path planning algorithms are supported; e.g. A*, wavefront, rapidly-exploring random tree, value iteration networks, gated path planning networks; and integrating new algorithms is easy and clearly specified. We demonstrate the benchmarking capability of PathBench by comparing implemented classical and learned algorithms for metrics, such as path length, success rate, computational time and path deviation. These evaluations are done on built-in PathBench maps and external path planning environments from video games and real world databases. PathBench is open source.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge