Javier Yu

VISTA: Open-Vocabulary, Task-Relevant Robot Exploration with Online Semantic Gaussian Splatting

Jul 01, 2025Abstract:We present VISTA (Viewpoint-based Image selection with Semantic Task Awareness), an active exploration method for robots to plan informative trajectories that improve 3D map quality in areas most relevant for task completion. Given an open-vocabulary search instruction (e.g., "find a person"), VISTA enables a robot to explore its environment to search for the object of interest, while simultaneously building a real-time semantic 3D Gaussian Splatting reconstruction of the scene. The robot navigates its environment by planning receding-horizon trajectories that prioritize semantic similarity to the query and exploration of unseen regions of the environment. To evaluate trajectories, VISTA introduces a novel, efficient viewpoint-semantic coverage metric that quantifies both the geometric view diversity and task relevance in the 3D scene. On static datasets, our coverage metric outperforms state-of-the-art baselines, FisherRF and Bayes' Rays, in computation speed and reconstruction quality. In quadrotor hardware experiments, VISTA achieves 6x higher success rates in challenging maps, compared to baseline methods, while matching baseline performance in less challenging maps. Lastly, we show that VISTA is platform-agnostic by deploying it on a quadrotor drone and a Spot quadruped robot. Open-source code will be released upon acceptance of the paper.

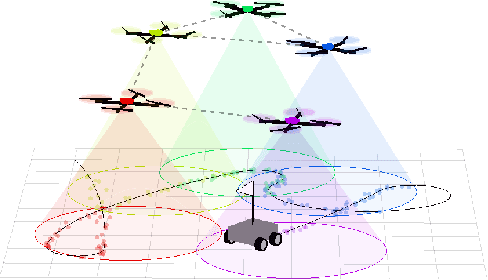

HAMMER: Heterogeneous, Multi-Robot Semantic Gaussian Splatting

Jan 24, 2025

Abstract:3D Gaussian Splatting offers expressive scene reconstruction, modeling a broad range of visual, geometric, and semantic information. However, efficient real-time map reconstruction with data streamed from multiple robots and devices remains a challenge. To that end, we propose HAMMER, a server-based collaborative Gaussian Splatting method that leverages widely available ROS communication infrastructure to generate 3D, metric-semantic maps from asynchronous robot data-streams with no prior knowledge of initial robot positions and varying on-device pose estimators. HAMMER consists of (i) a frame alignment module that transforms local SLAM poses and image data into a global frame and requires no prior relative pose knowledge, and (ii) an online module for training semantic 3DGS maps from streaming data. HAMMER handles mixed perception modes, adjusts automatically for variations in image pre-processing among different devices, and distills CLIP semantic codes into the 3D scene for open-vocabulary language queries. In our real-world experiments, HAMMER creates higher-fidelity maps (2x) compared to competing baselines and is useful for downstream tasks, such as semantic goal-conditioned navigation (e.g., ``go to the couch"). Accompanying content available at hammer-project.github.io.

SOUS VIDE: Cooking Visual Drone Navigation Policies in a Gaussian Splatting Vacuum

Dec 20, 2024

Abstract:We propose a new simulator, training approach, and policy architecture, collectively called SOUS VIDE, for end-to-end visual drone navigation. Our trained policies exhibit zero-shot sim-to-real transfer with robust real-world performance using only on-board perception and computation. Our simulator, called FiGS, couples a computationally simple drone dynamics model with a high visual fidelity Gaussian Splatting scene reconstruction. FiGS can quickly simulate drone flights producing photorealistic images at up to 130 fps. We use FiGS to collect 100k-300k observation-action pairs from an expert MPC with privileged state and dynamics information, randomized over dynamics parameters and spatial disturbances. We then distill this expert MPC into an end-to-end visuomotor policy with a lightweight neural architecture, called SV-Net. SV-Net processes color image, optical flow and IMU data streams into low-level body rate and thrust commands at 20Hz onboard a drone. Crucially, SV-Net includes a Rapid Motor Adaptation (RMA) module that adapts at runtime to variations in drone dynamics. In a campaign of 105 hardware experiments, we show SOUS VIDE policies to be robust to 30% mass variations, 40 m/s wind gusts, 60% changes in ambient brightness, shifting or removing objects from the scene, and people moving aggressively through the drone's visual field. Code, data, and experiment videos can be found on our project page: https://stanfordmsl.github.io/SousVide/.

SAFER-Splat: A Control Barrier Function for Safe Navigation with Online Gaussian Splatting Maps

Sep 15, 2024Abstract:SAFER-Splat (Simultaneous Action Filtering and Environment Reconstruction) is a real-time, scalable, and minimally invasive action filter, based on control barrier functions, for safe robotic navigation in a detailed map constructed at runtime using Gaussian Splatting (GSplat). We propose a novel Control Barrier Function (CBF) that not only induces safety with respect to all Gaussian primitives in the scene, but when synthesized into a controller, is capable of processing hundreds of thousands of Gaussians while maintaining a minimal memory footprint and operating at 15 Hz during online Splat training. Of the total compute time, a small fraction of it consumes GPU resources, enabling uninterrupted training. The safety layer is minimally invasive, correcting robot actions only when they are unsafe. To showcase the safety filter, we also introduce SplatBridge, an open-source software package built with ROS for real-time GSplat mapping for robots. We demonstrate the safety and robustness of our pipeline first in simulation, where our method is 20-50x faster, safer, and less conservative than competing methods based on neural radiance fields. Further, we demonstrate simultaneous GSplat mapping and safety filtering on a drone hardware platform using only on-board perception. We verify that under teleoperation a human pilot cannot invoke a collision. Our videos and codebase can be found at https://chengine.github.io/safer-splat.

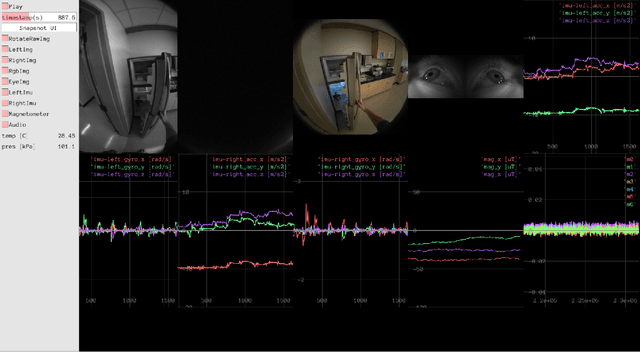

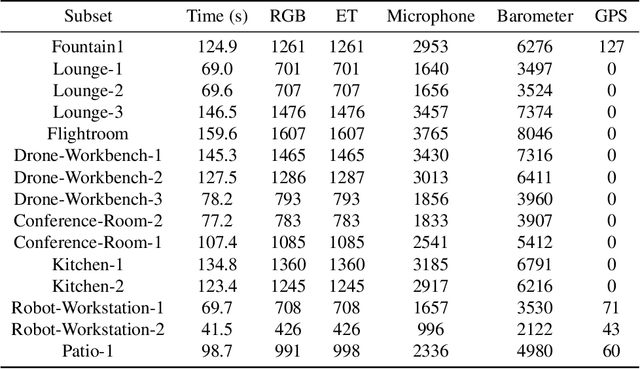

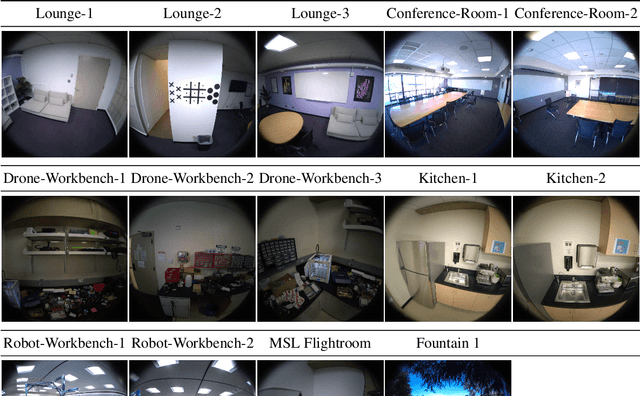

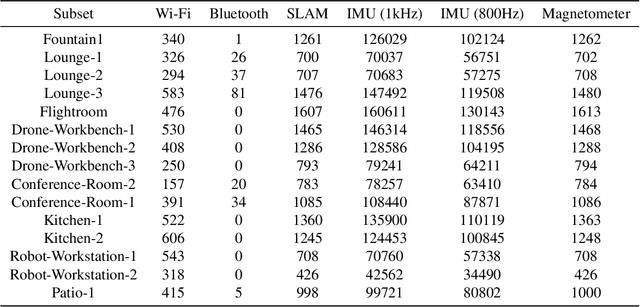

Aria-NeRF: Multimodal Egocentric View Synthesis

Nov 11, 2023

Abstract:We seek to accelerate research in developing rich, multimodal scene models trained from egocentric data, based on differentiable volumetric ray-tracing inspired by Neural Radiance Fields (NeRFs). The construction of a NeRF-like model from an egocentric image sequence plays a pivotal role in understanding human behavior and holds diverse applications within the realms of VR/AR. Such egocentric NeRF-like models may be used as realistic simulations, contributing significantly to the advancement of intelligent agents capable of executing tasks in the real-world. The future of egocentric view synthesis may lead to novel environment representations going beyond today's NeRFs by augmenting visual data with multimodal sensors such as IMU for egomotion tracking, audio sensors to capture surface texture and human language context, and eye-gaze trackers to infer human attention patterns in the scene. To support and facilitate the development and evaluation of egocentric multimodal scene modeling, we present a comprehensive multimodal egocentric video dataset. This dataset offers a comprehensive collection of sensory data, featuring RGB images, eye-tracking camera footage, audio recordings from a microphone, atmospheric pressure readings from a barometer, positional coordinates from GPS, connectivity details from Wi-Fi and Bluetooth, and information from dual-frequency IMU datasets (1kHz and 800Hz) paired with a magnetometer. The dataset was collected with the Meta Aria Glasses wearable device platform. The diverse data modalities and the real-world context captured within this dataset serve as a robust foundation for furthering our understanding of human behavior and enabling more immersive and intelligent experiences in the realms of VR, AR, and robotics.

NerfBridge: Bringing Real-time, Online Neural Radiance Field Training to Robotics

May 16, 2023Abstract:This work was presented at the IEEE International Conference on Robotics and Automation 2023 Workshop on Unconventional Spatial Representations. Neural radiance fields (NeRFs) are a class of implicit scene representations that model 3D environments from color images. NeRFs are expressive, and can model the complex and multi-scale geometry of real world environments, which potentially makes them a powerful tool for robotics applications. Modern NeRF training libraries can generate a photo-realistic NeRF from a static data set in just a few seconds, but are designed for offline use and require a slow pose optimization pre-computation step. In this work we propose NerfBridge, an open-source bridge between the Robot Operating System (ROS) and the popular Nerfstudio library for real-time, online training of NeRFs from a stream of images. NerfBridge enables rapid development of research on applications of NeRFs in robotics by providing an extensible interface to the efficient training pipelines and model libraries provided by Nerfstudio. As an example use case we outline a hardware setup that can be used NerfBridge to train a NeRF from images captured by a camera mounted to a quadrotor in both indoor and outdoor environments. For accompanying video https://youtu.be/EH0SLn-RcDg and code https://github.com/javieryu/nerf_bridge.

Distributed Optimization Methods for Multi-Robot Systems: Part II -- A Survey

Jan 26, 2023

Abstract:Although the field of distributed optimization is well-developed, relevant literature focused on the application of distributed optimization to multi-robot problems is limited. This survey constitutes the second part of a two-part series on distributed optimization applied to multi-robot problems. In this paper, we survey three main classes of distributed optimization algorithms -- distributed first-order methods, distributed sequential convex programming methods, and alternating direction method of multipliers (ADMM) methods -- focusing on fully-distributed methods that do not require coordination or computation by a central computer. We describe the fundamental structure of each category and note important variations around this structure, designed to address its associated drawbacks. Further, we provide practical implications of noteworthy assumptions made by distributed optimization algorithms, noting the classes of robotics problems suitable for these algorithms. Moreover, we identify important open research challenges in distributed optimization, specifically for robotics problem.

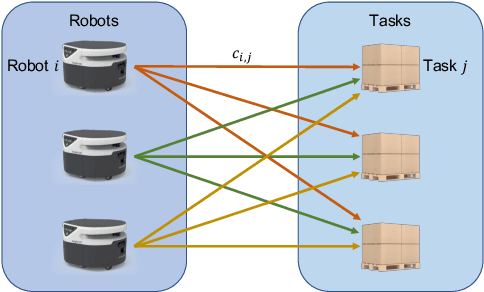

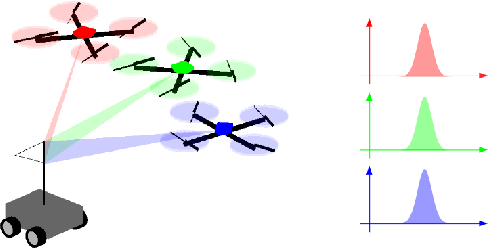

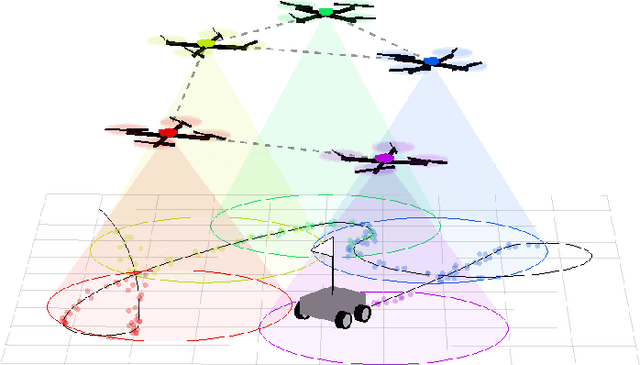

Distributed Optimization Methods for Multi-Robot Systems: Part I -- A Tutorial

Jan 26, 2023

Abstract:Distributed optimization provides a framework for deriving distributed algorithms for a variety of multi-robot problems. This tutorial constitutes the first part of a two-part series on distributed optimization applied to multi-robot problems, which seeks to advance the application of distributed optimization in robotics. In this tutorial, we demonstrate that many canonical multi-robot problems can be cast within the distributed optimization framework, such as multi-robot simultaneous localization and planning (SLAM), multi-robot target tracking, and multi-robot task assignment problems. We identify three broad categories of distributed optimization algorithms: distributed first-order methods, distributed sequential convex programming, and the alternating direction method of multipliers (ADMM). We describe the basic structure of each category and provide representative algorithms within each category. We then work through a simulation case study of multiple drones collaboratively tracking a ground vehicle. We compare solutions to this problem using a number of different distributed optimization algorithms. In addition, we implement a distributed optimization algorithm in hardware on a network of Rasberry Pis communicating with XBee modules to illustrate robustness to the challenges of real-world communication networks.

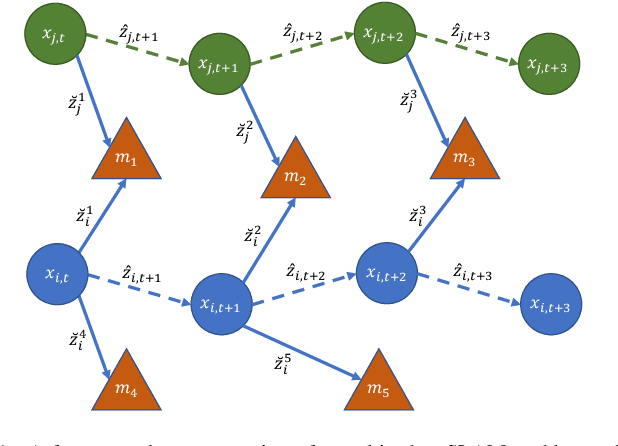

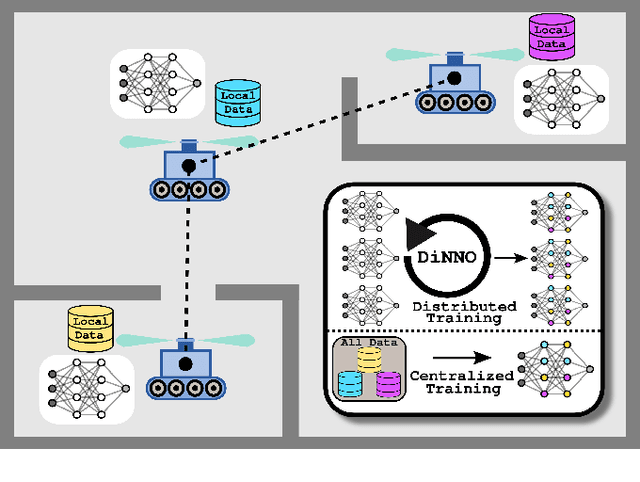

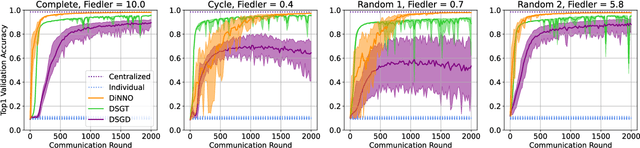

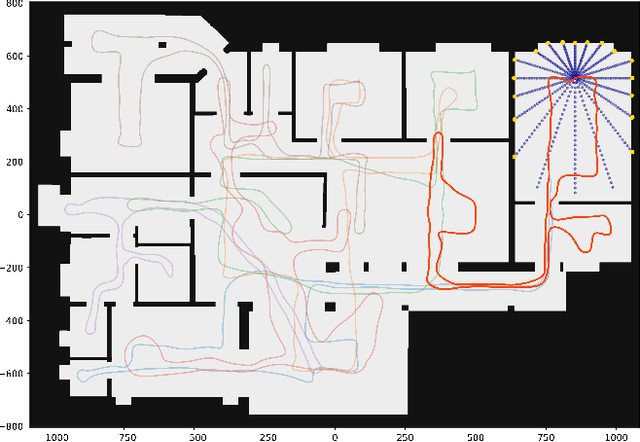

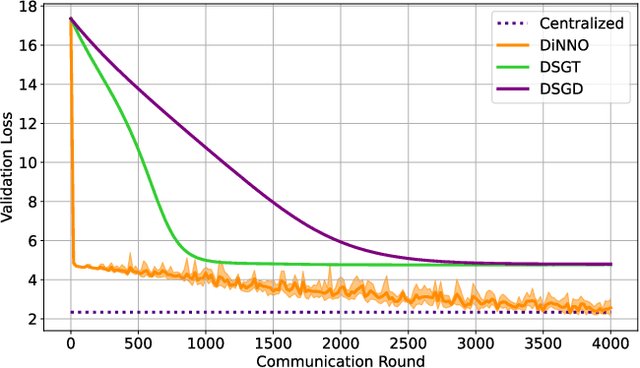

DiNNO: Distributed Neural Network Optimization for Multi-Robot Collaborative Learning

Sep 17, 2021

Abstract:We present a distributed algorithm that enables a group of robots to collaboratively optimize the parameters of a deep neural network model while communicating over a mesh network. Each robot only has access to its own data and maintains its own version of the neural network, but eventually learns a model that is as good as if it had been trained on all the data centrally. No robot sends raw data over the wireless network, preserving data privacy and ensuring efficient use of wireless bandwidth. At each iteration, each robot approximately optimizes an augmented Lagrangian function, then communicates the resulting weights to its neighbors, updates dual variables, and repeats. Eventually, all robots' local network weights reach a consensus. For convex objective functions, we prove this consensus is a global optimum. We compare our algorithm to two existing distributed deep neural network training algorithms in (i) an MNIST image classification task, (ii) a multi-robot implicit mapping task, and (iii) a multi-robot reinforcement learning task. In all of our experiments our method out performed baselines, and was able to achieve validation loss equivalent to centrally trained models. See \href{https://msl.stanford.edu/projects/dist_nn_train}{https://msl.stanford.edu/projects/dist\_nn\_train} for videos and a link to our GitHub repository.

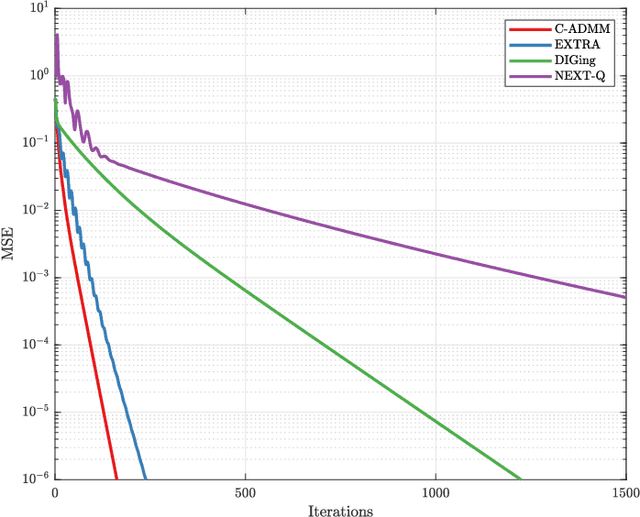

A Survey of Distributed Optimization Methods for Multi-Robot Systems

Mar 23, 2021

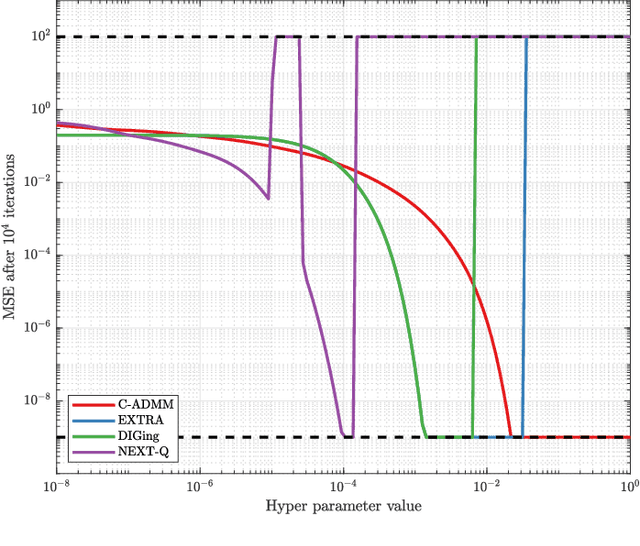

Abstract:Distributed optimization consists of multiple computation nodes working together to minimize a common objective function through local computation iterations and network-constrained communication steps. In the context of robotics, distributed optimization algorithms can enable multi-robot systems to accomplish tasks in the absence of centralized coordination. We present a general framework for applying distributed optimization as a module in a robotics pipeline. We survey several classes of distributed optimization algorithms and assess their practical suitability for multi-robot applications. We further compare the performance of different classes of algorithms in simulations for three prototypical multi-robot problem scenarios. The Consensus Alternating Direction Method of Multipliers (C-ADMM) emerges as a particularly attractive and versatile distributed optimization method for multi-robot systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge