PixRO: Pixel-Distributed Rotational Odometry with Gaussian Belief Propagation

Paper and Code

Jun 14, 2024

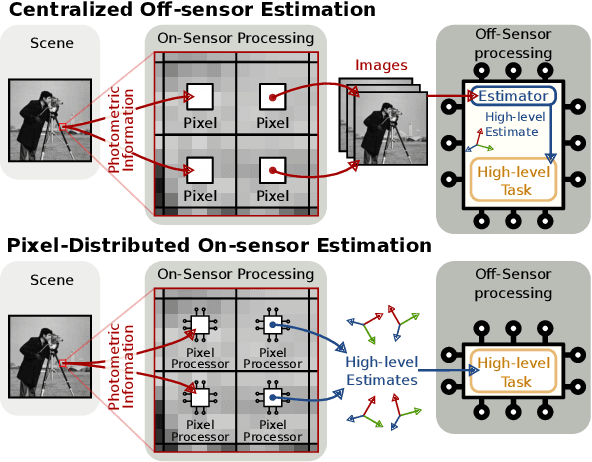

Visual sensors are not only becoming better at capturing high-quality images but also they have steadily increased their capabilities in processing data on their own on-chip. Yet the majority of VO pipelines rely on the transmission and processing of full images in a centralized unit (e.g. CPU or GPU), which often contain much redundant and low-quality information for the task. In this paper, we address the task of frame-to-frame rotational estimation but, instead of reasoning about relative motion between frames using the full images, distribute the estimation at pixel-level. In this paradigm, each pixel produces an estimate of the global motion by only relying on local information and local message-passing with neighbouring pixels. The resulting per-pixel estimates can then be communicated to downstream tasks, yielding higher-level, informative cues instead of the original raw pixel-readings. We evaluate the proposed approach on real public datasets, where we offer detailed insights about this novel technique and open-source our implementation for the future benefit of the community.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge