Riccardo Polvara

Active Informative Planning for UAV-based Weed Mapping using Discrete Gaussian Process Representations

Jan 19, 2026

Abstract:Accurate agricultural weed mapping using unmanned aerial vehicles (UAVs) is crucial for precision farming. While traditional methods rely on rigid, pre-defined flight paths and intensive offline processing, informative path planning (IPP) offers a way to collect data adaptively where it is most needed. Gaussian process (GP) mapping provides a continuous model of weed distribution with built-in uncertainty. However, GPs must be discretised for practical use in autonomous planning. Many discretisation techniques exist, but the impact of discrete representation choice remains poorly understood. This paper investigates how different discrete GP representations influence both mapping quality and mission-level performance in UAV-based weed mapping. Considering a UAV equipped with a downward-facing camera, we implement a receding-horizon IPP strategy that selects sampling locations based on the map uncertainty, travel cost, and coverage penalties. We investigate multiple discretisation strategies for representing the GP posterior and use their induced map partitions to generate candidate viewpoints for planning. Experiments on real-world weed distributions show that representation choice significantly affects exploration behaviour and efficiency. Overall, our results demonstrate that discretisation is not only a representational detail but a key design choice that shapes planning dynamics, coverage efficiency, and computational load in online UAV weed mapping.
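As a rough illustration of the viewpoint selection described in this abstract, the sketch below scores each candidate by its map uncertainty minus weighted travel and coverage penalties, then picks the best one. The linear form of the utility, the weights `w_travel` and `w_coverage`, and the function name `select_next_viewpoint` are assumptions made for illustration; the abstract does not state the actual formulation.

```python
import math

def select_next_viewpoint(candidates, position, visit_counts,
                          w_travel=0.1, w_coverage=0.5):
    """Pick the candidate (x, y, uncertainty) with the highest utility.

    The utility is a hypothetical linear trade-off:
    uncertainty - w_travel * distance - w_coverage * previous visits.
    """
    def utility(candidate):
        x, y, uncertainty = candidate
        travel = math.hypot(x - position[0], y - position[1])
        revisits = visit_counts.get((x, y), 0)
        return uncertainty - w_travel * travel - w_coverage * revisits

    return max(candidates, key=utility)

# One planning step: a nearby, moderately uncertain cell beats a distant one.
candidates = [(0.0, 1.0, 0.2), (5.0, 5.0, 0.9), (1.0, 0.0, 0.6)]
best = select_next_viewpoint(candidates, position=(0.0, 0.0), visit_counts={})
```

In a receding-horizon loop this selection would be repeated after every observation, with the uncertainties refreshed from the updated map.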

Keypoint Semantic Integration for Improved Feature Matching in Outdoor Agricultural Environments

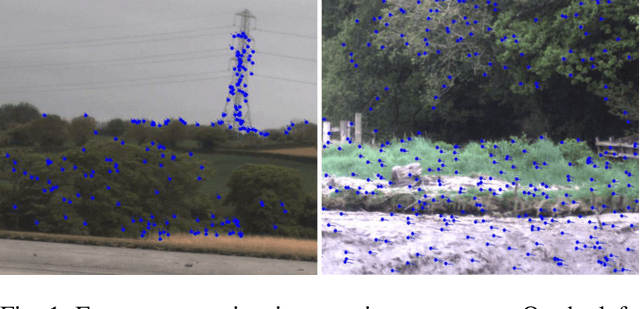

Mar 11, 2025

Abstract:Robust robot navigation in outdoor environments requires accurate perception systems capable of handling visual challenges such as repetitive structures and changing appearances. Visual feature matching is crucial to vision-based pipelines but remains particularly challenging in natural outdoor settings due to perceptual aliasing. We address this issue in vineyards, where repetitive vine trunks and other natural elements generate ambiguous descriptors that hinder reliable feature matching. We hypothesise that semantic information tied to keypoint positions can alleviate perceptual aliasing by enhancing keypoint descriptor distinctiveness. To this end, we introduce a keypoint semantic integration technique that improves the descriptors in semantically meaningful regions within the image, enabling more accurate differentiation even among visually similar local features. We validate this approach in two vineyard perception tasks: (i) relative pose estimation and (ii) visual localisation. Across all tested keypoint types and descriptors, our method improves matching accuracy by 12.6%, demonstrating its effectiveness over multiple months in challenging vineyard conditions.

Discrete Gaussian Process Representations for Optimising UAV-based Precision Weed Mapping

Mar 10, 2025

Abstract:Accurate agricultural weed mapping using UAVs is crucial for precision farming applications. Traditional methods rely on orthomosaic stitching from rigid flight paths, which is computationally intensive and time-consuming. Gaussian Process (GP)-based mapping offers continuous modelling of the underlying variable (i.e. weed distribution) but requires discretisation for practical tasks like path planning or visualisation. Current implementations often default to quadtrees or gridmaps without systematically evaluating alternatives. This study compares five discretisation methods: quadtrees, wedgelets, top-down binary space partition (BSP) trees using least square error (LSE), bottom-up BSP trees using graph merging, and variable-resolution hexagonal grids. Evaluations on real-world weed distributions measure visual similarity, mean squared error (MSE), and computational efficiency. Results show quadtrees perform best overall, but alternatives excel in specific scenarios: hexagons or BSP LSE suit fields with large, dominant weed patches, while quadtrees are optimal for dispersed small-scale distributions. These findings highlight the need to tailor discretisation approaches to weed distribution patterns (patch size, density, coverage) rather than relying on default methods. By choosing representations based on the underlying distribution, we can improve mapping accuracy and efficiency for precision agriculture applications.
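To make the quadtree option and the MSE metric concrete, here is a minimal sketch that splits a square grid of values until each block is nearly uniform, then measures the error of representing every block by its mean. The splitting rule (value spread within a tolerance `tol`), the power-of-two grid size, and the function names are assumptions for illustration, not the criteria used in the study.

```python
def build_quadtree(grid, x0, y0, size, tol):
    """Split a square block until its values differ by at most tol.

    Returns a list of leaves (x0, y0, size, mean). Assumes the grid
    side is a power of two so blocks always halve evenly.
    """
    cells = [grid[y][x]
             for y in range(y0, y0 + size)
             for x in range(x0, x0 + size)]
    mean = sum(cells) / len(cells)
    if size == 1 or max(cells) - min(cells) <= tol:
        return [(x0, y0, size, mean)]
    half = size // 2
    leaves = []
    for dy in (0, half):
        for dx in (0, half):
            leaves += build_quadtree(grid, x0 + dx, y0 + dy, half, tol)
    return leaves

def reconstruction_mse(grid, leaves):
    """Mean squared error when each leaf is replaced by its mean value."""
    total = 0.0
    for x0, y0, size, mean in leaves:
        for y in range(y0, y0 + size):
            for x in range(x0, x0 + size):
                total += (grid[y][x] - mean) ** 2
    return total / (len(grid) * len(grid[0]))

# Toy 4x4 field with one dense patch in the lower-right corner.
grid = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]
fine = build_quadtree(grid, 0, 0, 4, tol=0.1)    # splits once into four blocks
coarse = build_quadtree(grid, 0, 0, 4, tol=1.0)  # keeps a single block
```

Comparing `reconstruction_mse(grid, fine)` with `reconstruction_mse(grid, coarse)` shows the trade-off between the number of cells and the reconstruction error that such an evaluation would quantify.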

End-to-end Unsupervised Learning of Long-Term 3D Stable objects

Jan 26, 2023

Abstract:3D point cloud semantic classification is an important task in robotics as it enables a better understanding of the mapped environment. This work proposes to learn the long-term stability of the 3D objects using a neural network based on PointNet++, where the long-term stable object refers to a static object that cannot move on its own (e.g. tree, pole, building). The training data is generated in an unsupervised manner by assigning a continuous label to individual points by exploiting multiple time slices of the same environment. Instead of using discrete labels, i.e. static/dynamic, we propose to use a continuous label value indicating point temporal stability to train a regression PointNet++ network. We evaluated our approach on point cloud data of two parking lots from the NCLT dataset. The experiments' performance reveals that static vs dynamic object classification is best performed by training a regression model, followed by thresholding, compared to directly training a classification model.

Collection and Evaluation of a Long-Term 4D Agri-Robotic Dataset

Nov 25, 2022

Abstract:Long-term autonomy is one of the most sought-after capabilities in a robot. The possibility to perform the same task over and over on a long temporal horizon, offering a high standard of reproducibility and robustness, is appealing. Long-term autonomy can play a crucial role in the adoption of robotic systems for precision agriculture, for example in assisting humans in monitoring and harvesting crops in a large orchard. With this scope in mind, we report an ongoing effort in the long-term deployment of an autonomous mobile robot in a vineyard for data collection across multiple months. The main aim is to collect data from the same area at different points in time so as to be able to analyse the impact of environmental changes on the mapping and localisation tasks. In this work, we present a map-based localisation study using 4 data sessions. We identify expected failures when the pre-built map visually differs from the environment's current appearance and we anticipate LTS-Net, a solution aimed at extracting stable temporal features for improving long-term 4D localisation results.

Benchmark of visual and 3D lidar SLAM systems in simulation environment for vineyards

Jul 12, 2021

Abstract:In this work, we present a comparative analysis of the trajectories estimated from various Simultaneous Localization and Mapping (SLAM) systems in a simulation environment for vineyards. The vineyard environment is challenging for SLAM methods due to visual appearance changes over time, uneven terrain, and repeated visual patterns. For this reason, we created a simulation environment specifically for vineyards to help study SLAM systems in such a challenging environment. We evaluated the following SLAM systems: LIO-SAM, StaticMapping, ORB-SLAM2, and RTAB-MAP in four different scenarios. The mobile robot used in this study was equipped with 2D and 3D lidars, an IMU, and an RGB-D camera (Kinect v2). The results show good and encouraging performance of RTAB-MAP in such an environment.

Navigate-and-Seek: a Robotics Framework for People Localization in Agricultural Environments

Jul 08, 2021

Abstract:The agricultural domain offers a working environment where many human laborers are nowadays employed to maintain or harvest crops, with huge potential for productivity gains through the introduction of robotic automation. Detecting and localizing humans reliably and accurately in such an environment, however, is a prerequisite to many services offered by fleets of mobile robots collaborating with human workers. Consequently, in this paper, we expand on the concept of a topological particle filter (TPF) to accurately and individually localize and track workers in a farm environment, integrating information from heterogeneous sensors and combining local active sensing (exploiting a robot's onboard sensing employing a Next-Best-Sense planning approach) and global localization (using affordable IoT GNSS devices). We validate the proposed approach in topologies created for the deployment of robotics fleets to support fruit pickers in a real farm environment. By combining multi-sensor observations on the topological level complemented by active perception through the NBS approach, we show that we can improve the accuracy of picker localization in comparison to prior work.

Autonomous Quadrotor Landing using Deep Reinforcement Learning

Feb 27, 2018

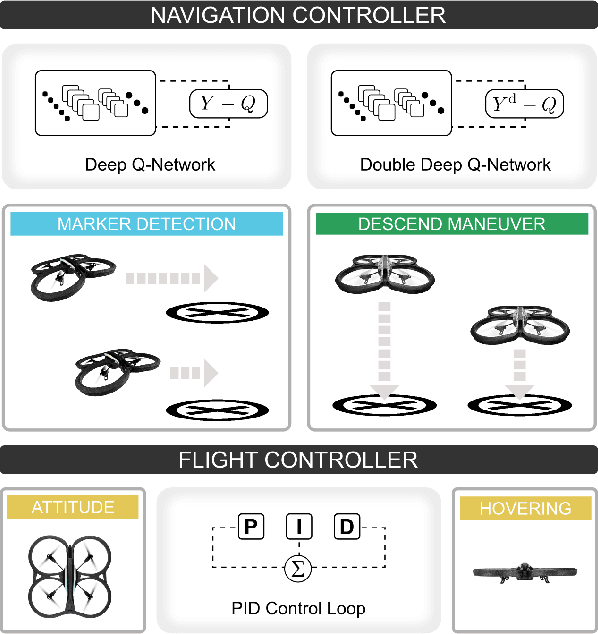

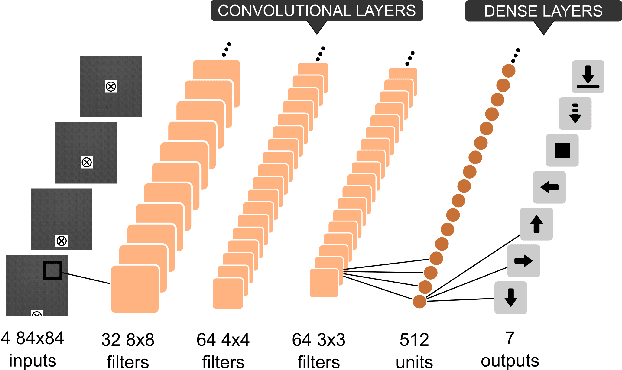

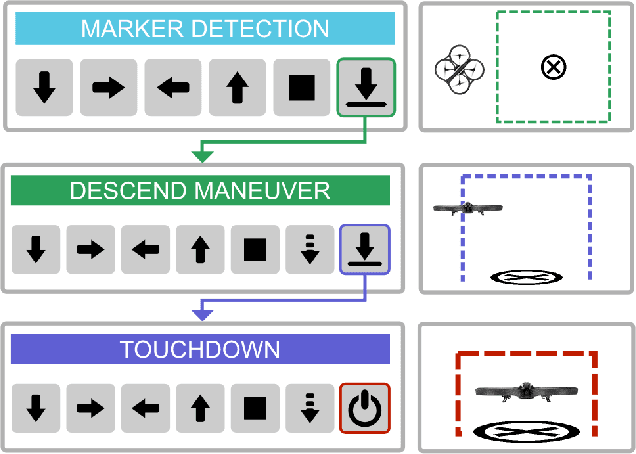

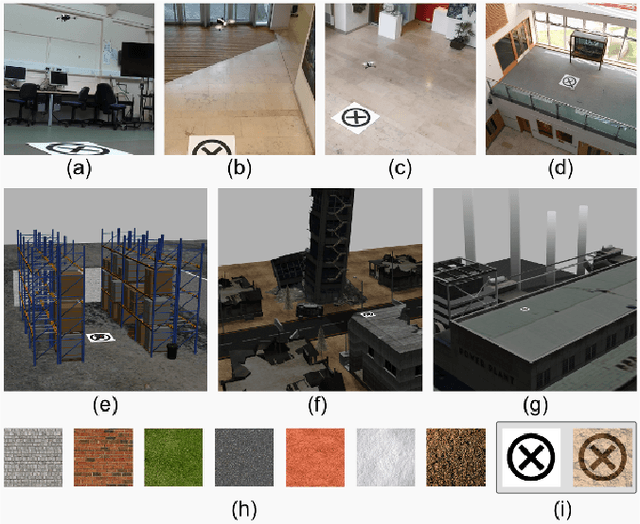

Abstract:Landing an unmanned aerial vehicle (UAV) on a ground marker is an open problem despite the effort of the research community. Previous attempts mostly focused on the analysis of hand-crafted geometric features and the use of external sensors in order to allow the vehicle to approach the land-pad. In this article, we propose a method based on deep reinforcement learning that only requires low-resolution images taken from a down-looking camera in order to identify the position of the marker and land the UAV on it. The proposed approach is based on a hierarchy of Deep Q-Networks (DQNs) used as high-level control policy for the navigation toward the marker. We implemented different technical solutions, such as the combination of vanilla and double DQNs, and a partitioned buffer replay. Using domain randomization we trained the vehicle on uniform textures and we tested it on a large variety of simulated and real-world environments. The overall performance is comparable with a state-of-the-art algorithm and human pilots.

A Next-Best-Smell Approach for Remote Gas Detection with a Mobile Robot

Jan 21, 2018

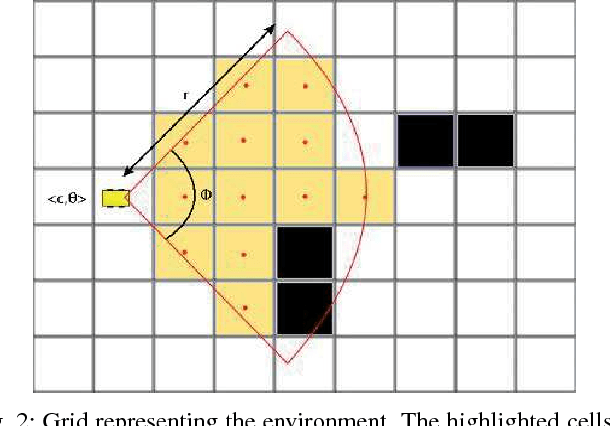

Abstract:The problem of gas detection is relevant to many real-world applications, such as leak detection in industrial settings and landfill monitoring. Using mobile robots for gas detection has several advantages and can reduce danger for humans. In our work, we address the problem of planning a path for a mobile robotic platform equipped with a remote gas sensor, which minimizes the time to detect all gas sources in a given environment. We cast this problem as a coverage planning problem by defining a basic sensing operation -- a scan with the remote gas sensor -- as the field of "view" of the sensor. Given the computing effort required by previously proposed offline approaches, in this paper we suggest an online coverage algorithm, called Next-Best-Smell, adapted from the Next-Best-View class of exploration algorithms. Our algorithm evaluates candidate locations with a global utility function, which combines utility values for travel distance, information gain, and sensing time, using Multi-Criteria Decision Making. In our experiments, conducted both in simulation and with a real robot, we found the performance of the Next-Best-Smell approach to be comparable with that of the state-of-the-art offline algorithm, at much lower computational cost.

Monocular Visual Odometry for an Unmanned Sea-Surface Vehicle

Jul 19, 2017

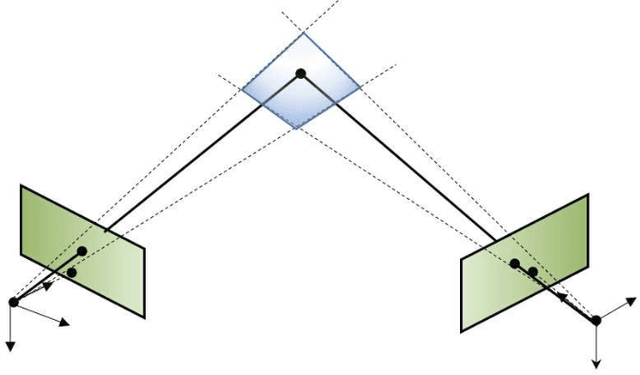

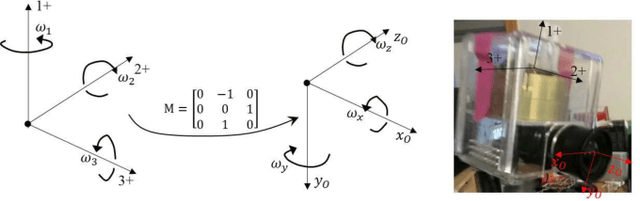

Abstract:We tackle the problem of localizing an autonomous sea-surface vehicle in river estuarine areas using a monocular camera and angular velocity input from an inertial sensor. Our method is challenged by two prominent drawbacks associated with the environment, which are typically not present in standard visual simultaneous localization and mapping (SLAM) applications on land (or air): a) scene depth varies significantly (from a few meters to several kilometers), and b) in conjunction with the latter, there exists no ground plane to provide features with enough disparity based on which to reliably detect motion. To that end, we use the IMU orientation feedback in order to re-cast the problem of visual localization without the mapping component, although the map can be implicitly obtained from the camera pose estimates. We find that our method produces reliable odometry estimates for trajectories several hundred meters long in the water. To compare the visual odometry estimates with GPS-based ground truth, we interpolate the trajectory with splines on a common parameter and obtain position error in meters by recovering an optimal affine transformation between the two splines.
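The evaluation step at the end of this abstract can be sketched as a least-squares affine fit between two sets of matched 2D trajectory samples, followed by a per-sample position error. This sketch assumes the two trajectories have already been resampled at common parameter values (the spline interpolation is not reproduced here), works in 2D, and uses hypothetical function names; it also assumes the source points are not all collinear, otherwise the normal equations are singular.

```python
import math

def solve_linear(matrix, rhs):
    """Solve matrix @ x = rhs by Gauss-Jordan elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * p for a, p in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]

def fit_affine_2d(source, target):
    """Least-squares affine map from source to target points.

    Returns two coefficient rows (a, b, tx) and (c, d, ty) such that
    u ≈ a*x + b*y + tx and v ≈ c*x + d*y + ty.
    """
    rows = [(x, y, 1.0) for x, y in source]
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(3)]
              for i in range(3)]
    coeffs = []
    for dim in range(2):
        rhs = [sum(r[i] * t[dim] for r, t in zip(rows, target))
               for i in range(3)]
        coeffs.append(solve_linear(normal, rhs))
    return coeffs

def position_errors(source, target):
    """Distance between each aligned source sample and its target sample."""
    (a, b, tx), (c, d, ty) = fit_affine_2d(source, target)
    return [math.hypot(a * x + b * y + tx - u, c * x + d * y + ty - v)
            for (x, y), (u, v) in zip(source, target)]

# Toy check: the "GPS" track is the odometry track scaled by 2 and shifted.
odometry = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
gps = [(1.0, -1.0), (3.0, -1.0), (1.0, 1.0), (3.0, 1.0)]
errors = position_errors(odometry, gps)
```

An affine fit absorbs the unknown scale of monocular odometry along with rotation and offset, which is one plausible reason for preferring it over a rigid alignment here.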
