Grzegorz Cielniak

From Simulation to Field: Learning Terrain Traversability for Real-World Deployment

Jan 12, 2025

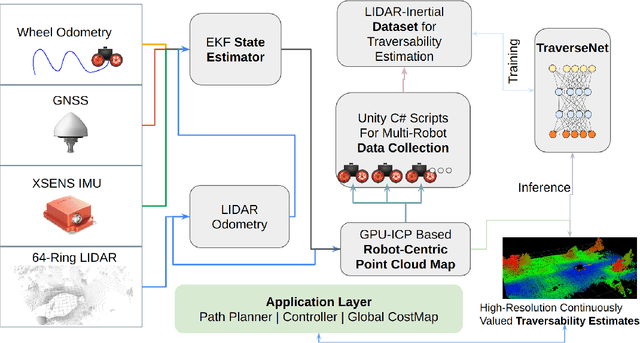

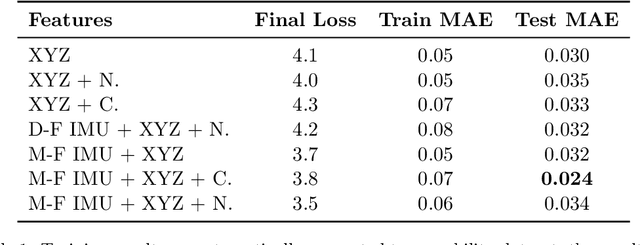

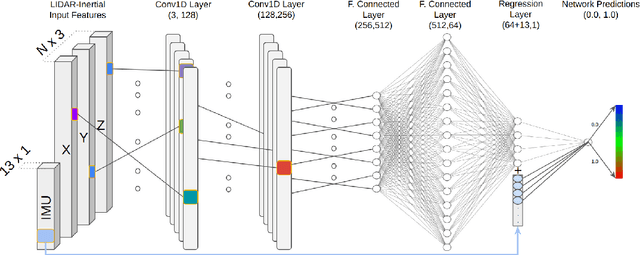

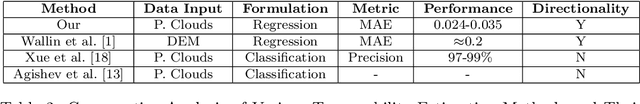

Abstract: Traversability estimation is a crucial aspect of autonomous navigation in unstructured outdoor environments such as forests. It involves determining whether certain areas are passable or risky for robots, taking into account factors like terrain irregularities, slopes, and potential obstacles. Most current methods for traversability estimation assume offline computation and overlook the significant influence of the robot's heading direction on accurate traversability estimates. In this work, we introduce a deep neural network that uses detailed geometric environmental data together with the robot's recent movement characteristics. This fusion yields direction-aware, continuous traversability estimates, which are essential for enhancing robot autonomy in challenging terrains like dense forests. Experiments conducted on both simulated and real robotic platforms in various environments show quantitatively superior performance compared to existing methods. Moreover, we demonstrate that our method, trained exclusively in a high-fidelity simulated setting, can accurately predict traversability in real-world applications without any real data collection. Our experiments showcase the advantages of our method for optimizing path-planning and exploration tasks within difficult outdoor environments, underscoring its practicality for effective, real-world robotic navigation. In the spirit of collaborative advancement, we have made the code implementation available to the public.

Unsupervised Tomato Split Anomaly Detection using Hyperspectral Imaging and Variational Autoencoders

Jan 06, 2025

Abstract: Tomato anomalies and damage pose a significant challenge in greenhouse farming. While this method of cultivation benefits from efficient resource utilization, anomalies can significantly degrade the quality of farm produce. A common anomaly associated with tomatoes is splitting, characterized by the development of cracks on the tomato skin, which degrades its quality. Detecting this type of anomaly is challenging due to dynamic variations in appearance and size, compounded by dataset scarcity. We address this problem in an unsupervised manner by utilizing a tailored variational autoencoder (VAE) with hyperspectral input. Preliminary analysis of the dataset enabled us to select the optimal range of wavelengths for detecting this anomaly. Our findings indicate that the 530 nm - 550 nm range is suitable for identifying tomato dry splits. Analysis of the reconstruction loss allows us not only to detect the anomalies but also, to some degree, to estimate the anomalous regions.
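As a rough illustration of how a reconstruction loss can both flag an anomaly and hint at its location, the sketch below compares an input band image with its reconstruction pixel by pixel. It does not implement the VAE. The function name `anomaly_map`, the use of squared error, and the fixed `threshold` are assumptions made for this example and are not taken from the paper.

```python
def anomaly_map(image, reconstruction, threshold):
    """Score one image against its reconstruction.

    image, reconstruction: 2D lists of floats with the same shape
    (hypothetical single-band reflectance values).
    threshold: assumed per-pixel squared-error cut-off.
    Returns (mean squared error, boolean mask of suspicious pixels).
    """
    # Per-pixel squared reconstruction error.
    errors = [
        [(x - y) ** 2 for x, y in zip(row_in, row_out)]
        for row_in, row_out in zip(image, reconstruction)
    ]
    # Pixels the model reconstructs poorly are marked as anomalous.
    mask = [[e > threshold for e in row] for row in errors]
    # Image-level score: mean error over all pixels.
    n_pixels = sum(len(row) for row in errors)
    score = sum(sum(row) for row in errors) / n_pixels
    return score, mask


# Toy example: only the bottom-right pixel is reconstructed badly.
image = [[0.2, 0.2], [0.2, 0.9]]
reconstruction = [[0.2, 0.2], [0.2, 0.3]]
score, mask = anomaly_map(image, reconstruction, threshold=0.1)
```

In practice the image-level score would be compared against a cut-off chosen on anomaly-free data, while the mask gives a coarse estimate of the affected region.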

Resilient Timed Elastic Band Planner for Collision-Free Navigation in Unknown Environments

Dec 04, 2024

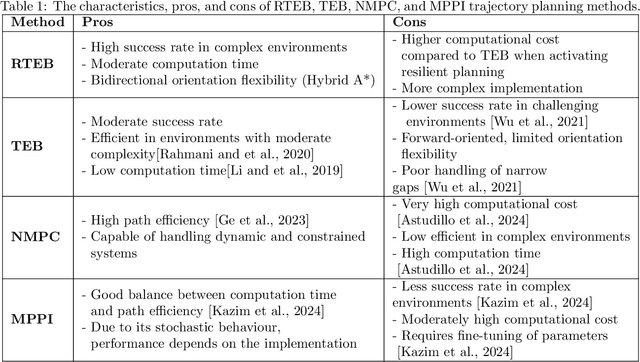

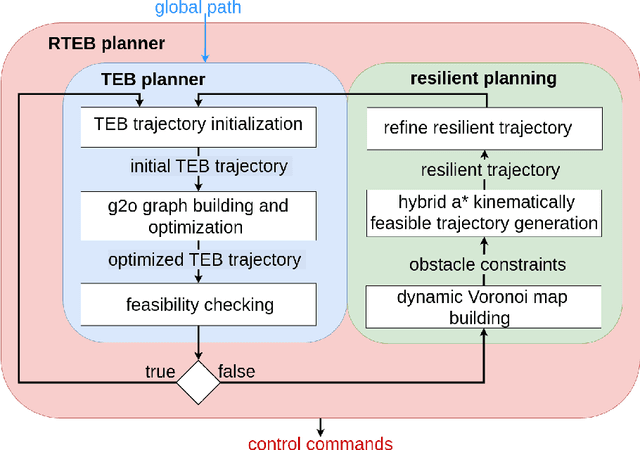

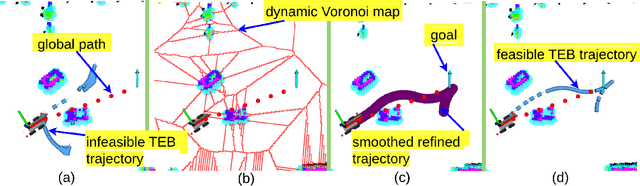

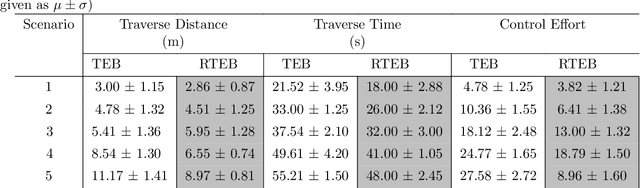

Abstract: In autonomous navigation, trajectory replanning, refinement, and control command generation are essential for effective motion planning. This paper presents a resilient approach to trajectory replanning that addresses scenarios where the initial planner's solution becomes infeasible. The proposed method incorporates a hybrid A* algorithm to generate feasible trajectories when the primary planner fails and applies a soft constraints-based smoothing technique to refine these trajectories, ensuring continuity, obstacle avoidance, and kinematic feasibility. Obstacle constraints are modelled using a dynamic Voronoi map to improve navigation through narrow passages. This approach enhances the consistency of trajectory planning, speeds up convergence, and meets real-time computational requirements. In environments with around 30% or higher obstacle density, measured as the ratio of free space before and after placing new obstacles, the Resilient Timed Elastic Band (RTEB) planner achieves an approximately 20% reduction in traverse distance, traverse time, and control effort compared to the Timed Elastic Band (TEB) planner and the Nonlinear Model Predictive Control (NMPC) planner. These improvements demonstrate the RTEB planner's potential for application in field robotics, particularly in agricultural and industrial environments, where navigating unstructured terrain is crucial for ensuring efficiency and operational resilience.

Interactive Image-Based Aphid Counting in Yellow Water Traps under Stirring Actions

Nov 15, 2024

Abstract: Current vision-based aphid counting methods for water traps suffer from undercounts caused by occlusions and low visibility arising from dense aggregation of insects and other objects. To address this problem, we propose a novel aphid counting method based on interactive stirring actions. We use interactive stirring to alter the distribution of aphids in the yellow water trap and capture a sequence of images, which are then used for aphid detection and counting through an optimized small object detection network based on YOLOv5. We also propose a counting confidence evaluation system to evaluate the confidence of the counting results. The final counting result is a weighted sum of the counting results from all sequence images based on the counting confidence. Experimental results show that our proposed aphid detection network significantly outperforms the original YOLOv5, with improvements of 33.9% in AP@0.5 and 26.9% in AP@[0.5:0.95] on the aphid test set. In addition, the aphid counting test results using our proposed counting confidence evaluation system show significant improvements over the static counting method, closely aligning with manual counting results.
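The confidence-weighted fusion of per-image counts can be sketched as follows. This is a minimal illustration, not the paper's formula: the function name `fuse_counts` is hypothetical, and normalising the confidences so that the weights sum to one is an assumption made here.

```python
def fuse_counts(counts, confidences):
    """Combine per-image aphid counts into one estimate.

    counts: detections counted in each image of the stirring sequence.
    confidences: non-negative counting confidence for each image.
    Assumption: weights are the confidences normalised to sum to 1.
    """
    if len(counts) != len(confidences):
        raise ValueError("counts and confidences must have the same length")
    total = sum(confidences)
    if total <= 0:
        raise ValueError("at least one confidence must be positive")
    # Weighted sum with normalised weights.
    return sum(c * w for c, w in zip(counts, confidences)) / total


# Three images of the same trap taken after successive stirring actions.
estimate = fuse_counts([10, 14, 12], [0.2, 0.5, 0.3])
```

Images in which aphids are less occluded would receive higher confidence and therefore contribute more to the final count.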

Deep Learning for Precision Agriculture: Post-Spraying Evaluation and Deposition Estimation

Sep 24, 2024

Abstract: Precision spraying evaluation requires automation primarily in post-spraying imagery. In this paper we propose an eXplainable Artificial Intelligence (XAI) computer vision pipeline to evaluate a precision spraying system post-spraying without the need for traditional agricultural methods. The developed system can semantically segment potential targets such as lettuce, chickweed, and meadowgrass and correctly identify if targets have been sprayed. Furthermore, this pipeline evaluates using a domain-specific Weakly Supervised Deposition Estimation task, allowing for class-specific quantification of spray deposit weights in µL. Estimation of coverage rates of spray deposition in a class-wise manner allows for further understanding of effectiveness of precision spraying systems. Our study evaluates different Class Activation Mapping techniques, namely AblationCAM and ScoreCAM, to determine which is more effective and interpretable for these tasks. In the pipeline, inference-only feature fusion is used to allow for further interpretability and to enable the automation of precision spraying evaluation post-spray. Our findings indicate that a Fully Convolutional Network with an EfficientNet-B0 backbone and inference-only feature fusion achieves an average absolute difference in deposition values of 156.8 µL across three classes in our test set. The dataset curated in this paper is publicly available at https://github.com/Harry-Rogers/PSIE

Optimising robotic operation speed with edge computing over 5G networks: Insights from selective harvesting robots

Jul 01, 2024

Abstract: Selective harvesting by autonomous robots will be a critical enabling technology for future farming. Increases in inflation and shortages of skilled labour are driving factors that can help encourage user acceptability of robotic harvesting. For example, robotic strawberry harvesting requires real-time high-precision fruit localisation, 3D mapping and path planning for 3D cluster manipulation. Whilst industry and academia have developed multiple strawberry harvesting robots, none have yet achieved human-cost parity. Achieving this goal requires increased picking speed (perception, control and movement), accuracy and the development of low-cost robotic system designs. We propose the edge-server over 5G for Selective Harvesting (E5SH) system, which integrates a high-bandwidth, low-latency Fifth Generation (5G) mobile network into a crop harvesting robotic platform, and which we view as an enabler for future robotic harvesting systems. We also consider processing scale and speed in conjunction with system environmental and energy costs. A system architecture is presented and evaluated with quantitative results from a series of experiments that compare the performance of the system under different architecture choices, including image segmentation models, network infrastructure (5G vs WiFi) and messaging protocols such as Message Queuing Telemetry Transport (MQTT) and Transmission Control Protocol Robot Operating System (TCPROS). Our results demonstrate that the E5SH system delivers a step-change in peak processing performance, with a speedup of more than 18-fold over a stand-alone embedded computing Nvidia Jetson Xavier NX (NJXN) system.

Dual-band feature selection for maturity classification of specialty crops by hyperspectral imaging

May 17, 2024

Abstract: The maturity classification of specialty crops such as strawberries and tomatoes is an essential agricultural downstream activity for selective harvesting and quality control (QC) at production and packaging sites. Recent advancements in Deep Learning (DL) have produced encouraging results in color images for maturity classification applications. However, hyperspectral imaging (HSI) outperforms methods based on color vision. Multivariate analysis methods and Convolutional Neural Networks (CNN) deliver promising results; however, the large amount of input data and the associated preprocessing requirements hinder practical application. Conventionally, the reflectance intensity in a given electromagnetic spectrum is employed in estimating fruit maturity. We present a feature extraction method and empirically demonstrate that two pairs of values are convenient to compute yet distinctive features for maturity classification: the peak reflectance and the wavelength of the peak within 500-670 nm (pigment band), and the trough reflectance and its corresponding wavelength within 671-790 nm (chlorophyll band). The proposed feature selection method is beneficial because preprocessing, such as dimensionality reduction, is avoided before every prediction. The feature set is designed to capture these traits. The best SOTA methods, among 3D-CNN, 1D-CNN, and SVM, achieve at most 90.0% accuracy for strawberries and 92.0% for tomatoes on our dataset. Results show that the proposed method outperforms the SOTA, as it yields an accuracy above 98.0% in strawberry and 96.0% in tomato classification. A comparative analysis of the time efficiency of these methods is also conducted, which shows that the proposed method performs prediction at 13 Frames Per Second (FPS) compared to the maximum of 1.16 FPS attained by the full-spectrum SVM classifier.
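The four dual-band features described above can be read off a single reflectance spectrum with a few lines of code. The sketch below is illustrative only: the function name `dual_band_features`, the input layout (one reflectance value per sampled wavelength) and the toy spectrum are assumptions, while the band limits are those quoted in the abstract.

```python
def dual_band_features(wavelengths_nm, reflectance,
                       pigment_band=(500, 670),
                       chlorophyll_band=(671, 790)):
    """Return (peak reflectance, peak wavelength,
               trough reflectance, trough wavelength)."""

    def samples_in(band):
        low, high = band
        # Keep (wavelength, reflectance) pairs that fall inside the band.
        return [(w, r) for w, r in zip(wavelengths_nm, reflectance)
                if low <= w <= high]

    pigment = samples_in(pigment_band)
    chlorophyll = samples_in(chlorophyll_band)
    if not pigment or not chlorophyll:
        raise ValueError("spectrum does not cover both bands")

    # Highest reflectance in the pigment band and where it occurs.
    peak_w, peak_r = max(pigment, key=lambda pair: pair[1])
    # Lowest reflectance in the chlorophyll band and where it occurs.
    trough_w, trough_r = min(chlorophyll, key=lambda pair: pair[1])
    return peak_r, peak_w, trough_r, trough_w


# Toy spectrum with seven sampled wavelengths (values are made up).
wavelengths = [500, 550, 600, 650, 680, 700, 750]
spectrum = [0.10, 0.30, 0.50, 0.40, 0.20, 0.15, 0.60]
features = dual_band_features(wavelengths, spectrum)
```

The resulting four-value vector could then be passed to any lightweight classifier; which classifier the authors use is not stated in the abstract.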

Lincoln's Annotated Spatio-Temporal Strawberry Dataset (LAST-Straw)

Mar 01, 2024

Abstract: Automated phenotyping of plants for breeding and plant studies promises to provide quantitative metrics on plant traits at a previously unattainable observation frequency. Developers of tools for performing high-throughput phenotyping are, however, constrained by the availability of relevant datasets on which to perform validation. To this end, we present a spatio-temporal dataset of 3D point clouds of strawberry plants for two varieties, totalling 84 individual point clouds. We focus on the end use of such tools - the extraction of biologically relevant phenotypes - and demonstrate a phenotyping pipeline on the dataset. The pipeline comprises segmentation, skeletonisation and tracking steps, and we detail how each stage facilitates the extraction of different phenotypes or provision of data insights. We particularly note that assessment is focused on the validation of phenotypes extracted from the representations acquired at each step of the pipeline, rather than solely on assessing the representation itself. Therefore, where possible, we provide in silico ground truth baselines for the phenotypes extracted at each step and introduce a methodology for the quantitative assessment of skeletonisation and the length trait extracted from it. This dataset contributes to the corpus of freely available agricultural/horticultural spatio-temporal data for the development of next-generation phenotyping tools, increasing the number of plant varieties available for research in this field and providing a basis for genuine comparison of new phenotyping methodology.

Key Point-based Orientation Estimation of Strawberries for Robotic Fruit Picking

Oct 17, 2023

Abstract: Selective robotic harvesting is a promising technological solution to address labour shortages which are affecting modern agriculture in many parts of the world. For an accurate and efficient picking process, a robotic harvester requires the precise location and orientation of the fruit to effectively plan the trajectory of the end effector. The current methods for estimating fruit orientation either employ complete 3D information, which typically requires registration from multiple views, or rely on fully-supervised learning techniques, which require difficult-to-obtain manual annotation of the reference orientation. In this paper, we introduce a novel key-point-based fruit orientation estimation method allowing for the prediction of 3D orientation from 2D images directly. The proposed technique can work without full 3D orientation annotations but can also exploit such information for improved accuracy. We evaluate our work on two separate datasets of strawberry images obtained from real-world data collection scenarios. Our proposed method achieves state-of-the-art performance with an average error as low as 8°, improving predictions by ~30% compared to previous work [wagner2021efficient]. Furthermore, our method is suited for real-time robotic applications with fast inference times of ~30 ms.

Crop Row Switching for Vision-Based Navigation: A Comprehensive Approach for Efficient Crop Field Navigation

Sep 21, 2023

Abstract: Vision-based mobile robot navigation systems in arable fields are mostly limited to in-row navigation. The process of switching from one crop row to the next in such systems is often aided by GNSS sensors or multiple camera setups. This paper presents a novel vision-based crop row-switching algorithm that enables a mobile robot to navigate an entire field of arable crops using a single front-mounted camera. The proposed row-switching manoeuvre uses deep learning-based RGB image segmentation and depth data to detect the end of the crop row and the re-entry point to the next crop row, which are then used in a multi-state row-switching pipeline. Each state of this pipeline uses visual feedback or wheel odometry of the robot to successfully navigate towards the next crop row. The proposed crop row navigation pipeline was tested in a real sugar beet field containing crop rows with discontinuities, varying light levels, shadows and irregular headland surfaces. The robot could successfully exit from one crop row and re-enter the next crop row using the proposed pipeline, with absolute median errors averaging 19.25 cm and 6.77° for the linear and rotational steps of the proposed manoeuvre.
