Junfeng Gao

Lincoln Agri-Robotics, Lincoln Institute for Agri-Food Technology, University of Lincoln, Lincoln, UK, Lincoln Centre for Autonomous System, University of Lincoln, Lincoln, UK

Harnessing Large Vision and Language Models in Agriculture: A Review

Jul 29, 2024

Abstract:Large models can play important roles in many domains. Agriculture is another key factor affecting the lives of people around the world. It provides food, fabric, and coal for humanity. However, facing many challenges such as pests and diseases, soil degradation, global warming, and food security, how to steadily increase the yield in the agricultural sector is a problem that humans still need to solve. Large models can help farmers improve production efficiency and harvest by supporting a series of agricultural production tasks, such as detecting pests and diseases and assessing soil quality and seed quality. They can also help farmers make wise decisions through a variety of information, such as images, text, etc. Herein, we delve into the potential applications of large models in agriculture, from large language models (LLMs) and large vision models (LVMs) to large vision-language models (LVLMs). After gaining a deeper understanding of multimodal large language models (MLLMs), it can be recognized that problems such as agricultural image processing, agricultural question answering systems, and agricultural machine automation can all be solved by large models. Large models have great potential in the field of agriculture. We outline the current applications of agricultural large models, and aim to emphasize the importance of large models in the domain of agriculture. In the end, we envisage a future in which farmers use MLLMs to accomplish many tasks in agriculture, which can greatly improve agricultural production efficiency and yield.

Multispectral Fine-Grained Classification of Blackgrass in Wheat and Barley Crops

May 03, 2024

Abstract:As the burden of herbicide resistance grows and the environmental repercussions of excessive herbicide use become clear, new ways of managing weed populations are needed. This is particularly true for cereal crops, like wheat and barley, that are staple food crops and occupy a globally significant portion of agricultural land. Even small improvements in weed management practices across these major food crops worldwide would yield considerable benefits for both the environment and global food security. Blackgrass is a major grass weed which causes particular problems in cereal crops in north-west Europe, a major cereal production area, because it has high levels of herbicide resistance and is well adapted to agronomic practice in this region. With the use of machine vision and multispectral imaging, we investigate the effectiveness of state-of-the-art methods to identify blackgrass in wheat and barley crops. As part of this work, we provide a large dataset with which we evaluate several key aspects of blackgrass weed recognition. Firstly, we determine the performance of different CNN and transformer-based architectures on images from unseen fields. Secondly, we demonstrate the role that different spectral bands have on the performance of weed classification. Lastly, we evaluate the role of dataset size in classification performance for each of the models trialled. We find that even with a fairly modest quantity of training data an accuracy of almost 90% can be achieved on images from unseen fields.

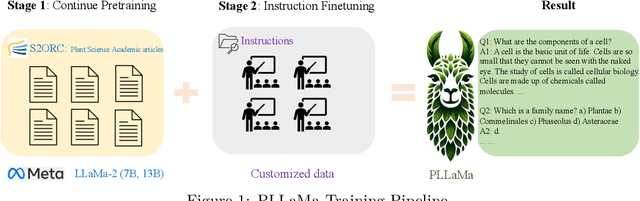

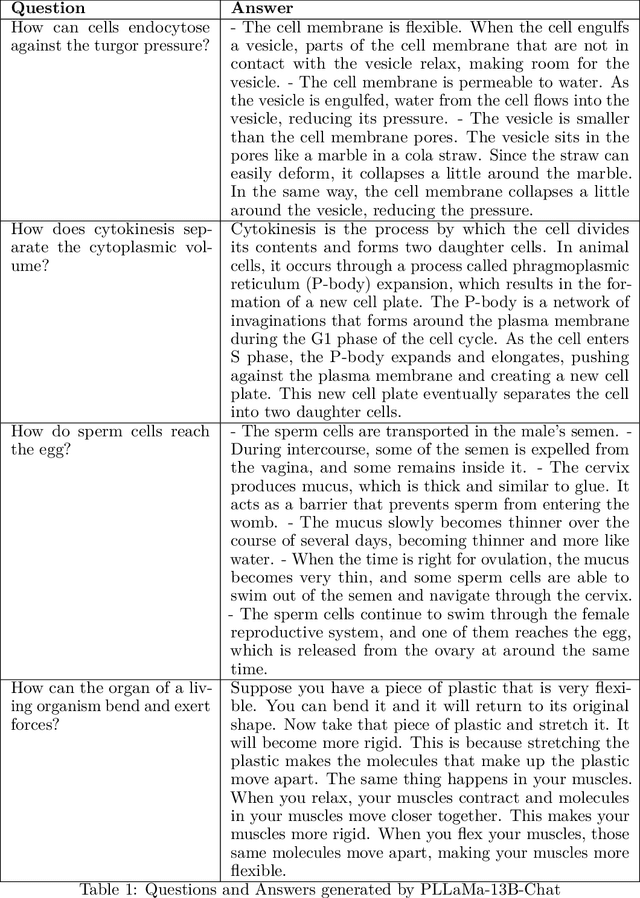

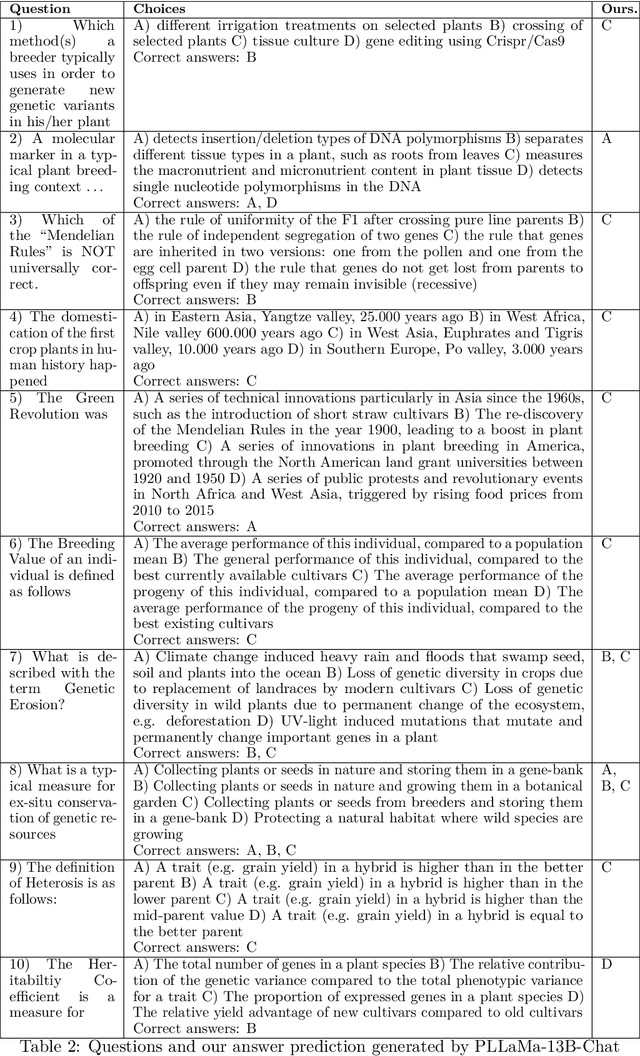

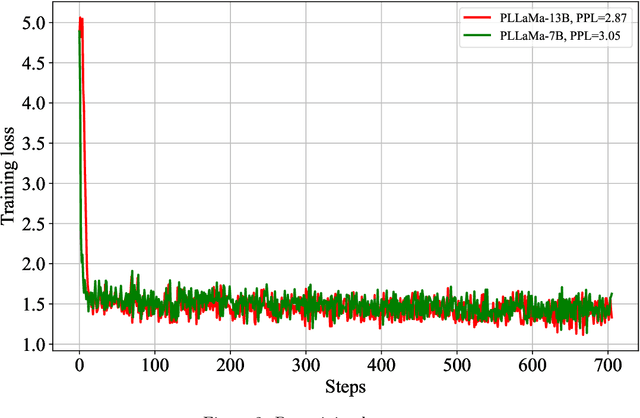

PLLaMa: An Open-source Large Language Model for Plant Science

Jan 03, 2024

Abstract:Large Language Models (LLMs) have exhibited remarkable capabilities in understanding and interacting with natural language across various sectors. However, their effectiveness is limited in specialized areas requiring high accuracy, such as plant science, due to a lack of specific expertise in these fields. This paper introduces PLLaMa, an open-source language model that evolved from LLaMa-2. It's enhanced with a comprehensive database, comprising more than 1.5 million scholarly articles in plant science. This development significantly enriches PLLaMa with extensive knowledge and proficiency in plant and agricultural sciences. Our initial tests, involving specific datasets related to plants and agriculture, show that PLLaMa substantially improves its understanding of plant science-related topics. Moreover, we have formed an international panel of professionals, including plant scientists, agricultural engineers, and plant breeders. This team plays a crucial role in verifying the accuracy of PLLaMa's responses to various academic inquiries, ensuring its effective and reliable application in the field. To support further research and development, we have made the model's checkpoints and source code accessible to the scientific community. These resources are available for download at https://github.com/Xianjun-Yang/PLLaMa.

Crop Row Switching for Vision-Based Navigation: A Comprehensive Approach for Efficient Crop Field Navigation

Sep 21, 2023

Abstract:Vision-based mobile robot navigation systems in arable fields are mostly limited to in-row navigation. The process of switching from one crop row to the next in such systems is often aided by GNSS sensors or multiple camera setups. This paper presents a novel vision-based crop row-switching algorithm that enables a mobile robot to navigate an entire field of arable crops using a single front-mounted camera. The proposed row-switching manoeuvre uses deep learning-based RGB image segmentation and depth data to detect the end of the crop row and the re-entry point to the next crop row, which are then used in a multi-state row switching pipeline. Each state of this pipeline uses visual feedback or wheel odometry of the robot to successfully navigate towards the next crop row. The proposed crop row navigation pipeline was tested in a real sugar beet field containing crop rows with discontinuities, varying light levels, shadows and irregular headland surfaces. The robot could successfully exit from one crop row and re-enter the next crop row using the proposed pipeline with absolute median errors averaging at 19.25 cm and 6.77° for linear and rotational steps of the proposed manoeuvre.

Advancing Early Detection of Virus Yellows: Developing a Hybrid Convolutional Neural Network for Automatic Aphid Counting in Sugar Beet Fields

Aug 09, 2023

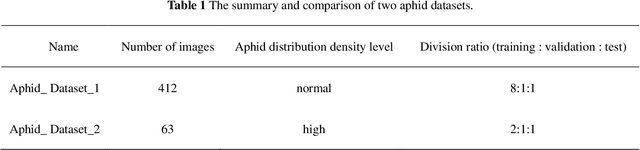

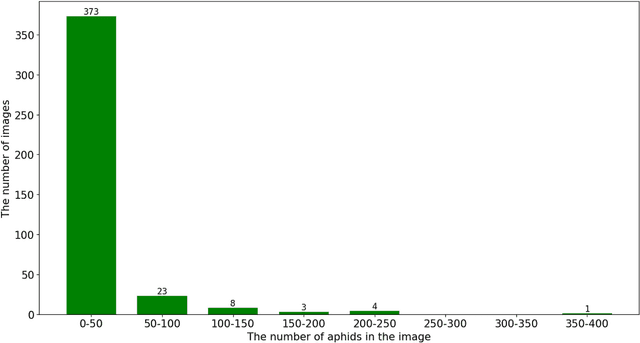

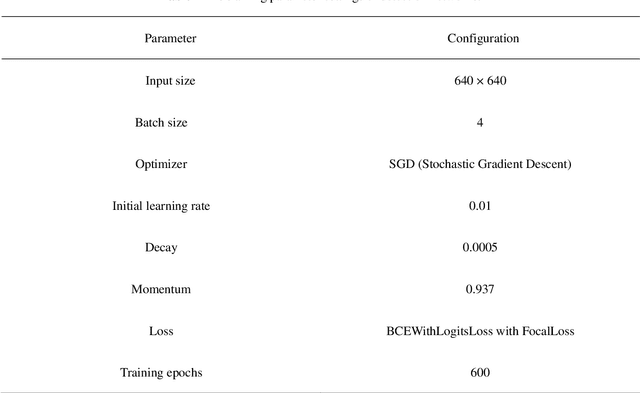

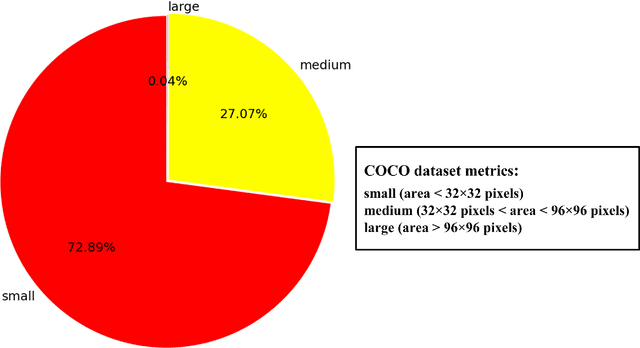

Abstract:Aphids are efficient vectors of virus yellows in sugar beet fields. Timely monitoring and control of their populations are thus critical to prevent the large-scale outbreak of virus yellows. However, the manual counting of aphids, which is the most common practice, is labor-intensive and time-consuming. Additionally, two of the biggest challenges in aphid counting are that aphids are small objects and that their density distributions vary across different areas of the field. To address these challenges, we proposed a hybrid automatic aphid counting network architecture which integrates the detection network and the density map estimation network. When the distribution density of aphids is low, it utilizes an improved Yolov5 to count aphids. Conversely, when the distribution density of aphids is high, it switches to CSRNet to count aphids. To the best of our knowledge, this is the first framework integrating the detection network and the density map estimation network for counting tasks. Comparison experiments verified that our proposed approach outperforms all other methods in counting aphids. It achieved the lowest MAE and RMSE values for both the standard and high-density aphid datasets: 2.93 and 4.01 (standard), and 34.19 and 38.66 (high-density), respectively. Moreover, the AP of the improved Yolov5 is 5% higher than that of the original Yolov5. Especially for extremely small aphids and densely distributed aphids, the detection performance of the improved Yolov5 is significantly better than the original Yolov5. This work provides an effective early warning for the virus yellows risk caused by aphids in sugar beet fields, offering protection for sugar beet growth and ensuring sugar beet yield. The datasets and project code are released at: https://github.com/JunfengGaolab/Counting-Aphids.
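
The switching idea and the two reported error metrics can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract does not say how density is judged, so the sketch assumes a preliminary detector count is compared against a hypothetical `switch_threshold`, and `detect_count` and `density_count` are hypothetical stand-ins for the improved Yolov5 and CSRNet models.

```python
import math
from typing import Any, Callable, Sequence


def hybrid_count(
    image: Any,
    detect_count: Callable[[Any], int],      # hypothetical stand-in for a detector
    density_count: Callable[[Any], float],   # hypothetical stand-in for a density-map model
    switch_threshold: int = 50,              # assumed value, not taken from the paper
) -> float:
    """Use the detector count for sparse images, the density-map count otherwise."""
    detected = detect_count(image)
    if detected <= switch_threshold:
        return float(detected)
    return float(density_count(image))


def mae(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Mean absolute error between predicted and ground-truth counts."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def rmse(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Root mean squared error between predicted and ground-truth counts."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


# Toy stand-ins so the sketch runs without any trained model.
def fake_detector(image: dict) -> int:
    return image["boxes"]


def fake_density_model(image: dict) -> float:
    return image["density_sum"]


sparse_image = {"boxes": 10, "density_sum": 12.4}
dense_image = {"boxes": 80, "density_sum": 131.5}
sparse_result = hybrid_count(sparse_image, fake_detector, fake_density_model)
dense_result = hybrid_count(dense_image, fake_detector, fake_density_model)
```

With these toy inputs the sparse image is counted by the detector stand-in and the dense image by the density-map stand-in. MAE weights all errors equally, while RMSE penalizes large miscounts more heavily, which is one reason both are commonly reported together for counting tasks.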

Leaving the Lines Behind: Vision-Based Crop Row Exit for Agricultural Robot Navigation

Jun 09, 2023

Abstract:Usage of purely vision based solutions for row switching is not well explored in existing vision based crop row navigation frameworks. This method only uses RGB images for local feature matching based visual feedback to exit the crop row. Depth images were used at the crop row end to estimate the navigation distance within the headland. The algorithm was tested on diverse headland areas with soil and vegetation. The proposed method could reach the end of the crop row and then navigate into the headland, completely leaving behind the crop row, with an error margin of 50 cm.

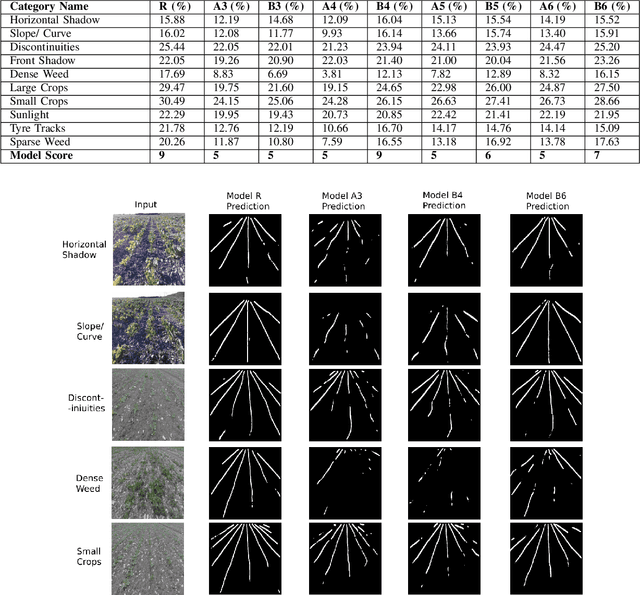

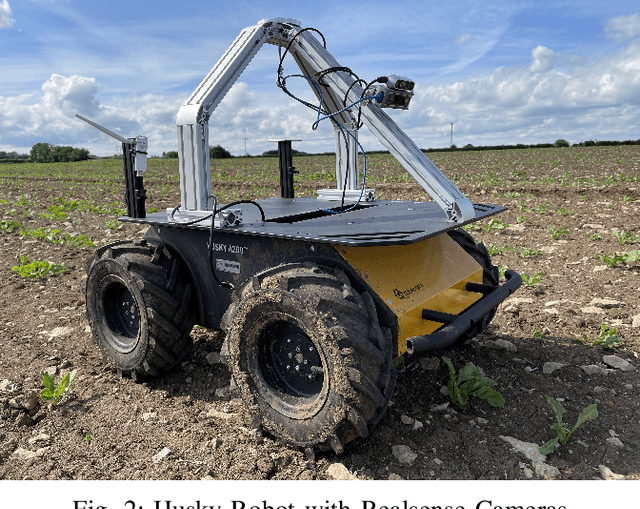

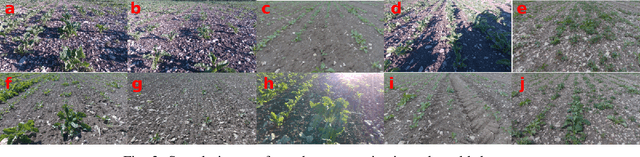

Vision based Crop Row Navigation under Varying Field Conditions in Arable Fields

Sep 28, 2022

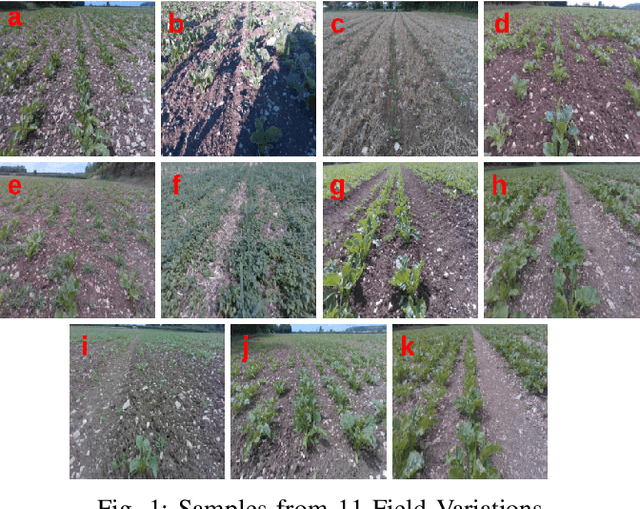

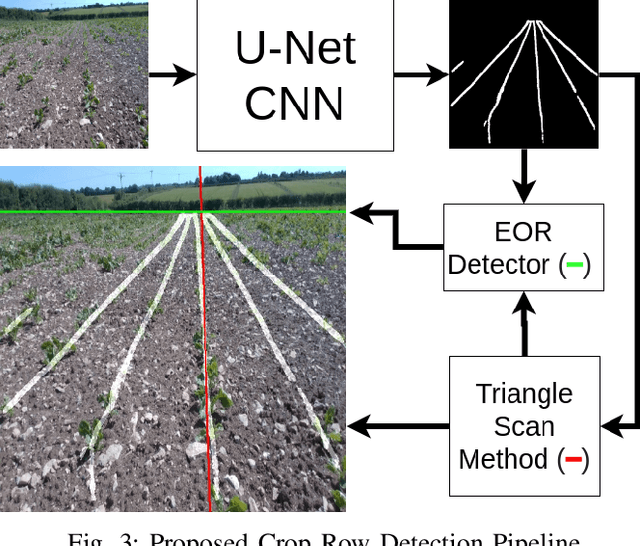

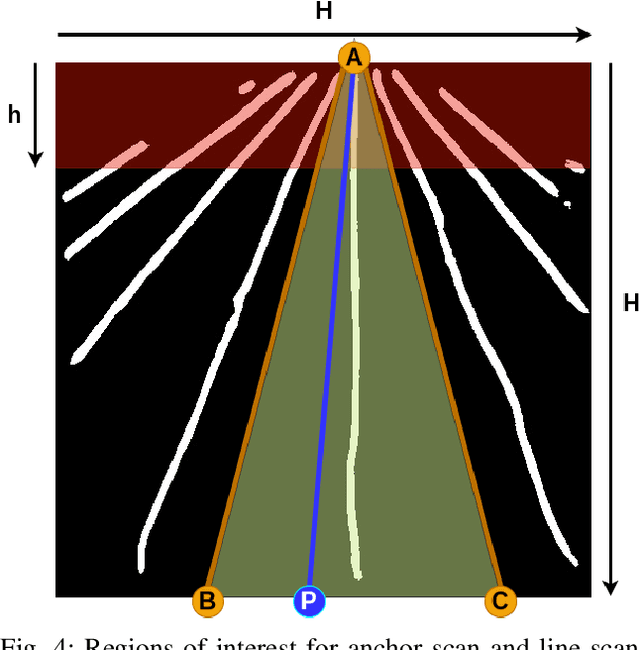

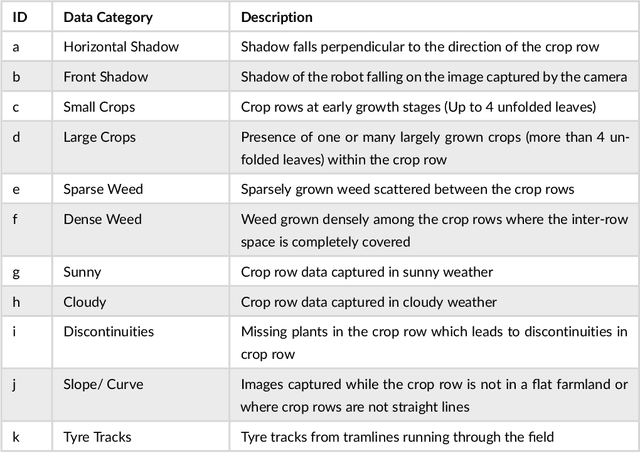

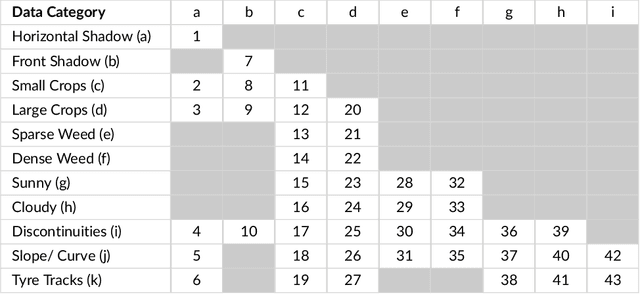

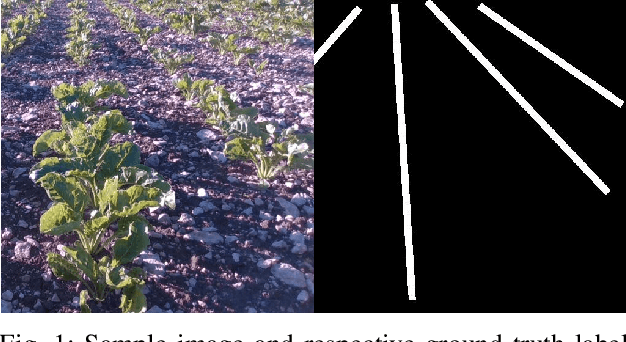

Abstract:Accurate crop row detection is often challenged by the varying field conditions present in real-world arable fields. Traditional colour based segmentation is unable to cater for all such variations. The lack of comprehensive datasets in agricultural environments limits the researchers from developing robust segmentation models to detect crop rows. We present a dataset for crop row detection with 11 field variations from Sugar Beet and Maize crops. We also present a novel crop row detection algorithm for visual servoing in crop row fields. Our algorithm can detect crop rows against varying field conditions such as curved crop rows, weed presence, discontinuities, growth stages, tramlines, shadows and light levels. Our method only uses RGB images from a front-mounted camera on a Husky robot to predict crop rows. Our method outperformed the classic colour based crop row detection baseline. Dense weed presence within inter-row space and discontinuities in crop rows were the most challenging field conditions for our crop row detection algorithm. Our method can detect the end of the crop row and navigate the robot towards the headland area when it reaches the end of the crop row.

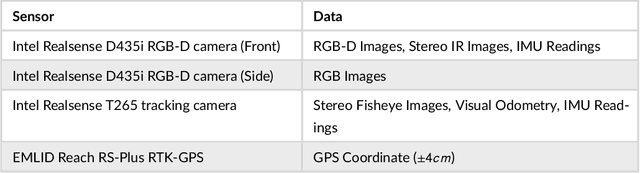

Deep learning-based Crop Row Following for Infield Navigation of Agri-Robots

Sep 09, 2022

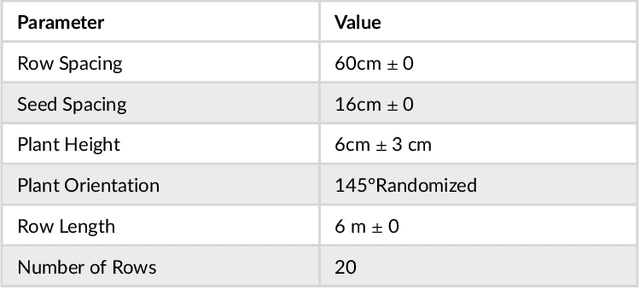

Abstract:Autonomous navigation in agricultural environments is often challenged by varying field conditions that may arise in arable fields. The state-of-the-art solutions for autonomous navigation in these agricultural environments will require expensive hardware such as RTK-GPS. This paper presents a robust crop row detection algorithm that can withstand those variations while detecting crop rows for visual servoing. A dataset of sugar beet images was created with 43 combinations of 11 field variations found in arable fields. The novel crop row detection algorithm is tested both for the crop row detection performance and also the capability of visual servoing along a crop row. The algorithm only uses RGB images as input and a convolutional neural network was used to predict crop row masks. Our algorithm outperformed the baseline method which uses colour-based segmentation for all the combinations of field variations. We use a combined performance indicator that accounts for the angular and displacement errors of the crop row detection. Our algorithm exhibited the worst performance during the early growth stages of the crop.

Towards Infield Navigation: leveraging simulated data for crop row detection

Apr 04, 2022

Abstract:Agricultural datasets for crop row detection are often bound by their limited number of images. This restricts researchers from developing deep learning based models for precision agricultural tasks involving crop row detection. We suggest the utilization of small real-world datasets along with additional data generated by simulations to yield similar crop row detection performance as that of a model trained with a large real-world dataset. Our method could reach the performance of a deep learning based crop row detection model trained with real-world data by using 60% less labelled real-world data. Our model performed well against field variations such as shadows, sunlight and growth stages. We introduce an automated pipeline to generate labelled images for crop row detection in the simulation domain. An extensive comparison is done to analyze the contribution of simulated data towards reaching robust crop row detection in various real-world field scenarios.

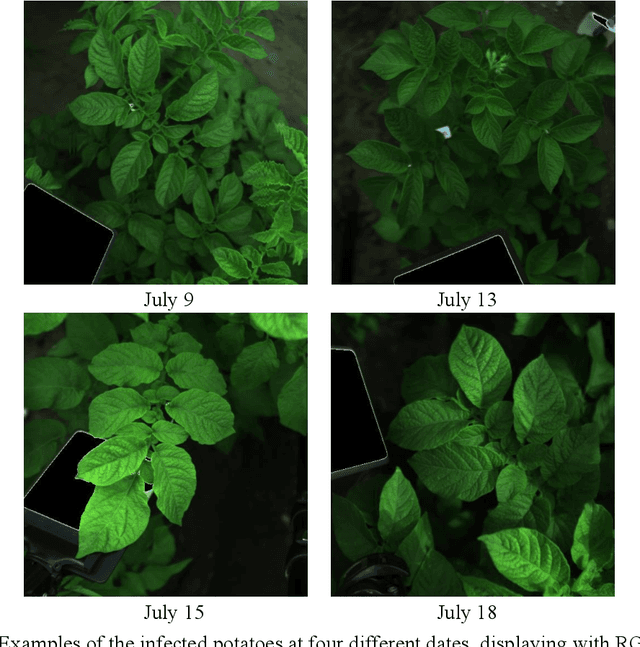

In-field early disease recognition of potato late blight based on deep learning and proximal hyperspectral imaging

Nov 23, 2021

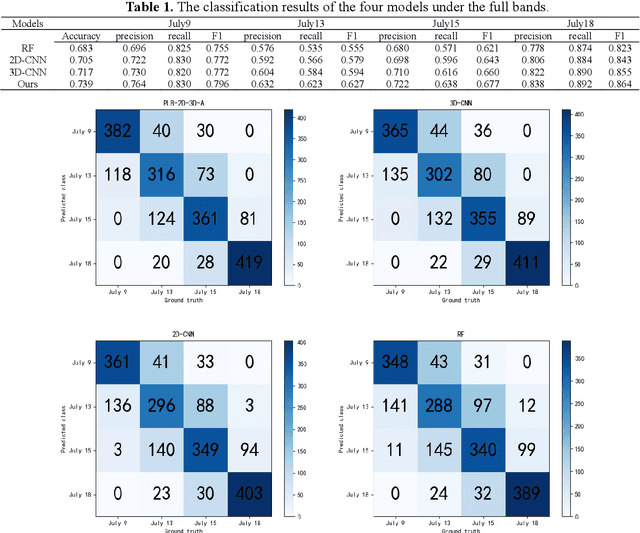

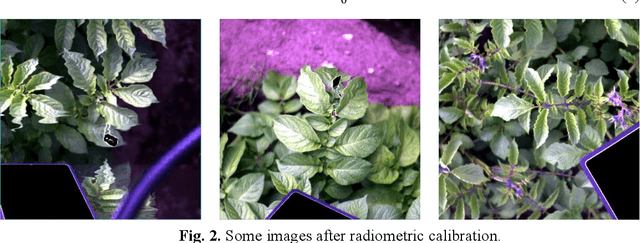

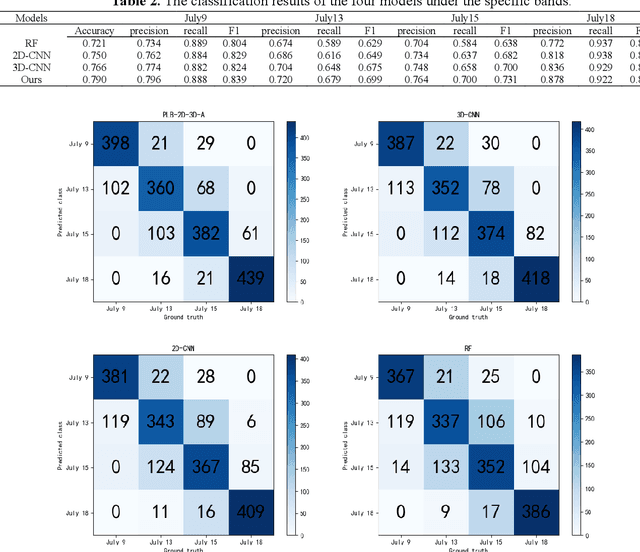

Abstract:Effective early detection of potato late blight (PLB) is an essential aspect of potato cultivation. However, it is a challenge to detect late blight at an early stage in fields with conventional imaging approaches because of the lack of visual cues displayed at the canopy level. Hyperspectral imaging can capture spectral signals from a wide range of wavelengths, including those outside the visible range. In this context, we propose a deep learning classification architecture for hyperspectral images by combining 2D convolutional neural network (2D-CNN) and 3D-CNN with deep cooperative attention networks (PLB-2D-3D-A). First, 2D-CNN and 3D-CNN are used to extract rich spectral space features, and then the attention mechanism AttentionBlock and SE-ResNet are used to emphasize the salient features in the feature maps and increase the generalization ability of the model. The dataset is built with 15,360 images (64x64x204), cropped from 240 raw images captured in an experimental field with over 20 potato genotypes. The accuracy in the test dataset of 2000 images reached 0.739 in the full band and 0.790 in the specific bands (492nm, 519nm, 560nm, 592nm, 717nm and 765nm). This study shows an encouraging result for early detection of PLB with deep learning and proximal hyperspectral imaging.
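
The dataset figures imply 15,360 / 240 = 64 patches per raw image. The Python sketch below shows one tiling that would produce that count. It rests on an assumption not stated in the abstract: that each raw cube is 512 x 512 pixels and is cut into non-overlapping 64 x 64 tiles, with all 204 bands kept per tile. The function name `patch_origins` is hypothetical.

```python
from typing import List, Tuple


def patch_origins(height: int, width: int, patch: int) -> List[Tuple[int, int]]:
    """Top-left (row, col) corners of non-overlapping square patches."""
    return [
        (row, col)
        for row in range(0, height - patch + 1, patch)
        for col in range(0, width - patch + 1, patch)
    ]


RAW_IMAGES = 240        # from the abstract
PATCH_SIZE = 64         # from the abstract (64x64x204)
ASSUMED_RAW_SIDE = 512  # assumption: raw spatial size is not given in the abstract

origins = patch_origins(ASSUMED_RAW_SIDE, ASSUMED_RAW_SIDE, PATCH_SIZE)
patches_per_image = len(origins)
total_patches = RAW_IMAGES * patches_per_image
```

Under this assumed raw size the tiling gives an 8 x 8 grid per image, which matches the reported dataset size. Other crop schemes (overlapping windows, or larger raw images with discarded regions) could reach the same total, so this is only one consistent reading.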
