Deep learning-based Crop Row Following for Infield Navigation of Agri-Robots

Paper and Code

Sep 09, 2022

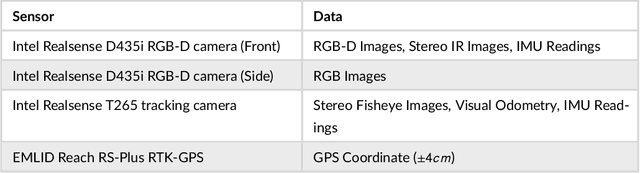

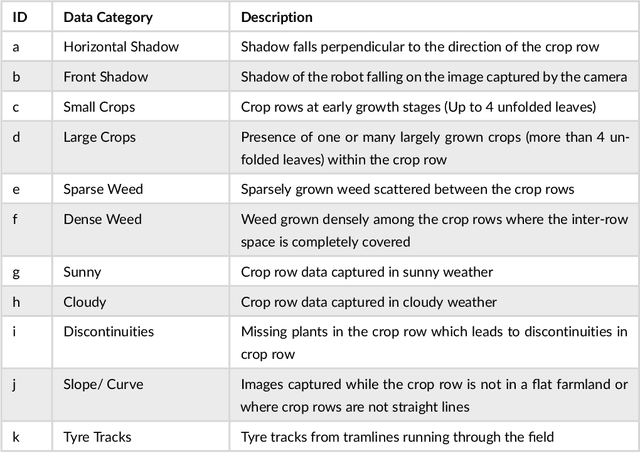

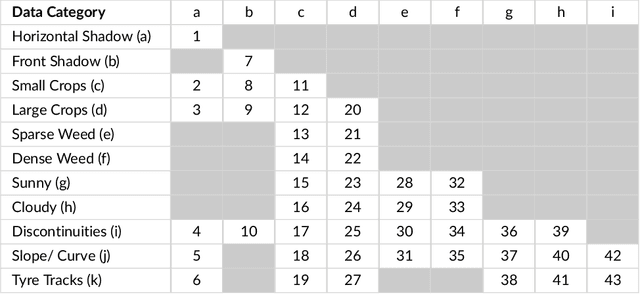

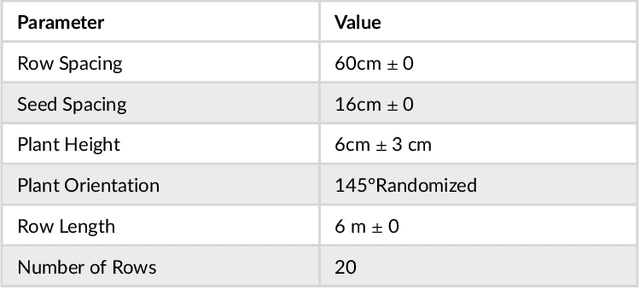

Autonomous navigation in agricultural environments is often challenged by the varying field conditions found in arable fields. State-of-the-art solutions for autonomous navigation in these environments require expensive hardware such as RTK-GPS. This paper presents a robust crop row detection algorithm that withstands those variations while detecting crop rows for visual servoing. A dataset of sugar beet images was created covering 43 combinations of 11 field variations found in arable fields. The novel crop row detection algorithm is tested both for its crop row detection performance and for its capability of visual servoing along a crop row. The algorithm uses only RGB images as input, and a convolutional neural network predicts crop row masks. Our algorithm outperformed the baseline method, which uses colour-based segmentation, for all combinations of field variations. We use a combined performance indicator that accounts for the angular and displacement errors of the crop row detection. Our algorithm exhibited its worst performance during the early growth stages of the crop.
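
To make the colour-based baseline and the two error terms more concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: the abstract does not say which colour index the baseline uses, so the excess green index (ExG = 2G - R - B) is an assumption, as are the threshold value, the least-squares line fit, and all function names (`excess_green_mask`, `fit_row_line`, `line_errors`). The way the two errors are merged into the combined performance indicator is not given in the abstract, so the sketch reports them separately.

```python
import math


def excess_green_mask(image, threshold=20):
    """Colour-based segmentation using the excess green index (assumed here).

    image: list of rows, each row a list of (r, g, b) tuples with 0-255 values.
    Returns a boolean mask of the same shape, True where ExG exceeds threshold.
    """
    return [[(2 * g - r - b) > threshold for (r, g, b) in row] for row in image]


def fit_row_line(mask):
    """Least-squares fit of x = slope * y + intercept through the mask pixels.

    Fitting x as a function of y keeps near-vertical crop rows well conditioned.
    """
    points = [(x, y) for y, row in enumerate(mask)
              for x, on in enumerate(row) if on]
    if len(points) < 2:
        raise ValueError("not enough crop pixels to fit a line")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_y = sum((y - mean_y) ** 2 for _, y in points)
    if var_y == 0:
        raise ValueError("crop pixels lie on a single image row")
    slope = sum((y - mean_y) * (x - mean_x) for x, y in points) / var_y
    intercept = mean_x - slope * mean_y
    return slope, intercept


def line_errors(predicted, truth, y_ref):
    """Angular error (degrees) and displacement error (pixels at row y_ref)."""
    (p_slope, p_icpt), (t_slope, t_icpt) = predicted, truth
    angular = abs(math.degrees(math.atan(p_slope))
                  - math.degrees(math.atan(t_slope)))
    displacement = abs((p_slope * y_ref + p_icpt) - (t_slope * y_ref + t_icpt))
    return angular, displacement


# Tiny synthetic image: brown soil with one vertical green row in column 2.
SOIL, CROP = (120, 90, 60), (40, 160, 40)
demo_image = [[CROP if x == 2 else SOIL for x in range(5)] for _ in range(5)]

demo_mask = excess_green_mask(demo_image)
demo_line = fit_row_line(demo_mask)
demo_errors = line_errors(demo_line, truth=(0.0, 2.0), y_ref=4)
```

In a learned pipeline such as the one described above, the predicted crop row mask from the convolutional neural network would take the place of `demo_mask`, and the same line fit and error terms could then be applied to it.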
