Justin Le Louëdec

Beacon2Science: Enhancing STEREO/HI beacon data with machine learning for efficient CME tracking

Mar 19, 2025

Abstract:Observing and forecasting coronal mass ejections (CMEs) in real time is crucial because the strong geomagnetic storms they can generate may damage, for example, satellites and electrical devices. With its near-real-time availability, STEREO/HI beacon data is the perfect candidate for early forecasting of CMEs. However, previous work concluded that CME arrival prediction based on beacon data could not achieve the same accuracy as with high-resolution science data due to data gaps and lower quality. We present our novel pipeline entitled "Beacon2Science", bridging the gap between beacon and science data to improve CME tracking. Through this pipeline, we first enhance the quality (signal-to-noise ratio and spatial resolution) of beacon data. We then increase the time resolution of enhanced beacon images through learned interpolation to match science data's 40-minute resolution. We maximize information coherence between consecutive frames with adapted model architecture and loss functions through the different steps. The improved beacon images are comparable to science data, showing better CME visibility than the original beacon data. Furthermore, we compare CMEs tracked in beacon, enhanced beacon, and science images. The tracks extracted from enhanced beacon data are closer to those from science images, with a mean average error of $\sim 0.5 ^\circ$ of elongation compared to $1^\circ$ with original beacon data. The work presented in this paper paves the way for its application to forthcoming missions such as Vigil and PUNCH.
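The track comparison reported in this abstract can be illustrated with a small sketch. It assumes that the quoted error is a mean absolute difference in elongation between two time-elongation tracks, and that the coarser track is linearly interpolated onto the timestamps of the reference track. Both are assumptions made for illustration. The function names `interpolate_track` and `mean_absolute_difference` are hypothetical and not taken from the paper.

```python
from bisect import bisect_left


def interpolate_track(times, elongations, query_times):
    """Linearly interpolate a track (elongation in degrees) at query_times.

    `times` must be strictly increasing. Queries outside the covered time
    range yield None instead of being extrapolated.
    """
    result = []
    for t in query_times:
        if t < times[0] or t > times[-1]:
            result.append(None)
            continue
        i = bisect_left(times, t)  # first index with times[i] >= t
        if times[i] == t:
            result.append(elongations[i])
            continue
        t0, t1 = times[i - 1], times[i]
        e0, e1 = elongations[i - 1], elongations[i]
        weight = (t - t0) / (t1 - t0)
        result.append(e0 + weight * (e1 - e0))
    return result


def mean_absolute_difference(reference, candidate):
    """Mean absolute elongation difference, ignoring points without a match."""
    pairs = [(r, c) for r, c in zip(reference, candidate) if c is not None]
    if not pairs:
        raise ValueError("tracks do not overlap in time")
    return sum(abs(r - c) for r, c in pairs) / len(pairs)


# Made-up numbers: a coarse track sampled every 2 hours, compared with a
# reference track sampled every hour.
coarse_times = [0.0, 2.0, 4.0]
coarse_elongation = [4.0, 6.0, 8.0]
reference_times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
reference_elongation = [4.5, 5.0, 6.0, 7.5, 8.0, 9.0]

resampled = interpolate_track(coarse_times, coarse_elongation, reference_times)
error_deg = mean_absolute_difference(reference_elongation, resampled)
```

The last reference point falls outside the coarse track's time range, so it is left out of the average rather than extrapolated.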

Key Point-based Orientation Estimation of Strawberries for Robotic Fruit Picking

Oct 17, 2023

Abstract:Selective robotic harvesting is a promising technological solution to address labour shortages which are affecting modern agriculture in many parts of the world. For an accurate and efficient picking process, a robotic harvester requires the precise location and orientation of the fruit to effectively plan the trajectory of the end effector. The current methods for estimating fruit orientation employ either complete 3D information which typically requires registration from multiple views or rely on fully-supervised learning techniques, which require difficult-to-obtain manual annotation of the reference orientation. In this paper, we introduce a novel key-point-based fruit orientation estimation method allowing for the prediction of 3D orientation from 2D images directly. The proposed technique can work without full 3D orientation annotations but can also exploit such information for improved accuracy. We evaluate our work on two separate datasets of strawberry images obtained from real-world data collection scenarios. Our proposed method achieves state-of-the-art performance with an average error as low as $8^{\circ}$, improving predictions by $\sim30\%$ compared to previous work presented in \cite{wagner2021efficient}. Furthermore, our method is suited for real-time robotic applications with fast inference times of $\sim30$ ms.
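One common way to score an orientation estimate in degrees is the angle between the predicted and the reference orientation vectors. The sketch below assumes that reading of the error and represents orientations as 3D vectors. The abstract does not spell out the metric, and the function name `angular_error_deg` is hypothetical.

```python
import math


def angular_error_deg(predicted, reference):
    """Angle in degrees between two 3D orientation vectors."""
    dot = sum(p * r for p, r in zip(predicted, reference))
    norm_p = math.sqrt(sum(p * p for p in predicted))
    norm_r = math.sqrt(sum(r * r for r in reference))
    if norm_p == 0.0 or norm_r == 0.0:
        raise ValueError("orientation vectors must be non-zero")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cosine = max(-1.0, min(1.0, dot / (norm_p * norm_r)))
    return math.degrees(math.acos(cosine))


# Made-up predictions and references, for illustration only.
predictions = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
references = [(0.0, 0.0, 2.0), (0.0, 1.0, 0.0)]
errors = [angular_error_deg(p, r) for p, r in zip(predictions, references)]
average_error = sum(errors) / len(errors)
```

The vectors need not be normalised, since the function divides by both norms.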

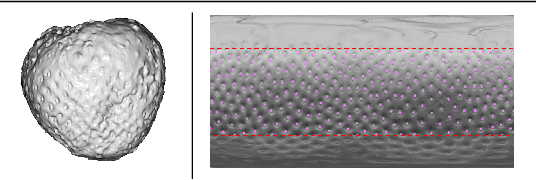

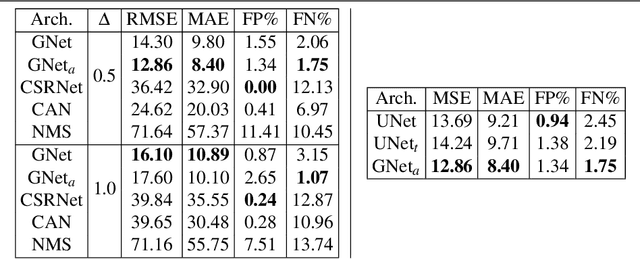

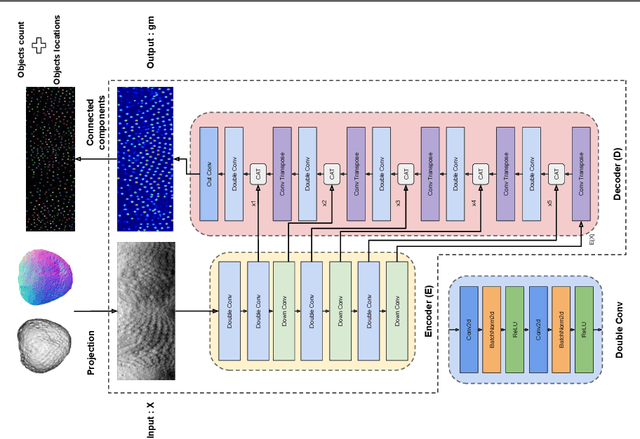

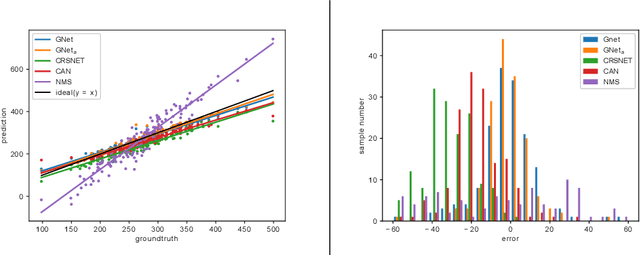

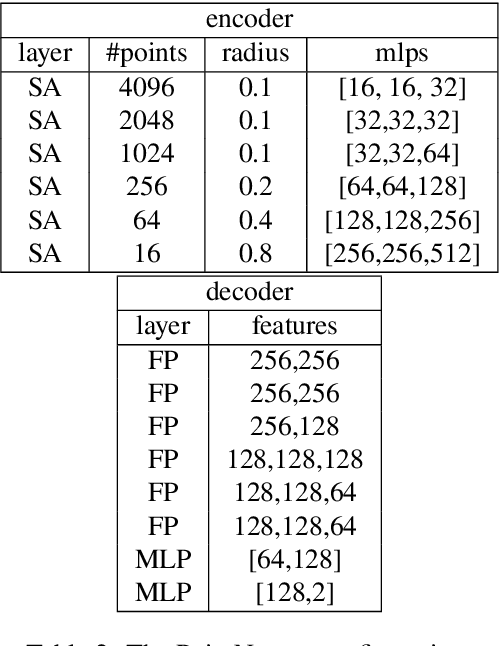

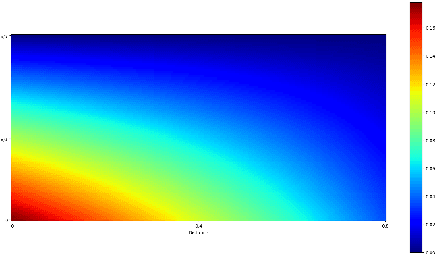

Gaussian map predictions for 3D surface feature localisation and counting

Dec 07, 2021

Abstract:In this paper, we propose to employ a Gaussian map representation to estimate precise location and count of 3D surface features, addressing the limitations of state-of-the-art methods based on density estimation which struggle in presence of local disturbances. Gaussian maps indicate probable object location and can be generated directly from keypoint annotations avoiding laborious and costly per-pixel annotations. We apply this method to the 3D spheroidal class of objects which can be projected into 2D shape representation enabling efficient processing by a neural network GNet, an improved UNet architecture, which generates the likely locations of surface features and their precise count. We demonstrate a practical use of this technique for counting strawberry achenes which is used as a fruit quality measure in phenotyping applications. The results of training the proposed system on several hundreds of 3D scans of strawberries from a publicly available dataset demonstrate the accuracy and precision of the system which outperforms the state-of-the-art density-based methods for this application.
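A minimal sketch of how a Gaussian map could be built from keypoint annotations, and how a count could be read back from it, is given below. The kernel width `sigma`, the use of a per-pixel maximum to combine overlapping Gaussians, and the peak-counting rule are all assumptions made for illustration. They are not the paper's GNet, which predicts such maps with a neural network.

```python
import math


def gaussian_map(height, width, keypoints, sigma=2.0):
    """Build a height x width map with a Gaussian bump at each (row, col) keypoint.

    Overlapping bumps are combined with a per-pixel maximum, so every
    annotated keypoint keeps a peak value of exactly 1.0.
    """
    heat = [[0.0] * width for _ in range(height)]
    for key_row, key_col in keypoints:
        for r in range(height):
            for c in range(width):
                dist_sq = (r - key_row) ** 2 + (c - key_col) ** 2
                value = math.exp(-dist_sq / (2.0 * sigma ** 2))
                if value > heat[r][c]:
                    heat[r][c] = value
    return heat


def count_peaks(heat, threshold=0.5):
    """Count pixels above threshold that are strictly higher than all 8 neighbours."""
    height, width = len(heat), len(heat[0])
    count = 0
    for r in range(height):
        for c in range(width):
            value = heat[r][c]
            if value < threshold:
                continue
            neighbours = [
                heat[rr][cc]
                for rr in range(max(0, r - 1), min(height, r + 2))
                for cc in range(max(0, c - 1), min(width, c + 2))
                if (rr, cc) != (r, c)
            ]
            if all(value > n for n in neighbours):
                count += 1
    return count


# Two well-separated, made-up keypoints on a small grid.
target = gaussian_map(10, 10, [(2, 2), (7, 6)], sigma=1.0)
n_features = count_peaks(target)
```

Because the peak test is strict, two keypoints annotated on adjacent pixels would form a plateau and go uncounted. A real pipeline would need a more careful peak extraction step.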

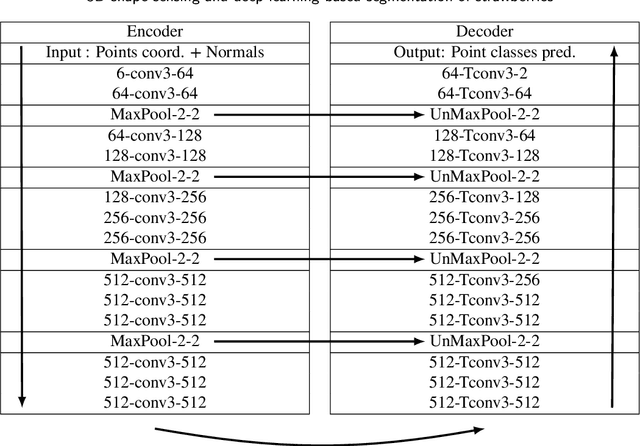

3D shape sensing and deep learning-based segmentation of strawberries

Nov 26, 2021

Abstract:Automation and robotisation of the agricultural sector are seen as a viable solution to socio-economic challenges faced by this industry. This technology often relies on intelligent perception systems providing information about crops, plants and the entire environment. The challenges faced by traditional 2D vision systems can be addressed by modern 3D vision systems which enable straightforward localisation of objects, size and shape estimation, or handling of occlusions. So far, the use of 3D sensing was mainly limited to indoor or structured environments. In this paper, we evaluate modern sensing technologies including stereo and time-of-flight cameras for 3D perception of shape in agriculture and study their usability for segmenting out soft fruit from background based on their shape. To that end, we propose a novel 3D deep neural network which exploits the organised nature of information originating from the camera-based 3D sensors. We demonstrate the superior performance and efficiency of the proposed architecture compared to the state-of-the-art 3D networks. Through a simulated study, we also show the potential of the 3D sensing paradigm for object segmentation in agriculture and provide insights and analysis of what shape quality is needed and expected for further analysis of crops. The results of this work should encourage researchers and companies to develop more accurate and robust 3D sensing technologies to assure their wider adoption in practical agricultural applications.

* 14 pages, 13 figures, accepted to Computers and Electronics in Agriculture
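The "organised" structure mentioned in this abstract can be pictured as a point cloud that keeps the row and column layout of the depth image it came from, so that image-grid neighbours are also neighbours in the cloud. The sketch below back-projects a depth image with a pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy` and the function name `depth_to_organised_cloud` are illustrative assumptions, and the network architecture proposed in the paper is not reproduced here.

```python
def depth_to_organised_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into an organised point cloud.

    `depth` is a list of rows of depth values in metres. The result has the
    same row/column layout, holding an (x, y, z) tuple per pixel, or None
    where the depth reading is missing or non-positive.
    """
    cloud = []
    for v, row in enumerate(depth):
        cloud_row = []
        for u, z in enumerate(row):
            if z is None or z <= 0.0:
                cloud_row.append(None)  # keep the grid slot, mark it invalid
            else:
                x = (u - cx) * z / fx
                y = (v - cy) * z / fy
                cloud_row.append((x, y, z))
        cloud.append(cloud_row)
    return cloud


# A tiny made-up 2 x 2 depth image with one invalid reading.
depth_image = [[1.0, 2.0], [0.0, 4.0]]
cloud = depth_to_organised_cloud(depth_image, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Keeping invalid pixels as `None` instead of dropping them preserves the grid, which is what allows 2D-style neighbourhood operations on 3D data.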
