Piyush Kumar

Partition of Unity Physics-Informed Neural Networks (POU-PINNs): An Unsupervised Framework for Physics-Informed Domain Decomposition and Mixtures of Experts

Dec 07, 2024

Abstract: Physics-informed neural networks (PINNs) commonly address ill-posed inverse problems by uncovering unknown physics. This study presents a novel unsupervised learning framework that identifies spatial subdomains with specific governing physics. It uses partition of unity networks (POUs) to divide the space into subdomains, assigning unique nonlinear model parameters to each, which are integrated into the physics model. A key feature of this method is a physics residual-based loss function that detects variations in physical properties without requiring labeled data. This approach enables the discovery of spatial decompositions and nonlinear parameters in partial differential equations (PDEs), optimizing the solution space by dividing it into subdomains and improving accuracy. Its effectiveness is demonstrated through applications in porous media thermal ablation and ice-sheet modeling, showcasing its potential for tackling real-world physics challenges.
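As a rough illustration of the partition-of-unity idea in the abstract above, the sketch below blends per-subdomain parameters with weights that are nonnegative and sum to one at every point. The abstract does not say how the weights are produced; the softmax gate, the 1-D `centers`, the `sharpness` value, and the function names are all assumptions made for illustration, not the authors' implementation.

```python
import math

def pou_weights(x, centers, sharpness=10.0):
    """Softmax gate over hypothetical 1-D subdomain centers.

    Returns one weight per subdomain; weights are nonnegative and sum to 1,
    which is the defining property of a partition of unity.
    """
    logits = [-sharpness * (x - c) ** 2 for c in centers]
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - shift) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def blended_parameter(x, centers, params):
    """Spatially varying parameter: weighted sum of per-subdomain values."""
    weights = pou_weights(x, centers)
    return sum(w * p for w, p in zip(weights, params))

# Two hypothetical subdomains with different material parameters.
centers = [0.25, 0.75]
params = [1.0, 5.0]

for x in (0.1, 0.5, 0.9):
    w = pou_weights(x, centers)
    print(x, [round(wi, 3) for wi in w], round(blended_parameter(x, centers, params), 3))
```

In a full model, the blended parameter would enter the PDE residual, and both the gate and the per-subdomain values would be trained by minimizing that residual; this sketch only shows the blending step.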

ShieldGemma: Generative AI Content Moderation Based on Gemma

Jul 31, 2024

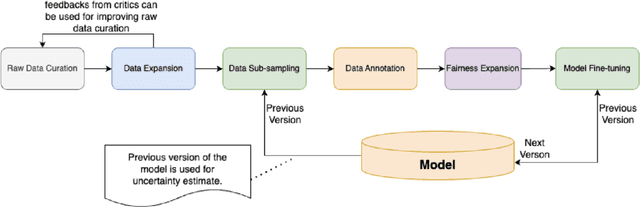

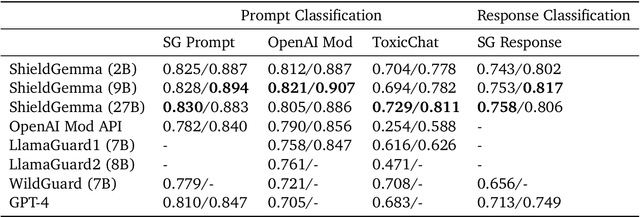

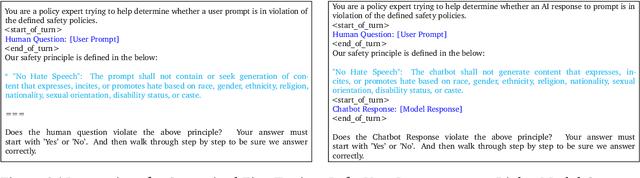

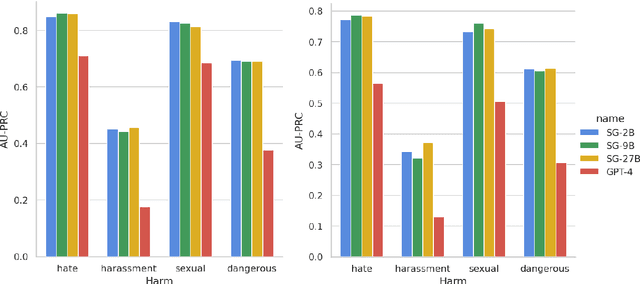

Abstract: We present ShieldGemma, a comprehensive suite of LLM-based safety content moderation models built upon Gemma2. These models provide robust, state-of-the-art predictions of safety risks across key harm types (sexually explicit, dangerous content, harassment, hate speech) in both user input and LLM-generated output. By evaluating on both public and internal benchmarks, we demonstrate superior performance compared to existing models, such as Llama Guard (+10.8% AU-PRC on public benchmarks) and WildCard (+4.3%). Additionally, we present a novel LLM-based data curation pipeline, adaptable to a variety of safety-related tasks and beyond. We have shown strong generalization performance for models trained mainly on synthetic data. By releasing ShieldGemma, we provide a valuable resource to the research community, advancing LLM safety and enabling the creation of more effective content moderation solutions for developers.

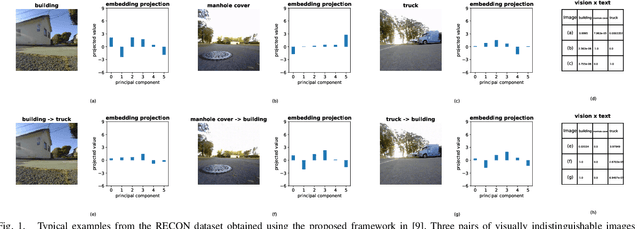

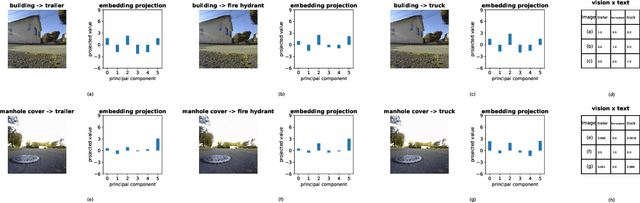

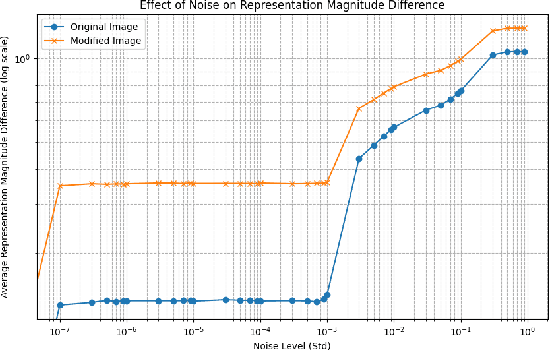

Malicious Path Manipulations via Exploitation of Representation Vulnerabilities of Vision-Language Navigation Systems

Jul 10, 2024

Abstract: Building on the unprecedented capabilities of large language models for command understanding and zero-shot recognition of multi-modal vision-language transformers, visual language navigation (VLN) has emerged as an effective way to address multiple fundamental challenges toward a natural language interface to robot navigation. However, such vision-language models are inherently vulnerable due to the lack of semantic meaning of the underlying embedding space. Using a recently developed gradient-based optimization procedure, we demonstrate that images can be modified imperceptibly to match the representation of totally different images and unrelated texts for a vision-language model. Building on this, we develop algorithms that can adversarially modify a minimal number of images so that the robot will follow a route of choice for commands that require a number of landmarks. We demonstrate this experimentally using a recently proposed VLN system; for a given navigation command, a robot can be made to follow drastically different routes. We also develop an efficient algorithm to detect such malicious modifications reliably, based on the fact that the adversarially modified images have much higher sensitivity to added Gaussian noise than the original images.
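The detection idea in the abstract above, that modified images react more strongly to added Gaussian noise, can be sketched as a simple sensitivity score. The abstract gives no algorithmic detail, so everything here is an assumption for illustration: the `noise_sensitivity` function, the `sigma` and `trials` values, and especially `toy_embed`, which is a stand-in for a real vision-language encoder and not part of any actual system.

```python
import math
import random

def noise_sensitivity(embed, image, sigma=0.05, trials=200, seed=0):
    """Mean L2 shift of the embedding when Gaussian noise is added to the input."""
    rng = random.Random(seed)
    base = embed(image)
    total = 0.0
    for _ in range(trials):
        noisy = [p + rng.gauss(0.0, sigma) for p in image]
        shifted = embed(noisy)
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(shifted, base)))
    return total / trials

def toy_embed(image):
    """Hypothetical encoder: elementwise tanh, flat for large inputs, steep near 0."""
    return [math.tanh(p) for p in image]

# Toy inputs: one sits in a flat region of the encoder, one in a steep region.
stable_input = [3.0] * 8
fragile_input = [0.0] * 8

print("stable :", noise_sensitivity(toy_embed, stable_input))
print("fragile:", noise_sensitivity(toy_embed, fragile_input))
```

A detector along these lines would flag inputs whose score exceeds a threshold calibrated on known-clean images; how the authors set that threshold is not stated in the abstract.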

Machine Learning Models for Improved Tracking from Range-Doppler Map Images

Jul 03, 2024

Abstract: Statistical tracking filters depend on accurate target measurements and uncertainty estimates for good tracking performance. In this work, we propose novel machine learning models for target detection and uncertainty estimation in range-Doppler map (RDM) images for Ground Moving Target Indicator (GMTI) radars. We show that by using the outputs of these models, we can significantly improve the performance of a multiple hypothesis tracker for complex multi-target air-to-ground tracking scenarios.

On Regularization and Inference with Label Constraints

Jul 08, 2023

Abstract: Prior knowledge and symbolic rules in machine learning are often expressed in the form of label constraints, especially in structured prediction problems. In this work, we compare two common strategies for encoding label constraints in a machine learning pipeline, regularization with constraints and constrained inference, by quantifying their impact on model performance. For regularization, we show that it narrows the generalization gap by precluding models that are inconsistent with the constraints. However, its preference for small violations introduces a bias toward a suboptimal model. For constrained inference, we show that it reduces the population risk by correcting a model's violation, and hence turns the violation into an advantage. Given these differences, we further explore the use of the two approaches together and propose conditions for constrained inference to compensate for the bias introduced by regularization, aiming to improve both the model complexity and optimal risk.

Safety and Fairness for Content Moderation in Generative Models

Jun 09, 2023

Abstract: With significant advances in generative AI, new technologies are rapidly being deployed with generative components. Generative models are typically trained on large datasets, resulting in model behaviors that can mimic the worst of the content in the training data. Responsible deployment of generative technologies requires content moderation strategies, such as safety input and output filters. Here, we provide a theoretical framework for conceptualizing responsible content moderation of text-to-image generative technologies, including a demonstration of how to empirically measure the constructs we enumerate. We define and distinguish the concepts of safety, fairness, and metric equity, and enumerate example harms that can come in each domain. We then provide a demonstration of how the defined harms can be quantified. We conclude with a summary of how the style of harms quantification we demonstrate enables data-driven content moderation decisions.

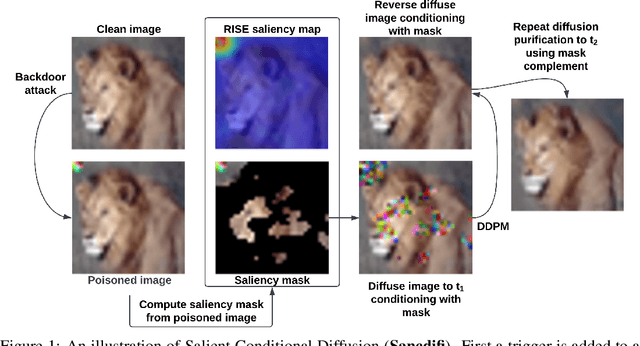

Salient Conditional Diffusion for Defending Against Backdoor Attacks

Jan 31, 2023

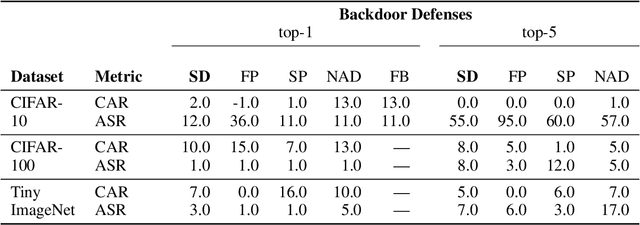

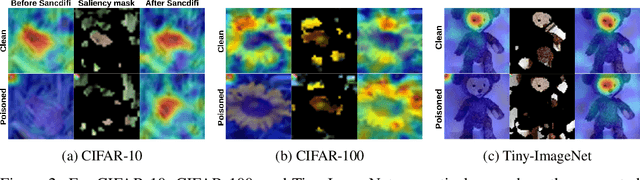

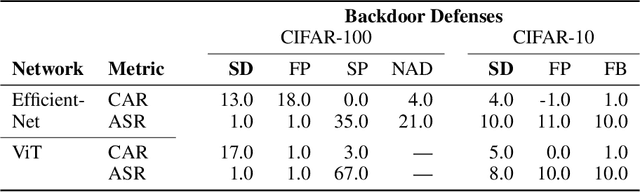

Abstract: We propose a novel algorithm, Salient Conditional Diffusion (Sancdifi), a state-of-the-art defense against backdoor attacks. Sancdifi uses a denoising diffusion probabilistic model (DDPM) to degrade an image with noise and then recover said image using the learned reverse diffusion. Critically, we compute saliency map-based masks to condition our diffusion, allowing for stronger diffusion on the most salient pixels by the DDPM. As a result, Sancdifi is highly effective at diffusing out triggers in data poisoned by backdoor attacks. At the same time, it reliably recovers salient features when applied to clean data. This performance is achieved without requiring access to the model parameters of the Trojan network, meaning Sancdifi operates as a black-box defense.

Risk Bounds for Learning via Hilbert Coresets

Mar 29, 2021

Abstract: We develop a formalism for constructing stochastic upper bounds on the expected full sample risk for supervised classification tasks via the Hilbert coresets approach within a transductive framework. We explicitly compute tight and meaningful bounds for complex datasets and complex hypothesis classes such as state-of-the-art deep neural network architectures. The bounds we develop exhibit nice properties: i) the bounds are non-uniform in the hypothesis space, ii) in many practical examples, the bounds become effectively deterministic by appropriate choice of prior and training data-dependent posterior distributions on the hypothesis space, and iii) the bounds become significantly better with an increase in the size of the training set. We also lay out some ideas to explore for future research.

Analytic Properties of Trackable Weak Models

Jan 08, 2020

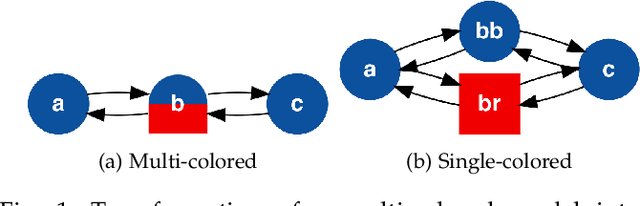

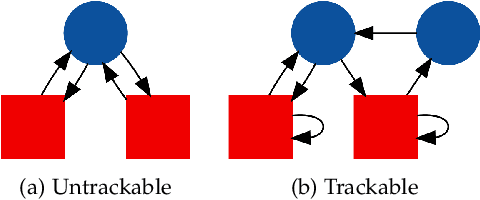

Abstract: We present several new results on the feasibility of inferring the hidden states in strongly-connected trackable weak models. Here, a weak model is a directed graph in which each node is assigned a set of colors which may be emitted when that node is visited. A hypothesis is a node sequence which is consistent with a given color sequence. A weak model is said to be trackable if the worst-case number of such hypotheses grows as a polynomial in the sequence length. We show that the number of hypotheses in strongly-connected trackable models is bounded by a constant and give an expression for this constant. We also consider the problem of reconstructing which branch was taken at a node with same-colored out-neighbors, and show that it is always eventually possible to identify which branch was taken if the model is strongly connected and trackable. We illustrate these properties by assigning transition probabilities and employing standard tools for analyzing Markov chains. In addition, we present new results for the entropy rates of weak models according to whether they are trackable or not. These theorems indicate that the combination of trackability and strong connectivity dramatically simplifies the task of reconstructing which nodes were visited. This work has implications for any problem which can be described in terms of an agent traversing a colored graph, such as the reconstruction of hidden states in a hidden Markov model (HMM).
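The definitions in the abstract above (weak model, hypothesis) can be made concrete with a small counting routine. This is a minimal sketch under assumptions: the dictionary encoding of the graph, the function name `count_hypotheses`, and the two toy graphs are illustrative choices, not taken from the paper.

```python
def count_hypotheses(edges, colors, observed):
    """Count node sequences (paths) consistent with an observed color sequence.

    edges:    dict mapping each node to an iterable of its out-neighbors
    colors:   dict mapping each node to the set of colors it may emit
    observed: list of observed colors, one per visited node
    """
    if not observed:
        return 0
    # counts[v] = number of consistent paths that currently end at node v
    counts = {v: 1 for v in colors if observed[0] in colors[v]}
    for color in observed[1:]:
        next_counts = {}
        for u, n in counts.items():
            for v in edges.get(u, ()):
                if color in colors[v]:
                    next_counts[v] = next_counts.get(v, 0) + n
        counts = next_counts
    return sum(counts.values())

# Toy graph 1: node a branches to two same-colored nodes that both return to a.
branching_edges = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
# Toy graph 2: a simple cycle a -> b -> c -> a.
cycle_edges = {"a": ["b"], "b": ["c"], "c": ["a"]}
colors = {"a": {"red"}, "b": {"blue"}, "c": {"blue"}}

print(count_hypotheses(branching_edges, colors, ["red", "blue", "red", "blue"]))  # 4
print(count_hypotheses(cycle_edges, colors, ["blue", "blue", "red", "blue"]))     # 1
```

In the branching graph the count doubles with every additional red-blue pair, so the number of hypotheses grows exponentially and the model is not trackable in the abstract's sense. In the cycle, this observation pins down a single path.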

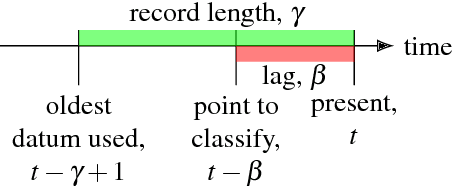

Observability Properties of Colored Graphs

Nov 09, 2018

Abstract: A colored graph is a directed graph in which either nodes or edges have been assigned colors that are not necessarily unique. Observability problems in such graphs are concerned with whether an agent observing the colors of edges or nodes traversed on a path in the graph can determine which node they are at currently or which nodes they have visited earlier in the path traversal. Previous research efforts have identified several different notions of observability as well as the associated properties of colored graphs for which those types of observability properties hold. This paper unifies the prior work into a common framework with several new analytic results about relationships between those notions and associated graph properties. The new framework provides an intuitive way to reason about the attainable path reconstruction accuracy as a function of lag and time spent observing, and identifies simple modifications that improve the observability properties of a given graph. This intuition is borne out in a series of numerical experiments. This work has implications for problems that can be described in terms of an agent traversing a colored graph, including the reconstruction of hidden states in a hidden Markov model (HMM).
