Peng Qiao

Transolver is a Linear Transformer: Revisiting Physics-Attention through the Lens of Linear Attention

Nov 11, 2025

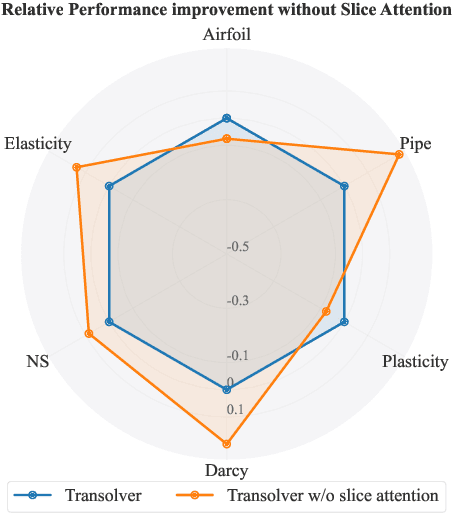

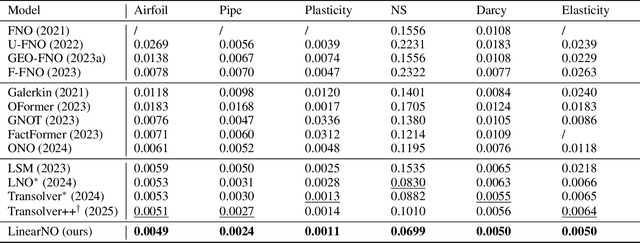

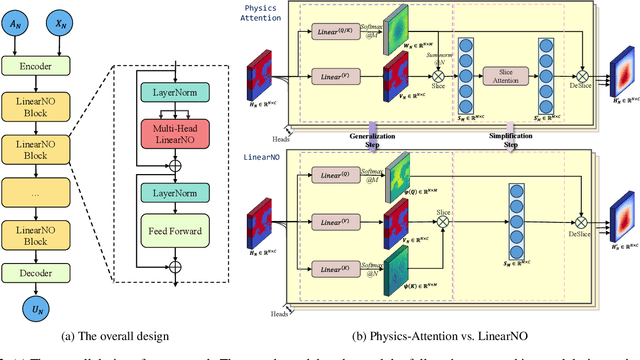

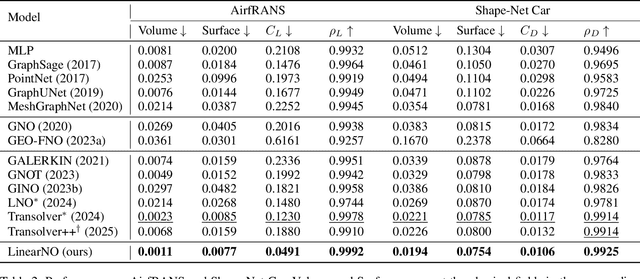

Abstract: Recent advances in Transformer-based Neural Operators have enabled significant progress in data-driven solvers for Partial Differential Equations (PDEs). Most current research has focused on reducing the quadratic complexity of attention to address the resulting low training and inference efficiency. Among these works, Transolver stands out as a representative method that introduces Physics-Attention to reduce computational costs. Physics-Attention projects grid points into slices for slice attention, then maps them back through deslicing. However, we observe that Physics-Attention can be reformulated as a special case of linear attention, and that the slice attention may even hurt the model performance. Based on these observations, we argue that its effectiveness primarily arises from the slice and deslice operations rather than interactions between slices. Building on this insight, we propose a two-step transformation to redesign Physics-Attention into a canonical linear attention, which we call Linear Attention Neural Operator (LinearNO). Our method achieves state-of-the-art performance on six standard PDE benchmarks, while reducing the number of parameters by an average of 40.0% and computational cost by 36.2%. Additionally, it delivers superior performance on two challenging, industrial-level datasets: AirfRANS and Shape-Net Car.
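For readers unfamiliar with the term, the following is a minimal sketch of generic kernelized linear attention, not the LinearNO or Physics-Attention implementation. It assumes the feature map `elu(x) + 1`, a common choice that the abstract does not specify, and all function names are hypothetical. The point it illustrates is that reordering the matrix products avoids building the N x N score matrix while giving the same output.

```python
import math


def feature_map(x):
    # elu(x) + 1, a positive feature map (an assumption, not taken from the abstract)
    return x + 1.0 if x > 0 else math.exp(x)


def matmul(a, b):
    # Plain-Python matrix product of two lists of rows
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def apply_map(m):
    return [[feature_map(x) for x in row] for row in m]


def quadratic_attention(q, k, v):
    # Builds the full N x N score matrix phi(Q) phi(K)^T, then normalizes each row
    scores = matmul(apply_map(q), transpose(apply_map(k)))
    out = []
    for row in scores:
        z = sum(row)
        out.append([sum(w * vj[c] for w, vj in zip(row, v)) / z
                    for c in range(len(v[0]))])
    return out


def linear_attention(q, k, v):
    # Computes phi(K)^T V once (d x d_v), so cost grows linearly with N
    pq, pk = apply_map(q), apply_map(k)
    kv = matmul(transpose(pk), v)
    k_sum = [sum(col) for col in zip(*pk)]
    out = []
    for row in pq:
        z = sum(a * b for a, b in zip(row, k_sum))
        out.append([x / z for x in matmul([row], kv)[0]])
    return out
```

Both functions return the same values up to floating-point error; only the order of the products, and therefore the cost in the number of points N, differs.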

Mono3R: Exploiting Monocular Cues for Geometric 3D Reconstruction

Apr 18, 2025

Abstract: Recent advances in data-driven geometric multi-view 3D reconstruction foundation models (e.g., DUSt3R) have shown remarkable performance across various 3D vision tasks, facilitated by the release of large-scale, high-quality 3D datasets. However, as we observed, constrained by their matching-based principles, the reconstruction quality of existing models suffers significant degradation in challenging regions with limited matching cues, particularly in weakly textured areas and low-light conditions. To mitigate these limitations, we propose to harness the inherent robustness of monocular geometry estimation to compensate for the inherent shortcomings of matching-based methods. Specifically, we introduce a monocular-guided refinement module that integrates monocular geometric priors into multi-view reconstruction frameworks. This integration substantially enhances the robustness of multi-view reconstruction systems, leading to high-quality feed-forward reconstructions. Comprehensive experiments across multiple benchmarks demonstrate that our method achieves substantial improvements in both multi-view camera pose estimation and point cloud accuracy.

Regist3R: Incremental Registration with Stereo Foundation Model

Apr 16, 2025

Abstract: Multi-view 3D reconstruction has remained an essential yet challenging problem in the field of computer vision. While DUSt3R and its successors have achieved breakthroughs in 3D reconstruction from unposed images, these methods exhibit significant limitations when scaling to multi-view scenarios, including high computational cost and cumulative error induced by global alignment. To address these challenges, we propose Regist3R, a novel stereo foundation model tailored for efficient and scalable incremental reconstruction. Regist3R leverages an incremental reconstruction paradigm, enabling large-scale 3D reconstructions from unordered and many-view image collections. We evaluate Regist3R on public datasets for camera pose estimation and 3D reconstruction. Our experiments demonstrate that Regist3R achieves performance comparable to optimization-based methods while significantly improving computational efficiency, and outperforms existing multi-view reconstruction models. Furthermore, to assess its performance in real-world applications, we introduce a challenging oblique aerial dataset which has long spatial spans and hundreds of views. The results highlight the effectiveness of Regist3R. We also demonstrate the first attempt to reconstruct large-scale scenes encompassing thousands of views through pointmap-based foundation models, showcasing its potential for practical applications in large-scale 3D reconstruction tasks, including urban modeling, aerial mapping, and beyond.

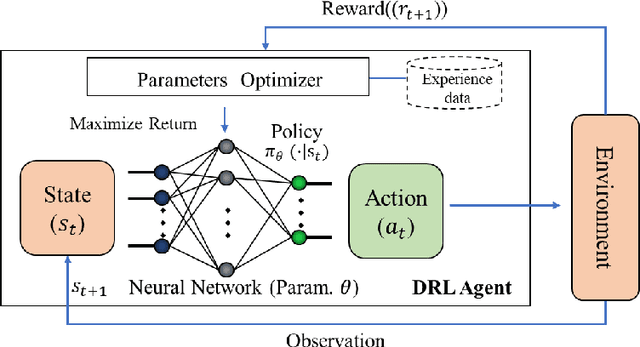

Highly Parallelized Reinforcement Learning Training with Relaxed Assignment Dependencies

Feb 27, 2025

Abstract: As the demand for superior agents grows, the training complexity of Deep Reinforcement Learning (DRL) increases. Thus, accelerating DRL training has become a major research focus. Dividing the DRL training process into subtasks and using parallel computation can effectively reduce training costs. However, current DRL training systems lack sufficient parallelization due to data assignment between subtask components. This assignment issue has been ignored, but addressing it can further boost training efficiency. Therefore, we propose a high-throughput distributed RL training system called TianJi. It relaxes assignment dependencies between subtask components and enables event-driven asynchronous communication. Meanwhile, TianJi maintains clear boundaries between subtask components. To address convergence uncertainty from relaxed assignment dependencies, TianJi proposes a distributed strategy based on the balance of sample production and consumption. The strategy controls the staleness of samples to correct their quality, ensuring convergence. We conducted extensive experiments. TianJi achieves a convergence time acceleration ratio of up to 4.37 compared to related comparison systems. When scaled to eight computational nodes, TianJi shows a convergence time speedup of 1.6 and a throughput speedup of 7.13 relative to XingTian, demonstrating its scalability and its capability to accelerate training. In data transmission efficiency experiments, TianJi significantly outperforms other systems, approaching hardware limits. TianJi also shows effectiveness in on-policy algorithms, achieving convergence time acceleration ratios of 4.36 and 2.95 compared to RLlib and XingTian. TianJi is accessible at https://github.com/HiPRL/TianJi.git.

Acceleration for Deep Reinforcement Learning using Parallel and Distributed Computing: A Survey

Nov 08, 2024

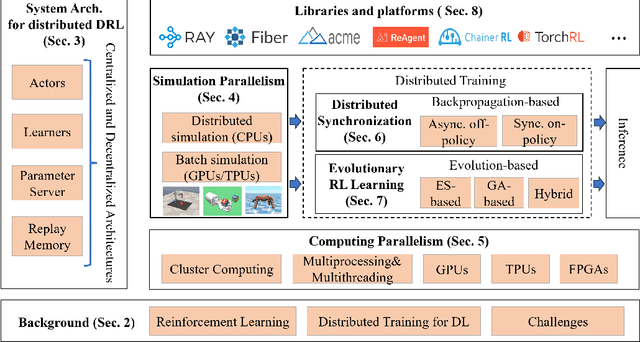

Abstract: Deep reinforcement learning has led to dramatic breakthroughs in the field of artificial intelligence over the past few years. As the amount of rollout experience data and the size of neural networks for deep reinforcement learning have grown continuously, handling the training process and reducing its time consumption using parallel and distributed computing has become an urgent and essential need. In this paper, we perform a broad and thorough investigation of training acceleration methodologies for deep reinforcement learning based on parallel and distributed computing, providing a comprehensive survey in this field with state-of-the-art methods and pointers to core references. In particular, a taxonomy of literature is provided, along with a discussion of emerging topics and open issues. This incorporates learning system architectures, simulation parallelism, computing parallelism, distributed synchronization mechanisms, and deep evolutionary reinforcement learning. Further, we compare 16 current open-source libraries and platforms with criteria of facilitating rapid development. Finally, we extrapolate future directions that deserve further research.

Introducing Diminutive Causal Structure into Graph Representation Learning

Jun 13, 2024

Abstract: When engaging in end-to-end graph representation learning with Graph Neural Networks (GNNs), the intricate causal relationships and rules inherent in graph data pose a formidable challenge for the model in accurately capturing authentic data relationships. A proposed mitigating strategy involves the direct integration of rules or relationships corresponding to the graph data into the model. However, within the domain of graph representation learning, the inherent complexity of graph data obstructs the derivation of a comprehensive causal structure that encapsulates universal rules or relationships governing the entire dataset. Instead, only specialized diminutive causal structures, delineating specific causal relationships within constrained subsets of graph data, emerge as discernible. Motivated by empirical insights, it is observed that GNN models exhibit a tendency to converge towards such specialized causal structures during the training process. Consequently, we posit that the introduction of these specific causal structures is advantageous for the training of GNN models. Building upon this proposition, we introduce a novel method that enables GNN models to glean insights from these specialized diminutive causal structures, thereby enhancing overall performance. Our method specifically extracts causal knowledge from the model representation of these diminutive causal structures and incorporates interchange intervention to optimize the learning process. Theoretical analysis serves to corroborate the efficacy of our proposed method. Furthermore, empirical experiments consistently demonstrate significant performance improvements across diverse datasets.

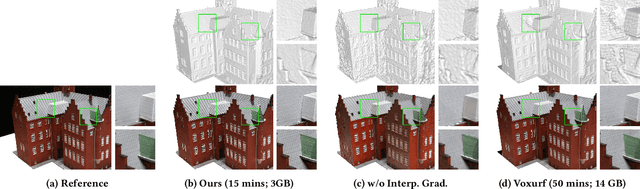

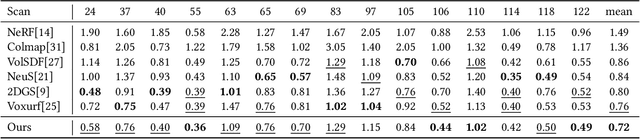

VoxNeuS: Enhancing Voxel-Based Neural Surface Reconstruction via Gradient Interpolation

Jun 11, 2024

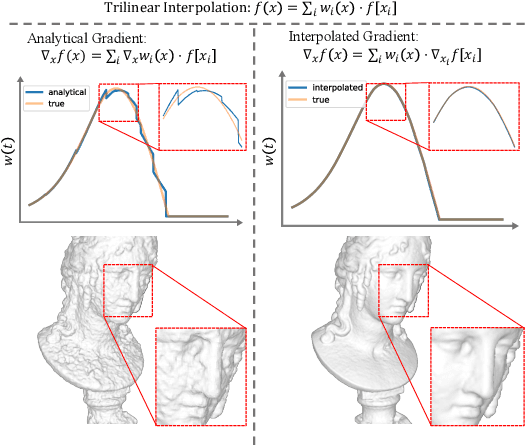

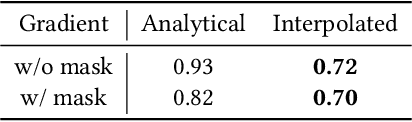

Abstract: Neural Surface Reconstruction learns a Signed Distance Field (SDF) to reconstruct the 3D model from multi-view images. Previous works adopt voxel-based explicit representation to improve efficiency. However, they ignored the gradient instability of interpolation in the voxel grid, leading to degraded convergence and smoothness. Besides, previous works entangled the optimization of geometry and radiance, which leads to the deformation of geometry to explain radiance, causing artifacts when reconstructing textured planes. In this work, we reveal that the instability of the gradient comes from its discontinuity during trilinear interpolation, and propose to use the interpolated gradient instead of the original analytical gradient to eliminate the discontinuity. Based on gradient interpolation, we propose VoxNeuS, a lightweight method for computation- and memory-efficient neural surface reconstruction. Thanks to the explicit representation, the gradients of the regularization terms, i.e., the Eikonal and curvature losses, are solved directly, avoiding computation and memory-access overhead. Further, VoxNeuS adopts a geometry-radiance disentangled architecture to handle the geometry deformation from radiance optimization. The experimental results show that VoxNeuS achieves better reconstruction quality than previous works. The entire training process takes 15 minutes and less than 3 GB of memory on a single 2080 Ti GPU.
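The discontinuity mentioned in the abstract can be seen in a simplified 1D analog, sketched below. This is not the VoxNeuS code: it uses linear rather than trilinear interpolation, and it assumes that per-node gradients are estimated with finite differences, a detail the abstract does not state. All function names are hypothetical.

```python
def analytic_gradient(grid, x):
    # Derivative of linear interpolation: constant inside a cell,
    # so it jumps when x crosses a grid node. Assumes 0 <= x <= len(grid) - 1.
    i = min(int(x), len(grid) - 2)
    return grid[i + 1] - grid[i]


def node_gradients(grid):
    # Finite-difference gradient estimate at each node (an assumed choice):
    # central differences inside, one-sided differences at the two ends.
    n = len(grid)
    grads = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        grads.append((grid[hi] - grid[lo]) / (hi - lo))
    return grads


def interpolated_gradient(grid, x):
    # Linearly interpolate the node gradients: continuous across cell boundaries
    grads = node_gradients(grid)
    i = min(int(x), len(grid) - 2)
    t = x - i
    return (1 - t) * grads[i] + t * grads[i + 1]
```

With grid values `[0, 1, 4, 9]`, the analytic gradient jumps from 1 to 3 as `x` crosses the node at 1, while the interpolated gradient varies smoothly through that node.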

DistGrid: Scalable Scene Reconstruction with Distributed Multi-resolution Hash Grid

May 08, 2024

Abstract: Neural Radiance Field (NeRF) achieves extremely high quality in object-scale and indoor scene reconstruction. However, there exist some challenges when reconstructing large-scale scenes. MLP-based NeRFs suffer from limited network capacity, while volume-based NeRFs are heavily memory-consuming when the scene resolution increases. Recent approaches propose to geographically partition the scene and learn each sub-region using an individual NeRF. Such partitioning strategies help volume-based NeRFs exceed the single GPU memory limit and scale to larger scenes. However, this approach requires multiple background NeRFs to handle out-of-partition rays, which leads to redundant learning. Inspired by the fact that the background of the current partition is the foreground of an adjacent partition, we propose a scalable scene reconstruction method based on joint Multi-resolution Hash Grids, named DistGrid. In this method, the scene is divided into multiple closely-paved yet non-overlapping Axis-Aligned Bounding Boxes, and a novel segmented volume rendering method is proposed to handle cross-boundary rays, thereby eliminating the need for background NeRFs. The experiments demonstrate that our method outperforms existing methods on all evaluated large-scale scenes, and provides visually plausible scene reconstruction. The scalability of our method on reconstruction quality is further evaluated qualitatively and quantitatively.

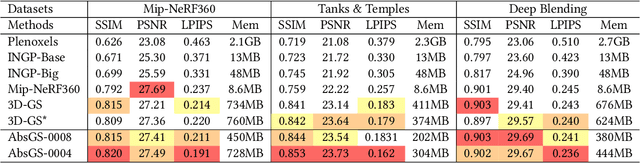

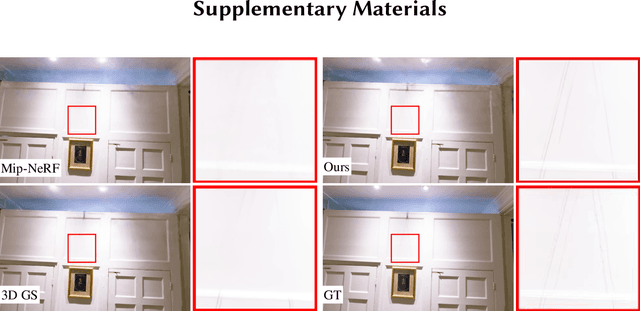

AbsGS: Recovering Fine Details for 3D Gaussian Splatting

Apr 16, 2024

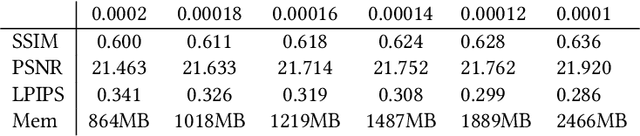

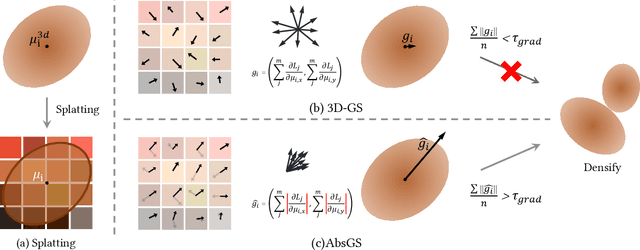

Abstract: The 3D Gaussian Splatting (3D-GS) technique couples 3D Gaussian primitives with differentiable rasterization to achieve high-quality novel view synthesis results while providing advanced real-time rendering performance. However, due to a flaw in its adaptive density control strategy, 3D-GS frequently suffers from over-reconstruction in intricate scenes containing high-frequency details, leading to blurry rendered images. The underlying reason for the flaw remains under-explored. In this work, we present a comprehensive analysis of the cause of the aforementioned artifacts, namely gradient collision, which prevents large Gaussians in over-reconstructed regions from splitting. To address this issue, we propose the novel homodirectional view-space positional gradient as the criterion for densification. Our strategy efficiently identifies large Gaussians in over-reconstructed regions, and recovers fine details by splitting. We evaluate our proposed method on various challenging datasets. The experimental results indicate that our approach achieves the best rendering quality with reduced or similar memory consumption. Our method is easy to implement and can be incorporated into a wide variety of recent Gaussian Splatting-based methods. We will open source our code upon formal publication. Our project page is available at: https://ty424.github.io/AbsGS.github.io/

TFDMNet: A Novel Network Structure Combines the Time Domain and Frequency Domain Features

Jan 29, 2024

Abstract: Convolutional neural networks (CNNs) have achieved impressive success in computer vision during the past few decades. The image convolution operation helps CNNs achieve good performance on image-related tasks. However, it also has high computational complexity and is hard to parallelize. This paper proposes a novel Element-wise Multiplication Layer (EML) to replace convolution layers, which can be trained in the frequency domain. Theoretical analyses show that EMLs have lower computational complexity and are easier to parallelize. Moreover, we introduce a Weight Fixation mechanism to alleviate the problem of over-fitting, and analyze the working behavior of Batch Normalization and Dropout in the frequency domain. To balance computational complexity and memory usage, we propose a new network structure, namely Time-Frequency Domain Mixture Network (TFDMNet), which combines the advantages of both convolution layers and EMLs. Experimental results imply that TFDMNet achieves good performance on the MNIST, CIFAR-10 and ImageNet databases with fewer operations than the corresponding CNNs.
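The idea of replacing convolution with element-wise multiplication rests on the convolution theorem, which the sketch below checks in 1D with a naive DFT. It is a generic illustration under simplifying assumptions (circular convolution, a single channel, no learned weights), not the EML layer from the paper; all function names are hypothetical.

```python
import cmath


def dft(x, inverse=False):
    # Naive O(n^2) discrete Fourier transform; the inverse divides by n
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * f * t / n) for t in range(n))
           for f in range(n)]
    return [value / n for value in out] if inverse else out


def circular_convolve(x, h):
    # Direct circular convolution in the original (time or spatial) domain
    n = len(x)
    return [sum(x[m] * h[(i - m) % n] for m in range(n)) for i in range(n)]


def frequency_domain_convolve(x, h):
    # Same result via element-wise multiplication of the two spectra
    spectrum = [a * b for a, b in zip(dft(x), dft(h))]
    return [value.real for value in dft(spectrum, inverse=True)]
```

For `x = [1, 2, 3, 4]` and `h = [1, 0, -1, 0]`, both functions give `[-2, -2, 2, 2]` up to floating-point error. Each frequency bin is multiplied independently of the others, which is the property that makes the frequency-domain form straightforward to parallelize.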
