Nan Ma

VQ-DSC-R: Robust Vector Quantized-Enabled Digital Semantic Communication With OFDM Transmission

Feb 05, 2026

Abstract: Digital mapping of semantic features is essential for achieving interoperability between semantic communication and practical digital infrastructure. However, current research efforts predominantly concentrate on analog semantic communication with simplified channel models. To bridge these gaps, we develop a robust vector quantized-enabled digital semantic communication (VQ-DSC-R) system built upon orthogonal frequency division multiplexing (OFDM) transmission. Our work encompasses the framework design of VQ-DSC-R, followed by a comprehensive optimization study. First, we design a Swin Transformer-based backbone for hierarchical semantic feature extraction, integrated with VQ modules that map the features into a shared semantic quantized codebook (SQC) for efficient index transmission. Second, we propose a differentiable vector quantization with adaptive noise-variance (ANDVQ) scheme to mitigate quantization errors in the SQC; it dynamically adjusts the quantization process using K-nearest neighbor statistics, while an exponential moving average mechanism stabilizes SQC training. Third, for robust index transmission over multipath fading channels with noise, we develop a conditional diffusion model (CDM) to refine channel state information and design an attention-based module that dynamically adapts to channel noise. The entire VQ-DSC-R system is optimized via a three-stage training strategy. Extensive experiments demonstrate the superiority of VQ-DSC-R over benchmark schemes, achieving high compression ratios and robust performance in practical scenarios.
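
The index-transmission idea in this abstract can be illustrated with a minimal sketch of plain nearest-neighbor vector quantization against a shared codebook, plus a simplified exponential moving average (EMA) codebook update. This is not the paper's ANDVQ scheme: the function names, the toy codebook, and the per-codeword EMA rule are assumptions chosen for illustration only.

```python
from typing import List, Sequence

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(features: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Map each feature vector to the index of its nearest codeword."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
        for f in features
    ]


def dequantize(indices: Sequence[int], codebook: Sequence[Vector]) -> List[Vector]:
    """Receiver side: look the received indices up in the shared codebook."""
    return [list(codebook[i]) for i in indices]


def ema_update(
    codebook: Sequence[Vector],
    features: Sequence[Vector],
    indices: Sequence[int],
    decay: float = 0.99,
) -> List[Vector]:
    """Simplified EMA step (an assumption, not the paper's rule): pull each
    used codeword toward the mean of the features assigned to it."""
    updated = [list(c) for c in codebook]
    for k, codeword in enumerate(codebook):
        assigned = [f for f, i in zip(features, indices) if i == k]
        if not assigned:
            continue  # unused codewords are left unchanged
        mean = [sum(f[d] for f in assigned) / len(assigned) for d in range(len(codeword))]
        updated[k] = [decay * c + (1.0 - decay) * m for c, m in zip(codeword, mean)]
    return updated


# Toy example: only the indices would be sent over the digital link.
codebook = [[0.0, 0.0], [1.0, 1.0]]
features = [[0.1, -0.1], [0.9, 1.2], [0.2, 0.1]]
indices = quantize(features, codebook)  # -> [0, 1, 0]
```

Because transmitter and receiver hold the same codebook, the receiver can rebuild approximate features from the indices alone, which is what makes the scheme compatible with digital transmission.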

Secure Intellicise Wireless Network: Agentic AI for Coverless Semantic Steganography Communication

Jan 23, 2026

Abstract: Semantic Communication (SemCom), leveraging its significant advantages in transmission efficiency and reliability, has emerged as a core technology for constructing future intellicise (intelligent and concise) wireless networks. However, intelligent attacks represented by semantic eavesdropping pose severe challenges to the security of SemCom. To address this challenge, Semantic Steganographic Communication (SemSteCom) achieves "invisible" encryption by implicitly embedding private semantic information into cover modality carriers. State-of-the-art work has further introduced generative diffusion models to directly generate stega images without relying on original cover images, effectively enhancing steganographic capacity. Nevertheless, the recovery of private images depends heavily on the guidance of private semantic keys, which may be inferred by intelligent eavesdroppers, thereby introducing new security threats. To address this issue, we propose an Agentic AI-driven SemSteCom (AgentSemSteCom) scheme, which includes semantic extraction, digital token controlled reference image generation, coverless steganography, semantic codec, and optional task-oriented enhancement modules. The proposed AgentSemSteCom scheme obviates the need for both cover images and private semantic keys, thereby boosting steganographic capacity while reinforcing transmission security. Simulation results on open-source datasets verify that AgentSemSteCom achieves better transmission quality and higher security levels than the baseline scheme.

Integrated Sensing and Semantic Communication with Adaptive Source-Channel Coding

Jan 19, 2026

Abstract: Semantic communication has emerged as a new paradigm to enhance the performance of integrated sensing and communication systems in 6G. However, most existing works focus on sensing data compression to reduce the subsequent communication overhead, without considering an integrated transmission framework for both the SemCom and sensing tasks. This paper proposes an adaptive source-channel coding and beamforming design framework for integrated sensing and SemCom systems by jointly optimizing the coding rate for the SemCom task and the transmit beamforming for both the SemCom and sensing tasks. Specifically, an end-to-end semantic distortion function is approximated by deriving an upper bound composed of source and channel coding induced components, and a hybrid Cramér-Rao bound (HCRB) is derived for the target position under imperfect time synchronization. To facilitate the joint optimization, a distortion minimization problem is formulated by considering the HCRB threshold, channel uses, and power budget. Subsequently, an alternating optimization algorithm composed of successive convex approximation and fractional programming is proposed to address this problem by decoupling it into two subproblems for coding rate and beamforming designs, respectively. Simulation results demonstrate that our proposed scheme outperforms the conventional deep joint source-channel coding-water filling-zero forcing benchmark.

Vector Quantized-Aided XL-MIMO CSI Feedback with Channel Adaptive Transmission

Jan 12, 2026

Abstract: Efficient channel state information (CSI) feedback is critical for 6G extremely large-scale multiple-input multiple-output (XL-MIMO) systems to mitigate channel interference. However, the massive antenna scale imposes a severe burden on feedback overhead. Meanwhile, existing quantized feedback methods face dual challenges of limited quantization precision and insufficient channel robustness when compressing high-dimensional channel features into discrete symbols. To bridge these gaps, guided by the deep joint source-channel coding (DJSCC) framework, we propose a vector quantized (VQ)-aided scheme for CSI feedback in XL-MIMO systems considering the near-field effect, named VQ-DJSCC-F. First, taking advantage of the sparsity of near-field channels in the polar-delay domain, we extract energy-concentrated features to reduce dimensionality. Then, we design both Transformer and convolutional neural network (CNN) architectures as backbones to hierarchically extract CSI features, followed by VQ modules that project the features into a discrete latent space. Entropy loss regularization, combined with an exponential moving average (EMA) update strategy, is introduced to maximize quantization precision. Furthermore, we develop an attention mechanism-driven channel adaptation module to mitigate the impact of wireless channel fading on the transmission of index sequences. Simulation results demonstrate that the proposed scheme achieves superior CSI reconstruction accuracy with lower feedback overhead under varying channel conditions.

Flow matching-based generative models for MIMO channel estimation

Nov 14, 2025

Abstract: Diffusion model (DM)-based channel estimation, which generates channel samples via stepwise posterior sampling with a denoising process, has shown potential in high-precision channel state information (CSI) acquisition. However, slow sampling speed is a fundamental challenge for recently developed DM-based schemes. To alleviate this problem, we propose a novel flow matching (FM)-based generative model for multiple-input multiple-output (MIMO) channel estimation. We first formulate the channel estimation problem within the FM framework, where the conditional probability path is constructed from the noisy channel distribution to the true channel distribution. In this case, the path evolves along a straight-line trajectory at a constant speed. Then, guided by this, we derive a velocity field that depends solely on the noise statistics to guide generative model training. Furthermore, during the sampling phase, we utilize the trained velocity field as prior information for channel estimation, which allows for quick and reliable noisy channel enhancement via an ordinary differential equation (ODE) Euler solver. Finally, numerical results demonstrate that the proposed FM-based channel estimation scheme can significantly reduce the sampling overhead compared to other popular DM-based schemes, such as the score matching (SM)-based scheme. Meanwhile, it achieves superior channel estimation accuracy under different channel conditions.
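
The sampling step described in this abstract can be sketched as Euler integration of a velocity field from t = 0 (noisy channel) to t = 1 (channel estimate). The sketch below is a toy under stated assumptions: `euler_sample` and `make_oracle_velocity` are hypothetical names, and the "oracle" velocity is computed from the true channel purely to show that a straight-line, constant-speed path is traversed exactly by Euler steps. In practice a trained network would supply the velocity.

```python
from typing import Callable, List

Channel = List[complex]
VelocityField = Callable[[Channel, float], Channel]


def euler_sample(x0: Channel, velocity: VelocityField, num_steps: int) -> Channel:
    """Integrate dx/dt = v(x, t) from t = 0 to t = 1 with fixed-step Euler."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    x = list(x0)
    dt = 1.0 / num_steps
    for step in range(num_steps):
        t = step * dt
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def make_oracle_velocity(noisy: Channel, true: Channel) -> VelocityField:
    """Stand-in for a trained model (assumption): on the straight-line path
    x_t = (1 - t) * noisy + t * true, the velocity is the constant true - noisy."""
    constant = [h - y for h, y in zip(true, noisy)]
    return lambda x, t: list(constant)


# Toy two-coefficient channel and a noisy observation of it.
true_channel = [1 + 1j, -0.5 + 0.25j]
noisy_channel = [1.2 + 0.9j, -0.4 + 0.5j]
velocity = make_oracle_velocity(noisy_channel, true_channel)
estimate = euler_sample(noisy_channel, velocity, num_steps=4)
```

With a constant velocity, even a single Euler step reaches the endpoint, which is the intuition behind the reduced sampling overhead of straight-line paths compared with many-step denoising.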

VQ-VAE Based Digital Semantic Communication with Importance-Aware OFDM Transmission

Aug 12, 2025

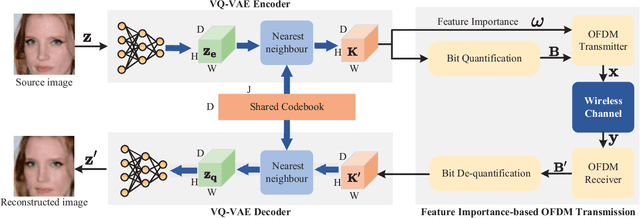

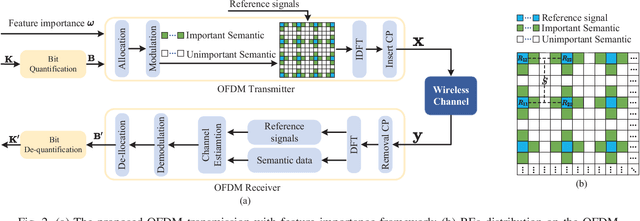

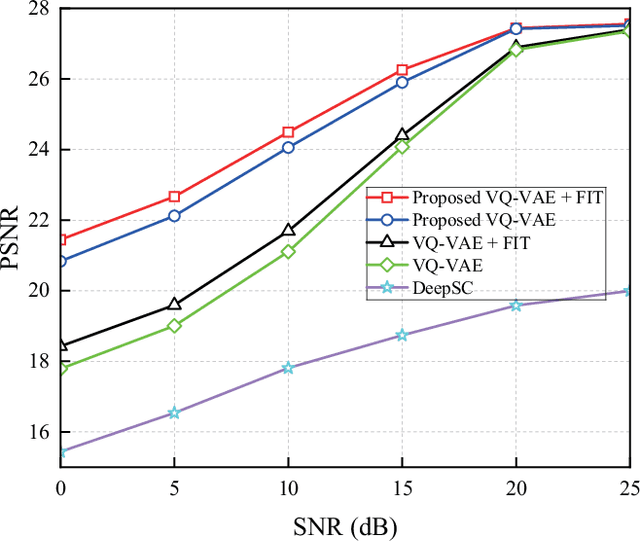

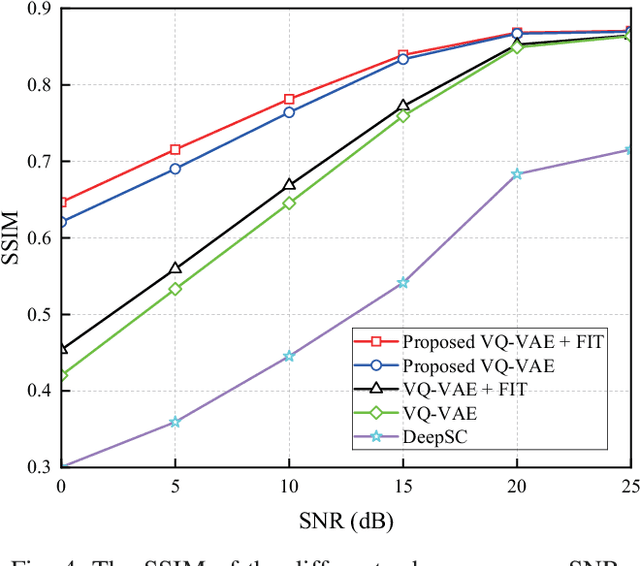

Abstract: Semantic communication (SemCom) significantly reduces redundant data and improves transmission efficiency by extracting the latent features of information. However, most conventional deep learning-based SemCom systems focus on analog transmission and lack compatibility with practical digital communications. This paper proposes a vector quantized-variational autoencoder (VQ-VAE) based digital SemCom system that directly transmits the semantic features and incorporates importance-aware orthogonal frequency division multiplexing (OFDM) transmission to enhance SemCom performance, where the VQ-VAE generates a discrete codebook shared between the transmitter and receiver. At the transmitter, the latent semantic features are first extracted by the VQ-VAE, and then the shared codebook is used to match these features, which are subsequently converted into a discrete form suitable for digital transmission. To protect the semantic information, an importance-aware OFDM transmission strategy is proposed to allocate the key features near the OFDM reference signals, where the feature importance is derived from a gradient-based method. At the receiver, the features are rematched with the shared codebook to further correct errors. Finally, experimental results demonstrate that our proposed scheme outperforms the conventional DeepSC and achieves better reconstruction performance in the low-SNR region.
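
The importance-aware allocation described above can be sketched as a simple ranking problem: sort features by importance, sort data slots by distance to the nearest reference-signal slot, and pair them off. This is a minimal sketch on a one-dimensional slot index. The function name, the 1-D grid, and the tie-breaking rule are assumptions, and the importance scores are taken as given rather than derived from gradients.

```python
from typing import Dict, Sequence


def allocate_by_importance(
    importance: Sequence[float],
    num_slots: int,
    pilot_slots: Sequence[int],
) -> Dict[int, int]:
    """Return a mapping feature_index -> slot_index that places the most
    important features in the data slots closest to a pilot slot."""
    if not pilot_slots:
        raise ValueError("at least one pilot slot is required")
    pilots = set(pilot_slots)
    data_slots = [s for s in range(num_slots) if s not in pilots]
    if len(importance) > len(data_slots):
        raise ValueError("not enough data slots for all features")

    # Data slots ordered by distance to the nearest pilot (ties: lower index first).
    by_proximity = sorted(
        data_slots, key=lambda s: (min(abs(s - p) for p in pilots), s)
    )
    # Feature indices ordered from most to least important (stable for ties).
    ranked = sorted(range(len(importance)), key=lambda i: -importance[i])
    return dict(zip(ranked, by_proximity))


# Eight slots with pilots at 0 and 4; four features with given importance scores.
mapping = allocate_by_importance([0.1, 0.9, 0.5, 0.7], num_slots=8, pilot_slots=[0, 4])
# -> {1: 1, 3: 3, 2: 5, 0: 2}
```

The rationale is that channel estimates are typically most accurate close to reference signals, so symbols placed there are less likely to be corrupted by estimation error.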

AHPPEBot: Autonomous Robot for Tomato Harvesting based on Phenotyping and Pose Estimation

May 11, 2024

Abstract: To address the limitations inherent to conventional automated harvesting robots, specifically their suboptimal success rates and risk of crop damage, we design a novel robot named AHPPEBot, which is capable of autonomous harvesting based on crop phenotyping and pose estimation. Specifically, in phenotyping, the detection, association, and maturity estimation of tomato trusses and individual fruits are accomplished through a multi-task YOLOv5 model coupled with a detection-based adaptive DBSCAN clustering algorithm. In pose estimation, we employ a deep learning model to predict seven semantic keypoints on the pedicel. These keypoints assist in the robot's path planning, minimize target contact, and facilitate the use of our specialized end effector for harvesting. In autonomous tomato harvesting experiments conducted in commercial greenhouses, our proposed robot achieved a harvesting success rate of 86.67%, with an average successful harvest time of 32.46 s, showcasing its continuous and robust harvesting capabilities. The result underscores the potential of harvesting robots to bridge the labor gap in agriculture.

Semantic Entropy Can Simultaneously Benefit Transmission Efficiency and Channel Security of Wireless Semantic Communications

Feb 07, 2024

Abstract: Recently proliferated deep learning-based semantic communications (DLSC) focus on how transmitted symbols efficiently convey a desired meaning to the destination. However, the sensitivity of neural models and the openness of wireless channels make the DLSC system extremely fragile to various malicious attacks. This inspires us to ask a question: "Can we further exploit the advantages of transmission efficiency in wireless semantic communications while also alleviating its security disadvantages?" Keeping this in mind, we propose SemEntropy, a novel method that answers the above question by exploring the semantics of data for both adaptive transmission and physical layer encryption. Specifically, we first introduce semantic entropy, which indicates the expectation of various semantic scores regarding the transmission goal of the DLSC. Equipped with such semantic entropy, we can dynamically assign informative semantics to Orthogonal Frequency Division Multiplexing (OFDM) subcarriers with better channel conditions in a fine-grained manner. We also use the entropy to guide semantic key generation to safeguard communications over open wireless channels. By doing so, both transmission efficiency and channel security can be simultaneously improved. Extensive experiments over various benchmarks show the effectiveness of the proposed SemEntropy. We discuss why our proposed method benefits secure transmission of DLSC, and also report some interesting findings, e.g., SemEntropy can maintain 95% semantic accuracy with 60% less transmission.

Spectrum-guided Feature Enhancement Network for Event Person Re-Identification

Feb 02, 2024

Abstract: As a cutting-edge biosensor, the event camera holds significant potential in the field of computer vision, particularly regarding privacy preservation. However, compared to traditional cameras, event streams often contain noise and possess extremely sparse semantics, posing a formidable challenge for event-based person re-identification (event Re-ID). To address this, we introduce a novel event person re-identification network: the Spectrum-guided Feature Enhancement Network (SFE-Net). This network consists of two innovative components: the Multi-grain Spectrum Attention Mechanism (MSAM) and the Consecutive Patch Dropout Module (CPDM). MSAM employs a Fourier spectrum transform strategy to filter event noise, while also utilizing an event-guided multi-granularity attention strategy to enhance and capture discriminative person semantics. CPDM employs a consecutive patch dropout strategy to generate multiple incomplete feature maps, encouraging the deep Re-ID model to perceive each effective region of the person's body equally and capture robust person descriptors. Extensive experiments on event Re-ID datasets demonstrate that our SFE-Net achieves the best performance in this task.
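
One possible reading of the consecutive patch dropout idea is sketched below: a contiguous run of patch tokens is zeroed out, and repeating this at different offsets yields several incomplete views of the same feature map. The abstract does not specify how CPDM selects or masks patches, so the function names, the zero-masking choice, and the uniform random offsets are all assumptions.

```python
import random
from typing import List, Sequence

Patch = List[float]


def consecutive_patch_dropout(patches: Sequence[Patch], start: int, length: int) -> List[Patch]:
    """Return a copy of `patches` with `length` consecutive patches,
    beginning at index `start`, replaced by zero vectors."""
    if length < 0 or start < 0 or start + length > len(patches):
        raise ValueError("dropout window must lie inside the patch sequence")
    return [
        [0.0] * len(p) if start <= i < start + length else list(p)
        for i, p in enumerate(patches)
    ]


def incomplete_views(
    patches: Sequence[Patch], length: int, num_views: int, rng: random.Random
) -> List[List[Patch]]:
    """Generate several incomplete feature maps, each with a randomly
    placed window of consecutive patches dropped."""
    last_start = len(patches) - length
    if last_start < 0:
        raise ValueError("window is longer than the patch sequence")
    return [
        consecutive_patch_dropout(patches, rng.randint(0, last_start), length)
        for _ in range(num_views)
    ]


# Six toy patch tokens of dimension 2, all nonzero so dropped patches are easy to spot.
patches = [[float(i), float(i) + 0.5] for i in range(1, 7)]
views = incomplete_views(patches, length=2, num_views=4, rng=random.Random(0))
```

Training on such views forces a model to rely on whichever body regions remain visible, which matches the stated goal of perceiving each effective region equally.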

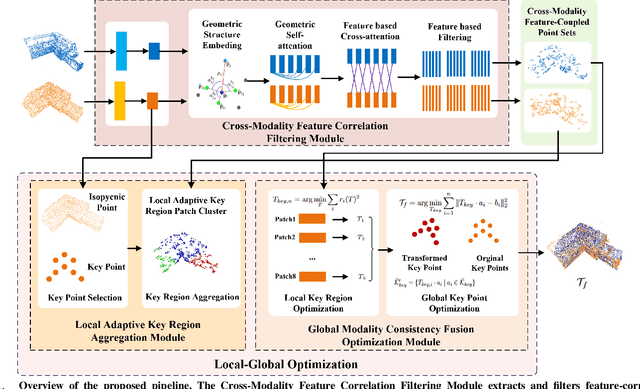

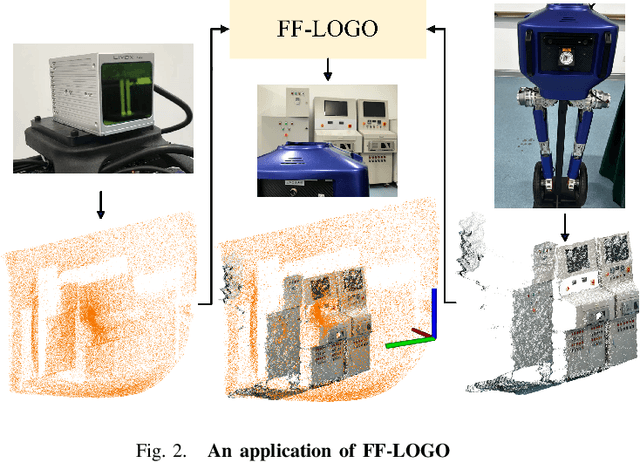

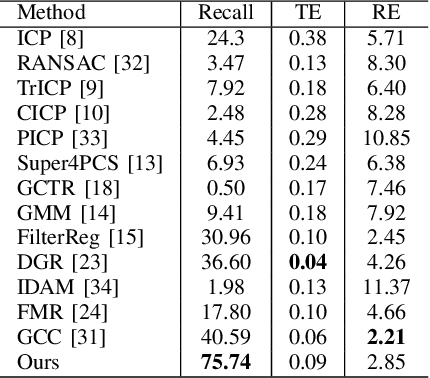

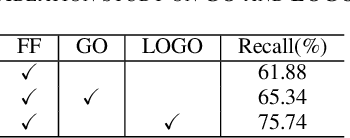

FF-LOGO: Cross-Modality Point Cloud Registration with Feature Filtering and Local to Global Optimization

Sep 16, 2023

Abstract: Cross-modality point cloud registration is confronted with significant challenges due to inherent differences in modalities between different sensors. We propose FF-LOGO, a cross-modality point cloud registration framework with feature filtering and local-global optimization. The cross-modality feature correlation filtering module extracts geometric transformation-invariant features from cross-modality point clouds and achieves point selection by feature matching. We also introduce a cross-modality optimization process, including a local adaptive key region aggregation module and a global modality consistency fusion optimization module. Experimental results demonstrate that our two-stage optimization significantly improves the registration accuracy of the feature association and selection module. Our method achieves a substantial increase in recall rate compared to the current state-of-the-art methods on the 3DCSR dataset, improving from 40.59% to 75.74%. Our code will be available at https://github.com/wangmohan17/FFLOGO.
