N. M. Anoop Krishnan

Civil Engineering Department, Indian Institute of Technology Delhi, New Delhi, India; Yardi School of Artificial Intelligence, Indian Institute of Technology Delhi, New Delhi, India

Sustainable Materials Discovery in the Era of Artificial Intelligence

Jan 29, 2026

Abstract:Artificial intelligence (AI) has transformed materials discovery, enabling rapid exploration of chemical space through generative models and surrogate screening. Yet current AI workflows optimize performance first, deferring sustainability to post-synthesis assessment. This creates inefficiency: by the time environmental burdens are quantified, resources have already been invested in potentially unsustainable solutions. The disconnect between atomic-scale design and lifecycle assessment (LCA) reflects fundamental challenges: data scarcity across heterogeneous sources, scale gaps from atoms to industrial systems, uncertainty in synthesis pathways, and the absence of frameworks that co-optimize performance with environmental impact. We propose to integrate upstream machine learning (ML) assisted materials discovery with downstream lifecycle assessment into a unified ML-LCA environment. The ML-LCA framework integrates five components: information extraction for building materials-environment knowledge bases, harmonized databases linking properties to sustainability metrics, multi-scale models bridging atomic properties to lifecycle impacts, ensemble prediction of manufacturing pathways with uncertainty quantification, and uncertainty-aware optimization enabling simultaneous performance-sustainability navigation. Case studies spanning glass, cement, semiconductor photoresists, and polymers demonstrate both necessity and feasibility while identifying material-specific integration challenges. Realizing ML-LCA demands coordinated advances in data infrastructure, ex-ante assessment methodologies, multi-objective optimization, and regulatory alignment, enabling the discovery of materials that are sustainable by design rather than by chance.

On the Equivalence of Regression and Classification

Nov 06, 2025

Abstract:A formal link between regression and classification has been tenuous. Even though the margin maximization term $\|w\|$ is used in support vector regression, it has at best been justified as a regularizer. We show that a regression problem with $M$ samples lying on a hyperplane has a one-to-one equivalence with a linearly separable classification task with $2M$ samples. We show that margin maximization on the equivalent classification task leads to a different regression formulation than the one traditionally used. Using the equivalence, we demonstrate a "regressability" measure that can be used to estimate the difficulty of regressing a dataset without first learning a model for it. We also use the equivalence to train neural networks to learn a linearizing map that transforms input variables into a space where a linear regressor is adequate.
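The abstract does not spell out how the $2M$-sample classification task is built. As an illustration only, the sketch below assumes one plausible construction: each regression sample $(x_i, y_i)$ is lifted to two points $(x_i, y_i + \epsilon)$ and $(x_i, y_i - \epsilon)$ with labels $+1$ and $-1$. The function name `regression_to_classification` and the offset `eps` are hypothetical and not taken from the paper.

```python
import numpy as np

def regression_to_classification(X, y, eps=0.5):
    """Lift M regression samples to 2M labelled points (assumed construction).

    Each (x_i, y_i) becomes (x_i, y_i + eps) with label +1 and
    (x_i, y_i - eps) with label -1 in a (d + 1)-dimensional space.
    """
    upper = np.column_stack([X, y + eps])   # points shifted above the target
    lower = np.column_stack([X, y - eps])   # points shifted below the target
    Z = np.vstack([upper, lower])
    labels = np.concatenate([np.ones(len(y)), -np.ones(len(y))])
    return Z, labels

# Toy data: M samples lying exactly on a hyperplane y = w.x + b.
rng = np.random.default_rng(0)
M, d = 50, 3
w_true = np.array([1.5, -2.0, 0.5])
b_true = 0.3
X = rng.normal(size=(M, d))
y = X @ w_true + b_true

Z, labels = regression_to_classification(X, y)

# The regression hyperplane, viewed in the lifted space, separates the classes:
# the signed offset of each lifted point from the hyperplane is +eps or -eps.
scores = Z[:, -1] - (Z[:, :-1] @ w_true + b_true)
separable = bool(np.all(np.sign(scores) == labels))
print(Z.shape, separable)
```

Under this assumed construction, the hyperplane that fits the regression data is also a separating hyperplane for the lifted points, which is the sense in which the two tasks can be related.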

Energy & Force Regression on DFT Trajectories is Not Enough for Universal Machine Learning Interatomic Potentials

Feb 05, 2025

Abstract:Universal Machine Learning Interatomic Potentials (MLIPs) enable accelerated simulations for materials discovery. However, current research efforts fail to impactfully utilize MLIPs due to: 1. Overreliance on Density Functional Theory (DFT) for MLIP training data creation; 2. MLIPs' inability to reliably and accurately perform large-scale molecular dynamics (MD) simulations for diverse materials; 3. Limited understanding of MLIPs' underlying capabilities. To address these shortcomings, we argue that MLIP research efforts should prioritize: 1. Employing more accurate simulation methods for large-scale MLIP training data creation (e.g. Coupled Cluster Theory) that cover a wide range of materials design spaces; 2. Creating MLIP metrology tools that leverage large-scale benchmarking, visualization, and interpretability analyses to provide a deeper understanding of MLIPs' inner workings; 3. Developing computationally efficient MLIPs to execute MD simulations that accurately model a broad set of materials properties. Together, these interdisciplinary research directions can help further the real-world application of MLIPs to accurately model complex materials at device scale.

CoNOAir: A Neural Operator for Forecasting Carbon Monoxide Evolution in Cities

Jan 13, 2025

Abstract:Carbon Monoxide (CO) is a dominant pollutant in urban areas owing to fossil-fuel energy generation for industrial, automotive, and domestic needs. Forecasting the evolution of CO in real time can enable the deployment of effective early warning systems and intervention strategies. However, the computational cost of physics- and chemistry-based simulation makes it prohibitive to implement such a model at the city and country scale. To address this challenge, we present a machine learning model based on a neural operator, namely, Complex Neural Operator for Air Quality (CoNOAir), that can effectively forecast CO concentrations. We demonstrate this by developing a country-level model for short-term (hourly) and long-term (72-hour) forecasts of CO concentrations. Our model outperforms state-of-the-art models such as Fourier neural operators (FNO) and provides reliable predictions for both short- and long-term forecasts. We further analyse the capability of the model to capture extreme events and generate forecasts for cities in India. Interestingly, we observe that the model predicts the next-hour CO concentrations with $R^2$ values greater than 0.95 for all the cities considered. The deployment of such a model can help governing bodies provide early warnings, plan interventions, and develop effective strategies by considering several what-if scenarios. Altogether, the present approach could provide a fillip to real-time predictions of CO pollution in cities.

Industrial-scale Prediction of Cement Clinker Phases using Machine Learning

Dec 16, 2024

Abstract:Cement production, exceeding 4.1 billion tonnes and contributing 2.4 billion tonnes of CO2 annually, faces critical challenges in quality control and process optimization. While traditional process models for cement manufacturing are confined to steady-state conditions with limited predictive capability for mineralogical phases, modern plants operate under dynamic conditions that demand real-time quality assessment. Here, exploiting a comprehensive two-year operational dataset from an industrial cement plant, we present a machine learning framework that accurately predicts clinker mineralogy from process data. Our model achieves unprecedented prediction accuracy for major clinker phases while requiring minimal input parameters, demonstrating robust performance under varying operating conditions. Through post-hoc explainable algorithms, we interpret the hierarchical relationships between clinker oxides and phase formation, providing insights into the functioning of an otherwise black-box model. This digital twin framework can potentially enable real-time optimization of cement production, thereby providing a route toward reducing material waste and ensuring quality while reducing the associated emissions under real plant conditions. Our approach represents a significant advancement in industrial process control, offering a scalable solution for sustainable cement manufacturing.

Foundational Large Language Models for Materials Research

Dec 12, 2024

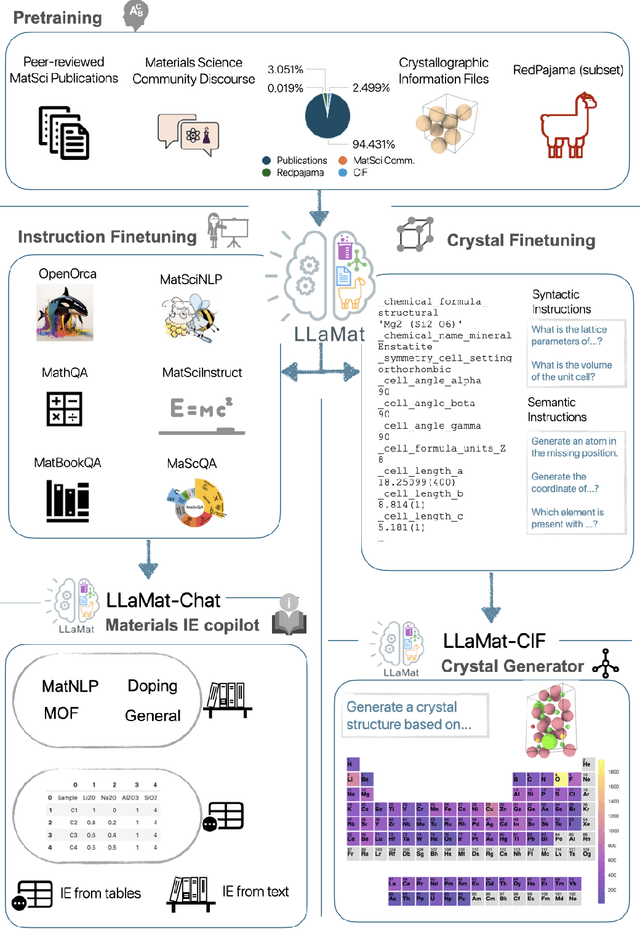

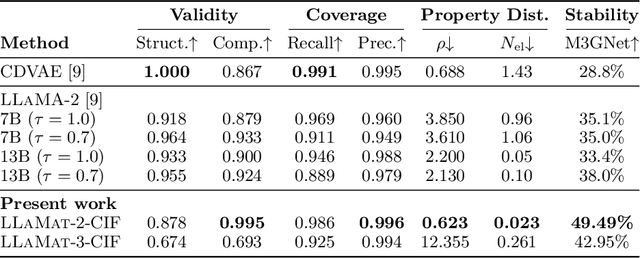

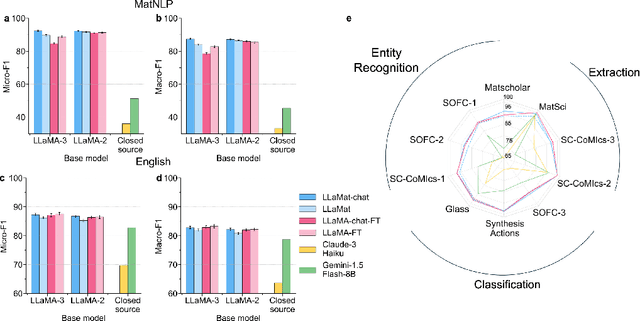

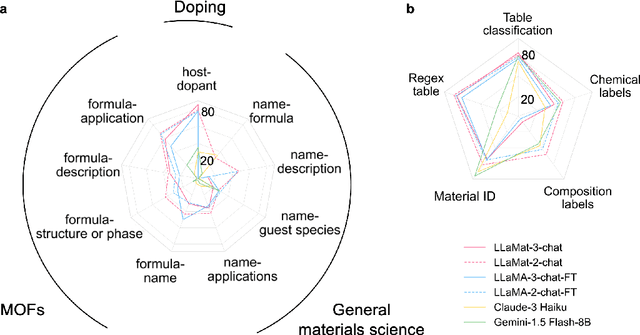

Abstract:Materials discovery and development are critical for addressing global challenges. Yet, the exponential growth in materials science literature comprising vast amounts of textual data has created significant bottlenecks in knowledge extraction, synthesis, and scientific reasoning. Large Language Models (LLMs) offer unprecedented opportunities to accelerate materials research through automated analysis and prediction. Still, their effective deployment requires domain-specific adaptation for understanding and solving domain-relevant tasks. Here, we present LLaMat, a family of foundational models for materials science developed through continued pretraining of LLaMA models on an extensive corpus of materials literature and crystallographic data. Through systematic evaluation, we demonstrate that LLaMat excels in materials-specific NLP and structured information extraction while maintaining general linguistic capabilities. The specialized LLaMat-CIF variant demonstrates unprecedented capabilities in crystal structure generation, predicting stable crystals with high coverage across the periodic table. Intriguingly, despite LLaMA-3's superior performance in comparison to LLaMA-2, we observe that LLaMat-2 demonstrates unexpectedly enhanced domain-specific performance across diverse materials science tasks, including structured information extraction from text and tables and, most notably, crystal structure generation, which suggests a potential adaptation rigidity in overtrained LLMs. Altogether, the present work demonstrates the effectiveness of domain adaptation towards developing practically deployable LLM copilots for materials research. Beyond materials science, our findings reveal important considerations for domain adaptation of LLMs, such as model selection, training methodology, and domain-specific performance, which may influence the development of specialized scientific AI systems.

Probing the limitations of multimodal language models for chemistry and materials research

Nov 25, 2024

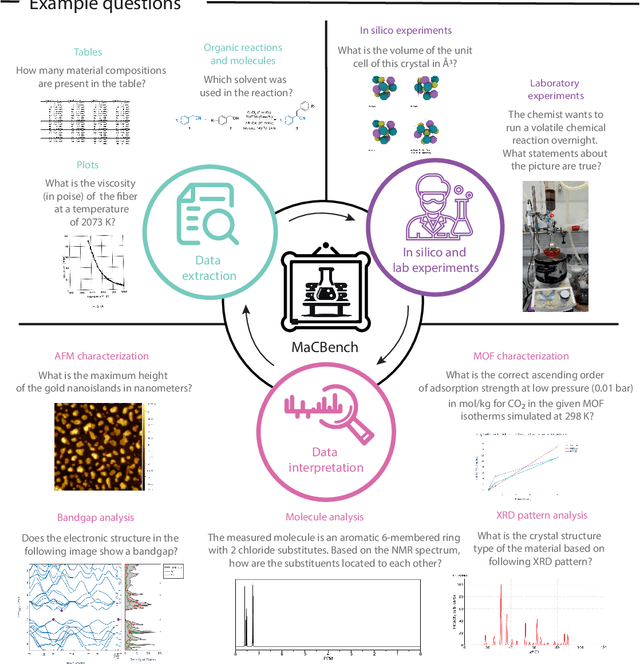

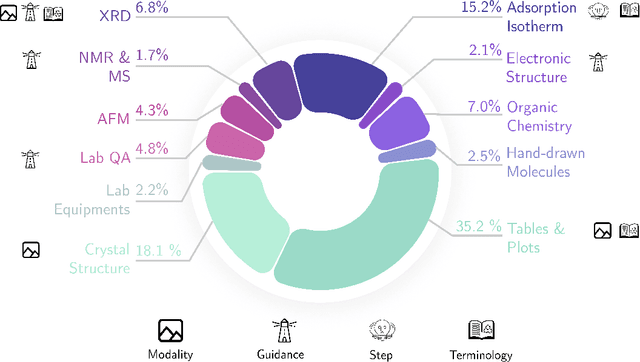

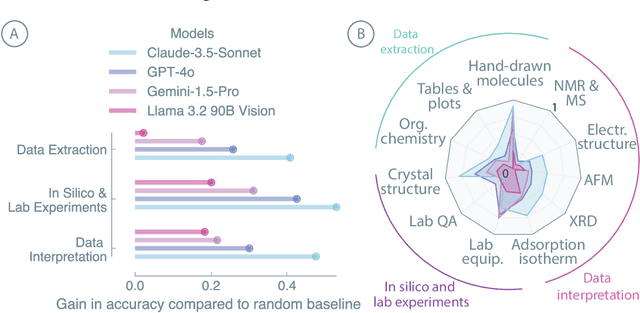

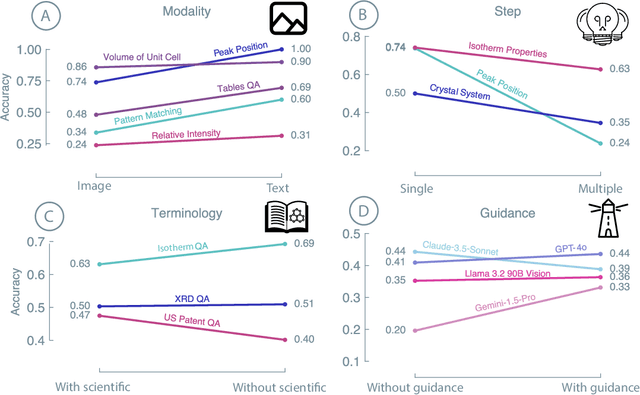

Abstract:Recent advancements in artificial intelligence have sparked interest in scientific assistants that could support researchers across the full spectrum of scientific workflows, from literature review to experimental design and data analysis. A key capability for such systems is the ability to process and reason about scientific information in both visual and textual forms - from interpreting spectroscopic data to understanding laboratory setups. Here, we introduce MaCBench, a comprehensive benchmark for evaluating how vision-language models handle real-world chemistry and materials science tasks across three core aspects: data extraction, experimental understanding, and results interpretation. Through a systematic evaluation of leading models, we find that while these systems show promising capabilities in basic perception tasks - achieving near-perfect performance in equipment identification and standardized data extraction - they exhibit fundamental limitations in spatial reasoning, cross-modal information synthesis, and multi-step logical inference. Our insights have important implications beyond chemistry and materials science, suggesting that developing reliable multimodal AI scientific assistants may require advances in curating suitable training data and approaches to training those models.

TAGMol: Target-Aware Gradient-guided Molecule Generation

Jun 03, 2024

Abstract:3D generative models have shown significant promise in structure-based drug design (SBDD), particularly in discovering ligands tailored to specific target binding sites. Existing algorithms often focus primarily on ligand-target binding, characterized by binding affinity. Moreover, models trained solely on target-ligand distribution may fall short in addressing the broader objectives of drug discovery, such as the development of novel ligands with desired properties like drug-likeness and synthesizability, underscoring the multifaceted nature of the drug design process. To overcome these challenges, we decouple the problem into molecular generation and property prediction. The latter guides the diffusion sampling process, resulting in the creation of meaningful molecules with the desired properties. We call this guided molecular generation process TAGMol. Through experiments on benchmark datasets, TAGMol demonstrates superior performance compared to state-of-the-art baselines, achieving a 22% improvement in average Vina Score and yielding favorable outcomes in essential auxiliary properties. This establishes TAGMol as a comprehensive framework for drug generation.

MaScQA: A Question Answering Dataset for Investigating Materials Science Knowledge of Large Language Models

Aug 17, 2023

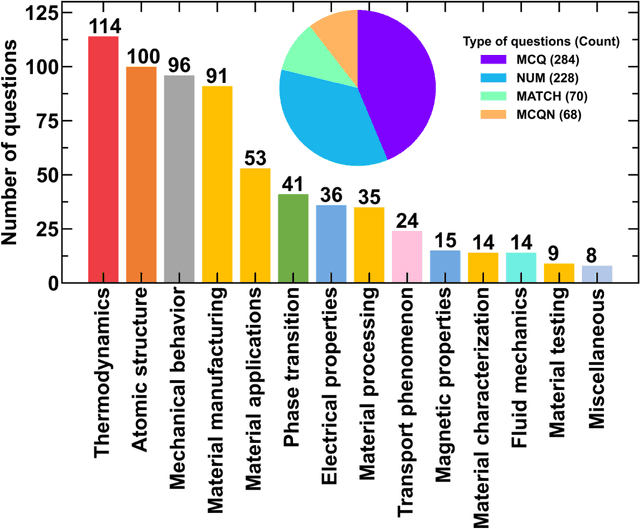

Abstract:Information extraction and textual comprehension from materials literature are vital for developing an exhaustive knowledge base that enables accelerated materials discovery. Language models have demonstrated their capability to answer domain-specific questions and retrieve information from knowledge bases. However, there are no benchmark datasets in the materials domain that can evaluate the understanding of the key concepts by these language models. In this work, we curate a dataset of 650 challenging questions from the materials domain that require the knowledge and skills of a materials student who has cleared their undergraduate degree. We classify these questions based on their structure and the materials science domain-based subcategories. Further, we evaluate the performance of GPT-3.5 and GPT-4 models on solving these questions via zero-shot and chain of thought prompting. It is observed that GPT-4 gives the best performance (~62% accuracy) as compared to GPT-3.5. Interestingly, in contrast to the general observation, no significant improvement in accuracy is observed with the chain of thought prompting. To evaluate the limitations, we performed an error analysis, which revealed conceptual errors (~64%) as the major contributor compared to computational errors (~36%) towards the reduced performance of LLMs. We hope that the dataset and analysis performed in this work will promote further research in developing better materials science domain-specific LLMs and strategies for information extraction.

Graph Neural Stochastic Differential Equations for Learning Brownian Dynamics

Jun 20, 2023

Abstract:Neural networks (NNs) that exploit strong inductive biases based on physical laws and symmetries have shown remarkable success in learning the dynamics of physical systems directly from their trajectory. However, these works focus only on the systems that follow deterministic dynamics, for instance, Newtonian or Hamiltonian dynamics. Here, we propose a framework, namely Brownian graph neural networks (BROGNET), combining stochastic differential equations (SDEs) and GNNs to learn Brownian dynamics directly from the trajectory. We theoretically show that BROGNET conserves the linear momentum of the system, which in turn, provides superior performance on learning dynamics as revealed empirically. We demonstrate this approach on several systems, namely, linear spring, linear spring with binary particle types, and non-linear spring systems, all following Brownian dynamics at finite temperatures. We show that BROGNET significantly outperforms proposed baselines across all the benchmarked Brownian systems. In addition, we demonstrate zero-shot generalizability of BROGNET to simulate unseen system sizes that are two orders of magnitude larger and to different temperatures than those used during training. Altogether, our study contributes to advancing the understanding of the intricate dynamics of Brownian motion and demonstrates the effectiveness of graph neural networks in modeling such complex systems.
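For readers unfamiliar with the kind of trajectory data such a model would learn from, here is a minimal sketch of Brownian dynamics for a linear spring, integrated with the standard Euler-Maruyama scheme. It is not BROGNET and not the authors' simulation setup: it assumes independent particles in a one-dimensional harmonic trap (overdamped Langevin dynamics), and the function name and all parameter values are illustrative.

```python
import numpy as np

def simulate_brownian_spring(n_particles=20000, n_steps=1000, dt=0.01,
                             k=1.0, gamma=1.0, kT=1.0, seed=0):
    """Euler-Maruyama integration of overdamped Langevin dynamics.

    SDE: dx = -(k / gamma) * x * dt + sqrt(2 * kT / gamma) * dW
    Each particle is an independent 1D harmonic oscillator (assumed toy system).
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_particles)                      # all particles start at the origin
    noise_scale = np.sqrt(2.0 * kT * dt / gamma)   # std of the random kick per step
    for _ in range(n_steps):
        force = -k * x                             # linear spring restoring force
        x = x + (force / gamma) * dt + noise_scale * rng.standard_normal(n_particles)
    return x

positions = simulate_brownian_spring()

# At equilibrium the position variance should approach kT / k (equipartition),
# up to small time-discretization and sampling errors.
print(positions.var())
```

The deterministic drift term plays the role that a learned force model would play in a neural SDE, while the noise term sets the temperature; comparing the sampled variance against `kT / k` is a quick sanity check on the integrator.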
