Manuel López-Ibáñez

University of Málaga, Spain

Transfer Learning of Surrogate Models: Integrating Domain Warping and Affine Transformations

Jan 30, 2025

Abstract:Surrogate models provide efficient alternatives to computationally demanding real-world processes but often require large datasets for effective training. A promising solution to this limitation is the transfer of pre-trained surrogate models to new tasks. Previous studies have investigated the transfer of differentiable and non-differentiable surrogate models, typically assuming an affine transformation between the source and target functions. This paper extends previous research by addressing a broader range of transformations, including linear and nonlinear variations. Specifically, we consider the combination of an unknown input warping, such as one modelled by the beta cumulative distribution function, with an unspecified affine transformation. Our approach achieves transfer learning by employing a limited number of data points from the target task to optimize these transformations, minimizing empirical loss on the transfer dataset. We validate the proposed method on the widely used Black-Box Optimization Benchmark (BBOB) testbed and a real-world transfer learning task from the automobile industry. The results underscore the significant advantages of the approach, revealing that the transferred surrogate significantly outperforms both the original surrogate and the one built from scratch using the transfer dataset, particularly in data-scarce scenarios.

Transfer Learning of Surrogate Models via Domain Affine Transformation Across Synthetic and Real-World Benchmarks

Jan 23, 2025

Abstract:Surrogate models are frequently employed as efficient substitutes for the costly execution of real-world processes. However, constructing a high-quality surrogate model often demands extensive data acquisition. A solution to this issue is to transfer pre-trained surrogate models to new tasks, provided that certain invariances exist between tasks. This study focuses on transferring non-differentiable surrogate models (e.g., random forest) from a source function to a target function, where we assume their domains are related by an unknown affine transformation, using only a limited number of transfer data points evaluated on the target. Previous research has tackled this challenge for differentiable models, e.g., Gaussian process regression, by tuning the affine transformation to minimize the empirical loss on the transfer data. In this paper, we extend the previous work to the random forest model and assess its effectiveness on a widely used artificial problem set, the Black-Box Optimization Benchmark (BBOB) testbed, and on four real-world transfer learning problems. The results highlight the significant practical advantages of the proposed method, particularly in reducing both the data requirements and computational costs of training surrogate models for complex real-world scenarios.
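
The idea of tuning an affine transformation of the domain to minimize the empirical loss on a few transfer points can be illustrated with a minimal one-dimensional sketch. Everything here is an assumption made for illustration: `source_surrogate` stands in for a pre-trained model, `target_function` is a made-up target, and the plain random search in `fit_affine` is only a simple derivative-free placeholder, not the optimizer used in the paper.

```python
import random

def source_surrogate(x):
    # Hypothetical stand-in for a pre-trained surrogate of the source function.
    return (x - 1.0) ** 2

def target_function(x):
    # Hypothetical target: the source evaluated on an affinely transformed input.
    return source_surrogate(2.0 * x + 0.5)

def transfer_loss(w, b, data):
    # Mean squared error of the transferred surrogate x -> source(w * x + b).
    return sum((source_surrogate(w * x + b) - y) ** 2 for x, y in data) / len(data)

def fit_affine(data, n_samples=5000, seed=0):
    # Derivative-free random search over (w, b); assumed here because the
    # surrogate may be non-differentiable (e.g., a random forest).
    rng = random.Random(seed)
    best, best_loss = (1.0, 0.0), transfer_loss(1.0, 0.0, data)
    for _ in range(n_samples):
        w, b = rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0)
        loss = transfer_loss(w, b, data)
        if loss < best_loss:
            best, best_loss = (w, b), loss
    return best, best_loss

# A small transfer dataset evaluated on the target function.
transfer_data = [(x, target_function(x)) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
baseline_loss = transfer_loss(1.0, 0.0, transfer_data)  # untransformed surrogate
(w, b), loss = fit_affine(transfer_data)
```

Because the search starts from the identity transformation and only accepts improvements, the transferred surrogate can never fit the transfer data worse than the original one.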

Using the Empirical Attainment Function for Analyzing Single-objective Black-box Optimization Algorithms

Apr 02, 2024

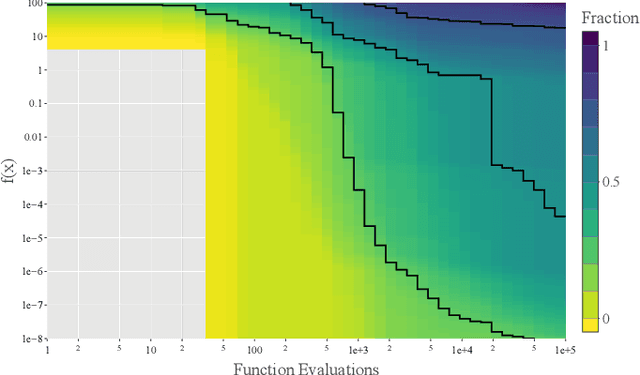

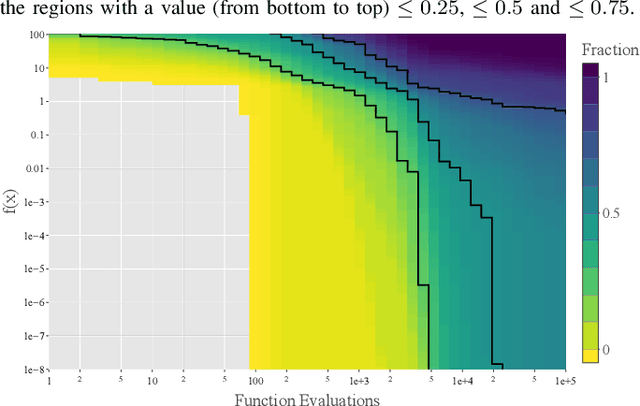

Abstract:A widely accepted way to assess the performance of iterative black-box optimizers is to analyze their empirical cumulative distribution function (ECDF) of pre-defined quality targets achieved not later than a given runtime. In this work, we consider an alternative approach, based on the empirical attainment function (EAF) and we show that the target-based ECDF is an approximation of the EAF. We argue that the EAF has several advantages over the target-based ECDF. In particular, it does not require defining a priori quality targets per function, captures performance differences more precisely, and enables the use of additional summary statistics that enrich the analysis. We also show that the average area over the convergence curves is a simpler-to-calculate, but equivalent, measure of anytime performance. To facilitate the accessibility of the EAF, we integrate a module to compute it into the IOHanalyzer platform. Finally, we illustrate the use of the EAF via synthetic examples and via the data available for the BBOB suite.
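
For reference, the target-based ECDF described above can be sketched in a few lines. This is a minimal illustration assuming minimization, runs of equal length, and made-up data; the function names are hypothetical and this is not the IOHanalyzer implementation.

```python
def best_so_far(values):
    # Convert raw evaluations of one run into its best-so-far trajectory.
    trajectory, best = [], float("inf")
    for v in values:
        best = min(best, v)
        trajectory.append(best)
    return trajectory

def target_ecdf(runs, targets, budget):
    # Fraction of (run, target) pairs attained not later than each runtime t.
    curves = [best_so_far(r) for r in runs]
    total = len(curves) * len(targets)
    return [
        sum(1 for c in curves for tau in targets if c[t] <= tau) / total
        for t in range(budget)
    ]

# Two illustrative runs of four evaluations each and two quality targets.
runs = [[8.0, 3.0, 5.0, 0.5], [9.0, 9.0, 2.0, 2.0]]
targets = [10.0, 1.0]
ecdf = target_ecdf(runs, targets, budget=4)
print(ecdf)
```

The result depends on the chosen `targets`, which is the a priori choice that the EAF-based analysis avoids.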

Analysis of modular CMA-ES on strict box-constrained problems in the SBOX-COST benchmarking suite

May 24, 2023

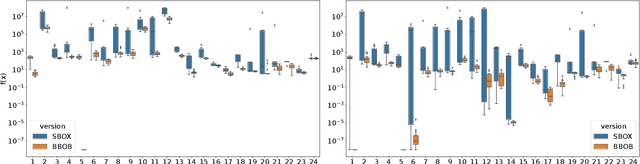

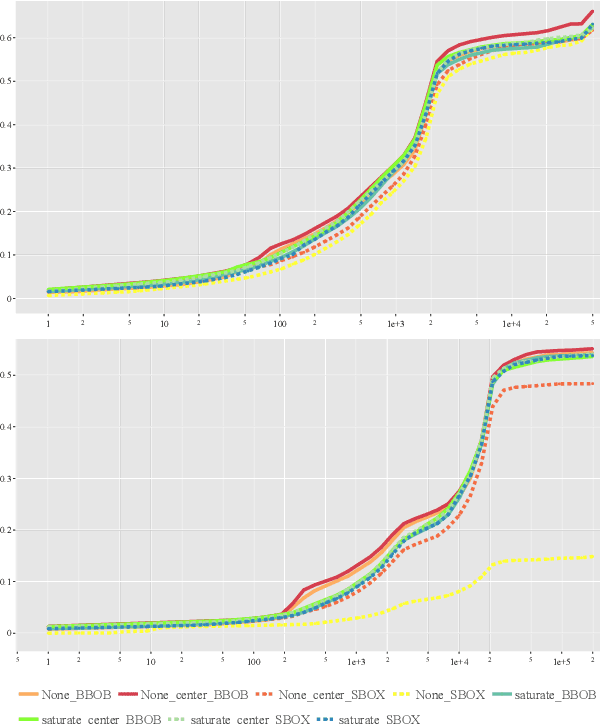

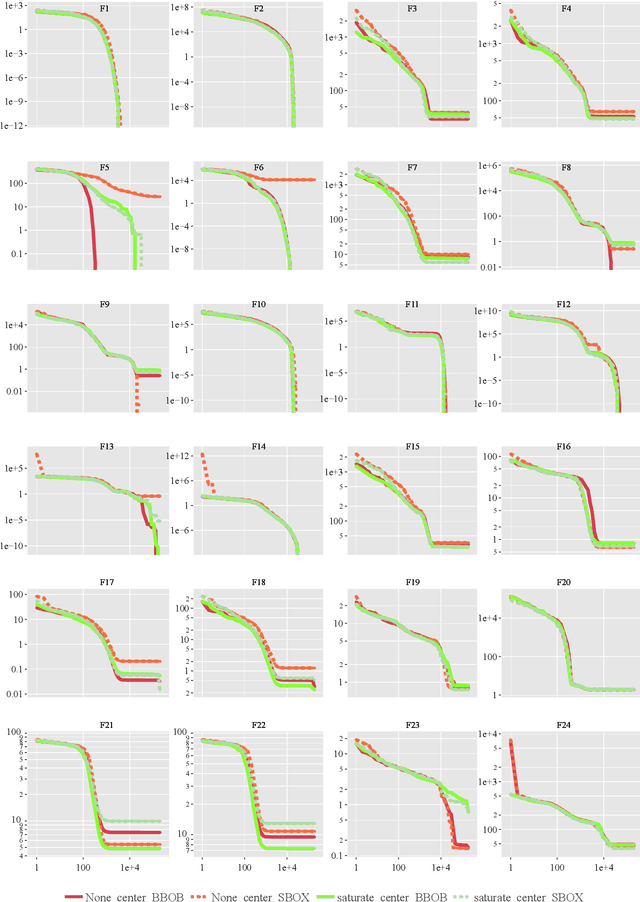

Abstract:Box-constraints limit the domain of decision variables and are common in real-world optimization problems, for example, due to physical, natural or spatial limitations. Consequently, solutions violating a box-constraint may not be evaluable. This assumption is often ignored in the literature, e.g., existing benchmark suites, such as COCO/BBOB, allow the optimizer to evaluate infeasible solutions. This paper presents an initial study on the strict-box-constrained benchmarking suite (SBOX-COST), which is a variant of the well-known BBOB benchmark suite that enforces box-constraints by returning an invalid evaluation value for infeasible solutions. Specifically, we want to understand the performance difference between BBOB and SBOX-COST as a function of two initialization methods and six constraint-handling strategies all tested with modular CMA-ES. We find that, contrary to what may be expected, handling box-constraints by saturation is not always better than not handling them at all. However, across all BBOB functions, saturation is better than not handling, and the difference increases with the number of dimensions. Strictly enforcing box-constraints also has a clear negative effect on the performance of classical CMA-ES (with uniform random initialization and no constraint handling), especially as problem dimensionality increases.
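
The two ingredients mentioned above, strict enforcement of box-constraints and repair by saturation, can be sketched as follows. This is an illustrative sketch only: using `math.inf` as the invalid evaluation value, the `sphere` function, and the bounds are assumptions, not details taken from SBOX-COST or modular CMA-ES.

```python
import math

def saturate(x, lower, upper):
    # Saturation: clip each coordinate to its nearest bound.
    return [min(max(xi, lo), hi) for xi, lo, hi in zip(x, lower, upper)]

def strict_box(f, lower, upper, invalid=math.inf):
    # Wrap f so that infeasible points return an invalid value instead of
    # being evaluated (the choice of math.inf is an assumption).
    def wrapped(x):
        feasible = all(lo <= xi <= hi for xi, lo, hi in zip(x, lower, upper))
        return f(x) if feasible else invalid
    return wrapped

def sphere(x):
    # Simple illustrative objective.
    return sum(xi * xi for xi in x)

lower, upper = [-5.0, -5.0], [5.0, 5.0]
f = strict_box(sphere, lower, upper)

candidate = [6.0, -1.0]                       # violates the first bound
repaired = saturate(candidate, lower, upper)  # [5.0, -1.0]
print(f(candidate), f(repaired))
```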

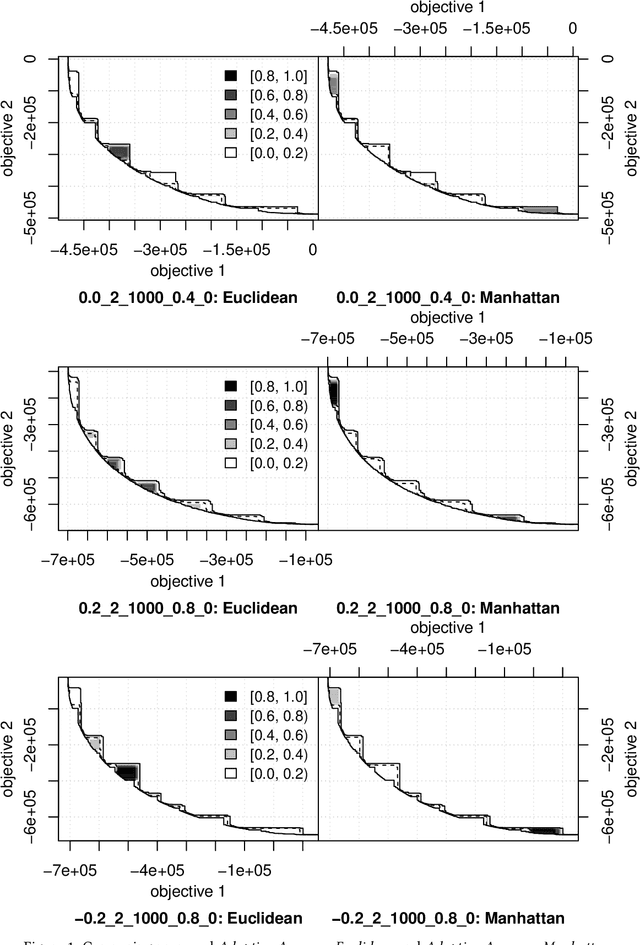

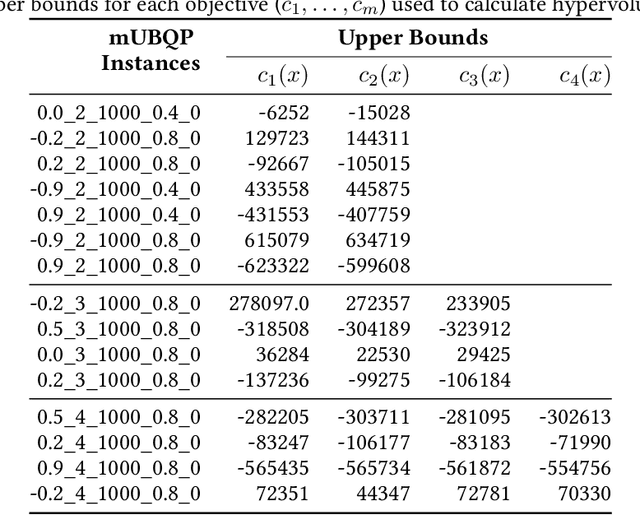

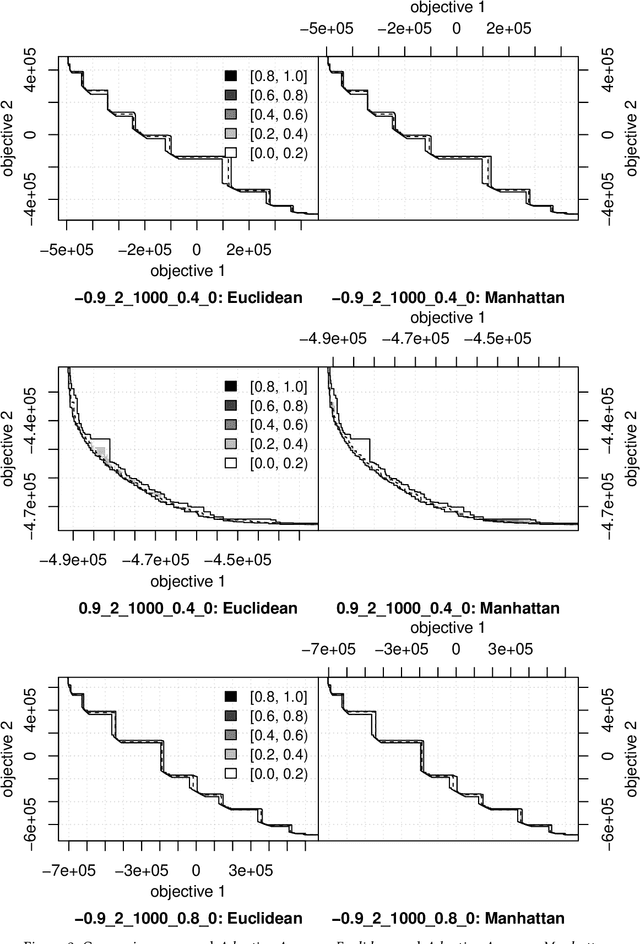

Applying Ising Machines to Multi-objective QUBOs

May 19, 2023

Abstract:Multi-objective optimisation problems involve finding solutions with varying trade-offs between multiple and often conflicting objectives. Ising machines are physical devices that aim to find the absolute or approximate ground states of an Ising model. To apply Ising machines to multi-objective problems, a weighted sum objective function is used to convert multi-objective into single-objective problems. However, deriving scalarisation weights that achieve evenly distributed solutions across the Pareto front is not trivial. Previous work has shown that adaptive weights based on dichotomic search, and one based on averages of previously explored weights can explore the Pareto front quicker than uniformly generated weights. However, these adaptive methods have only been applied to bi-objective problems in the past. In this work, we extend the adaptive method based on averages in two ways: (i) we extend the adaptive method of deriving scalarisation weights for problems with two or more objectives, and (ii) we use an alternative measure of distance to improve performance. We compare the proposed method with existing ones and show that it leads to the best performance on multi-objective Unconstrained Binary Quadratic Programming (mUBQP) instances with 3 and 4 objectives and that it is competitive with the best one for instances with 2 objectives.
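
A weighted-sum scalarisation of a multi-objective QUBO can be sketched as below. The matrices and weights are made up, minimization is assumed, and `brute_force_min` is only a hypothetical stand-in for the single-objective solver (an Ising machine in the paper); the adaptive weight-derivation methods themselves are not shown.

```python
from itertools import product

def qubo_value(Q, x):
    # Objective value x^T Q x for a binary vector x.
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))

def scalarise(Qs, weights):
    # Weighted sum of the objective matrices gives one single-objective QUBO.
    n = len(Qs[0])
    return [
        [sum(w * Q[i][j] for w, Q in zip(weights, Qs)) for j in range(n)]
        for i in range(n)
    ]

def brute_force_min(Q):
    # Exhaustive search; a stand-in for the solver, feasible only for tiny n.
    return min(product((0, 1), repeat=len(Q)), key=lambda x: qubo_value(Q, x))

# Two conflicting illustrative objectives over two binary variables.
Q1 = [[-1, 0], [0, 2]]
Q2 = [[2, 0], [0, -1]]

for weights in [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0)]:
    x = brute_force_min(scalarise([Q1, Q2], weights))
    print(weights, x, (qubo_value(Q1, x), qubo_value(Q2, x)))
```

Each weight vector yields one solution, so how the weights are chosen determines how well the resulting solutions cover the Pareto front.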

Multi-Objective Archiving

Mar 16, 2023

Abstract:Most multi-objective optimisation algorithms maintain an archive explicitly or implicitly during their search. Such an archive can be solely used to store high-quality solutions presented to the decision maker, but in many cases may participate in the search process (e.g., as the population in evolutionary computation). Over the last two decades, archiving, the process of comparing new solutions with previous ones and deciding how to update the archive/population, has stood as an important issue in evolutionary multi-objective optimisation (EMO). This is evidenced by constant efforts from the community on developing various effective archiving methods, ranging from conventional Pareto-based methods to more recent indicator-based and decomposition-based ones. However, the focus of these efforts is on empirical performance comparison in terms of specific quality indicators; there is a lack of systematic study of archiving methods from a general theoretical perspective. In this paper, we attempt to conduct a systematic overview of multi-objective archiving, in the hope of paving the way to understand archiving algorithms from a holistic perspective of theory and practice, and more importantly providing guidance on how to design theoretically desirable and practically useful archiving algorithms. In doing so, we also show that archiving algorithms based on weakly Pareto compliant indicators (e.g., epsilon-indicator), as long as designed properly, can achieve the same theoretical desirables as archivers based on Pareto compliant indicators (e.g., hypervolume indicator). Such desirables include the property limit-optimal, the limit form of the possible optimal property that a bounded archiving algorithm can have with respect to the most general form of superiority between solution sets.
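
As a point of reference for what archiving means in practice, the simplest Pareto-based archiver can be sketched as follows. This is a minimal illustration assuming minimization and an unbounded archive; the bounded, indicator-based, and decomposition-based archivers discussed in the abstract add a truncation rule that is not shown here.

```python
def dominates(a, b):
    # a Pareto-dominates b: no worse in every objective, better in at least one.
    return all(ai <= bi for ai, bi in zip(a, b)) and any(
        ai < bi for ai, bi in zip(a, b)
    )

def update_archive(archive, new):
    # Reject the new point if it is dominated by, or equal to, an archived one.
    if any(dominates(old, new) or old == new for old in archive):
        return archive
    # Otherwise add it and drop every archived point it dominates.
    return [old for old in archive if not dominates(new, old)] + [new]

archive = []
for point in [(3, 3), (1, 4), (2, 2), (4, 1), (2, 2)]:
    archive = update_archive(archive, point)
print(archive)
```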

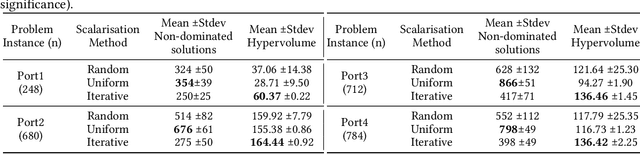

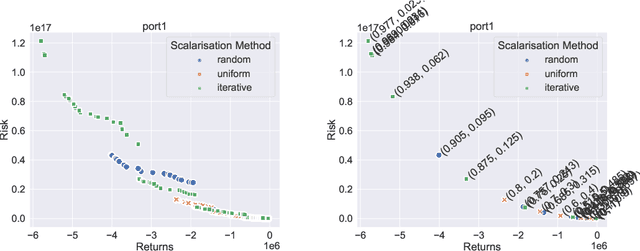

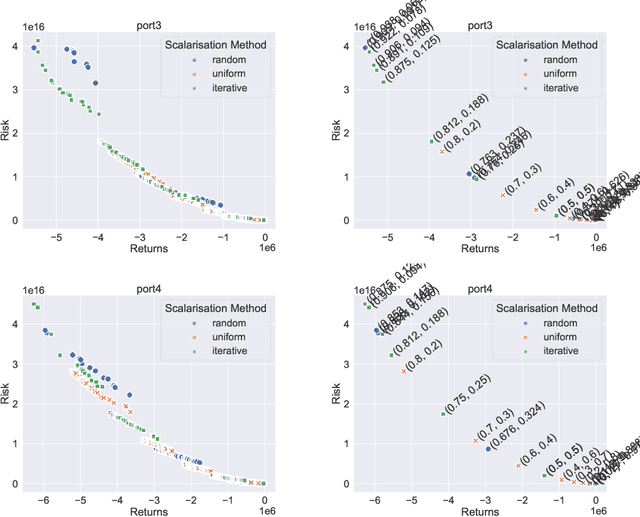

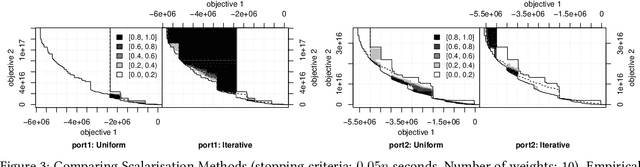

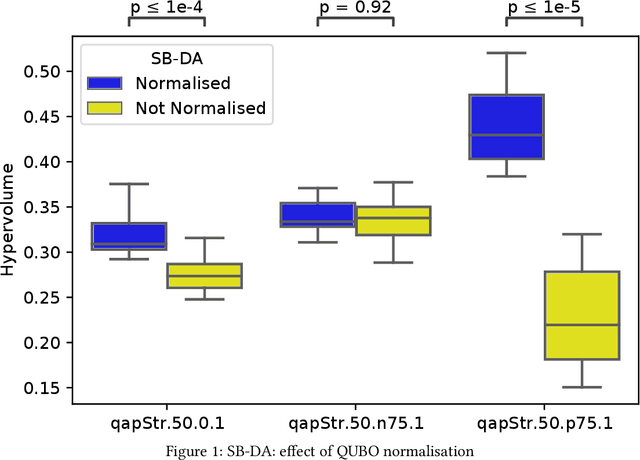

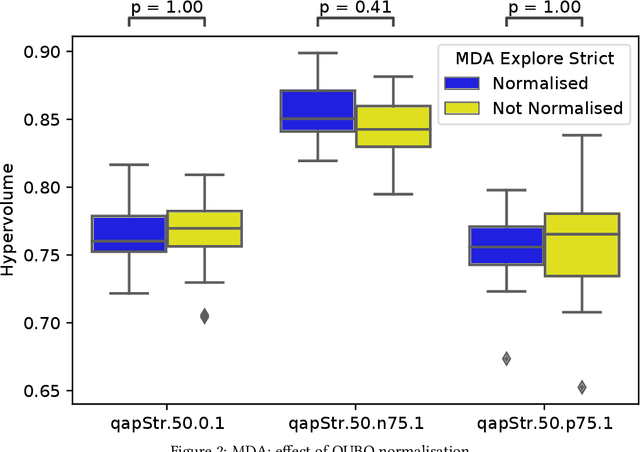

A Study of Scalarisation Techniques for Multi-Objective QUBO Solving

Oct 20, 2022

Abstract:In recent years, there has been significant research interest in solving Quadratic Unconstrained Binary Optimisation (QUBO) problems. Physics-inspired optimisation algorithms have been proposed for deriving optimal or sub-optimal solutions to QUBOs. These methods are particularly attractive within the context of using specialised hardware, such as quantum computers, application-specific CMOS and other high performance computing resources for solving optimisation problems. These solvers are then applied to QUBO formulations of combinatorial optimisation problems. Quantum and quantum-inspired optimisation algorithms have shown promising performance when applied to academic benchmarks as well as real-world problems. However, QUBO solvers are single objective solvers. To make them more efficient at solving problems with multiple objectives, a decision on how to convert such multi-objective problems to single-objective problems needs to be made. In this study, we compare methods of deriving scalarisation weights when combining two objectives of the cardinality constrained mean-variance portfolio optimisation problem into one. We show significant performance improvement (measured in terms of hypervolume) when using a method that iteratively fills the largest space in the Pareto front compared to a naïve approach using uniformly generated weights.

Improving Nevergrad's Algorithm Selection Wizard NGOpt through Automated Algorithm Configuration

Sep 09, 2022

Abstract:Algorithm selection wizards are effective and versatile tools that automatically select an optimization algorithm given high-level information about the problem and available computational resources, such as number and type of decision variables, maximal number of evaluations, possibility to parallelize evaluations, etc. State-of-the-art algorithm selection wizards are complex and difficult to improve. We propose in this work the use of automated configuration methods for improving their performance by finding better configurations of the algorithms that compose them. In particular, we use elitist iterated racing (irace) to find CMA configurations for specific artificial benchmarks that replace the hand-crafted CMA configurations currently used in the NGOpt wizard provided by the Nevergrad platform. We discuss in detail the setup of irace for the purpose of generating configurations that work well over the diverse set of problem instances within each benchmark. Our approach improves the performance of the NGOpt wizard, even on benchmark suites that were not part of the tuning by irace.

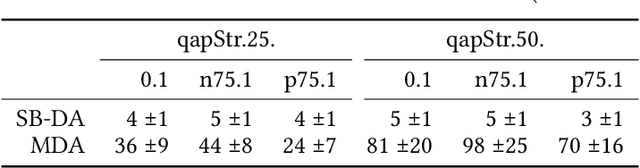

Multi-objective QUBO Solver: Bi-objective Quadratic Assignment

May 26, 2022

Abstract:Quantum and quantum-inspired optimisation algorithms are designed to solve problems represented in binary, quadratic and unconstrained form. Combinatorial optimisation problems are therefore often formulated as Quadratic Unconstrained Binary Optimisation Problems (QUBO) to solve them with these algorithms. Moreover, these QUBO solvers are often implemented using specialised hardware to achieve enormous speedups, e.g. Fujitsu's Digital Annealer (DA) and D-Wave's Quantum Annealer. However, these are single-objective solvers, while many real-world problems feature multiple conflicting objectives. Thus, a common practice when using these QUBO solvers is to scalarise such multi-objective problems into a sequence of single-objective problems. Due to design trade-offs of these solvers, formulating each scalarisation may require more time than finding a local optimum. We present the first attempt to extend the algorithm supporting a commercial QUBO solver as a multi-objective solver that is not based on scalarisation. The proposed multi-objective DA algorithm is validated on the bi-objective Quadratic Assignment Problem. We observe that algorithm performance significantly depends on the archiving strategy adopted, and that combining DA with non-scalarisation methods to optimise multiple objectives outperforms the current scalarised version of the DA in terms of final solution quality.

Are Evolutionary Algorithms Safe Optimizers?

Mar 24, 2022

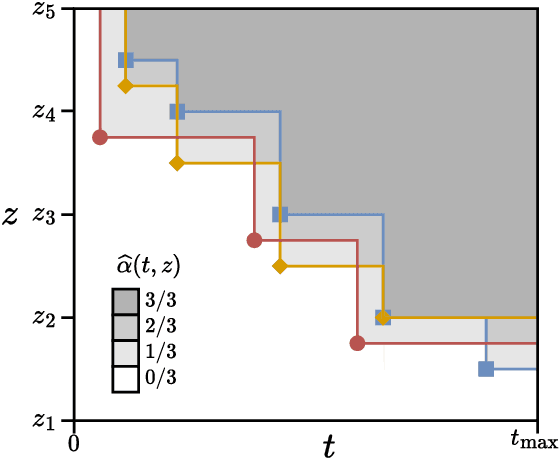

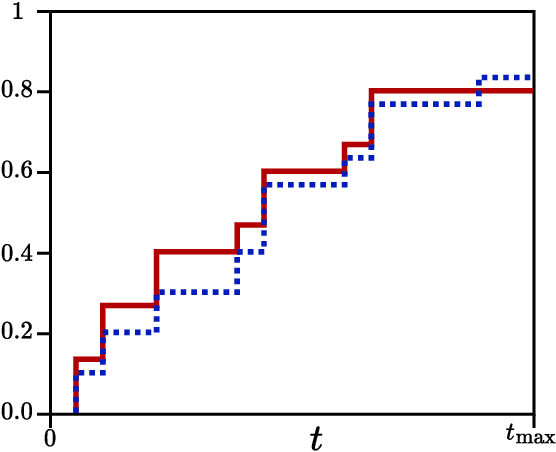

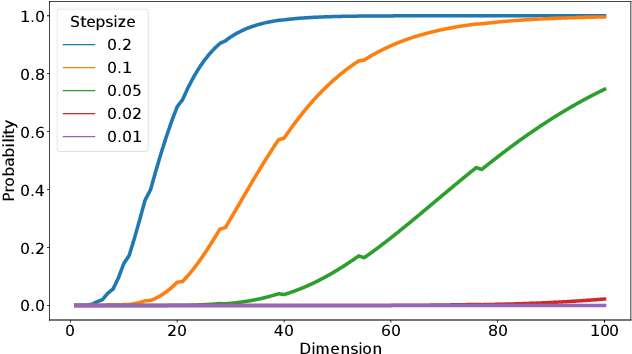

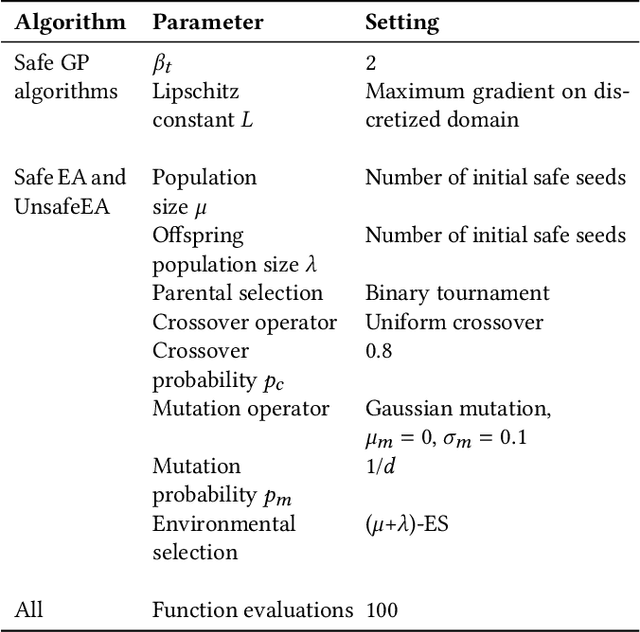

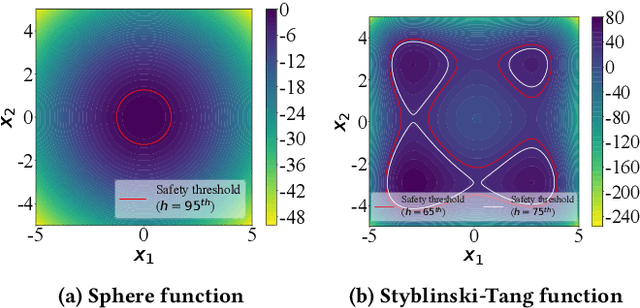

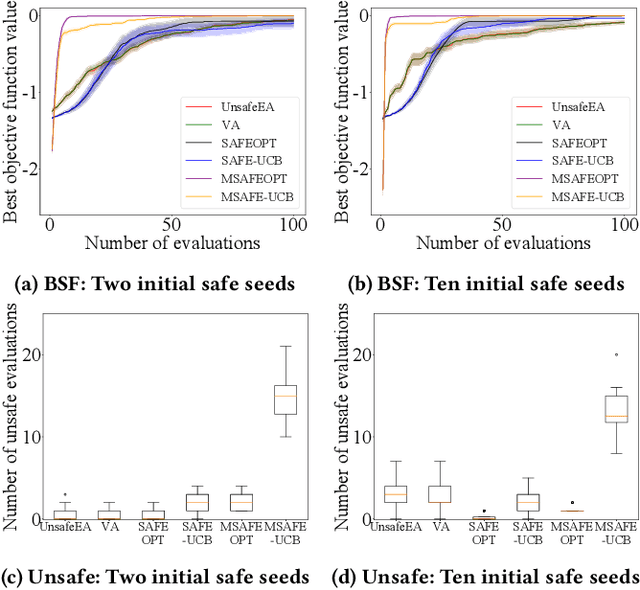

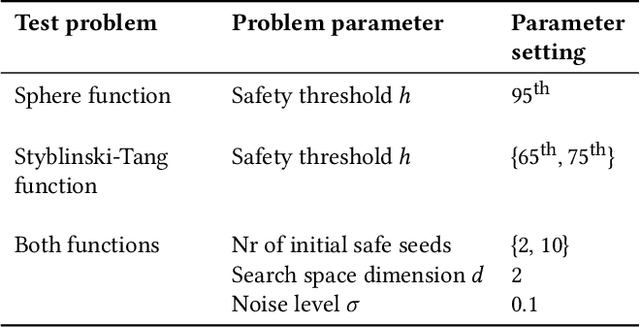

Abstract:We consider a type of constrained optimization problem, where the violation of a constraint leads to an irrevocable loss, such as breakage of a valuable experimental resource/platform or loss of human life. Such problems are referred to as safe optimization problems (SafeOPs). While SafeOPs have received attention in the machine learning community in recent years, there has been little interest in the evolutionary computation (EC) community despite some early attempts between 2009 and 2011. Moreover, there is a lack of acceptable guidelines on how to benchmark different algorithms for SafeOPs, an area in which the EC community has significant experience. Driven by the need for more efficient algorithms and benchmark guidelines for SafeOPs, the objective of this paper is to reignite the interest of this problem class in the EC community. To achieve this we (i) provide a formal definition of SafeOPs and contrast it to other types of optimization problems that the EC community is familiar with, (ii) investigate the impact of key SafeOP parameters on the performance of selected safe optimization algorithms, (iii) benchmark EC against state-of-the-art safe optimization algorithms from the machine learning community, and (iv) provide an open-source Python framework to replicate and extend our work.
