Olaf Mersmann

DOLPHIN

Benchmarking that Matters: Rethinking Benchmarking for Practical Impact

Nov 15, 2025

Abstract:Benchmarking has driven scientific progress in Evolutionary Computation, yet current practices fall short of real-world needs. Widely used synthetic suites such as BBOB and CEC isolate algorithmic phenomena but poorly reflect the structure, constraints, and information limitations of continuous and mixed-integer optimization problems in practice. This disconnect leads to the misuse of benchmarking suites for competitions, automated algorithm selection, and industrial decision-making, despite these suites being designed for different purposes. We identify key gaps in current benchmarking practices and tooling, including limited availability of real-world-inspired problems, missing high-level features, and challenges in multi-objective and noisy settings. We propose a vision centered on curated real-world-inspired benchmarks, practitioner-accessible feature spaces and community-maintained performance databases. Real progress requires coordinated effort: A living benchmarking ecosystem that evolves with real-world insights and supports both scientific understanding and industrial use.

Bed-Attached Vibration Sensor System: A Machine Learning Approach for Fall Detection in Nursing Homes

Dec 06, 2024

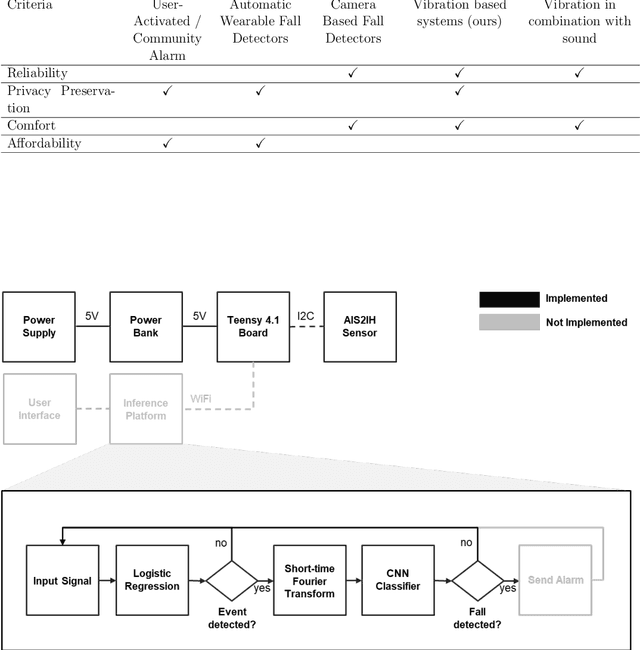

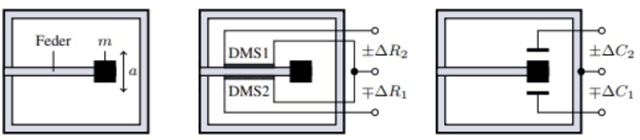

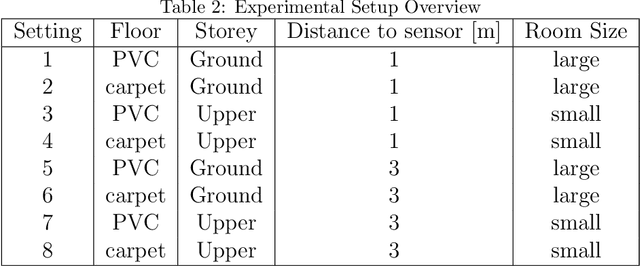

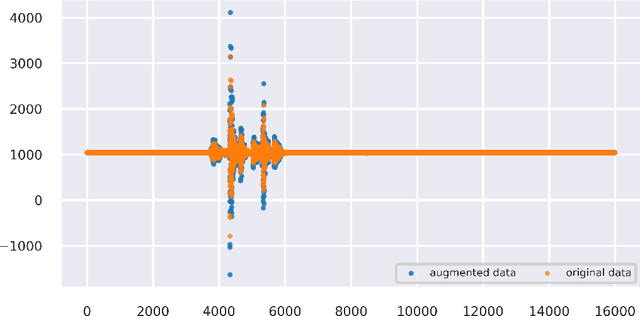

Abstract:The increasing shortage of nursing staff and the acute risk of falls in nursing homes pose significant challenges for the healthcare system. This study presents the development of an automated fall detection system integrated into care beds, aimed at enhancing patient safety without compromising privacy through wearables or video monitoring. Mechanical vibrations transmitted through the bed frame are processed using a short-time Fourier transform, enabling robust classification of distinct human fall patterns with a convolutional neural network. Challenges pertaining to the quantity and diversity of the data are addressed, proposing the generation of additional data with a specific emphasis on enhancing variation. While the model shows promising results in distinguishing fall events from noise using lab data, further testing in real-world environments is recommended for validation and improvement. Despite limited available data, the proposed system shows the potential for an accurate and rapid response to falls, mitigating health implications, and addressing the needs of an aging population. This case study was performed as part of the ZIM Project. Further research on sensors enhanced by artificial intelligence will be continued in the ShapeFuture Project.
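To illustrate the preprocessing step named in this abstract, here is a minimal sketch of a short-time Fourier transform that turns a one-dimensional vibration signal into a time-frequency magnitude map, the kind of input a convolutional network could classify. The function name `stft_magnitude`, the Hann window, the frame length, and the hop size are all assumptions made for illustration and are not taken from the paper. The naive DFT is used only to keep the example dependency-free; a practical pipeline would use an FFT routine.

```python
import cmath
import math


def stft_magnitude(signal, frame_len, hop):
    """Return one magnitude spectrum (bins 0..frame_len//2) per frame.

    Hypothetical helper: window type, frame length and hop size are
    illustrative choices, not the settings used in the paper.
    """
    # Periodic Hann window to reduce spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        segment = [signal[start + n] * window[n] for n in range(frame_len)]
        spectrum = []
        for k in range(frame_len // 2 + 1):
            # Naive DFT of the windowed segment at frequency bin k.
            acc = sum(segment[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


# Synthetic test signal: a sinusoid that falls exactly on bin 4 of a 32-sample frame.
test_signal = [math.sin(2 * math.pi * 4 * n / 32) for n in range(128)]
spectrogram = stft_magnitude(test_signal, frame_len=32, hop=16)
peak_bins = [frame.index(max(frame)) for frame in spectrogram]
```

Each row of `spectrogram` is one time frame, so the list of rows can be treated as a two-dimensional image for a downstream classifier.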

Analysis of modular CMA-ES on strict box-constrained problems in the SBOX-COST benchmarking suite

May 24, 2023

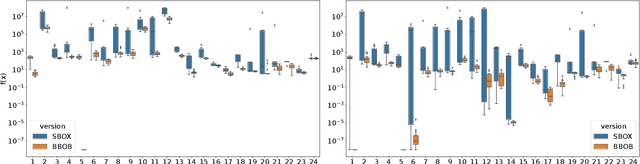

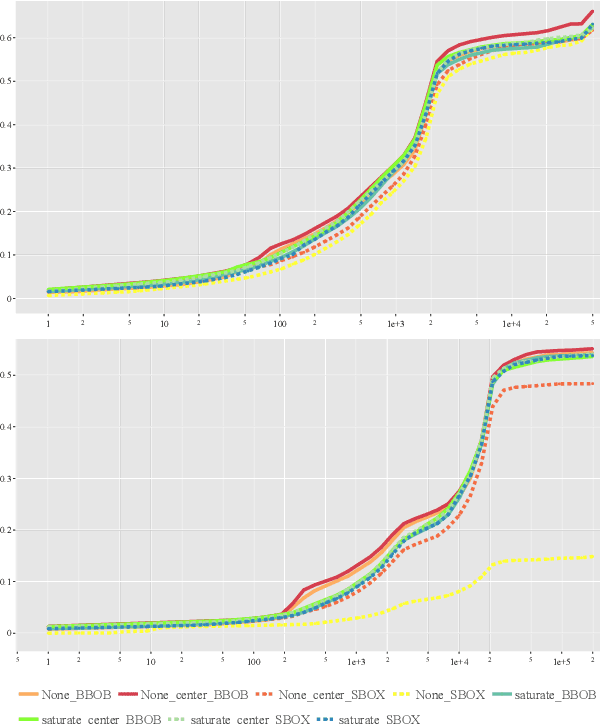

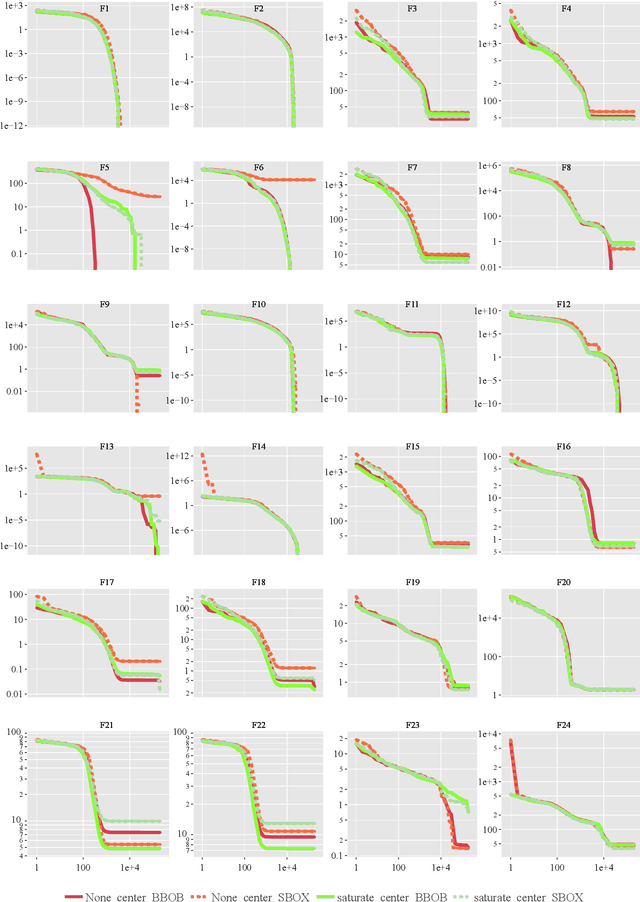

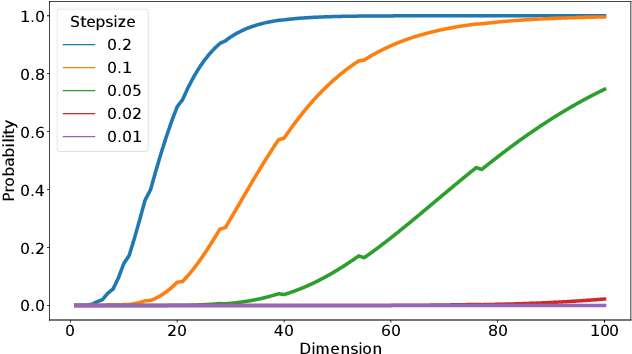

Abstract:Box-constraints limit the domain of decision variables and are common in real-world optimization problems, for example, due to physical, natural or spatial limitations. Consequently, solutions violating a box-constraint may not be evaluable. This assumption is often ignored in the literature, e.g., existing benchmark suites, such as COCO/BBOB, allow the optimizer to evaluate infeasible solutions. This paper presents an initial study on the strict-box-constrained benchmarking suite (SBOX-COST), which is a variant of the well-known BBOB benchmark suite that enforces box-constraints by returning an invalid evaluation value for infeasible solutions. Specifically, we want to understand the performance difference between BBOB and SBOX-COST as a function of two initialization methods and six constraint-handling strategies all tested with modular CMA-ES. We find that, contrary to what may be expected, handling box-constraints by saturation is not always better than not handling them at all. However, across all BBOB functions, saturation is better than not handling, and the difference increases with the number of dimensions. Strictly enforcing box-constraints also has a clear negative effect on the performance of classical CMA-ES (with uniform random initialization and no constraint handling), especially as problem dimensionality increases.
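The difference between strict box-constraints and saturation can be sketched as follows. This is an illustration under stated assumptions, not the SBOX-COST implementation: the helper names `strict_box` and `saturate` are hypothetical, the sphere function stands in for a benchmark problem, and positive infinity is used here as the "invalid evaluation value" for infeasible points. The bounds of -5 and 5 follow the usual BBOB domain.

```python
import math


def sphere(x):
    """Simple stand-in objective (sum of squares)."""
    return sum(v * v for v in x)


def strict_box(f, lower, upper):
    """Wrap f so that any point outside [lower, upper]^n is not evaluated.

    Assumption: infeasible points are reported as +inf.
    """
    def wrapped(x):
        if any(v < lower or v > upper for v in x):
            return math.inf
        return f(x)
    return wrapped


def saturate(x, lower, upper):
    """Constraint handling by saturation: clip each coordinate to the box."""
    return [min(max(v, lower), upper) for v in x]


strict_sphere = strict_box(sphere, -5.0, 5.0)
candidate = [6.0, 0.0]                      # violates the upper bound in x[0]

unhandled = strict_sphere(candidate)        # infeasible, so reported as invalid
repaired = strict_sphere(saturate(candidate, -5.0, 5.0))  # evaluated on the boundary
```

Without any handling, the optimizer receives no usable information from `candidate`; with saturation, it receives the value at the nearest boundary point instead.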

Experimental Investigation and Evaluation of Model-based Hyperparameter Optimization

Jul 19, 2021

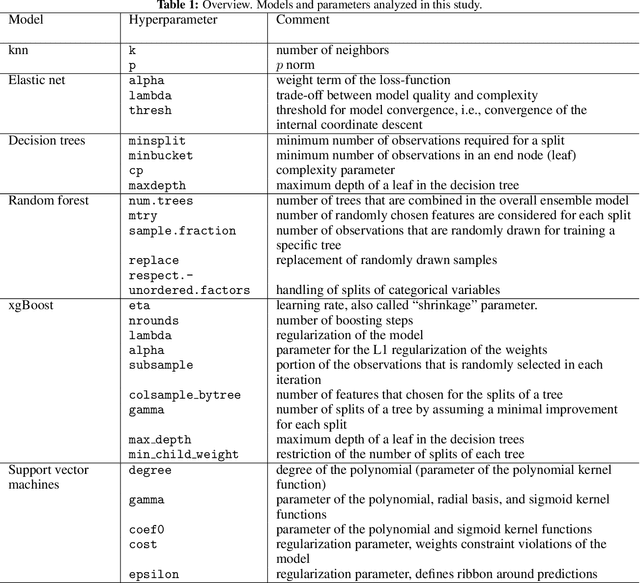

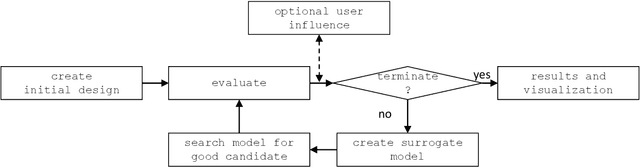

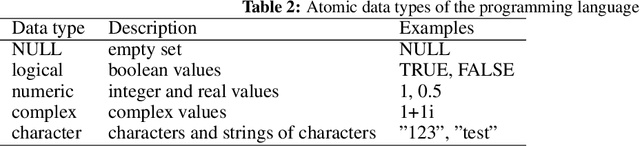

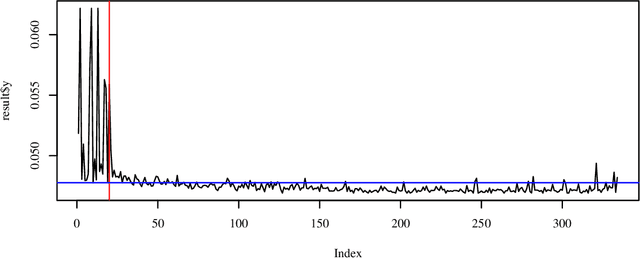

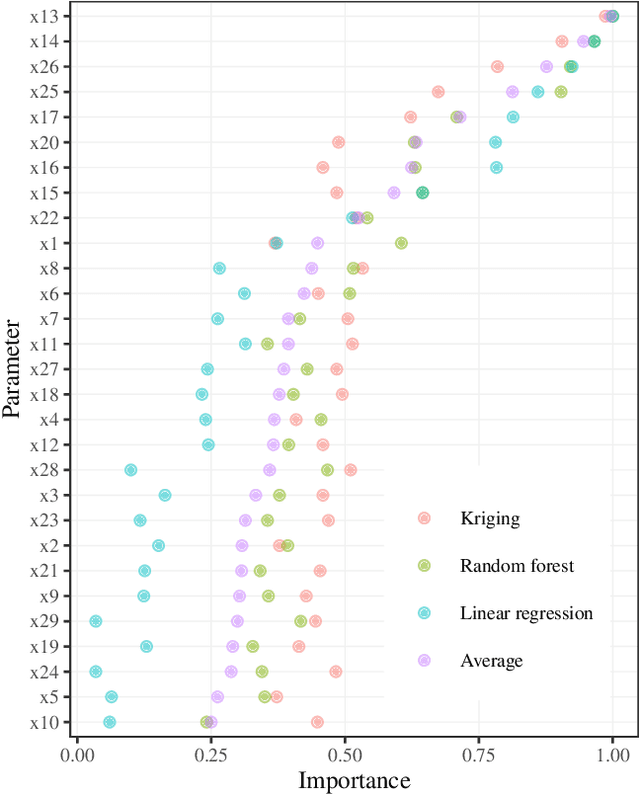

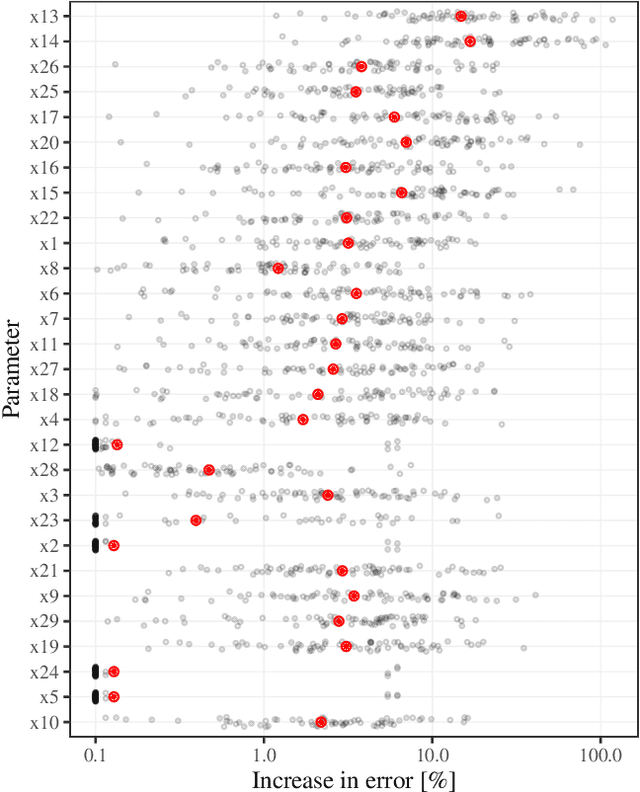

Abstract:Machine learning algorithms such as random forests or xgboost are gaining more importance and are increasingly incorporated into production processes in order to enable comprehensive digitization and, if possible, automation of processes. The hyperparameters of these algorithms have to be set appropriately, a task referred to as hyperparameter tuning or optimization. Based on the concept of tunability, this article presents an overview of theoretical and practical results for popular machine learning algorithms. This overview is accompanied by an experimental analysis of 30 hyperparameters from six relevant machine learning algorithms. In particular, it provides (i) a survey of important hyperparameters, (ii) two parameter tuning studies, and (iii) one extensive global parameter tuning study, as well as (iv) a new way, based on consensus ranking, to analyze results from multiple algorithms. The R package mlr is used as a uniform interface to the machine learning models. The R package SPOT is used to perform the actual tuning (optimization). All additional code is provided together with this paper.

Resource Planning for Hospitals Under Special Consideration of the COVID-19 Pandemic: Optimization and Sensitivity Analysis

May 16, 2021

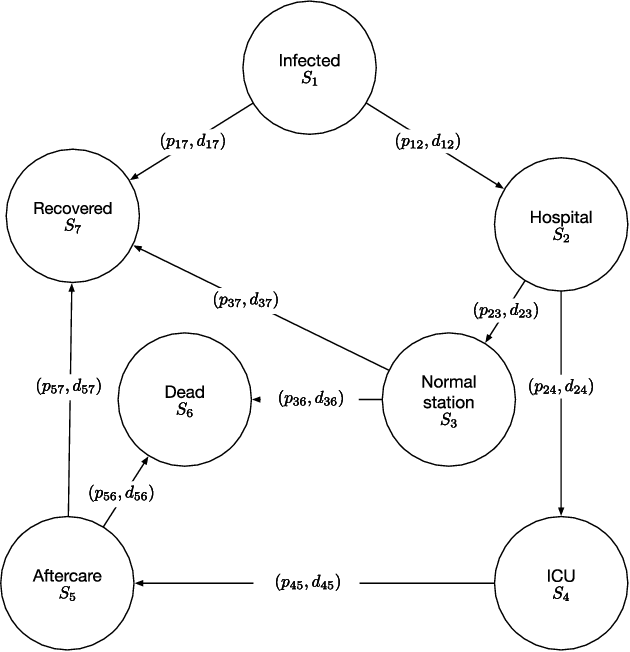

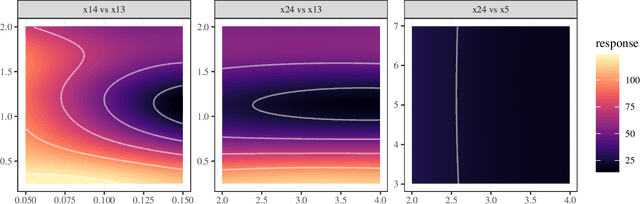

Abstract:Crises like the COVID-19 pandemic pose a serious challenge to health-care institutions. They need to plan the resources required for handling the increased load, for instance, hospital beds and ventilators. To support the resource planning of local health authorities from the Cologne region, BaBSim.Hospital, a tool for capacity planning based on discrete event simulation, was created. The predictive quality of the simulation is determined by 29 parameters. Reasonable default values of these parameters were obtained in detailed discussions with medical professionals. We aim to investigate and optimize these parameters to improve BaBSim.Hospital. First approaches with "out-of-the-box" optimization algorithms failed. Implementing a surrogate-based optimization approach generated useful results in a reasonable time. To understand the behavior of the algorithm and to get valuable insights into the fitness landscape, an in-depth sensitivity analysis was performed. The sensitivity analysis is crucial for the optimization process because it allows focusing the optimization on the most important parameters. We illustrate how this reduces the problem dimension without compromising the resulting accuracy. The presented approach is applicable to many other real-world problems, e.g., the development of new elevator systems to cover the last mile or simulation of student flow in academic study periods.

Hospital Capacity Planning Using Discrete Event Simulation Under Special Consideration of the COVID-19 Pandemic

Dec 14, 2020

Abstract:We present a resource-planning tool for hospitals under special consideration of the COVID-19 pandemic, called babsim.hospital. It provides many advantages for crisis teams, e.g., comparison with their own local planning, simulation of local events, simulation of several scenarios (worst / best case). There are benefits for medical professionals, e.g., analysis of the pandemic at local, regional, state and federal level, the consideration of special risk groups, tools for validating the length of stays and transition probabilities. Finally, there are potential advantages for administration and management, e.g., assessment of the situation of individual hospitals taking local events into account, consideration of relevant resources such as beds, ventilators, rooms, protective clothing, and personnel planning, e.g., medical and nursing staff. babsim.hospital combines simulation, optimization, statistics, and artificial intelligence processes in a very efficient way. The core is a discrete, event-based simulation model.

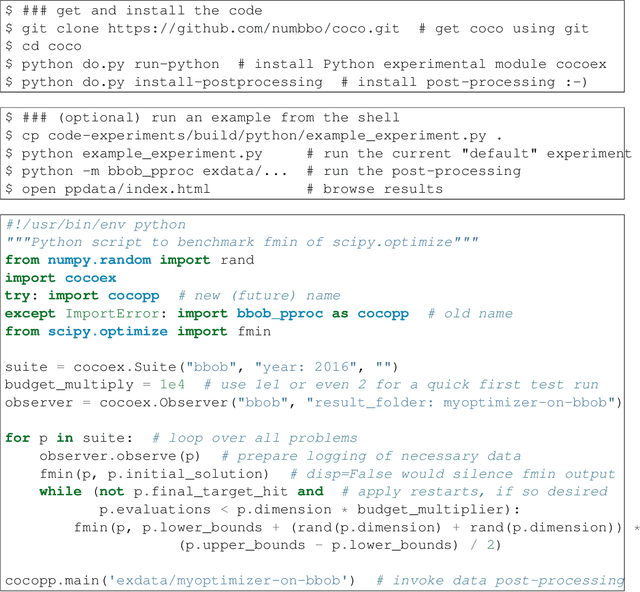

COCO: A Platform for Comparing Continuous Optimizers in a Black-Box Setting

Aug 01, 2016

Abstract:COCO is a platform for Comparing Continuous Optimizers in a black-box setting. It aims at automatizing the tedious and repetitive task of benchmarking numerical optimization algorithms to the greatest possible extent. We present the rationale behind the development of the platform as a general proposition for a guideline towards better benchmarking. We detail underlying fundamental concepts of COCO such as its definition of a problem, the idea of instances, the relevance of target values, and runtime as central performance measure. Finally, we give a quick overview of the basic code structure and the available test suites.
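The idea of runtime as a performance measure tied to target values can be sketched in a few lines: runtime is counted as the number of function evaluations an optimizer needs until it first reaches a given target value. The helper `runtime_to_target` and the example trace below are hypothetical and are not part of the COCO code base.

```python
def runtime_to_target(f_values, target):
    """Return the 1-based evaluation count at which f first drops to target.

    f_values is the sequence of objective values in evaluation order
    (minimization). Returns None if the target is never reached.
    """
    for evaluations, value in enumerate(f_values, start=1):
        if value <= target:
            return evaluations
    return None


# Made-up trace of objective values from a single optimizer run.
trace = [10.0, 3.0, 5.0, 0.5, 0.01]
targets = [1.0, 0.1, 1e-3]
runtimes = {t: runtime_to_target(trace, t) for t in targets}
```

Recording one runtime per target, rather than a single final value, lets runs be compared across several levels of difficulty; a target that is never reached is recorded as unsuccessful (`None` here).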

COCO: The Experimental Procedure

May 19, 2016

Abstract:We present a budget-free experimental setup and procedure for benchmarking numerical optimization algorithms in a black-box scenario. This procedure can be applied with the COCO benchmarking platform. We describe initialization of and input to the algorithm and touch upon the relevance of termination and restarts.
