Lars Lindemann

KTH Royal Institute of Technology, Stockholm, Sweden

eCP: Informative uncertainty quantification via Equivariantized Conformal Prediction with pre-trained models

Feb 03, 2026

Abstract: We study the effect of group symmetrization of pre-trained models on conformal prediction (CP), a post-hoc, distribution-free, finite-sample method of uncertainty quantification that offers formal coverage guarantees under the assumption of data exchangeability. Unfortunately, CP uncertainty regions can grow significantly in long-horizon missions, rendering the statistical guarantees uninformative. To that end, we propose infusing CP with geometric information via group-averaging of the pre-trained predictor to distribute the non-conformity mass across the orbits. Each sample is now treated as a representative of an orbit, so its uncertainty can be mitigated by other samples entangled with it via the orbit-inducing elements of the symmetry group. Our approach provably yields contracted non-conformity scores in increasing convex order, implying improved exponential-tail bounds and sharper conformal prediction sets in expectation, especially at high confidence levels. We then propose an experimental design to test these theoretical claims in pedestrian trajectory prediction.
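As a rough illustration of group-averaging a predictor, the sketch below symmetrizes a toy 2D predictor over the four planar rotations by multiples of 90 degrees. The group choice, the `base_predictor` function, and the averaging form (apply a rotation, predict, rotate back, then average) are assumptions made for illustration and are not taken from the paper.

```python
def rot90(p, k=1):
    """Rotate the 2D point p counter-clockwise by k * 90 degrees."""
    x, y = p
    for _ in range(k % 4):
        x, y = -y, x
    return (x, y)


def base_predictor(p):
    """Hypothetical pre-trained predictor that is NOT rotation-equivariant."""
    x, y = p
    return (x + 1, 2 * y)


def symmetrize(f):
    """Group-average f over the four rotations: mean of g^-1 f(g p)."""
    def f_sym(p):
        outs = [rot90(f(rot90(p, k)), -k) for k in range(4)]
        return (sum(o[0] for o in outs) / 4, sum(o[1] for o in outs) / 4)
    return f_sym


f_sym = symmetrize(base_predictor)
p = (3, 5)

# The base predictor does not commute with rotation, the averaged one does.
base_commutes = base_predictor(rot90(p)) == rot90(base_predictor(p))
sym_commutes = f_sym(rot90(p)) == rot90(f_sym(p))
print(base_commutes, sym_commutes)
```

The averaged predictor is equivariant by construction, whatever the base predictor is. Whether the resulting non-conformity scores shrink is the subject of the abstract's theoretical claim and is not demonstrated by this toy example.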

Safe Planning in Interactive Environments via Iterative Policy Updates and Adversarially Robust Conformal Prediction

Nov 13, 2025

Abstract: Safe planning of an autonomous agent in interactive environments -- such as the control of a self-driving vehicle among pedestrians and human-controlled vehicles -- poses a major challenge as the behavior of the environment is unknown and reactive to the behavior of the autonomous agent. This coupling gives rise to interaction-driven distribution shifts where the autonomous agent's control policy may change the environment's behavior, thereby invalidating safety guarantees in existing work. Indeed, recent works have used conformal prediction (CP) to generate distribution-free safety guarantees using observed data of the environment. However, CP's assumption on data exchangeability is violated in interactive settings due to a circular dependency where a control policy update changes the environment's behavior, and vice versa. To address this gap, we propose an iterative framework that robustly maintains safety guarantees across policy updates by quantifying the potential impact of a planned policy update on the environment's behavior. We realize this via adversarially robust CP where we perform a regular CP step in each episode using observed data under the current policy, but then transfer safety guarantees across policy updates by analytically adjusting the CP result to account for distribution shifts. This adjustment is performed based on a policy-to-trajectory sensitivity analysis, resulting in a safe, episodic open-loop planner. We further conduct a contraction analysis of the system providing conditions under which both the CP results and the policy updates are guaranteed to converge. We empirically demonstrate these safety and convergence guarantees on a two-dimensional car-pedestrian case study. To the best of our knowledge, these are the first results that provide valid safety guarantees in such interactive settings.

Split Conformal Prediction in the Function Space with Neural Operators

Sep 04, 2025

Abstract: Uncertainty quantification for neural operators remains an open problem in the infinite-dimensional setting due to the lack of finite-sample coverage guarantees over functional outputs. While conformal prediction offers finite-sample guarantees in finite-dimensional spaces, it does not directly extend to function-valued outputs. Existing approaches (Gaussian processes, Bayesian neural networks, and quantile-based operators) require strong distributional assumptions or yield conservative coverage. This work extends split conformal prediction to function spaces following a two-step method. We first establish finite-sample coverage guarantees in a finite-dimensional space using a discretization map in the output function space. These guarantees are then lifted to the function space by considering the asymptotic convergence as the discretization is refined. To characterize the effect of resolution, we decompose the conformal radius into discretization, calibration, and misspecification components. This decomposition motivates a regression-based correction to transfer calibration across resolutions. Additionally, we propose two diagnostic metrics (conformal ensemble score and internal agreement) to quantify forecast degradation in autoregressive settings. Empirical results show that our method maintains calibrated coverage with less variation under resolution shifts and achieves better coverage in super-resolution tasks.

Multi-Agent Path Finding Among Dynamic Uncontrollable Agents with Statistical Safety Guarantees

Jul 29, 2025

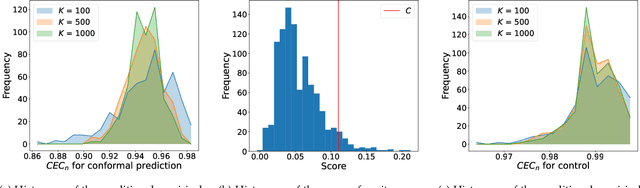

Abstract: Existing multi-agent path finding (MAPF) solvers do not account for uncertain behavior of uncontrollable agents. We present a novel variant of Enhanced Conflict-Based Search (ECBS), for both one-shot and lifelong MAPF in dynamic environments with uncontrollable agents. Our method consists of (1) training a learned predictor for the movement of uncontrollable agents, (2) quantifying the prediction error using conformal prediction (CP), a tool for statistical uncertainty quantification, and (3) integrating these uncertainty intervals into our modified ECBS solver. Our method can account for uncertain agent behavior, comes with statistical guarantees on collision-free paths for one-shot missions, and scales to lifelong missions with a receding-horizon sequence of one-shot instances. We run our algorithm, CP-Solver, across warehouse and game maps, with competitive throughput and reduced collisions.

Deep Equivariant Multi-Agent Control Barrier Functions

Jun 09, 2025

Abstract: With multi-agent systems increasingly deployed autonomously at scale in complex environments, ensuring safety of the data-driven policies is critical. Control Barrier Functions (CBFs) have emerged as an effective tool for enforcing safety constraints, yet existing learning-based methods often lack scalability, generalization, and sampling efficiency as they overlook inherent geometric structures of the system. To address this gap, we introduce symmetries-infused distributed Control Barrier Functions, enforcing the satisfaction of intrinsic symmetries on learnable graph-based safety certificates. We theoretically motivate the need for equivariant parametrization of CBFs and policies, and propose a simple, yet efficient and adaptable methodology for constructing such equivariant group-modular networks via the compatible group actions. This approach encodes safety constraints in a distributed data-efficient manner, enabling zero-shot generalization to larger and denser swarms. Through extensive simulations on multi-robot navigation tasks, we demonstrate that our method outperforms state-of-the-art baselines in terms of safety, scalability, and task success rates, highlighting the importance of embedding symmetries in safe distributed neural policies.

PCA-DDReach: Efficient Statistical Reachability Analysis of Stochastic Dynamical Systems via Principal Component Analysis

May 20, 2025

Abstract: This study presents a scalable data-driven algorithm designed to efficiently address the challenging problem of reachability analysis. Analysis of cyber-physical systems (CPS) typically relies on parametric physical models of dynamical systems. However, identifying parametric physical models for complex CPS is challenging due to their complexity, uncertainty, and variability, often rendering them as black-box oracles. As an alternative, one can treat these complex systems as black-box models and use trajectory data sampled from the system (e.g., from high-fidelity simulators or the real system) along with machine learning techniques to learn models that approximate the underlying dynamics. However, these machine learning models can be inaccurate, highlighting the need for statistical tools to quantify errors. Recent advancements in the field include the incorporation of statistical uncertainty quantification tools such as conformal inference (CI) that can provide probabilistic reachable sets with provable guarantees. Recent work has even highlighted the ability of these tools to address the case where the distribution of trajectories sampled during training time is different from the distribution of trajectories encountered during deployment time. However, accounting for such distribution shifts typically results in more conservative guarantees. This is undesirable in practice and motivates us to present techniques that can reduce conservatism. Here, we propose a new approach that reduces conservatism and improves scalability by combining conformal inference with Principal Component Analysis (PCA). We show the effectiveness of our technique on various case studies, including a 12-dimensional quadcopter and a 27-dimensional hybrid system known as the powertrain.

Nonconvex Obstacle Avoidance using Efficient Sampling-Based Distance Functions

Apr 12, 2025

Abstract: We consider nonconvex obstacle avoidance where a robot described by nonlinear dynamics and a nonconvex shape has to avoid nonconvex obstacles. Obstacle avoidance is a fundamental problem in robotics and well studied in control. However, existing solutions are computationally expensive (e.g., model predictive controllers), neglect nonlinear dynamics (e.g., graph-based planners), use diffeomorphic transformations into convex domains (e.g., for star shapes), or are conservative due to convex overapproximations. The key challenge here is that the computation of the distance between the shapes of the robot and the obstacles is a nonconvex problem. We propose efficient computation of this distance via sampling-based distance functions. We quantify the sampling error and show that, for certain systems, such sampling-based distance functions are valid nonsmooth control barrier functions. We also study how to deal with disturbances on the robot dynamics in our setting. Finally, we illustrate our method on a robot navigation task involving an omnidirectional robot and nonconvex obstacles. We also analyze performance and computational efficiency of our controller as a function of the number of samples.
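To make the idea of a sampling-based distance concrete, here is a minimal sketch that approximates the distance between two shapes by the smallest pairwise distance between finite point samples of each. The function name and the sample points are hypothetical, and the paper's actual construction, error quantification, and barrier-function analysis are not reproduced here.

```python
import math


def sampled_distance(robot_samples, obstacle_samples):
    """Smallest Euclidean distance between any robot sample and any obstacle sample."""
    return min(
        math.dist(r, o)
        for r in robot_samples
        for o in obstacle_samples
    )


# Hypothetical samples taken from the robot and obstacle shapes.
robot = [(0.0, 0.0), (1.0, 0.0)]
obstacle = [(4.0, 4.0), (1.0, 3.0)]

d = sampled_distance(robot, obstacle)
print(d)
```

Because the samples are subsets of the shapes, the sampled value can only overestimate the true distance between them, which is why a bound on the sampling error matters before such a quantity is used in a safety constraint. The cost grows with the product of the two sample counts.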

Formal Verification and Control with Conformal Prediction

Aug 31, 2024

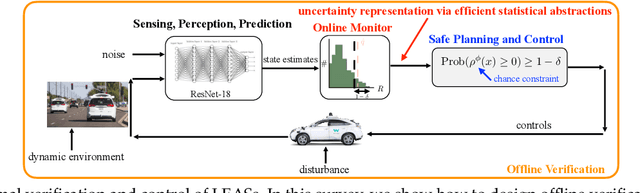

Abstract: In this survey, we design formal verification and control algorithms for autonomous systems with practical safety guarantees using conformal prediction (CP), a statistical tool for uncertainty quantification. We focus on learning-enabled autonomous systems (LEASs) in which the complexity of learning-enabled components (LECs) is a major bottleneck that hampers the use of existing model-based verification and design techniques. Instead, we advocate for the use of CP, and we will demonstrate its use in formal verification, systems and control theory, and robotics. We argue that CP is specifically useful due to its simplicity (easy to understand, use, and modify), generality (requires no assumptions on learned models and data distributions, i.e., is distribution-free), and efficiency (real-time capable and accurate). We pursue the following goals with this survey. First, we provide an accessible introduction to CP for non-experts who are interested in using CP to solve problems in autonomy. Second, we show how to use CP for the verification of LECs, e.g., for verifying input-output properties of neural networks. Third and fourth, we review recent articles that use CP for safe control design as well as offline and online verification of LEASs. We summarize their ideas in a unifying framework that can deal with the complexity of LEASs in a computationally efficient manner. In our exposition, we consider simple system specifications, e.g., robot navigation tasks, as well as complex specifications formulated in temporal logic formalisms. Throughout our survey, we compare to other statistical techniques (e.g., scenario optimization, PAC-Bayes theory, etc.) and how these techniques have been used in verification and control. Lastly, we point the reader to open problems and future research directions.
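For readers new to CP, the following minimal sketch shows the standard split conformal step: compute non-conformity scores on held-out calibration data and take the ceil((n+1)(1-delta))-th smallest score as the prediction-region radius. The function name, the failure probability `delta`, and the calibration scores are illustrative assumptions and are not taken from the survey.

```python
import math


def conformal_quantile(scores, delta):
    """Return the ceil((n + 1) * (1 - delta))-th smallest calibration score.

    Returns infinity when the calibration set is too small for the
    requested confidence level 1 - delta.
    """
    n = len(scores)
    k = math.ceil((n + 1) * (1 - delta))
    if k > n:
        return math.inf
    return sorted(scores)[k - 1]


# Hypothetical calibration scores, e.g. absolute prediction errors |y - f(x)|.
cal_scores = [0.3, 0.1, 0.9, 0.5, 0.2, 1.0, 0.7, 0.4, 0.8, 0.6]

radius = conformal_quantile(cal_scores, delta=0.2)
print(radius)
```

Under exchangeability of the calibration and test data, a new score falls below `radius` with probability at least 1 - delta, so the region of outputs within `radius` of the prediction covers the true output at that level. No assumption on the predictor or the data distribution is needed, which is the generality the abstract refers to.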

Statistical Reachability Analysis of Stochastic Cyber-Physical Systems under Distribution Shift

Jul 16, 2024

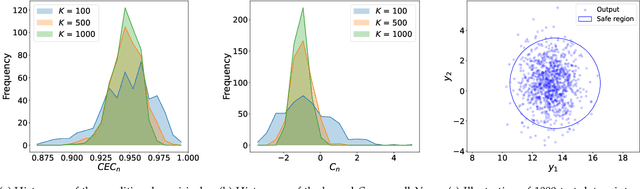

Abstract: Reachability analysis is a popular method to give safety guarantees for stochastic cyber-physical systems (SCPSs) that takes in a symbolic description of the system dynamics and uses set-propagation methods to compute an overapproximation of the set of reachable states over a bounded time horizon. In this paper, we investigate the problem of performing reachability analysis for an SCPS that does not have a symbolic description of the dynamics, but instead is described using a digital twin model that can be simulated to generate system trajectories. An important challenge is that the simulator implicitly models a probability distribution over the set of trajectories of the SCPS; however, it is typical to have a sim2real gap, i.e., the actual distribution of the trajectories in a deployment setting may be shifted from the distribution assumed by the simulator. We thus propose a statistical reachability analysis technique that, given a user-provided threshold $1-\epsilon$, provides a set that guarantees that any reachable state during deployment lies in this set with probability not smaller than this threshold. Our method is based on three main steps: (1) learning a deterministic surrogate model from sampled trajectories, (2) conducting reachability analysis over the surrogate model, and (3) employing robust conformal inference using an additional set of sampled trajectories to quantify the surrogate model's distribution shift with respect to the deployed SCPS. To counter conservatism in reachable sets, we propose a novel method to train surrogate models that minimizes a quantile loss term (instead of the usual mean squared loss), and a new method that provides tighter guarantees via conformal inference with a normalized surrogate error. We demonstrate the effectiveness of our technique on various case studies.
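The quantile loss mentioned above is commonly implemented as the pinball loss. The sketch below shows that standard form and how it penalizes under- and over-prediction asymmetrically, unlike mean squared loss. The function name and the quantile level `tau` are assumptions for illustration, and the exact loss used in the paper may differ.

```python
def pinball_loss(residuals, tau):
    """Mean pinball (quantile) loss of residuals r = y - y_hat at level tau."""
    return sum(max(tau * r, (tau - 1) * r) for r in residuals) / len(residuals)


tau = 0.75

# Under-prediction (positive residual) costs tau per unit of error,
# over-prediction (negative residual) costs 1 - tau per unit.
under = pinball_loss([2.0], tau)
over = pinball_loss([-2.0], tau)
print(under, over)
```

Minimizing this loss drives the model output toward the `tau`-quantile of the target rather than its mean, which is one way a surrogate can be trained with the later quantile-based calibration step in mind.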

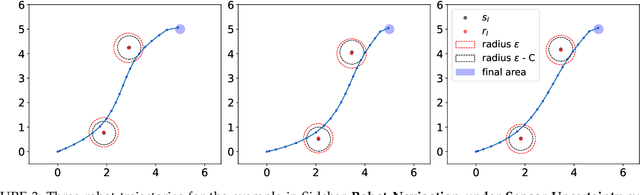

Recursively Feasible Shrinking-Horizon MPC in Dynamic Environments with Conformal Prediction Guarantees

May 17, 2024

Abstract: In this paper, we focus on the problem of shrinking-horizon Model Predictive Control (MPC) in uncertain dynamic environments. We consider controlling a deterministic autonomous system that interacts with uncontrollable stochastic agents during its mission. Employing tools from conformal prediction, existing works derive high-confidence prediction regions for the unknown agent trajectories, and integrate these regions in the design of suitable safety constraints for MPC. Despite guaranteeing probabilistic safety of the closed-loop trajectories, these constraints do not ensure feasibility of the respective MPC schemes for the entire duration of the mission. We propose a shrinking-horizon MPC that guarantees recursive feasibility via a gradual relaxation of the safety constraints as new prediction regions become available online. This relaxation enforces the safety constraints to hold over the least restrictive prediction region from the set of all available prediction regions. In a comparative case study with the state of the art, we empirically show that our approach results in tighter prediction regions and verify recursive feasibility of our MPC scheme.
