Kanokphan Lertniphonphan

PCIE_Interaction Solution for Ego4D Social Interaction Challenge

May 30, 2025

Abstract:This report presents our team's PCIE_Interaction solution for the Ego4D Social Interaction Challenge at CVPR 2025, addressing both the Looking At Me (LAM) and Talking To Me (TTM) tasks. The challenge requires accurate detection of social interactions between subjects and the camera wearer, with LAM relying exclusively on face crop sequences and TTM combining speaker face crops with synchronized audio segments. In the LAM track, we employ face quality enhancement and ensemble methods. For the TTM task, we extend visual interaction analysis by fusing audio and visual cues, weighted by a visual quality score. Our approach achieved 0.81 and 0.71 mean average precision (mAP) on the LAM and TTM challenge leaderboards, respectively. Code is available at https://github.com/KanokphanL/PCIE_Ego4D_Social_Interaction
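The abstract does not spell out how the audio and visual cues are combined, so the following is only a minimal sketch of one plausible reading of "weighted by a visual quality score": a convex combination in which a higher face-quality score shifts trust toward the visual prediction. The function name `fuse_scores` and its parameters are hypothetical and are not taken from the released code.

```python
def fuse_scores(visual_score: float, audio_score: float, quality: float) -> float:
    """Blend two per-frame probabilities using a visual quality weight.

    Assumption (not from the report): `quality` lies in [0, 1] and acts
    directly as the weight on the visual branch.
    """
    if not 0.0 <= quality <= 1.0:
        raise ValueError("quality must lie in [0, 1]")
    # Convex combination: a sharp face crop trusts vision, a blurry one trusts audio.
    return quality * visual_score + (1.0 - quality) * audio_score


if __name__ == "__main__":
    # A blurry face crop (quality 0.2) leans mostly on the audio prediction.
    print(fuse_scores(visual_score=0.9, audio_score=0.3, quality=0.2))
```

Under this assumption the fused score never leaves the range spanned by the two inputs, which keeps it usable as a probability for mAP evaluation.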

PCIE_Pose Solution for EgoExo4D Pose and Proficiency Estimation Challenge

May 30, 2025

Abstract:This report introduces our team's (PCIE_EgoPose) solutions for the EgoExo4D Pose and Proficiency Estimation Challenges at CVPR2025. Focused on the intricate task of estimating 21 3D hand joints from RGB egocentric videos, which are complicated by subtle movements and frequent occlusions, we developed the Hand Pose Vision Transformer (HP-ViT+). This architecture synergizes a Vision Transformer and a CNN backbone, using weighted fusion to refine the hand pose predictions. For the EgoExo4D Body Pose Challenge, we adopted a multimodal spatio-temporal feature integration strategy to address the complexities of body pose estimation across dynamic contexts. Our methods achieved remarkable performance: 8.31 PA-MPJPE in the Hand Pose Challenge and 11.25 MPJPE in the Body Pose Challenge, securing championship titles in both competitions. We extended our pose estimation solutions to the Proficiency Estimation task, applying core technologies such as transformer-based architectures. This extension enabled us to achieve a top-1 accuracy of 0.53, a SOTA result, in the Demonstrator Proficiency Estimation competition.

PCIE_EgoHandPose Solution for EgoExo4D Hand Pose Challenge

Jun 18, 2024

Abstract:This report presents our team's 'PCIE_EgoHandPose' solution for the EgoExo4D Hand Pose Challenge at CVPR2024. The main goal of the challenge is to accurately estimate hand poses, which involve 21 3D joints, from an RGB egocentric video image provided for the task. This task is particularly challenging due to subtle movements and occlusions. To handle the complexity of the task, we propose the Hand Pose Vision Transformer (HP-ViT). The HP-ViT comprises a ViT backbone and a transformer head to estimate joint positions in 3D, utilizing MPJPE and RLE loss functions. Our approach achieved 1st place in the Hand Pose challenge with 25.51 MPJPE and 8.49 PA-MPJPE. Code is available at https://github.com/KanokphanL/PCIE_EgoHandPose
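For readers unfamiliar with the metric quoted above, MPJPE (mean per-joint position error) is the average Euclidean distance between each predicted joint and its ground-truth counterpart. The sketch below computes it in plain Python; the function name `mpjpe` and the toy coordinates are illustrative only and are not taken from the challenge toolkit. PA-MPJPE applies the same average after first aligning the prediction to the ground truth with a similarity transform (Procrustes alignment), a step omitted here.

```python
import math
from typing import Sequence, Tuple

Joint = Tuple[float, float, float]


def mpjpe(pred: Sequence[Joint], gt: Sequence[Joint]) -> float:
    """Mean Euclidean distance between matched predicted and ground-truth joints."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and the same length")
    # math.dist returns the Euclidean distance between two points.
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(pred)


if __name__ == "__main__":
    # Two toy joints: one is off by 3 units, the other by 4 units.
    pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    gt = [(0.0, 0.0, 3.0), (1.0, 4.0, 0.0)]
    print(mpjpe(pred, gt))  # 3.5
```

The result is expressed in whatever unit the joint coordinates use.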

PCIE_LAM Solution for Ego4D Looking At Me Challenge

Jun 18, 2024

Abstract:This report presents our team's 'PCIE_LAM' solution for the Ego4D Looking At Me Challenge at CVPR2024. The main goal of the challenge is to accurately determine if a person in the scene is looking at the camera wearer, based on a video where the faces of social partners have been localized. Our proposed solution, InternLSTM, consists of an InternVL image encoder and a Bi-LSTM network. The InternVL extracts spatial features, while the Bi-LSTM extracts temporal features. However, this task is highly challenging due to the distance between the person in the scene and the camera and to camera movement, both of which result in significant blurring in the face images. To address the complexity of the task, we implemented a Gaze Smoothing filter to eliminate noise or spikes from the output. Our approach achieved 1st place in the Looking At Me challenge with 0.81 mAP and 0.93 accuracy. Code is available at https://github.com/KanokphanL/Ego4D_LAM_InternLSTM
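The abstract does not describe the Gaze Smoothing filter in detail. As a rough illustration of how isolated spikes can be removed from a per-frame score sequence, the sketch below applies a sliding median filter. This is a swapped-in, generic technique and not necessarily the authors' filter; the function name `smooth_scores` and the default window size are hypothetical.

```python
from statistics import median
from typing import List, Sequence


def smooth_scores(scores: Sequence[float], window: int = 5) -> List[float]:
    """Sliding median over per-frame scores; the window shrinks at sequence edges."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(scores)):
        lo = max(0, i - half)
        hi = min(len(scores), i + half + 1)
        # The median ignores a single outlier inside the window.
        smoothed.append(median(scores[lo:hi]))
    return smoothed


if __name__ == "__main__":
    # A one-frame spike at index 2 is suppressed.
    print(smooth_scores([0.1, 0.1, 0.9, 0.1, 0.1], window=3))
```

A median is used here rather than a moving average because it discards a single-frame spike entirely instead of spreading it across neighbouring frames.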

Technical Report for Argoverse Challenges on Unified Sensor-based Detection, Tracking, and Forecasting

Nov 27, 2023

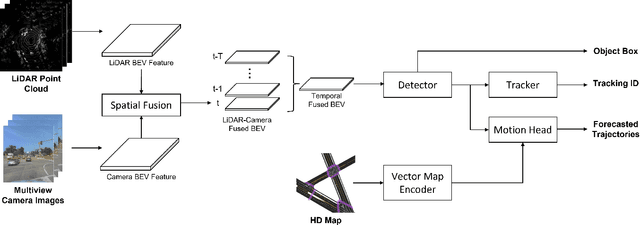

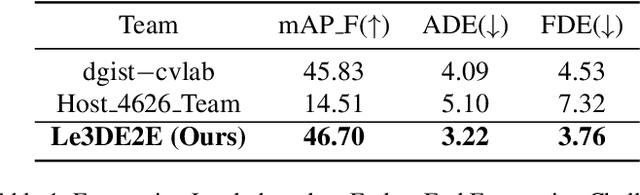

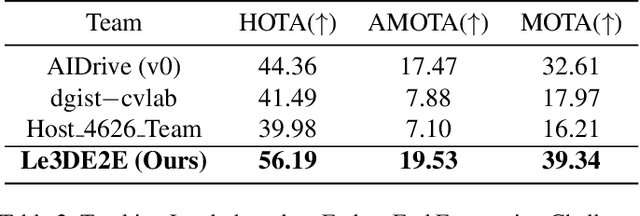

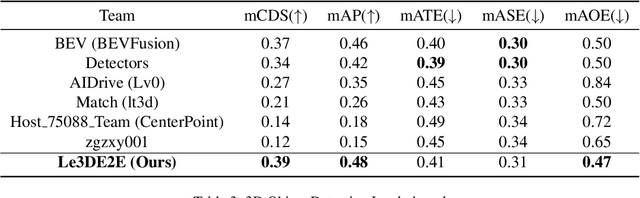

Abstract:This report presents our Le3DE2E solution for unified sensor-based detection, tracking, and forecasting in Argoverse Challenges at CVPR 2023 Workshop on Autonomous Driving (WAD). We propose a unified network that incorporates three tasks: detection, tracking, and forecasting. This solution adopts a strong Bird's Eye View (BEV) encoder with spatial and temporal fusion and generates unified representations for multiple tasks. The solution was tested on the Argoverse 2 sensor dataset to evaluate the detection, tracking, and forecasting of 26 object categories. We achieved 1st place in Detection, Tracking, and Forecasting on the E2E Forecasting track in Argoverse Challenges at CVPR 2023 WAD.

Technical Report for Argoverse Challenges on 4D Occupancy Forecasting

Nov 27, 2023

Abstract:This report presents our Le3DE2E_Occ solution for 4D Occupancy Forecasting in Argoverse Challenges at CVPR 2023 Workshop on Autonomous Driving (WAD). Our solution consists of a strong LiDAR-based Bird's Eye View (BEV) encoder with temporal fusion and a two-stage decoder, which combines a DETR head and a UNet decoder. The solution was tested on the Argoverse 2 sensor dataset to evaluate the occupancy state 3 seconds in the future. Our solution achieved an 18% lower L1 Error (3.57) than the baseline and took 1st place on the 4D Occupancy Forecasting task in Argoverse Challenges at CVPR 2023.

The 2nd Workshop on Maritime Computer Vision 2024

Nov 23, 2023

Abstract:The 2nd Workshop on Maritime Computer Vision (MaCVi) 2024 addresses maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicles (USV). Three challenge categories are considered: (i) UAV-based Maritime Object Tracking with Re-identification, (ii) USV-based Maritime Obstacle Segmentation and Detection, (iii) USV-based Maritime Boat Tracking. The USV-based Maritime Obstacle Segmentation and Detection category features three sub-challenges, including a new embedded challenge addressing efficient inference on real-world embedded devices. This report offers a comprehensive overview of the findings from the challenges. We provide both statistical and qualitative analyses, evaluating trends from over 195 submissions. All datasets, evaluation code, and the leaderboard are available to the public at https://macvi.org/workshop/macvi24.
