Kacper Kania

AnyStyle: Single-Pass Multimodal Stylization for 3D Gaussian Splatting

Feb 03, 2026

Abstract:The growing demand for rapid and scalable 3D asset creation has driven interest in feed-forward 3D reconstruction methods, with 3D Gaussian Splatting (3DGS) emerging as an effective scene representation. While recent approaches have demonstrated pose-free reconstruction from unposed image collections, integrating stylization or appearance control into such pipelines remains underexplored. Existing attempts largely rely on image-based conditioning, which limits both controllability and flexibility. In this work, we introduce AnyStyle, a feed-forward 3D reconstruction and stylization framework that enables pose-free, zero-shot stylization through multimodal conditioning. Our method supports both textual and visual style inputs, allowing users to control the scene appearance using natural language descriptions or reference images. We propose a modular stylization architecture that requires only minimal architectural modifications and can be integrated into existing feed-forward 3D reconstruction backbones. Experiments demonstrate that AnyStyle improves style controllability over prior feed-forward stylization methods while preserving high-quality geometric reconstruction. A user study further confirms that AnyStyle achieves superior stylization quality compared to an existing state-of-the-art approach. Repository: https://github.com/joaxkal/AnyStyle.

LumiGauss: High-Fidelity Outdoor Relighting with 2D Gaussian Splatting

Aug 06, 2024

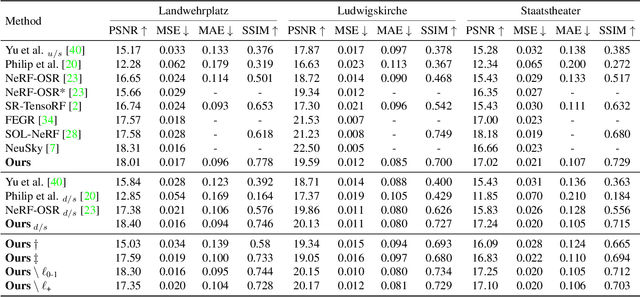

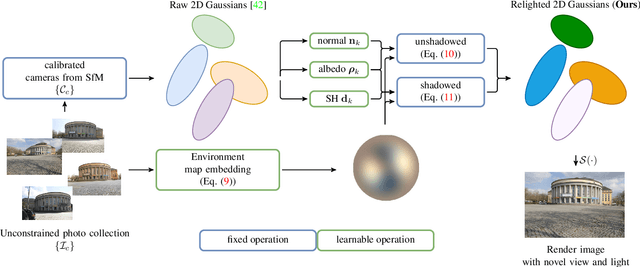

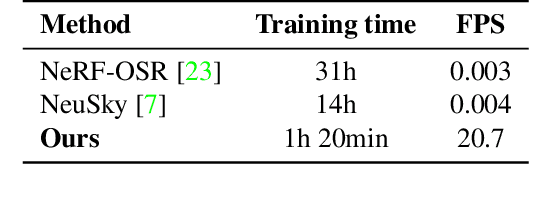

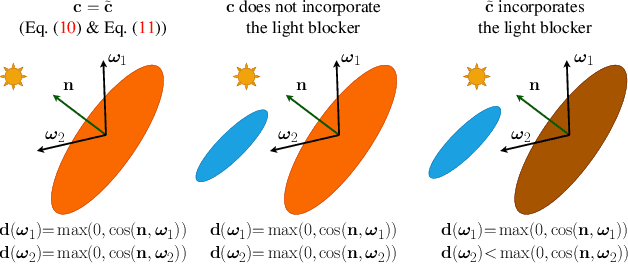

Abstract:Decoupling lighting from geometry using unconstrained photo collections is notoriously challenging. Solving it would benefit many users, as creating complex 3D assets takes days of manual labor. Many previous works have attempted to address this issue, often at the expense of output fidelity, which calls the practicality of such methods into question. We introduce LumiGauss, a technique that tackles 3D reconstruction of scenes and environmental lighting through 2D Gaussian Splatting. Our approach yields high-quality scene reconstructions and enables realistic lighting synthesis under novel environment maps. We also propose a method for enhancing the quality of shadows, common in outdoor scenes, by exploiting spherical harmonics properties. Our approach facilitates seamless integration with game engines and enables the use of fast precomputed radiance transfer. We validate our method on the NeRF-OSR dataset, demonstrating superior performance over baseline methods. Moreover, LumiGauss can synthesize realistic images when applying novel environment maps.

GeoGen: Geometry-Aware Generative Modeling via Signed Distance Functions

Jun 07, 2024

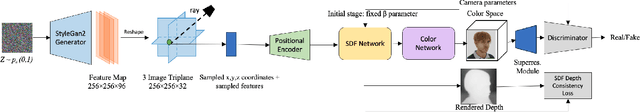

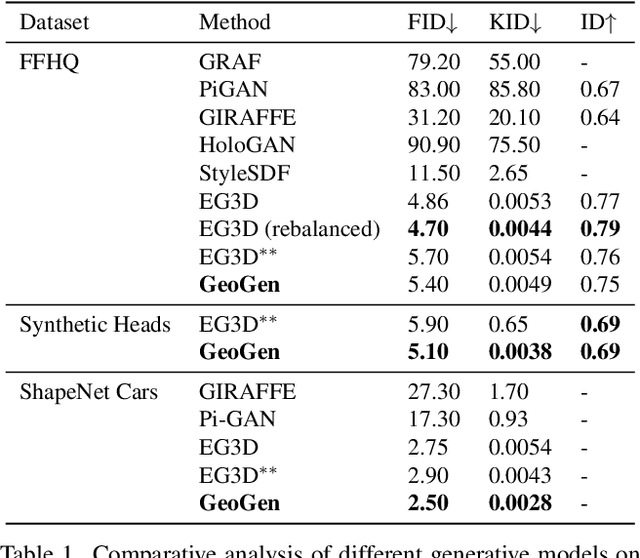

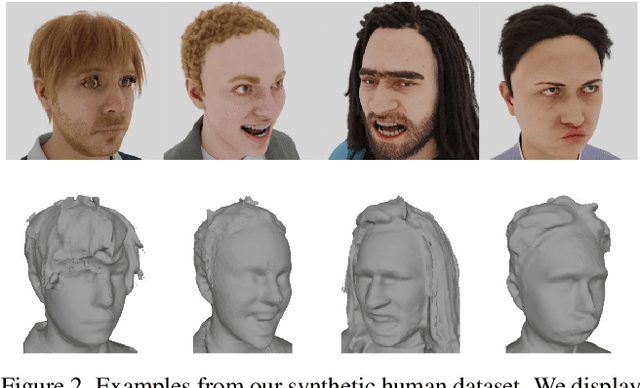

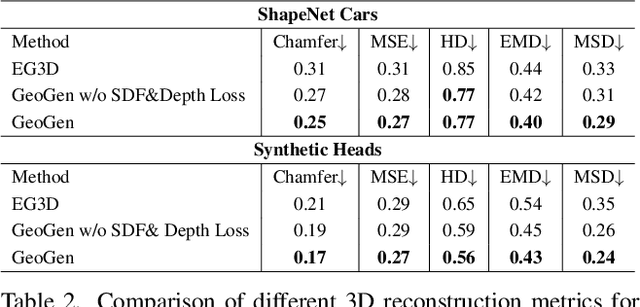

Abstract:We introduce a new generative approach for synthesizing 3D geometry and images from single-view collections. Most existing approaches predict volumetric density to render multi-view consistent images. By employing volumetric rendering using neural radiance fields, they inherit a key limitation: the generated geometry is noisy and unconstrained, limiting the quality and utility of the output meshes. To address this issue, we propose GeoGen, a new SDF-based 3D generative model trained in an end-to-end manner. Initially, we reinterpret the volumetric density as a Signed Distance Function (SDF). This allows us to introduce useful priors to generate valid meshes. However, those priors prevent the generative model from learning details, limiting the applicability of the method to real-world scenarios. To alleviate that problem, we make the transformation learnable and constrain the rendered depth map to be consistent with the zero-level set of the SDF. Through the lens of adversarial training, we encourage the network to produce higher fidelity details on the output meshes. For evaluation, we introduce a synthetic dataset of human avatars captured from 360-degree camera angles, to overcome the challenges presented by real-world datasets, which often lack 3D consistency and do not cover all camera angles. Our experiments on multiple datasets show that GeoGen produces visually and quantitatively better geometry than the previous generative models based on neural radiance fields.

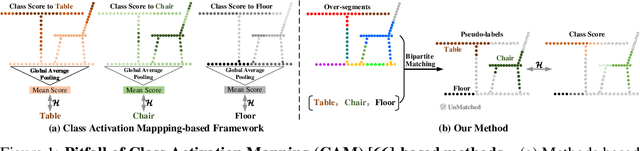

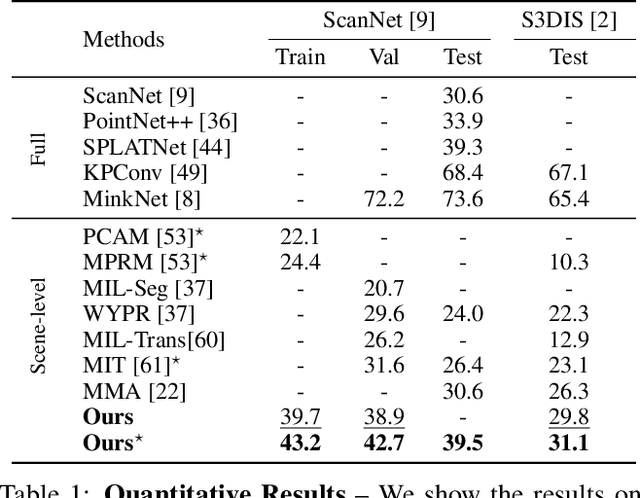

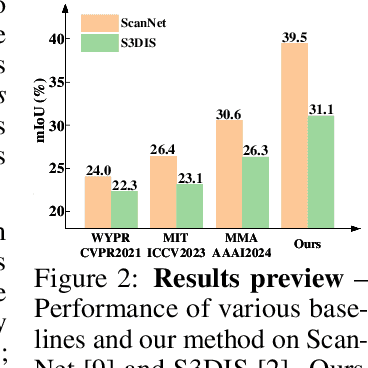

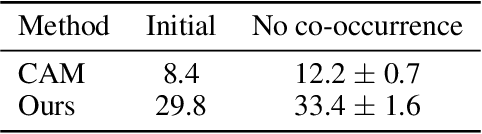

Densify Your Labels: Unsupervised Clustering with Bipartite Matching for Weakly Supervised Point Cloud Segmentation

Dec 11, 2023

Abstract:We propose a weakly supervised semantic segmentation method for point clouds that predicts "per-point" labels from just "whole-scene" annotations while achieving the performance of recent fully supervised approaches. Our core idea is to propagate the scene-level labels to each point in the point cloud by creating pseudo labels in a conservative way. Specifically, we over-segment point cloud features via unsupervised clustering and associate scene-level labels with clusters through bipartite matching, thus propagating scene labels only to the most relevant clusters, leaving the rest to be guided solely via unsupervised clustering. We empirically demonstrate that over-segmentation and bipartite assignment play a crucial role. We evaluate our method on the ScanNet and S3DIS datasets, outperforming the state of the art, and demonstrate that we can achieve results comparable to fully supervised methods.
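The label-to-cluster association described in this abstract can be illustrated with a toy example. This is only a minimal sketch under stated assumptions, not the paper's implementation: the `affinity` matrix, its values, and the function name `match_labels_to_clusters` are hypothetical, and the matching is solved by brute force rather than by whatever solver the authors use. It shows the conservative idea: each scene-level label is tied to exactly one of the over-segmented clusters, and the remaining clusters receive no pseudo label.

```python
from itertools import permutations


def match_labels_to_clusters(affinity):
    """Assign each scene-level label to one distinct cluster.

    affinity[i][j] is a hypothetical score for how well label i fits
    cluster j. The assignment maximizing the total score is returned as
    {label_index: cluster_index}. Brute force, so toy sizes only.
    """
    n_labels = len(affinity)
    n_clusters = len(affinity[0])
    if n_labels > n_clusters:
        raise ValueError("over-segmentation assumes more clusters than labels")

    best_score, best_perm = float("-inf"), None
    # Enumerate every way of picking one distinct cluster per label.
    for perm in permutations(range(n_clusters), n_labels):
        score = sum(affinity[i][perm[i]] for i in range(n_labels))
        if score > best_score:
            best_score, best_perm = score, perm
    return {i: best_perm[i] for i in range(n_labels)}


# Two scene-level labels, four clusters (made-up numbers).
affinity = [
    [0.9, 0.1, 0.3, 0.2],  # label 0
    [0.2, 0.8, 0.7, 0.1],  # label 1
]
assignment = match_labels_to_clusters(affinity)

# Clusters left out of the matching get no pseudo label.
unlabeled = sorted(set(range(4)) - set(assignment.values()))
```

For realistic sizes, a polynomial-time assignment solver (for example, the Hungarian algorithm) would replace the brute-force loop.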

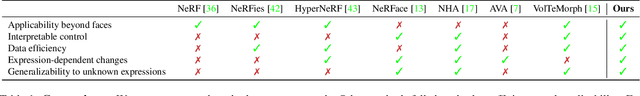

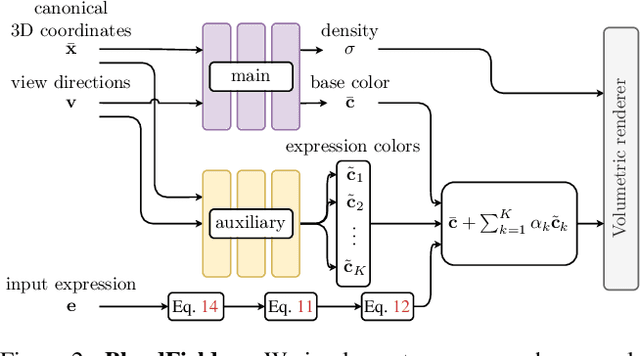

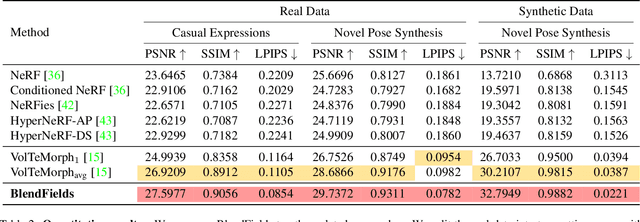

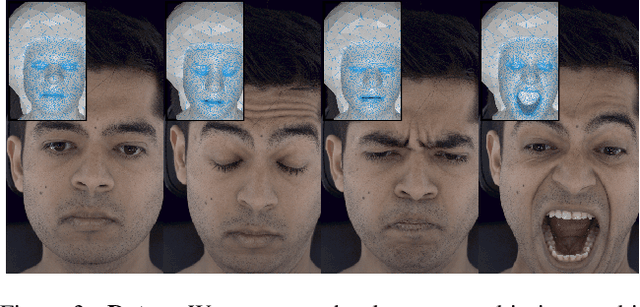

BlendFields: Few-Shot Example-Driven Facial Modeling

May 12, 2023

Abstract:Generating faithful visualizations of human faces requires capturing both coarse and fine-level details of the face geometry and appearance. Existing methods are either data-driven, requiring an extensive corpus of data not publicly accessible to the research community, or fail to capture fine details because they rely on geometric face models that cannot represent fine-grained details in texture with a mesh discretization and linear deformation designed to model only a coarse face geometry. We introduce a method that bridges this gap by drawing inspiration from traditional computer graphics techniques. Unseen expressions are modeled by blending appearance from a sparse set of extreme poses. This blending is performed by measuring local volumetric changes in those expressions and locally reproducing their appearance whenever a similar expression is performed at test time. We show that our method generalizes to unseen expressions, adding fine-grained effects on top of smooth volumetric deformations of a face, and demonstrate how it generalizes beyond faces.

CoNeRF: Controllable Neural Radiance Fields

Dec 06, 2021

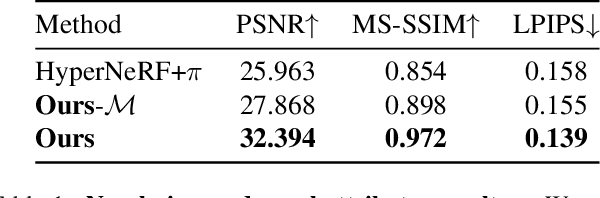

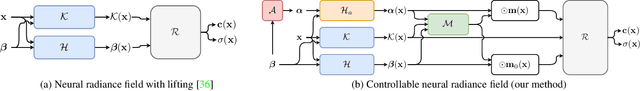

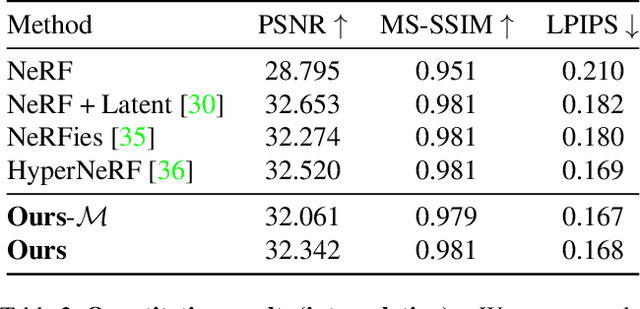

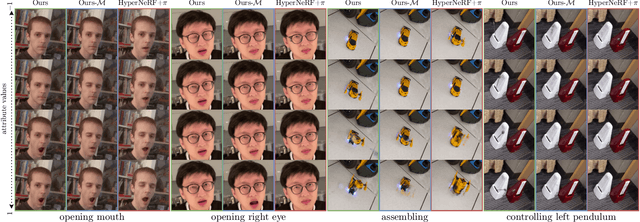

Abstract:We extend neural 3D representations to allow for intuitive and interpretable user control beyond novel view rendering (i.e., camera control). The user annotates which part of the scene they wish to control with just a small number of mask annotations in the training images. Our key idea is to treat the attributes as latent variables that are regressed by the neural network given the scene encoding. This leads to a few-shot learning framework, where attributes are discovered automatically by the framework when annotations are not provided. We apply our method to various scenes with different types of controllable attributes (e.g., expression control on human faces, or state control in movement of inanimate objects). Overall, we demonstrate, to the best of our knowledge, for the first time novel view and novel attribute re-rendering of scenes from a single video.

TrajeVAE -- Controllable Human Motion Generation from Trajectories

Apr 01, 2021

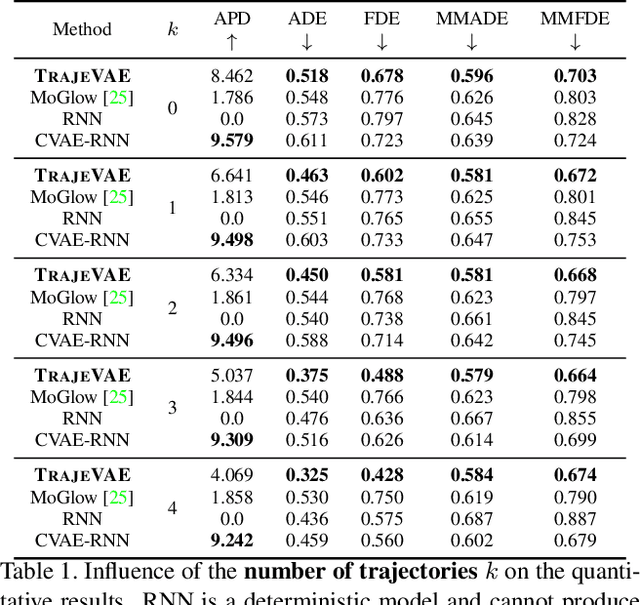

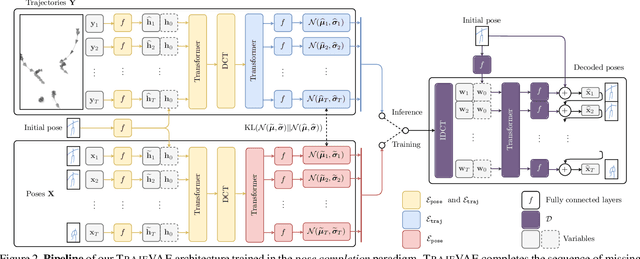

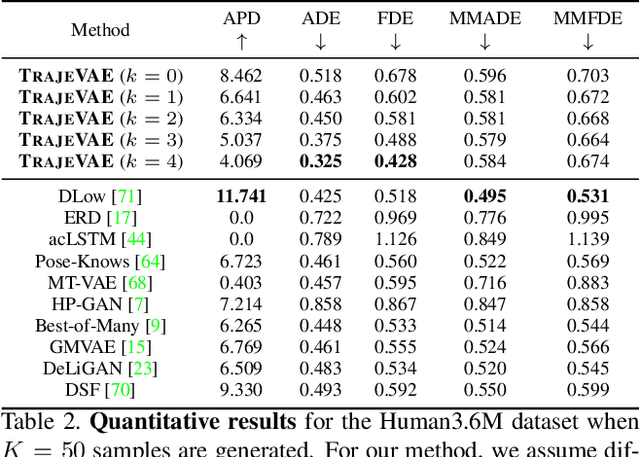

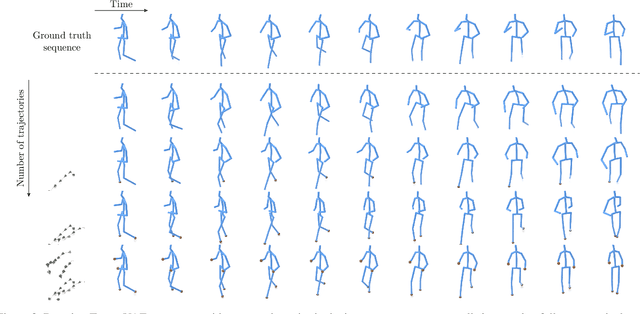

Abstract:The generation of plausible and controllable 3D human motion animations is a long-standing problem that often requires manual intervention by skilled artists. Existing machine learning approaches try to semi-automate this process by allowing the user to input partial information about the future movement. However, they are limited in two significant ways: they either base their pose prediction on prior frames with no additional control over the future poses or allow the user to input only a single trajectory, which precludes fine-grained control over the output. To mitigate these two issues, we reformulate the problem of future pose prediction into pose completion in space and time where trajectories are represented as poses with missing joints. We show that such a framework can generalize to other neural networks designed for future pose prediction. Once trained in this framework, a model is capable of predicting sequences from any number of trajectories. To leverage this notion, we propose a novel transformer-like architecture, TrajeVAE, that provides a versatile framework for 3D human animation. We demonstrate that TrajeVAE outperforms trajectory-based reference approaches and methods that base their predictions on past poses in terms of accuracy. We also show that it can predict reasonable future poses even if provided only with an initial pose.

Modeling 3D Surface Manifolds with a Locally Conditioned Atlas

Feb 11, 2021

Abstract:Recently proposed 3D object reconstruction methods represent a mesh with an atlas - a set of planar patches approximating the surface. However, their application in a real-world scenario is limited since the surfaces of reconstructed objects contain discontinuities, which degrade the quality of the final mesh. This is mainly caused by independent processing of individual patches, and in this work, we propose to mitigate this limitation by preserving local consistency around patch vertices. To that end, we introduce a Locally Conditioned Atlas (LoCondA), a framework for representing a 3D object hierarchically in a generative model. Firstly, the model maps a point cloud of an object into a sphere. Secondly, by leveraging a spherical prior, we enforce the mapping to be locally consistent on the sphere and on the target object. This way, we can sample a mesh quad on that sphere and project it back onto the object's manifold. With LoCondA, we can produce topologically diverse objects while ensuring that the quads can be stitched together. We show that the proposed approach provides structurally coherent reconstructions while producing meshes of quality comparable to the competitors.

Representing Point Clouds with Generative Conditional Invertible Flow Networks

Oct 07, 2020

Abstract:In this paper, we propose a simple yet effective method to represent point clouds as sets of samples drawn from a cloud-specific probability distribution. This interpretation matches intrinsic characteristics of point clouds: the number of points and their ordering within a cloud are not important as all points are drawn from the proximity of the object boundary. We propose to represent each cloud as a parameterized probability distribution defined by a generative neural network. Once trained, such a model provides a natural framework for point cloud manipulation operations, such as aligning a new cloud into a default spatial orientation. To exploit similarities between same-class objects and to improve model performance, we turn to weight sharing: networks that model densities of points belonging to objects in the same family share all parameters with the exception of a small, object-specific embedding vector. We show that these embedding vectors capture semantic relationships between objects. Our method leverages generative invertible flow networks to learn embeddings as well as to generate point clouds. Thanks to this formulation and contrary to similar approaches, we are able to train our model in an end-to-end fashion. As a result, our model offers competitive or superior quantitative results on benchmark datasets, while enabling unprecedented capabilities to perform cloud manipulation tasks, such as point cloud registration and regeneration, by a generative network.

UCSG-Net -- Unsupervised Discovering of Constructive Solid Geometry Tree

Jul 03, 2020

Abstract:The signed distance field (SDF) is a prominent implicit representation of 3D meshes. Methods based on this representation have achieved state-of-the-art 3D shape reconstruction quality. However, these methods struggle to reconstruct non-convex shapes. One remedy is to incorporate a constructive solid geometry (CSG) framework that represents a shape as a decomposition into primitives. It allows a 3D shape of high complexity and non-convexity to be represented by a simple tree of Boolean operations. Nevertheless, existing approaches are supervised and require the entire CSG parse tree to be given upfront during the training process. In contrast, we propose a model that extracts a CSG parse tree without any supervision - UCSG-Net. Our model predicts parameters of primitives and binarizes their SDF representation through a differentiable indicator function. This is achieved jointly with discovering the structure of a Boolean operators tree. The model dynamically selects which operator combination over primitives leads to a high-fidelity reconstruction. We evaluate our method on 2D and 3D autoencoding tasks. We show that the predicted parse tree representation is interpretable and can be used in CAD software.
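To make the idea of Boolean operations over SDF primitives concrete, here is a minimal 2D sketch. It is not UCSG-Net: it uses the standard hard `min`/`max` combinations instead of the differentiable indicator function and learned operator selection mentioned in the abstract, and all function names and shape parameters are hypothetical. It assumes the common convention that the SDF is negative inside a shape.

```python
import math


def sdf_circle(x, y, cx, cy, r):
    """Signed distance to a circle: negative inside, positive outside."""
    return math.hypot(x - cx, y - cy) - r


def sdf_box(x, y, cx, cy, half_w, half_h):
    """Signed distance to an axis-aligned box centered at (cx, cy)."""
    dx = abs(x - cx) - half_w
    dy = abs(y - cy) - half_h
    outside = math.hypot(max(dx, 0.0), max(dy, 0.0))
    inside = min(max(dx, dy), 0.0)
    return outside + inside


# Hard CSG operators on signed distances. The result always has the
# correct sign (inside/outside) but is in general only a bound on the
# true distance, not an exact SDF.
def union(a, b):
    return min(a, b)


def intersection(a, b):
    return max(a, b)


def difference(a, b):
    return max(a, -b)


def shape(x, y):
    """A tiny CSG tree: a 2x2 box with a circular hole of radius 0.5."""
    box = sdf_box(x, y, 0.0, 0.0, 1.0, 1.0)
    hole = sdf_circle(x, y, 0.0, 0.0, 0.5)
    return difference(box, hole)


def occupied(x, y):
    """Binarize the combined field: True where the point is in the shape."""
    return shape(x, y) <= 0.0
```

A point such as `(0.8, 0.0)` lies in the box but outside the hole, so `occupied` returns `True` there, while the center `(0.0, 0.0)` falls inside the hole and is reported as empty.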
