Shaobo Xia

Source-Free Domain Adaptation for Geospatial Point Cloud Semantic Segmentation

Jan 13, 2026

Abstract:Semantic segmentation of 3D geospatial point clouds is pivotal for remote sensing applications. However, variations in geographic patterns across regions and data acquisition strategies induce significant domain shifts, severely degrading the performance of deployed models. Existing domain adaptation methods typically rely on access to source-domain data. However, this requirement is rarely met due to data privacy concerns, regulatory policies, and data transmission limitations. This motivates the largely underexplored setting of source-free unsupervised domain adaptation (SFUDA), where only a pretrained model and unlabeled target-domain data are available. In this paper, we propose LoGo (Local-Global Dual-Consensus), a novel SFUDA framework specifically designed for geospatial point clouds. At the local level, we introduce a class-balanced prototype estimation module that abandons conventional global threshold filtering in favor of an intra-class independent anchor mining strategy. This ensures that robust feature prototypes can be generated even for sample-scarce tail classes, effectively mitigating the feature collapse caused by long-tailed distributions. At the global level, we introduce an optimal transport-based global distribution alignment module that formulates pseudo-label assignment as a global optimization problem. By enforcing global distribution constraints, this module effectively corrects the over-dominance of head classes inherent in local greedy assignments, preventing model predictions from being severely biased towards majority classes. Finally, we propose a dual-consistency pseudo-label filtering mechanism. This strategy retains only high-confidence pseudo-labels where local multi-augmented ensemble predictions align with global optimal transport assignments for self-training.
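The global assignment step and the dual-consistency filter described in this abstract can be illustrated with a minimal sketch. It assumes a Sinkhorn-Knopp style balancing of softmax predictions against a target class prior; the abstract does not say which optimal transport solver or prior LoGo uses, so the function names `sinkhorn_assign` and `dual_consensus_mask`, the uniform prior, and the iteration count are all hypothetical choices made for illustration.

```python
def sinkhorn_assign(probs, class_prior, n_iters=100, eps=1e-12):
    """Rescale soft predictions so per-class mass matches class_prior.

    probs: list of per-point class probability lists (assumed softmax outputs).
    class_prior: assumed target class proportions, summing to 1.
    Returns (hard_labels, balanced_plan).
    """
    n, k = len(probs), len(class_prior)
    q = [[max(p, eps) for p in row] for row in probs]
    for _ in range(n_iters):
        # Column step: total mass of each class equals its prior.
        for j in range(k):
            scale = class_prior[j] / sum(q[i][j] for i in range(n))
            for i in range(n):
                q[i][j] *= scale
        # Row step: every point carries the same mass 1/n.
        for i in range(n):
            scale = (1.0 / n) / sum(q[i])
            q[i] = [v * scale for v in q[i]]
    labels = [max(range(k), key=lambda j: row[j]) for row in q]
    return labels, q


def dual_consensus_mask(local_labels, global_labels):
    """Keep a pseudo-label only where the local and global views agree."""
    return [a == b for a, b in zip(local_labels, global_labels)]


# Four points that a greedy argmax would all assign to head class 0.
probs = [[0.9, 0.1], [0.8, 0.2], [0.6, 0.4], [0.55, 0.45]]
greedy = [max(range(2), key=lambda j: p[j]) for p in probs]
balanced, plan = sinkhorn_assign(probs, class_prior=[0.5, 0.5])
keep = dual_consensus_mask(greedy, balanced)
```

In this toy case the balanced assignment moves the two least confident points to the tail class, and the consensus mask keeps only the two points on which both views agree.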

APCoTTA: Continual Test-Time Adaptation for Semantic Segmentation of Airborne LiDAR Point Clouds

May 15, 2025

Abstract:Airborne laser scanning (ALS) point cloud segmentation is a fundamental task for large-scale 3D scene understanding. In real-world applications, models are typically fixed after training. However, domain shifts caused by changes in the environment, sensor types, or sensor degradation often lead to a decline in model performance. Continual Test-Time Adaptation (CTTA) offers a solution by adapting a source-pretrained model to evolving, unlabeled target domains. Despite its potential, research on ALS point clouds remains limited, facing challenges such as the absence of standardized datasets and the risk of catastrophic forgetting and error accumulation during prolonged adaptation. To tackle these challenges, we propose APCoTTA, the first CTTA method tailored for ALS point cloud semantic segmentation. We propose a dynamic trainable layer selection module. This module utilizes gradient information to select low-confidence layers for training, and the remaining layers are kept frozen, mitigating catastrophic forgetting. To further reduce error accumulation, we propose an entropy-based consistency loss. By filtering out unreliable samples based on entropy, we apply the consistency loss only to reliable samples, enhancing model stability. In addition, we propose a random parameter interpolation mechanism, which randomly blends parameters from the selected trainable layers with those of the source model. This approach helps balance target adaptation and source knowledge retention, further alleviating forgetting. Finally, we construct two benchmarks, ISPRSC and H3DC, to address the lack of CTTA benchmarks for ALS point cloud segmentation. Experimental results demonstrate that APCoTTA achieves the best performance on both benchmarks, with mIoU improvements of approximately 9% and 14% over direct inference. The new benchmarks and code are available at https://github.com/Gaoyuan2/APCoTTA.
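Two of the ideas in this abstract, entropy-based sample filtering and random parameter interpolation, can be sketched in a few lines. This is an illustration under stated assumptions rather than the APCoTTA implementation: the entropy threshold, the per-parameter blend probability `blend_prob`, the mixing weight `alpha`, and the function names are all hypothetical, and parameters are represented as flat lists of floats for simplicity.

```python
import math
import random


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def reliable_mask(batch_probs, threshold):
    """Mark samples whose prediction entropy is below an assumed threshold."""
    return [entropy(p) < threshold for p in batch_probs]


def interpolate_params(adapted, source, blend_prob=0.1, alpha=0.5, rng=None):
    """Randomly pull some adapted parameters back toward the source model.

    Each parameter is blended with probability blend_prob (an assumption);
    blended values are alpha * adapted + (1 - alpha) * source.
    """
    rng = rng or random.Random()
    blended = []
    for a, s in zip(adapted, source):
        if rng.random() < blend_prob:
            blended.append(alpha * a + (1.0 - alpha) * s)
        else:
            blended.append(a)
    return blended


mask = reliable_mask([[0.9, 0.1], [0.5, 0.5]], threshold=0.5)
all_blended = interpolate_params([2.0, 4.0], [0.0, 0.0], blend_prob=1.0)
none_blended = interpolate_params([2.0, 4.0], [0.0, 0.0], blend_prob=0.0)
```

A consistency loss would then be averaged over the samples where `mask` is true, so that uncertain predictions do not feed errors back into the model.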

NoKSR: Kernel-Free Neural Surface Reconstruction via Point Cloud Serialization

Feb 19, 2025

Abstract:We present a novel approach to large-scale point cloud surface reconstruction by developing an efficient framework that converts an irregular point cloud into a signed distance field (SDF). Our backbone builds upon recent transformer-based architectures (i.e., PointTransformerV3), which serialize the point cloud into a locality-preserving sequence of tokens. We efficiently predict the SDF value at a point by aggregating nearby tokens, where fast approximate neighbors can be retrieved thanks to the serialization. We serialize the point cloud at different levels/scales, and non-linearly aggregate a feature to predict the SDF value. We show that aggregating across multiple scales is critical to overcome the approximations introduced by the serialization (i.e., false negatives in the neighborhood). Our framework sets a new state-of-the-art in terms of accuracy and efficiency (better or similar performance with half the latency of the best prior method, coupled with a simpler implementation), particularly on outdoor datasets where sparse-grid methods have shown limited performance.
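To make the idea of serialization concrete, the sketch below orders points along a Z-order (Morton) curve, one of the space-filling curves commonly used for this purpose. It is only an illustration: the abstract does not specify which curve or grid resolution NoKSR uses, the helper names `morton3d` and `serialize` are hypothetical, and coordinates are assumed to be non-negative.

```python
def morton3d(ix, iy, iz, bits=10):
    """Interleave the low `bits` bits of three non-negative grid indices."""
    code = 0
    for b in range(bits):
        code |= ((ix >> b) & 1) << (3 * b)
        code |= ((iy >> b) & 1) << (3 * b + 1)
        code |= ((iz >> b) & 1) << (3 * b + 2)
    return code


def serialize(points, cell_size, bits=10):
    """Return point indices sorted by the Morton code of their grid cell.

    Points that are close in the sorted sequence tend to be close in space,
    which is what makes fast approximate neighbor lookup possible.
    """
    keys = []
    for idx, (x, y, z) in enumerate(points):
        cell = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        keys.append((morton3d(*cell, bits=bits), idx))
    return [idx for _, idx in sorted(keys)]


points = [(2.5, 0.0, 0.0), (0.1, 0.1, 0.1), (1.2, 0.0, 0.0)]
order_fine = serialize(points, cell_size=1.0)
order_coarse = serialize(points, cell_size=4.0)
```

Calling `serialize` with several values of `cell_size` yields orderings at different scales. Two spatial neighbors can land far apart in one ordering (a false negative), which is why combining several scales helps.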

Diffusion Models Meet Remote Sensing: Principles, Methods, and Perspectives

Apr 13, 2024

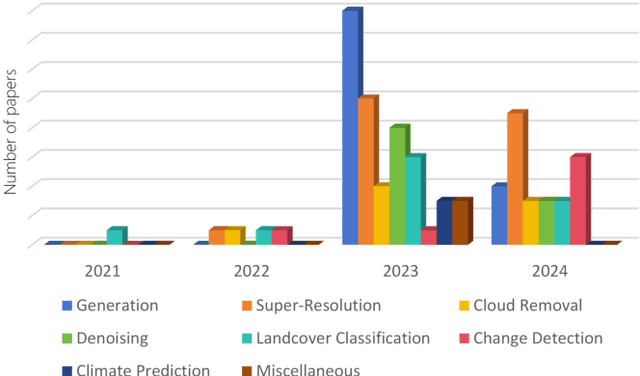

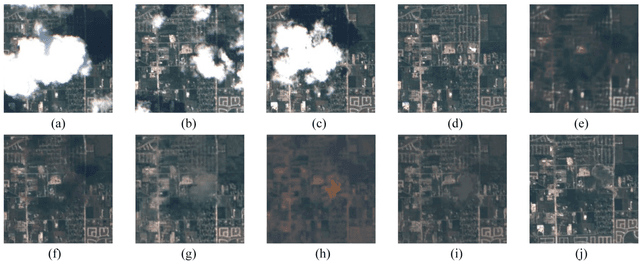

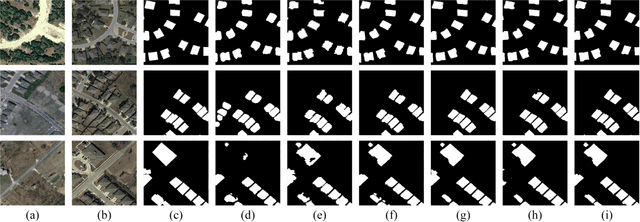

Abstract:As a newly emerging advance in deep generative models, diffusion models have achieved state-of-the-art results in many fields, including computer vision, natural language processing, and molecule design. The remote sensing community has also noticed the powerful ability of diffusion models and quickly applied them to a variety of tasks for image processing. Given the rapid increase in research on diffusion models in the field of remote sensing, it is necessary to conduct a comprehensive review of existing diffusion model-based remote sensing papers, to help researchers recognize the potential of diffusion models and provide some directions for further exploration. Specifically, this paper first introduces the theoretical background of diffusion models, and then systematically reviews the applications of diffusion models in remote sensing, including image generation, enhancement, and interpretation. Finally, the limitations of existing remote sensing diffusion models and worthy research directions for further exploration are discussed and summarized.

Densify Your Labels: Unsupervised Clustering with Bipartite Matching for Weakly Supervised Point Cloud Segmentation

Dec 11, 2023

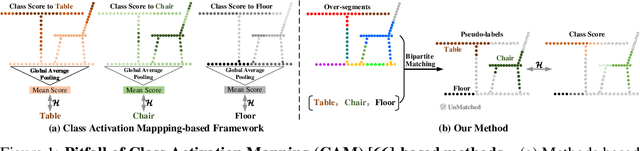

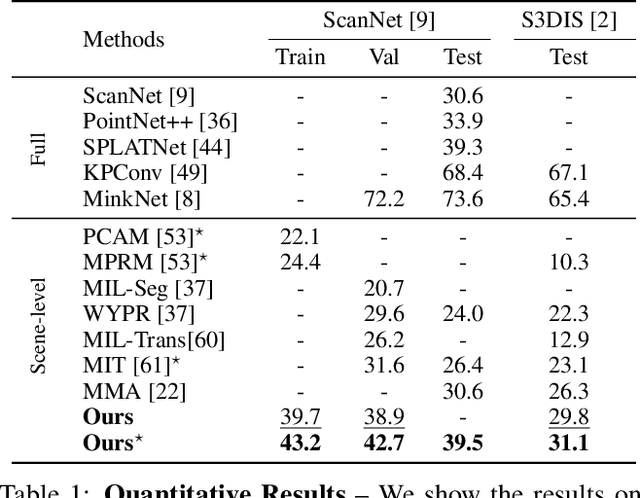

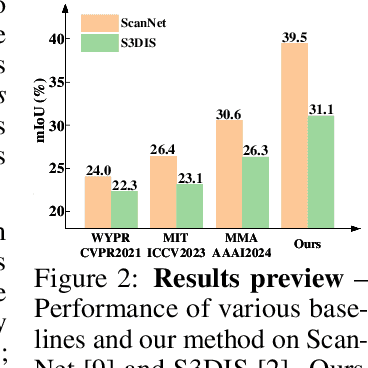

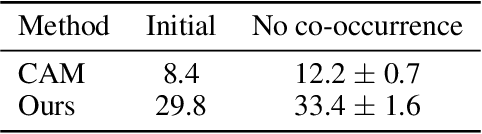

Abstract:We propose a weakly supervised semantic segmentation method for point clouds that predicts "per-point" labels from just "whole-scene" annotations while achieving the performance of recent fully supervised approaches. Our core idea is to propagate the scene-level labels to each point in the point cloud by creating pseudo labels in a conservative way. Specifically, we over-segment point cloud features via unsupervised clustering and associate scene-level labels with clusters through bipartite matching, thus propagating scene labels only to the most relevant clusters, leaving the rest to be guided solely via unsupervised clustering. We empirically demonstrate that over-segmentation and bipartite assignment play a crucial role. We evaluate our method on the ScanNet and S3DIS datasets, outperforming the state of the art, and demonstrate that we can achieve results comparable to fully supervised methods.
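The matching step can be illustrated with a small sketch that assigns each scene-level label to a distinct cluster so that the total affinity is maximal, leaving surplus clusters unlabeled. For brevity it searches all assignments by brute force, which is only practical for tiny inputs; a real system would use a dedicated assignment solver. The affinity values and the function name `match_labels_to_clusters` are hypothetical, and the abstract does not state how affinities are computed.

```python
from itertools import permutations


def match_labels_to_clusters(affinity):
    """Find the one-to-one label-to-cluster matching with maximal affinity.

    affinity[l][c] is an assumed score between scene label l and cluster c.
    There must be at least as many clusters as labels (over-segmentation).
    Returns a dict mapping label index to cluster index.
    """
    n_labels, n_clusters = len(affinity), len(affinity[0])
    best_score, best_match = float("-inf"), None
    for clusters in permutations(range(n_clusters), n_labels):
        score = sum(affinity[l][c] for l, c in enumerate(clusters))
        if score > best_score:
            best_score, best_match = score, clusters
    return dict(enumerate(best_match))


# Two scene labels, three clusters; a greedy pick for label 0 would take
# cluster 0 and leave label 1 with a poor match.
affinity = [
    [0.90, 0.80, 0.10],
    [0.85, 0.10, 0.20],
]
matching = match_labels_to_clusters(affinity)
unlabeled = sorted(set(range(3)) - set(matching.values()))
```

Here the joint optimum gives label 0 its second-best cluster so that label 1 can take cluster 0, and the remaining cluster receives no pseudo label, mirroring the conservative propagation described above.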

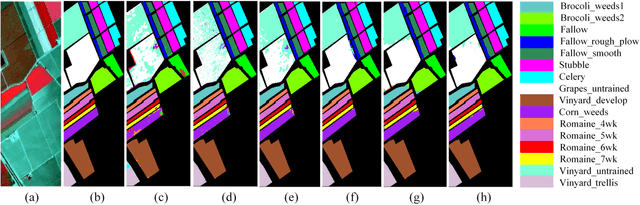

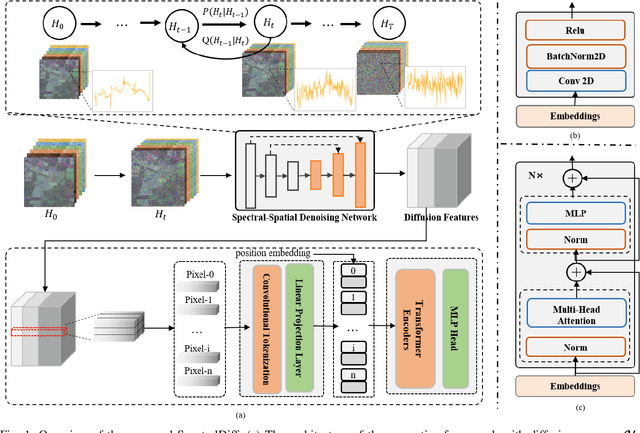

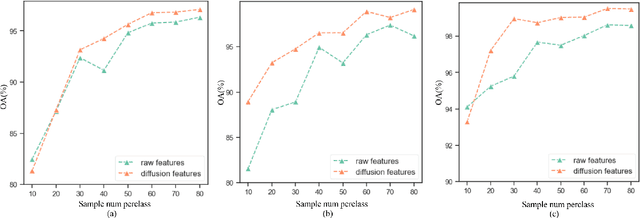

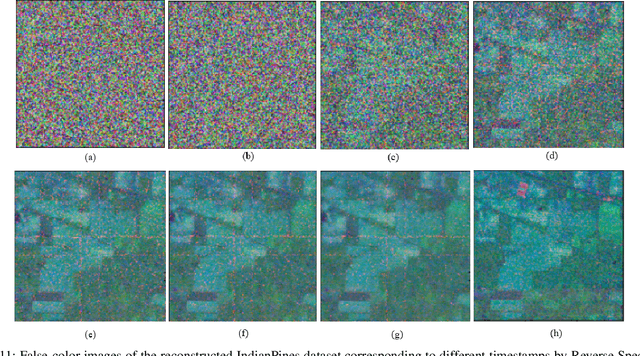

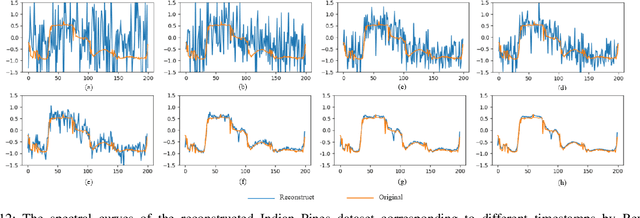

SpectralDiff: Hyperspectral Image Classification with Spectral-Spatial Diffusion Models

Apr 12, 2023

Abstract:Hyperspectral image (HSI) classification is an important topic in the field of remote sensing, and has a wide range of applications in Earth science. HSIs contain hundreds of continuous bands, which are characterized by high dimension and high correlation between adjacent bands. The high dimension and redundancy of HSI data bring great difficulties to HSI classification. In recent years, a large number of HSI feature extraction and classification methods based on deep learning have been proposed. However, their ability to model the global relationships among samples in both spatial and spectral domains is still limited. In order to solve this problem, an HSI classification method with spectral-spatial diffusion models is proposed. The proposed method realizes the reconstruction of spectral-spatial distribution of the training samples with the forward and reverse spectral-spatial diffusion process, thus modeling the global spatial-spectral relationship between samples. Then, we use the spectral-spatial denoising network of the reverse process to extract the unsupervised diffusion features. Features extracted by the spectral-spatial diffusion models can achieve cross-sample perception from the reconstructed distribution of the training samples, thus obtaining better classification performance. Experiments on three public HSI datasets show that the proposed method can achieve better performance than the state-of-the-art methods. The source code and the pre-trained spectral-spatial diffusion model will be publicly available at https://github.com/chenning0115/SpectralDiff.
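The forward diffusion process mentioned in this abstract can be sketched using the standard closed form from denoising diffusion models, where a noisy sample at step t is a weighted mix of the clean input and Gaussian noise. The linear noise schedule, its endpoints, and the function names are assumptions for illustration; the abstract does not state the schedule SpectralDiff uses, and the input here is a plain list of values rather than a spectral-spatial patch.

```python
import math


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear schedule."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def forward_noise(x0, noise, alpha_bar):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    signal = math.sqrt(alpha_bar)
    sigma = math.sqrt(1.0 - alpha_bar)
    return [signal * x + sigma * e for x, e in zip(x0, noise)]


alpha_bars = linear_alpha_bars(1000)
no_noise = forward_noise([1.0, 2.0], [0.0, 0.0], alpha_bar=0.25)
pure_noise = forward_noise([0.0, 0.0], [1.0, -1.0], alpha_bar=0.36)
```

A denoising network is trained to undo this corruption, and its intermediate activations are what a method of this kind would reuse as unsupervised features.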

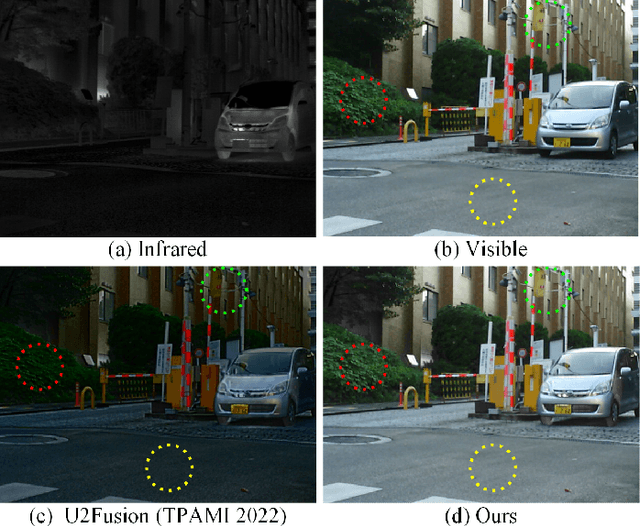

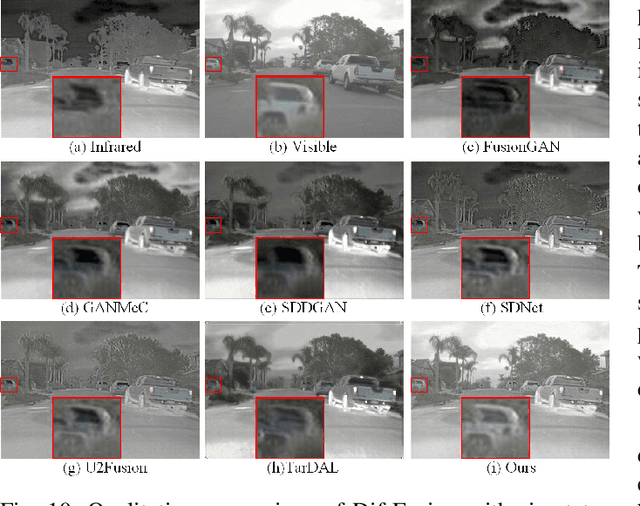

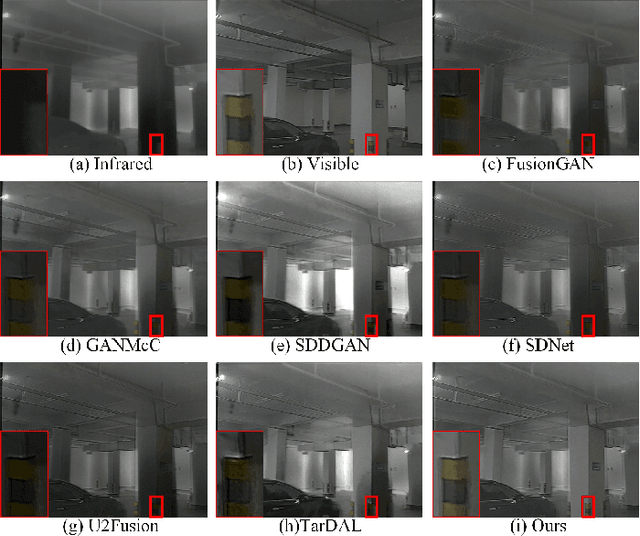

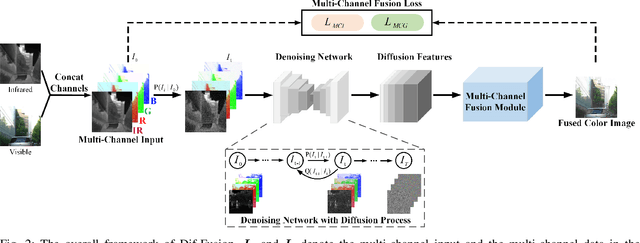

Dif-Fusion: Towards High Color Fidelity in Infrared and Visible Image Fusion with Diffusion Models

Jan 19, 2023

Abstract:Color plays an important role in human visual perception, reflecting the spectrum of objects. However, the existing infrared and visible image fusion methods rarely explore how to handle multi-spectral/channel data directly and achieve high color fidelity. This paper addresses the above issue by proposing a novel method with diffusion models, termed Dif-Fusion, to generate the distribution of the multi-channel input data, which increases the ability of multi-source information aggregation and the fidelity of colors. Specifically, instead of converting multi-channel images into single-channel data as in existing fusion methods, we create the multi-channel data distribution with a denoising network in a latent space with forward and reverse diffusion processes. Then, we use the denoising network to extract the multi-channel diffusion features with both visible and infrared information. Finally, we feed the multi-channel diffusion features to the multi-channel fusion module to directly generate the three-channel fused image. To retain the texture and intensity information, we propose multi-channel gradient loss and intensity loss. Along with the current evaluation metrics for measuring texture and intensity fidelity, we introduce a new evaluation metric to quantify color fidelity. Extensive experiments indicate that our method is more effective than other state-of-the-art image fusion methods, especially in color fidelity.

Uncertainty Quantification for Hyperspectral Image Denoising Frameworks based on Low-rank Matrix Approximation

Apr 23, 2020

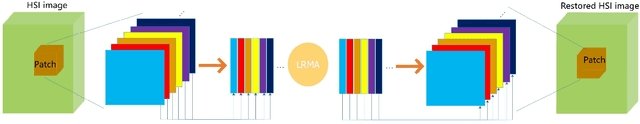

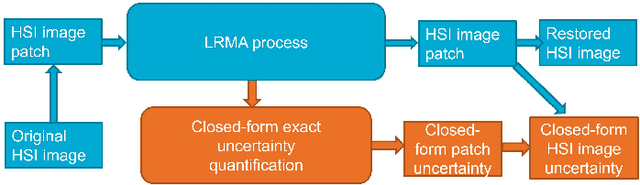

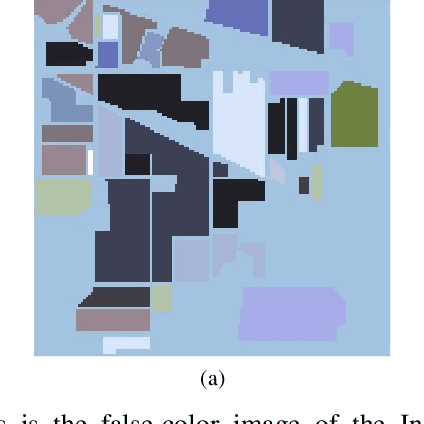

Abstract:Low-rank matrix approximation (LRMA) is a technique widely applied in hyperspectral image (HSI) denoising or completion. The uncertainty quantification of the estimated restored HSI, however, has not been addressed in previous research. The lack of an uncertainty estimate for the product significantly limits applications such as multi-source or multi-scale data fusion, data assimilation, and product confidence quantification, since these applications require an accurate way to describe the statistical distributions of the source data. To address this issue, we propose a prior-free closed-form element-wise uncertainty quantification method for LRMA-based HSI restoration. The proposed approach only requires the uncertainty of the observed HSI and can yield uncertainty in a limited amount of time, with time complexity similar to that of the LRMA technique. We conduct extensive experiments to validate that the closed-form uncertainty describes the estimation accurately, is robust to random impulse noise at a ratio of at least 10%, and takes only around 10-20% of the running time of LRMA. All the experiments indicate that the proposed closed-form uncertainty quantification method is better suited to deployment in real-world applications than the baseline Monte-Carlo tests.

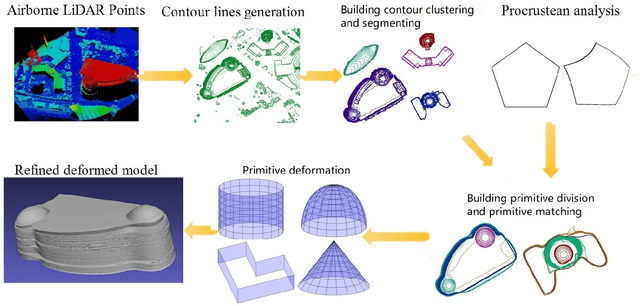

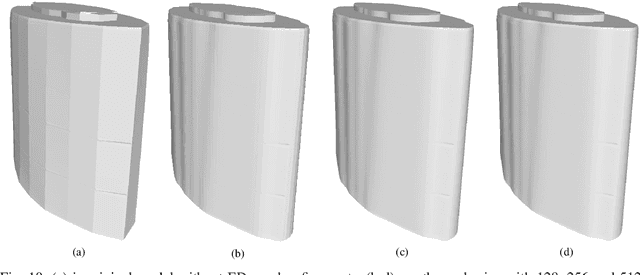

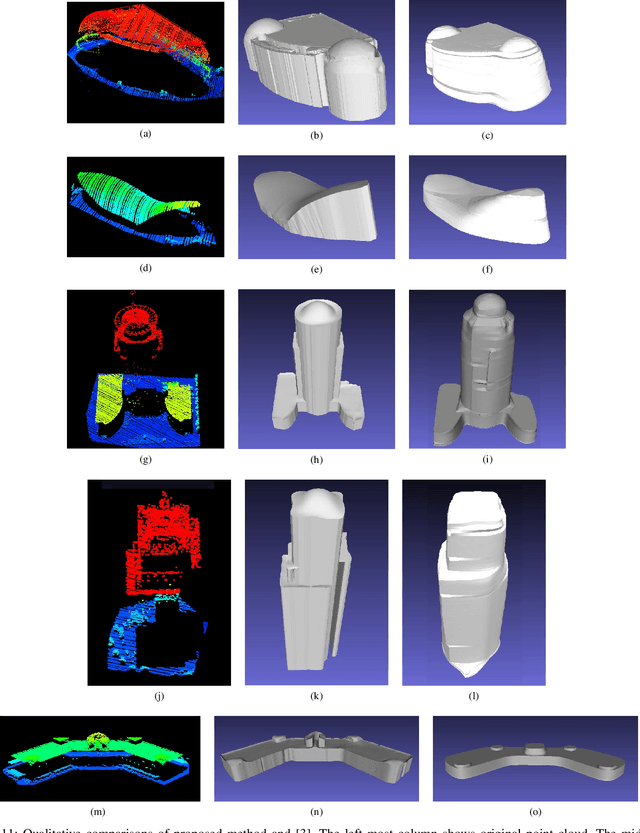

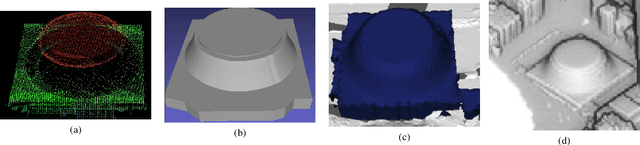

Curved Buildings Reconstruction from Airborne LiDAR Data by Matching and Deforming Geometric Primitives

Mar 22, 2020

Abstract:Airborne LiDAR (Light Detection and Ranging) data is widely applied in building reconstruction, with studies reporting success in typical buildings. However, the reconstruction of curved buildings remains an open research problem. To this end, we propose a new framework for curved building reconstruction via assembling and deforming geometric primitives. The input LiDAR point cloud is first converted into contours where individual buildings are identified. After recognizing geometric units (primitives) from building contours, we get initial models by matching basic geometric primitives to these primitives. To polish assembly models, we employ a warping field for model refinements. Specifically, an embedded deformation (ED) graph is constructed via downsampling the initial model. Then, the point-to-model displacements are minimized by adjusting node parameters in the ED graph based on our objective function. The presented framework is validated on several highly curved buildings collected by various LiDAR sensors in different cities. The experimental results, as well as accuracy comparisons, demonstrate the advantages and effectiveness of our method. The new insight attributes to an efficient reconstruction manner. Moreover, we show that the primitive-based framework significantly reduces the data storage to 10-20 percent of classical mesh models.
