TrajeVAE -- Controllable Human Motion Generation from Trajectories

Paper and Code

Apr 01, 2021

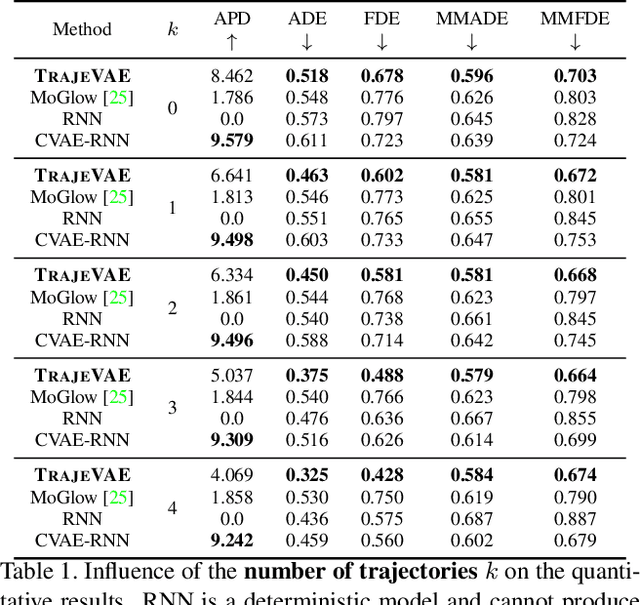

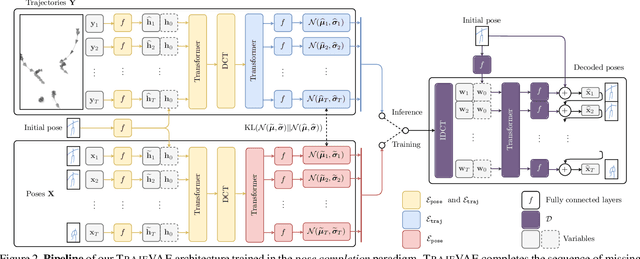

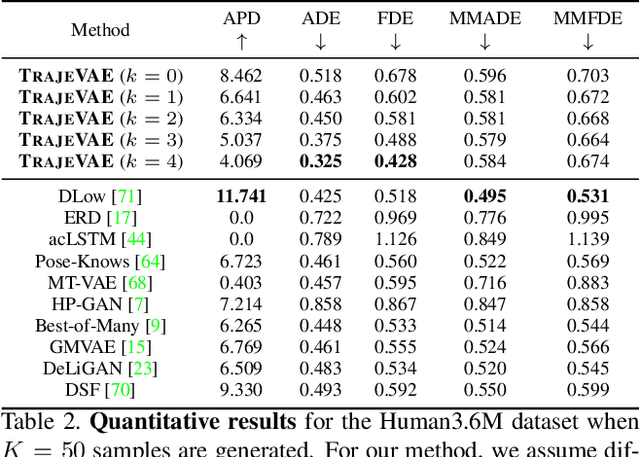

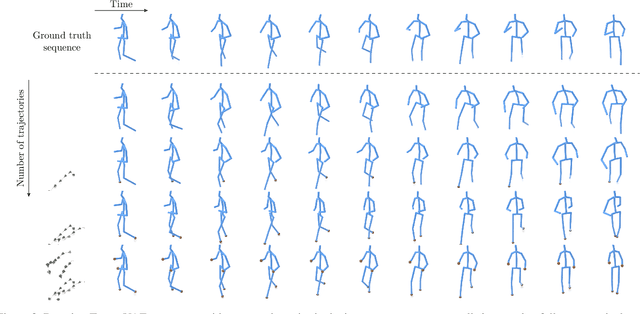

Generating plausible and controllable 3D human motion animations is a long-standing problem that often requires manual intervention by skilled artists. Existing machine learning approaches try to semi-automate this process by letting the user input partial information about the future movement. However, they are limited in two significant ways: they either base their pose prediction on past frames, with no additional control over the future poses, or they allow the user to input only a single trajectory, which precludes fine-grained control over the output. To mitigate these two issues, we reformulate the problem of future pose prediction as pose completion in space and time, where trajectories are represented as poses with missing joints. We show that such a framework can generalize to other neural networks designed for future pose prediction. Once trained in this framework, a model is capable of predicting sequences from any number of trajectories. To leverage this notion, we propose a novel transformer-like architecture, TrajeVAE, that provides a versatile framework for 3D human animation. We demonstrate that TrajeVAE is more accurate than both trajectory-based reference approaches and methods that base their predictions on past poses. We also show that it can predict reasonable future poses even if provided only with an initial pose.
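The pose-completion view described above can be illustrated with a small sketch. It only shows the idea of representing trajectories as poses with missing joints. The function names, the use of `None` to mark a missing joint, and the single seed frame are assumptions made for illustration, not the encoding used by TrajeVAE.

```python
from typing import List, Optional, Sequence, Tuple

Joint = Tuple[float, float, float]   # (x, y, z) position of one joint
Frame = List[Optional[Joint]]        # None marks a missing joint (assumed encoding)


def mask_to_trajectories(
    motion: Sequence[Sequence[Joint]],
    trajectory_joints: Sequence[int],
    num_seed_frames: int = 1,
) -> List[Frame]:
    """Keep full poses for the seed frames; in later frames keep only the
    joints whose trajectories are given and mark every other joint missing."""
    keep = set(trajectory_joints)
    masked: List[Frame] = []
    for t, frame in enumerate(motion):
        if t < num_seed_frames:
            masked.append(list(frame))
        else:
            masked.append([j if i in keep else None for i, j in enumerate(frame)])
    return masked


def observed_fraction(masked: Sequence[Frame]) -> float:
    """Share of joint slots that are observed rather than missing."""
    total = sum(len(frame) for frame in masked)
    seen = sum(1 for frame in masked for joint in frame if joint is not None)
    return seen / total


# Toy motion: 3 frames, 4 joints per frame.
motion = [[(float(t), float(j), 0.0) for j in range(4)] for t in range(3)]

# Two trajectories (joints 0 and 3) plus the initial pose.
model_input = mask_to_trajectories(motion, trajectory_joints=[0, 3])

# No trajectories at all: only the initial pose is observed.
only_initial_pose = mask_to_trajectories(motion, trajectory_joints=[])
```

Under this sketch, a completion model would receive `model_input` and fill in every `None` entry. Changing `trajectory_joints` varies the number of trajectories without changing the input format, which is the property the abstract relies on.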
