Juntong Peng

QuantV2X: A Fully Quantized Multi-Agent System for Cooperative Perception

Sep 03, 2025

Abstract: Cooperative perception through Vehicle-to-Everything (V2X) communication offers significant potential for enhancing vehicle perception by mitigating occlusions and expanding the field of view. However, past research has predominantly focused on improving accuracy metrics without addressing the crucial system-level considerations of efficiency, latency, and real-world deployability. Notably, most existing systems rely on full-precision models, which incur high computational and transmission costs, making them impractical for real-time operation in resource-constrained environments. In this paper, we introduce QuantV2X, the first fully quantized multi-agent system designed specifically for efficient and scalable deployment of multi-modal, multi-agent V2X cooperative perception. QuantV2X introduces a unified end-to-end quantization strategy across both neural network models and transmitted message representations that simultaneously reduces computational load and transmission bandwidth. Remarkably, despite operating under low-bit constraints, QuantV2X achieves accuracy comparable to full-precision systems. More importantly, when evaluated under deployment-oriented metrics, QuantV2X reduces system-level latency by 3.2× and achieves a +9.5 improvement in mAP30 over full-precision baselines. Furthermore, QuantV2X scales more effectively, enabling larger and more capable models to fit within strict memory budgets. These results highlight the viability of a fully quantized multi-agent intermediate fusion system for real-world deployment. The system will be publicly released to promote research in this field: https://github.com/ucla-mobility/QuantV2X.
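To make the idea of quantizing a transmitted message concrete, the following is a minimal sketch of generic uniform min-max quantization applied to a flat list of feature values. It is an illustration only and is not taken from QuantV2X: the function names `quantize_message` and `dequantize_message`, the 8-bit default, and the flat-list message layout are all assumptions made here.

```python
from typing import List, Tuple


def quantize_message(
    features: List[float], num_bits: int = 8
) -> Tuple[List[int], float, float]:
    """Map floats to integer codes in [0, 2**num_bits - 1] (hypothetical helper)."""
    qmax = (1 << num_bits) - 1
    lo, hi = min(features), max(features)
    # Step size between adjacent codes; fall back to 1.0 for a constant message.
    scale = (hi - lo) / qmax if hi > lo else 1.0
    # Round to the nearest code and clamp to guard against floating-point drift.
    codes = [min(qmax, max(0, round((x - lo) / scale))) for x in features]
    return codes, scale, lo


def dequantize_message(codes: List[int], scale: float, offset: float) -> List[float]:
    """Recover approximate floats from integer codes (hypothetical helper)."""
    return [offset + c * scale for c in codes]


message = [-1.0, 0.0, 0.5, 1.0]
codes, scale, offset = quantize_message(message)
restored = dequantize_message(codes, scale, offset)
```

Under these assumptions each value is sent as one byte instead of a four-byte float32, roughly a 4× reduction in payload plus two floats of metadata, and the reconstruction error per value is at most half the step size `scale`.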

ViLaD: A Large Vision Language Diffusion Framework for End-to-End Autonomous Driving

Aug 18, 2025

Abstract: End-to-end autonomous driving systems built on Vision Language Models (VLMs) have shown significant promise, yet their reliance on autoregressive architectures introduces limitations for real-world applications. The sequential, token-by-token generation process of these models results in high inference latency and precludes bidirectional reasoning, making them unsuitable for dynamic, safety-critical environments. To overcome these challenges, we introduce ViLaD, a novel Large Vision Language Diffusion (LVLD) framework for end-to-end autonomous driving that represents a paradigm shift. ViLaD leverages a masked diffusion model that enables parallel generation of entire driving decision sequences, significantly reducing computational latency. Moreover, its architecture supports bidirectional reasoning, allowing the model to consider both past and future simultaneously, and supports progressive easy-first generation to iteratively improve decision quality. We conduct comprehensive experiments on the nuScenes dataset, where ViLaD outperforms state-of-the-art autoregressive VLM baselines in both planning accuracy and inference speed, while achieving a near-zero failure rate. Furthermore, we demonstrate the framework's practical viability through a real-world deployment on an autonomous vehicle for an interactive parking task, confirming its effectiveness and soundness for practical applications.

Communication-Efficient Multi-Agent 3D Detection via Hybrid Collaboration

Aug 09, 2025

Abstract: Collaborative 3D detection can substantially boost detection performance by allowing agents to exchange complementary information. It inherently results in a fundamental trade-off between detection performance and communication bandwidth. To tackle this bottleneck, we propose a novel hybrid collaboration that adaptively integrates two types of communication messages: perceptual outputs, which are compact, and raw observations, which offer richer information. This approach focuses on two key aspects: i) integrating complementary information from two message types and ii) prioritizing the most critical data within each type. By adaptively selecting the most critical set of messages, it ensures optimal perceptual information and adaptability, effectively meeting the demands of diverse communication scenarios. Building on this hybrid collaboration, we present HyComm, a communication-efficient LiDAR-based collaborative 3D detection system. HyComm has two main benefits: i) it facilitates adaptable compression rates for messages, addressing various communication requirements, and ii) it uses standardized data formats for messages. This ensures they are independent of specific detection models, fostering adaptability across different agent configurations. To evaluate HyComm, we conduct experiments on both real-world and simulation datasets: DAIR-V2X and OPV2V. HyComm consistently outperforms previous methods and achieves a superior performance-bandwidth trade-off regardless of whether agents use the same or varied detection models. It reduces communication volume by more than 2,006× while still outperforming Where2comm on DAIR-V2X in terms of AP50. The related code will be released.

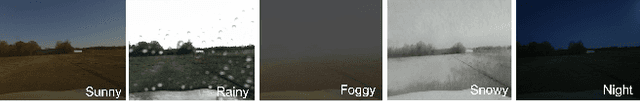

A Hierarchical Test Platform for Vision Language Model (VLM)-Integrated Real-World Autonomous Driving

Jun 17, 2025

Abstract: Vision-Language Models (VLMs) have demonstrated notable promise in autonomous driving by offering the potential for multimodal reasoning through pretraining on extensive image-text pairs. However, adapting these models from broad web-scale data to the safety-critical context of driving presents a significant challenge, commonly referred to as domain shift. Existing simulation-based and dataset-driven evaluation methods, although valuable, often fail to capture the full complexity of real-world scenarios and cannot easily accommodate repeatable closed-loop testing with flexible scenario manipulation. In this paper, we introduce a hierarchical real-world test platform specifically designed to evaluate VLM-integrated autonomous driving systems. Our approach includes a modular, low-latency on-vehicle middleware that allows seamless incorporation of various VLMs, a clearly separated perception-planning-control architecture that can accommodate both VLM-based and conventional modules, and a configurable suite of real-world testing scenarios on a closed track that facilitates controlled yet authentic evaluations. We demonstrate the effectiveness of the proposed platform's testing and evaluation ability with a case study involving a VLM-enabled autonomous vehicle, highlighting how our test framework supports robust experimentation under diverse conditions.

Generative AI for Autonomous Driving: Frontiers and Opportunities

May 13, 2025

Abstract: Generative Artificial Intelligence (GenAI) constitutes a transformative technological wave that reconfigures industries through its unparalleled capabilities for content creation, reasoning, planning, and multimodal understanding. This revolutionary force offers the most promising path yet toward solving one of engineering's grandest challenges: achieving reliable, fully autonomous driving, particularly the pursuit of Level 5 autonomy. This survey delivers a comprehensive and critical synthesis of the emerging role of GenAI across the autonomous driving stack. We begin by distilling the principles and trade-offs of modern generative modeling, encompassing VAEs, GANs, Diffusion Models, and Large Language Models (LLMs). We then map their frontier applications in image, LiDAR, trajectory, occupancy, and video generation, as well as LLM-guided reasoning and decision making. We categorize practical applications, such as synthetic data workflows, end-to-end driving strategies, high-fidelity digital twin systems, smart transportation networks, and cross-domain transfer to embodied AI. We identify key obstacles and possibilities such as comprehensive generalization across rare cases, evaluation and safety checks, budget-limited implementation, regulatory compliance, ethical concerns, and environmental effects, while proposing research plans across theoretical assurances, trust metrics, transport integration, and socio-technical influence. By unifying these threads, the survey provides a forward-looking reference for researchers, engineers, and policymakers navigating the convergence of generative AI and advanced autonomous mobility. An actively maintained repository of cited works is available at https://github.com/taco-group/GenAI4AD.

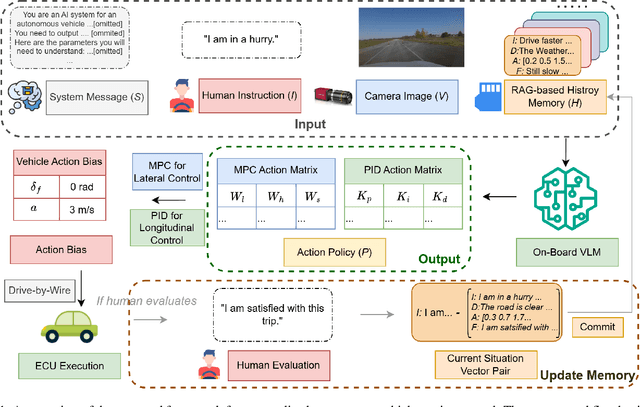

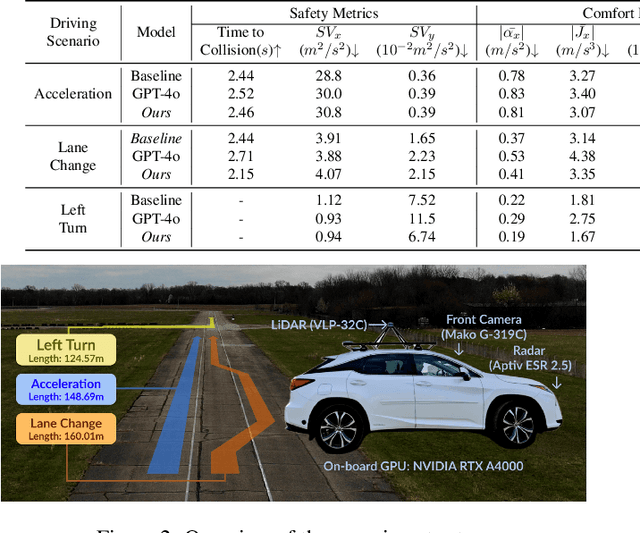

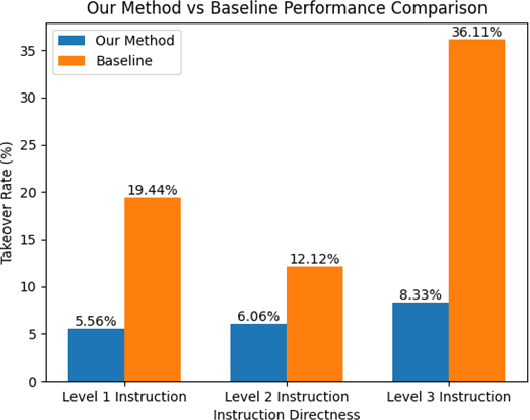

On-Board Vision-Language Models for Personalized Autonomous Vehicle Motion Control: System Design and Real-World Validation

Nov 17, 2024

Abstract: Personalized driving refers to an autonomous vehicle's ability to adapt its driving behavior or control strategies to match individual users' preferences and driving styles while maintaining safety and comfort standards. However, existing works either fail to capture every individual preference precisely or become computationally inefficient as the user base expands. Vision-Language Models (VLMs) offer a promising solution on this front through their natural language understanding and scene reasoning capabilities. In this work, we propose a lightweight yet effective on-board VLM framework that provides low-latency personalized driving performance while maintaining strong reasoning capabilities. Our solution incorporates a Retrieval-Augmented Generation (RAG)-based memory module that enables continuous learning of individual driving preferences through human feedback. Through comprehensive real-world vehicle deployment and experiments, our system has demonstrated the ability to provide safe, comfortable, and personalized driving experiences across various scenarios and to reduce takeover rates by up to 76.9%. To the best of our knowledge, this work represents the first end-to-end VLM-based motion control system in real-world autonomous vehicles.

Compatible Transformer for Irregularly Sampled Multivariate Time Series

Oct 17, 2023

Abstract: To analyze multivariate time series, most previous methods assume regular subsampling of time series, where the interval between adjacent measurements and the number of samples remain unchanged. In practice, data collection systems can produce irregularly sampled time series due to sensor failures and interventions. However, existing methods designed for regularly sampled multivariate time series cannot directly handle irregularity owing to misalignment along both temporal and variate dimensions. To fill this gap, we propose Compatible Transformer (CoFormer), a transformer-based encoder to achieve comprehensive temporal-interaction feature learning for each individual sample in irregular multivariate time series. In CoFormer, we view each sample as a unique variate-time point and leverage intra-variate/inter-variate attentions to learn sample-wise temporal/interaction features based on intra-variate/inter-variate neighbors. With CoFormer as the core, we can analyze irregularly sampled multivariate time series for many downstream tasks, including classification and prediction. We conduct extensive experiments on three real-world datasets and validate that the proposed CoFormer significantly and consistently outperforms existing methods.

Graph-based Decentralized Task Allocation for Multi-Robot Target Localization

Sep 16, 2023

Abstract: We introduce a new approach to address the task allocation problem in a system of heterogeneous robots comprising Unmanned Ground Vehicles (UGVs) and Unmanned Aerial Vehicles (UAVs). The proposed model, GATAR (Graph Attention Task AllocatoR), aggregates information from neighbors in the multi-robot system, with the aim of achieving joint optimality in the target localization efficiency. Being decentralized, our method is highly robust and adaptable to situations where collaborators may change over time, ensuring the continuity of the mission. We also propose heterogeneity-aware preprocessing to let all the different types of robots collaborate with a uniform model. The experimental results demonstrate the effectiveness and scalability of the proposed approach in a range of simulated scenarios. The model can allocate targets' positions close to the expert algorithm's result, with a median spatial gap of less than a unit length. This approach can be used in multi-robot systems deployed in search and rescue missions, environmental monitoring, and disaster response.
