Juho Kim

DiscoverLLM: From Executing Intents to Discovering Them

Feb 03, 2026

Abstract:To handle ambiguous and open-ended requests, Large Language Models (LLMs) are increasingly trained to interact with users to surface intents they have not yet expressed (e.g., ask clarification questions). However, users are often ambiguous because they have not yet formed their intents: they must observe and explore outcomes to discover what they want. Simply asking "what kind of tone do you want?" fails when users themselves do not know. We introduce DiscoverLLM, a novel and generalizable framework that trains LLMs to help users form and discover their intents. Central to our approach is a novel user simulator that models cognitive state with a hierarchy of intents that progressively concretize as the model surfaces relevant options -- where the degree of concretization serves as a reward signal that models can be trained to optimize. Resulting models learn to collaborate with users by adaptively diverging (i.e., explore options) when intents are unclear, and converging (i.e., refine and implement) when intents concretize. Across proposed interactive benchmarks in creative writing, technical writing, and SVG drawing, DiscoverLLM achieves over 10% higher task performance while reducing conversation length by up to 40%. In a user study with 75 human participants, DiscoverLLM improved conversation satisfaction and efficiency compared to baselines.

ClearFairy: Capturing Creative Workflows through Decision Structuring, In-Situ Questioning, and Rationale Inference

Sep 18, 2025

Abstract:Capturing professionals' decision-making in creative workflows is essential for reflection, collaboration, and knowledge sharing, yet existing methods often leave rationales incomplete and implicit decisions hidden. To address this, we present the CLEAR framework, which structures reasoning into cognitive decision steps: linked units of actions, artifacts, and self-explanations that make decisions traceable. Building on this framework, we introduce ClearFairy, a think-aloud AI assistant for UI design that detects weak explanations, asks lightweight clarifying questions, and infers missing rationales to ease the knowledge-sharing burden. In a study with twelve creative professionals, 85% of ClearFairy's inferred rationales were accepted, increasing strong explanations from 14% to over 83% of decision steps without adding cognitive demand. The captured steps also enhanced generative AI agents in Figma, yielding next-action predictions better aligned with professionals and producing more coherent design outcomes. For future research on human knowledge-grounded creative AI agents, we release a dataset of 417 captured decision steps.

Evaluations at Work: Measuring the Capabilities of GenAI in Use

May 15, 2025

Abstract:Current AI benchmarks miss the messy, multi-turn nature of human-AI collaboration. We present an evaluation framework that decomposes real-world tasks into interdependent subtasks, letting us track both LLM performance and users' strategies across a dialogue. Complementing this framework, we develop a suite of metrics, including a composite usage derived from semantic similarity, word overlap, and numerical matches; structural coherence; intra-turn diversity; and a novel measure of the "information frontier" reflecting the alignment between AI outputs and users' working knowledge. We demonstrate our methodology in a financial valuation task that mirrors real-world complexity. Our empirical findings reveal that while greater integration of LLM-generated content generally enhances output quality, its benefits are moderated by factors such as response incoherence, excessive subtask diversity, and the distance of provided information from users' existing knowledge. These results suggest that proactive dialogue strategies designed to inject novelty may inadvertently undermine task performance. Our work thus advances a more holistic evaluation of human-AI collaboration, offering both a robust methodological framework and actionable insights for developing more effective AI-augmented work processes.

VideoMix: Aggregating How-To Videos for Task-Oriented Learning

Mar 27, 2025

Abstract:Tutorial videos are a valuable resource for people looking to learn new tasks. People often learn these skills by viewing multiple tutorial videos to get an overall understanding of a task by looking at different approaches to achieve the task. However, navigating through multiple videos can be time-consuming and mentally demanding as these videos are scattered and not easy to skim. We propose VideoMix, a system that helps users gain a holistic understanding of a how-to task by aggregating information from multiple videos on the task. Insights from our formative study (N=12) reveal that learners value understanding potential outcomes, required materials, alternative methods, and important details shared by different videos. Powered by a Vision-Language Model pipeline, VideoMix extracts and organizes this information, presenting concise textual summaries alongside relevant video clips, enabling users to quickly digest and navigate the content. A comparative user study (N=12) demonstrated that VideoMix enabled participants to gain a more comprehensive understanding of tasks with greater efficiency than a baseline video interface, where videos are viewed independently. Our findings highlight the potential of a task-oriented, multi-video approach where videos are organized around a shared goal, offering an enhanced alternative to conventional video-based learning.

Iffy-Or-Not: Extending the Web to Support the Critical Evaluation of Fallacious Texts

Mar 18, 2025

Abstract:Social platforms have expanded opportunities for deliberation with the comments being used to inform one's opinion. However, using such information to form opinions is challenged by unsubstantiated or false content. To enhance the quality of opinion formation and potentially confer resistance to misinformation, we developed Iffy-Or-Not (ION), a browser extension that seeks to invoke critical thinking when reading texts. With three features guided by argumentation theory, ION highlights fallacious content, suggests diverse queries to probe them with, and offers deeper questions to consider and chat with others about. From a user study (N=18), we found that ION encourages users to be more attentive to the content, suggests queries that align with or are preferable to their own, and poses thought-provoking questions that expand their perspectives. However, some participants expressed aversion to ION due to misalignments with their information goals and thinking predispositions. Potential backfiring effects with ION are discussed.

An LLM-Integrated Framework for Completion, Management, and Tracing of STPA

Mar 15, 2025

Abstract:In many safety-critical engineering domains, hazard analysis techniques are an essential part of requirement elicitation. Of the methods proposed for this task, STPA (System-Theoretic Process Analysis) represents a relatively recent development in the field. The completion, management, and traceability of this hazard analysis technique present a time-consuming challenge to the requirements and safety engineers involved. In this paper, we introduce a free, open-source software framework to build STPA models with several automated workflows powered by large language models (LLMs). In past works, LLMs have been successfully integrated into a myriad of workflows across various fields. Here, we demonstrate that LLMs can be used to complete tasks associated with STPA with a high degree of accuracy, saving the time and effort of the human engineers involved. We experimentally validate our method on real-world STPA models built by requirement engineers and researchers. The source code of our software framework is available at the following link: https://github.com/blueskysolarracing/stpa.

Automatically Evaluating the Paper Reviewing Capability of Large Language Models

Feb 24, 2025

Abstract:Peer review is essential for scientific progress, but it faces challenges such as reviewer shortages and growing workloads. Although Large Language Models (LLMs) show potential for providing assistance, research has reported significant limitations in the reviews they generate. While the insights are valuable, conducting the analysis is challenging due to the considerable time and effort required, especially given the rapid pace of LLM developments. To address the challenge, we developed an automatic evaluation pipeline to assess the LLMs' paper review capability by comparing them with expert-generated reviews. By constructing a dataset consisting of 676 OpenReview papers, we examined the agreement between LLMs and experts in their strength and weakness identifications. The results showed that LLMs lack balanced perspectives, significantly overlook novelty assessment when criticizing, and produce poor acceptance decisions. Our automated pipeline enables a scalable evaluation of LLMs' paper review capability over time.

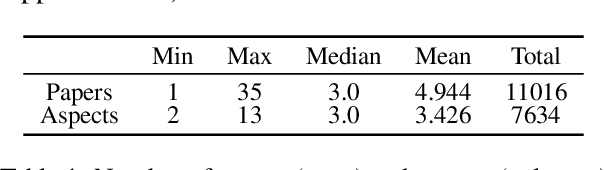

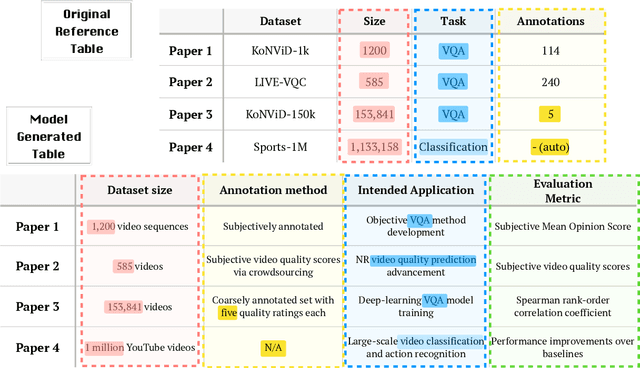

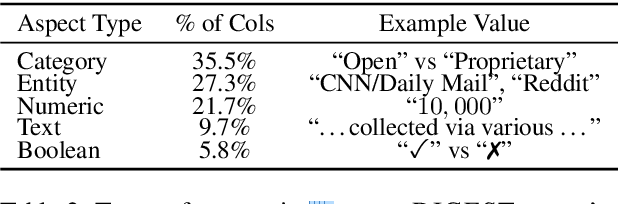

ArxivDIGESTables: Synthesizing Scientific Literature into Tables using Language Models

Oct 25, 2024

Abstract:When conducting literature reviews, scientists often create literature review tables - tables whose rows are publications and whose columns constitute a schema, a set of aspects used to compare and contrast the papers. Can we automatically generate these tables using language models (LMs)? In this work, we introduce a framework that leverages LMs to perform this task by decomposing it into separate schema and value generation steps. To enable experimentation, we address two main challenges: First, we overcome a lack of high-quality datasets to benchmark table generation by curating and releasing arxivDIGESTables, a new dataset of 2,228 literature review tables extracted from ArXiv papers that synthesize a total of 7,542 research papers. Second, to support scalable evaluation of model generations against human-authored reference tables, we develop DecontextEval, an automatic evaluation method that aligns elements of tables with the same underlying aspects despite differing surface forms. Given these tools, we evaluate LMs' abilities to reconstruct reference tables, finding this task benefits from additional context to ground the generation (e.g. table captions, in-text references). Finally, through a human evaluation study we find that even when LMs fail to fully reconstruct a reference table, their generated novel aspects can still be useful.

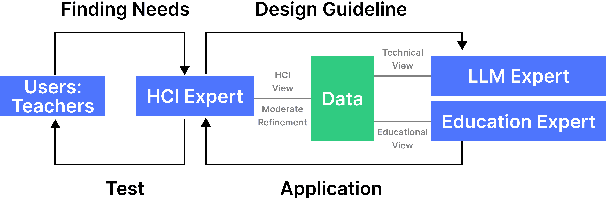

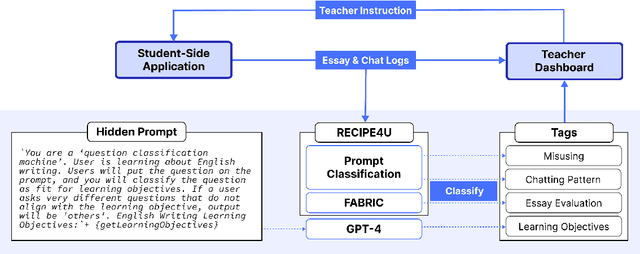

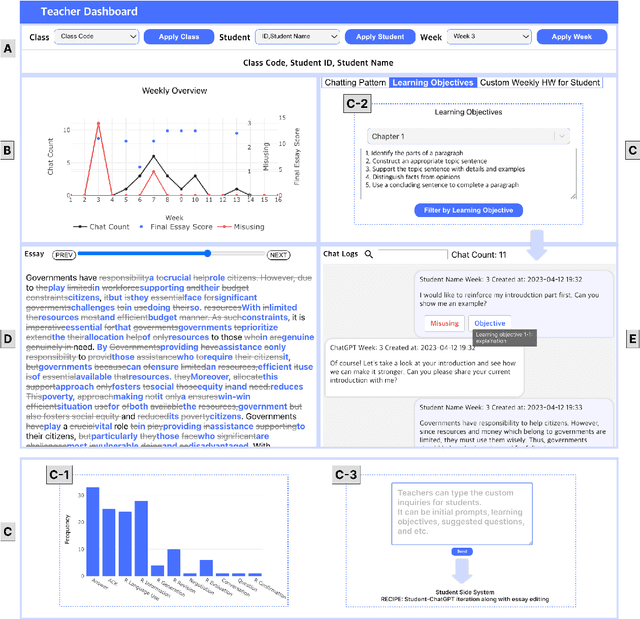

LLM-Driven Learning Analytics Dashboard for Teachers in EFL Writing Education

Oct 19, 2024

Abstract:This paper presents the development of a dashboard designed specifically for teachers in English as a Foreign Language (EFL) writing education. Leveraging LLMs, the dashboard facilitates the analysis of student interactions with an essay writing system, which integrates ChatGPT for real-time feedback. The dashboard aids teachers in monitoring student behavior, identifying noneducational interaction with ChatGPT, and aligning instructional strategies with learning objectives. By combining insights from NLP and Human-Computer Interaction (HCI), this study demonstrates how a human-centered approach can enhance the effectiveness of teacher dashboards, particularly in ChatGPT-integrated learning.

GPU-Accelerated Counterfactual Regret Minimization

Aug 27, 2024

Abstract:Counterfactual regret minimization (CFR) is a family of algorithms of no-regret learning dynamics capable of solving large-scale imperfect information games. There has been a notable lack of work on making CFR more computationally efficient. We propose implementing this algorithm as a series of dense and sparse matrix and vector operations, thereby making it highly parallelizable for a graphical processing unit. Our experiments show that our implementation performs up to about 352.5 times faster than OpenSpiel's Python implementation and up to about 22.2 times faster than OpenSpiel's C++ implementation, and the speedup becomes more pronounced as the size of the game being solved grows.
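To give a rough sense of what "matrix and vector operations" can mean here, the sketch below runs regret matching (the per-decision update at the core of CFR) in self-play on rock-paper-scissors, with each player's action values computed as a single matrix-vector product. This is an illustration under stated assumptions, not the paper's GPU implementation: the one-shot game, the helper names (`matvec`, `regret_matching`, `solve`), and the asymmetric starting regrets are all hypothetical choices made for the example, and plain Python lists stand in for dense GPU arrays.

```python
# Illustrative sketch only: regret matching on a one-shot matrix game.
# It is NOT the paper's GPU code; all names below are hypothetical.

# Row player's payoffs in rock-paper-scissors (zero-sum game).
PAYOFF = [
    [0.0, -1.0, 1.0],   # rock     vs rock, paper, scissors
    [1.0, 0.0, -1.0],   # paper
    [-1.0, 1.0, 0.0],   # scissors
]


def matvec(matrix, vector):
    """Dense matrix-vector product."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def dot(a, b):
    """Inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def regret_matching(regrets):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total <= 0.0:
        # No positive regret: fall back to the uniform strategy.
        return [1.0 / len(regrets)] * len(regrets)
    return [p / total for p in positive]


def solve(payoff, iterations=10000):
    """Self-play with regret matching; returns both average strategies."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    # Column player's payoff matrix is the negated transpose.
    col_payoff = [[-payoff[i][j] for i in range(n_rows)] for j in range(n_cols)]
    # Assumed asymmetric start so the dynamics are not trivially uniform.
    row_regrets = [1.0] + [0.0] * (n_rows - 1)
    col_regrets = [0.0] * n_cols
    row_sum = [0.0] * n_rows
    col_sum = [0.0] * n_cols
    for _ in range(iterations):
        row_strategy = regret_matching(row_regrets)
        col_strategy = regret_matching(col_regrets)
        # Value of every pure action against the opponent's current mix.
        row_values = matvec(payoff, col_strategy)
        col_values = matvec(col_payoff, row_strategy)
        row_expected = dot(row_strategy, row_values)
        col_expected = dot(col_strategy, col_values)
        # Accumulate regret: action value minus value of the strategy played.
        row_regrets = [r + v - row_expected for r, v in zip(row_regrets, row_values)]
        col_regrets = [r + v - col_expected for r, v in zip(col_regrets, col_values)]
        row_sum = [s + p for s, p in zip(row_sum, row_strategy)]
        col_sum = [s + p for s, p in zip(col_sum, col_strategy)]
    return ([s / iterations for s in row_sum], [s / iterations for s in col_sum])


if __name__ == "__main__":
    row_avg, col_avg = solve(PAYOFF)
    print("row average strategy:", row_avg)
    print("column average strategy:", col_avg)
```

In this toy setting the average strategies drift toward the uniform equilibrium of rock-paper-scissors. Because every step in the loop is an elementwise operation or a matrix-vector product, the same structure maps naturally onto array libraries, which is the general idea the abstract points at for much larger games.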
