Jinze Chen

Event-Based Eye Tracking. 2025 Event-based Vision Workshop

Apr 25, 2025

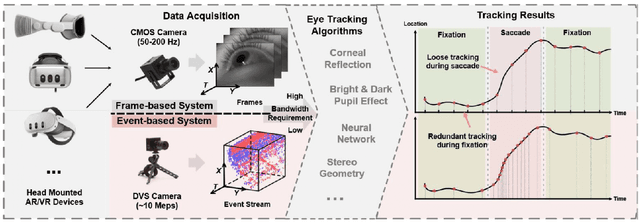

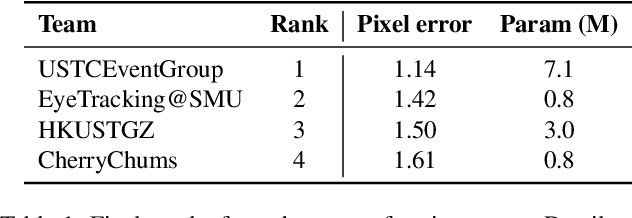

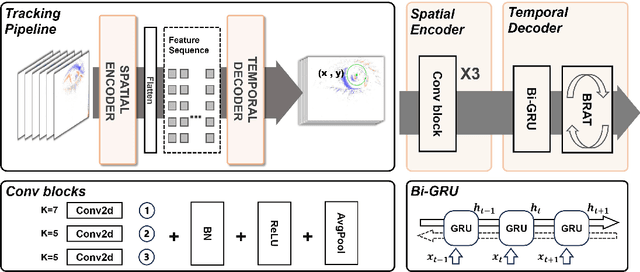

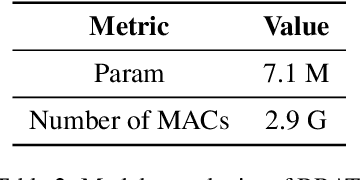

Abstract: This survey reviews the 2025 Event-Based Eye Tracking Challenge, organized as part of the 2025 CVPR Event-based Vision Workshop. The challenge focuses on predicting the pupil center from eye movements recorded with an event camera. We review and summarize the innovative methods of the top-ranked teams in the challenge to advance future event-based eye tracking research. For each method, accuracy, model size, and number of operations are reported. In this survey, we also discuss event-based eye tracking from the perspective of hardware design.

Event Signal Filtering via Probability Flux Estimation

Apr 10, 2025

Abstract: Events offer a novel paradigm for capturing scene dynamics via asynchronous sensing, but their inherent randomness often leads to degraded signal quality. Event signal filtering is thus essential for enhancing fidelity by reducing this internal randomness and ensuring consistent outputs across diverse acquisition conditions. Unlike traditional time series that rely on fixed temporal sampling to capture steady-state behaviors, events encode transient dynamics through polarity and event intervals, making signal modeling significantly more complex. To address this, the theoretical foundation of event generation is revisited through the lens of diffusion processes. The state and process information within events is modeled as continuous probability flux at threshold boundaries of the underlying irradiance diffusion. Building on this insight, a generative, online filtering framework called Event Density Flow Filter (EDFilter) is introduced. EDFilter estimates event correlation by reconstructing the continuous probability flux from discrete events using nonparametric kernel smoothing, and then resamples filtered events from this flux. To optimize fidelity over time, spatial and temporal kernels are employed in a time-varying optimization framework. A fast recursive solver with O(1) complexity is proposed, leveraging state-space models and lookup tables for efficient likelihood computation. Furthermore, a new real-world benchmark Rotary Event Dataset (RED) is released, offering microsecond-level ground truth irradiance for full-reference event filtering evaluation. Extensive experiments validate EDFilter's performance across tasks like event filtering, super-resolution, and direct event-based blob tracking. Significant gains in downstream applications such as SLAM and video reconstruction underscore its robustness and effectiveness.
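The idea of smoothing discrete events with a kernel and updating the estimate recursively at constant cost per event can be illustrated with a small sketch. This is not EDFilter itself: the causal exponential kernel, the class name `ExpKernelRate`, the helper `direct_rate`, and the time constant `tau` are all assumptions chosen for illustration, and the abstract does not specify the kernels or the solver in this detail.

```python
import math


class ExpKernelRate:
    """Causal exponential-kernel estimate of a single pixel's event rate.

    Hypothetical illustration: each event contributes exp(-(t - s) / tau) / tau
    at time t >= s. Because the kernel is exponential, the sum over all past
    events can be carried in one state variable and updated in O(1) per event.
    """

    def __init__(self, tau):
        self.tau = tau        # kernel time constant in seconds (assumed value)
        self.rate = 0.0       # current smoothed rate estimate
        self.t_last = None    # timestamp of the previous event

    def update(self, t):
        # Decay the accumulated state to the new timestamp ...
        if self.t_last is not None:
            self.rate *= math.exp(-(t - self.t_last) / self.tau)
        # ... then add the contribution of the new event.
        self.rate += 1.0 / self.tau
        self.t_last = t
        return self.rate


def direct_rate(timestamps, t, tau):
    """Reference O(n) kernel sum, used only to check the recursion."""
    return sum(math.exp(-(t - s) / tau) / tau for s in timestamps if s <= t)


timestamps = [0.0, 0.01, 0.015, 0.05]
estimator = ExpKernelRate(tau=0.02)
for t in timestamps:
    rate = estimator.update(t)
print(rate, direct_rate(timestamps, timestamps[-1], 0.02))
```

The two printed values agree up to floating-point error, which is the point of the recursion: the full kernel sum never has to be recomputed.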

Event-Based Eye Tracking. AIS 2024 Challenge Survey

Apr 17, 2024

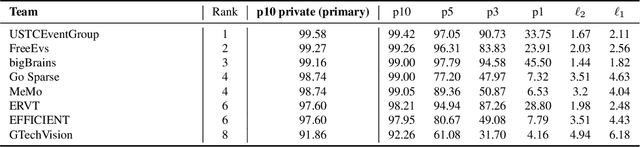

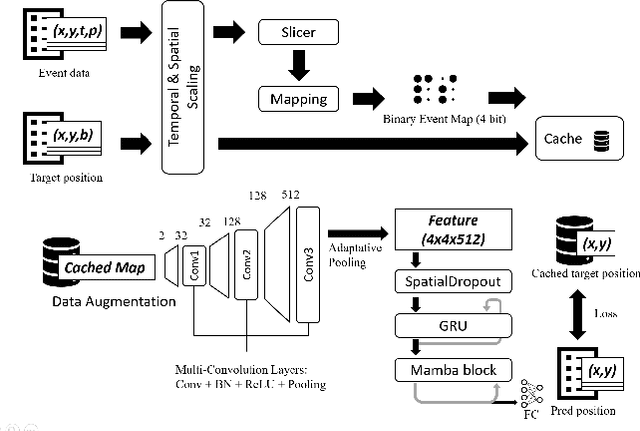

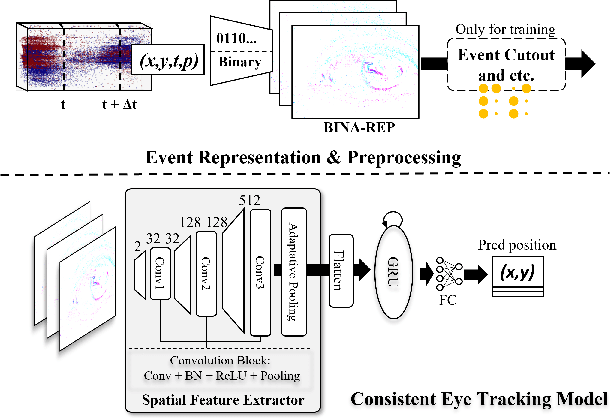

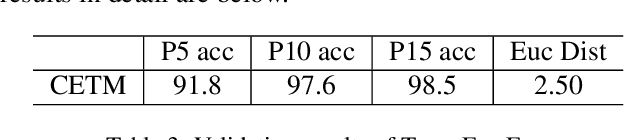

Abstract: This survey reviews the AIS 2024 Event-Based Eye Tracking (EET) Challenge. The task of the challenge is to process eye movement recorded with event cameras and predict the pupil center of the eye. The challenge emphasizes efficient eye tracking with event cameras to achieve a good trade-off between task accuracy and efficiency. During the challenge period, 38 participants registered for the Kaggle competition, and 8 teams submitted a challenge factsheet. The novel and diverse methods from the submitted factsheets are reviewed and analyzed in this survey to advance future event-based eye tracking research.

Event-based Asynchronous HDR Imaging by Temporal Incident Light Modulation

Mar 14, 2024

Abstract: Dynamic Range (DR) is a pivotal characteristic of imaging systems. Current frame-based cameras struggle to achieve high dynamic range imaging due to the conflict between globally uniform exposure and spatially variant scene illumination. In this paper, we propose AsynHDR, a Pixel-Asynchronous HDR imaging system, based on key insights into the challenges in HDR imaging and the unique event-generating mechanism of Dynamic Vision Sensors (DVS). Our proposed AsynHDR system integrates the DVS with a set of LCD panels. The LCD panels modulate the irradiance incident upon the DVS by altering their transparency, thereby triggering pixel-independent event streams. The HDR image is subsequently decoded from the event streams through our temporal-weighted algorithm. Experiments on a standard test platform and in several challenging scenes have verified the feasibility of the system for the HDR imaging task.

E-MLB: Multilevel Benchmark for Event-Based Camera Denoising

Mar 21, 2023

Abstract: Event cameras, such as dynamic vision sensors (DVS), are biologically inspired vision sensors that offer advantages over conventional cameras in high dynamic range, low latency, and low power consumption, showing great application potential in many fields. Event cameras are more sensitive to junction leakage current and photocurrent because they output differential signals, losing the smoothing effect of the integral imaging process in RGB cameras. The logarithmic conversion further amplifies noise, especially in low-contrast conditions. Recently, researchers have proposed a series of datasets and evaluation metrics, but limitations remain: 1) the existing datasets are small in scale and insufficient in noise diversity, and so cannot reflect the authentic working environments of event cameras; and 2) the existing denoising evaluation metrics are mostly reference-based, relying on APS information or manual annotation. To address these issues, we construct the first large-scale event denoising dataset (multilevel benchmark for event denoising, E-MLB), which consists of 100 scenes, each with four noise levels, making it 12 times larger than the largest existing denoising dataset. We also propose the first nonreference event denoising metric, the event structural ratio (ESR), which measures the structural intensity of given events. ESR is inspired by the contrast metric, but is independent of the number of events and projection direction. Based on the proposed benchmark and ESR, we evaluate the most representative denoising algorithms, including classic and SOTA methods, and provide denoising baselines under various scenes and noise levels. The corresponding results and code are available at https://github.com/KugaMaxx/cuke-emlb.

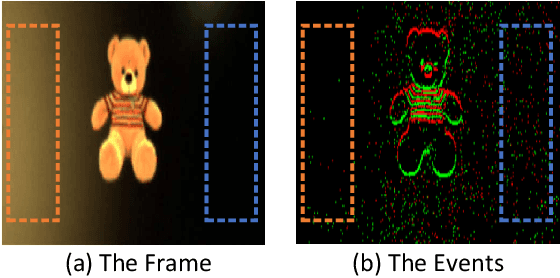

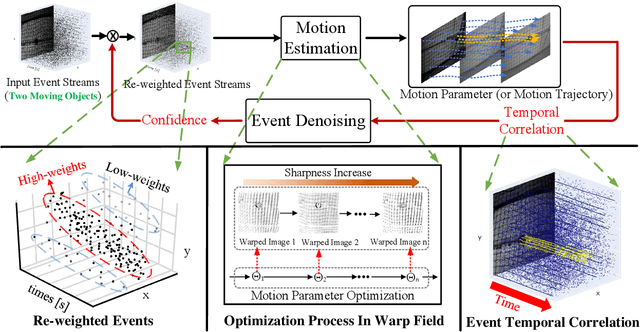

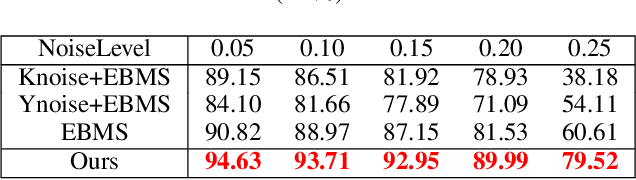

ProgressiveMotionSeg: Mutually Reinforced Framework for Event-Based Motion Segmentation

Mar 22, 2022

Abstract: Dynamic Vision Sensors (DVS) can asynchronously output events reflecting the apparent motion of objects with microsecond resolution, and show great application potential in monitoring and other fields. However, the output event stream of existing DVS inevitably contains background activity noise (BA noise) due to dark current and junction leakage current, which affects the temporal correlation of objects and degrades motion estimation performance. In particular, existing filter-based denoising methods cannot be directly applied to suppress the noise in the event stream, since there is no spatial correlation. To address this issue, this paper presents a novel progressive framework, in which a Motion Estimation (ME) module and an Event Denoising (ED) module are jointly optimized in a mutually reinforced manner. Specifically, based on the maximum sharpness criterion, the ME module divides the input events into several segments by adaptive clustering in a motion-compensating warp field, and captures the temporal correlation of the event stream according to the clustered motion parameters. Taking temporal correlation as guidance, the ED module calculates the confidence that each event is a real activity event, and transmits it to the ME module to update the energy function of motion segmentation for noise suppression. The two steps are iteratively updated until stable motion segmentation results are obtained. Extensive experimental results on both synthetic and real datasets demonstrate the superiority of the proposed approach over State-Of-The-Art (SOTA) methods.
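The maximum sharpness criterion mentioned above can be illustrated with a toy example for a single motion: events are warped back to a reference time under a candidate velocity, accumulated into an image, and the candidate giving the sharpest (highest-variance) image is kept. This is only a sketch under strong assumptions and not the paper's ME module: it uses one global 2D velocity, a brute-force candidate search instead of adaptive clustering, and no denoising confidence. The function names `warped_variance` and `best_velocity` are hypothetical.

```python
def warped_variance(events, velocity, width, height):
    """Variance of the image of warped events for one candidate velocity.

    events: iterable of (x, y, t) tuples; velocity: (vx, vy) in pixels/second.
    """
    vx, vy = velocity
    image = [[0] * width for _ in range(height)]
    for x, y, t in events:
        # Warp each event back to the reference time t = 0.
        xw = round(x - vx * t)
        yw = round(y - vy * t)
        if 0 <= xw < width and 0 <= yw < height:
            image[yw][xw] += 1
    pixels = [count for row in image for count in row]
    mean = sum(pixels) / len(pixels)
    return sum((count - mean) ** 2 for count in pixels) / len(pixels)


def best_velocity(events, candidates, width, height):
    """Pick the candidate velocity that maximizes the sharpness measure."""
    return max(candidates, key=lambda v: warped_variance(events, v, width, height))


# Synthetic events from a point moving at 10 px/s along x, starting at (5, 8).
events = [(5 + k, 8, k / 10) for k in range(10)]
candidates = [(0, 0), (5, 0), (10, 0), (15, 0)]
print(best_velocity(events, candidates, width=20, height=16))
```

With the correct velocity all warped events land on one pixel, so the accumulated image is maximally concentrated; wrong velocities smear the events along the motion direction and lower the variance.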
