ProgressiveMotionSeg: Mutually Reinforced Framework for Event-Based Motion Segmentation


Mar 22, 2022

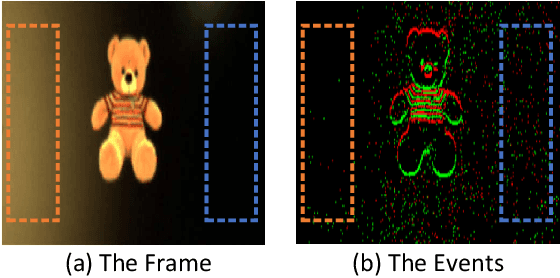

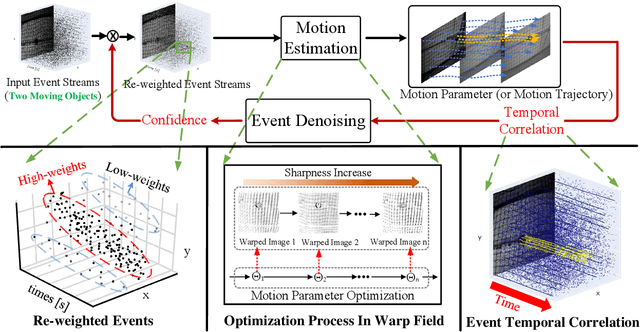

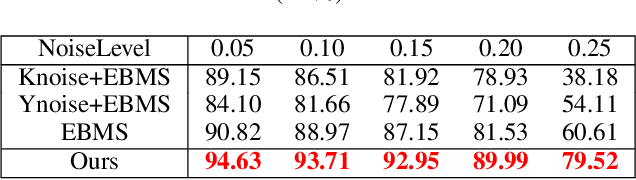

Dynamic Vision Sensors (DVS) asynchronously output events that reflect the apparent motion of objects with microsecond resolution, and show great application potential in monitoring and other fields. However, the output event stream of existing DVS inevitably contains background activity noise (BA noise) caused by dark current and junction leakage current. This noise disturbs the temporal correlation of objects and degrades motion estimation performance. In particular, existing filter-based denoising methods cannot be applied directly to suppress the noise in the event stream, since there is no spatial correlation to exploit. To address this issue, this paper presents a novel progressive framework in which a Motion Estimation (ME) module and an Event Denoising (ED) module are jointly optimized in a mutually reinforced manner. Specifically, based on the maximum sharpness criterion, the ME module divides the input events into several segments by adaptive clustering in a motion-compensating warp field, and captures the temporal correlation of the event stream according to the clustered motion parameters. Taking this temporal correlation as guidance, the ED module calculates the confidence that each event is a real activity event and passes it to the ME module, which uses it to update the energy function of motion segmentation for noise suppression. The two steps are iterated until the motion segmentation results are stable. Extensive experimental results on both synthetic and real datasets demonstrate the superiority of the proposed approach over state-of-the-art (SOTA) methods.
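To make the maximum sharpness criterion more concrete, the following is a minimal sketch rather than the authors' implementation. It assumes a single cluster with a constant 2D velocity, warps each event back to a reference time, accumulates an image of warped events, and scores it by variance. The function names, the variance objective, and the optional per-event `weights` array (standing in for the confidence produced by the ED module) are all illustrative assumptions.

```python
import numpy as np

def warp_events(xy, t, velocity, t_ref=0.0):
    """Warp event coordinates to time t_ref along a constant 2D velocity (assumed motion model)."""
    return xy - (t - t_ref)[:, None] * np.asarray(velocity, dtype=float)

def sharpness(xy, t, velocity, shape, weights=None):
    """Variance of the image of warped events; a higher value means a sharper image."""
    warped = np.rint(warp_events(xy, t, velocity)).astype(int)
    height, width = shape
    # Keep only events that land inside the image after warping.
    inside = (
        (warped[:, 0] >= 0) & (warped[:, 0] < width)
        & (warped[:, 1] >= 0) & (warped[:, 1] < height)
    )
    if weights is None:
        # Hypothetical default: every event is fully trusted.
        weights = np.ones(len(t))
    image = np.zeros(shape)
    # np.add.at accumulates correctly when several events hit the same pixel.
    np.add.at(image, (warped[inside, 1], warped[inside, 0]), weights[inside])
    return image.var()

def best_velocity(xy, t, candidates, shape, weights=None):
    """Pick the candidate velocity whose warped image is sharpest (brute-force search)."""
    scores = [sharpness(xy, t, v, shape, weights) for v in candidates]
    return candidates[int(np.argmax(scores))]
```

Passing low weights for events that are likely noise reduces their contribution to the accumulated image, which is one simple way the denoising confidence could feed back into the motion objective. The brute-force candidate search is only for illustration; it is not a claim about how the paper optimizes its energy function.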
