Jingjing Yin

Takin: A Cohort of Superior Quality Zero-shot Speech Generation Models

Sep 18, 2024

Abstract: With the advent of the big data and large language model era, zero-shot personalized rapid customization has emerged as a significant trend. In this report, we introduce Takin AudioLLM, a series of techniques and models, mainly including Takin TTS, Takin VC, and Takin Morphing, specifically designed for audiobook production. These models are capable of zero-shot speech production, generating high-quality speech that is nearly indistinguishable from real human speech and allowing individuals to customize the speech content according to their own needs. Specifically, we first introduce Takin TTS, a neural codec language model that builds upon an enhanced neural speech codec and a multi-task training framework and is capable of generating high-fidelity natural speech in a zero-shot way. For Takin VC, we adopt an effective joint content and timbre modeling approach to improve speaker similarity, together with a conditional flow matching based decoder to further enhance naturalness and expressiveness. Last, we propose the Takin Morphing system with highly decoupled and advanced timbre and prosody modeling approaches, which enables individuals to customize speech production with their preferred timbre and prosody in a precise and controllable manner. Extensive experiments validate the effectiveness and robustness of our Takin AudioLLM series models. For detailed demos, please refer to https://takinaudiollm.github.io.

PP-MeT: a Real-world Personalized Prompt based Meeting Transcription System

Sep 28, 2023

Abstract: Speaker-attributed automatic speech recognition (SA-ASR) improves the accuracy and applicability of multi-speaker ASR systems in real-world scenarios by assigning speaker labels to transcribed texts. However, SA-ASR poses unique challenges due to factors such as speaker overlap, speaker variability, background noise, and reverberation. In this study, we propose the PP-MeT system, a real-world personalized prompt based meeting transcription system, which consists of a clustering system, target-speaker voice activity detection (TS-VAD), and target-speaker ASR (TS-ASR). Specifically, we use the target-speaker embedding as a prompt in the TS-VAD and TS-ASR modules of our proposed system. In contrast with previous systems, we fully leverage pre-trained models for system initialization, which gives our approach better generalizability and precision. Experiments on the M2MeT2.0 Challenge dataset show that our system achieves a cp-CER of 11.27% on the test set, ranking first in both fixed and open training conditions.
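
The cp-CER figure quoted above is commonly read as a concatenated minimum-permutation character error rate: each speaker's utterances are concatenated, and the error is taken under the speaker assignment that minimizes it. The following is a minimal sketch of that idea, not the challenge's official scoring tool. The function names `edit_distance` and `cp_cer` are hypothetical, and the sketch assumes the reference and hypothesis contain the same number of speakers.

```python
from itertools import permutations


def edit_distance(ref, hyp):
    """Levenshtein distance between two character sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,              # delete a reference character
                curr[j - 1] + 1,          # insert a hypothesis character
                prev[j - 1] + (r != h),   # substitute, or match at no cost
            ))
        prev = curr
    return prev[-1]


def cp_cer(refs, hyps):
    """refs, hyps: per-speaker concatenated transcripts (assumed equal count)."""
    total_ref_chars = sum(len(r) for r in refs)
    # Try every assignment of hypothesis speakers to reference speakers
    # and keep the one with the fewest character errors.
    best_errors = min(
        sum(edit_distance(r, h) for r, h in zip(refs, perm))
        for perm in permutations(hyps)
    )
    return best_errors / total_ref_chars


refs = ["hello", "world"]   # toy per-speaker references
hyps = ["word", "hello"]    # system output, speakers in a different order
print(cp_cer(refs, hyps))
```

Enumerating all permutations is only practical for a handful of speakers; an assignment solver would be the usual replacement for larger meetings.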

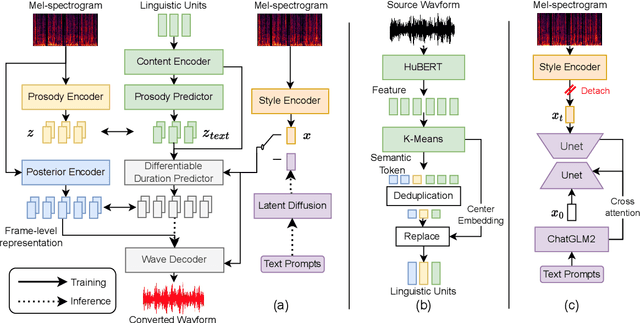

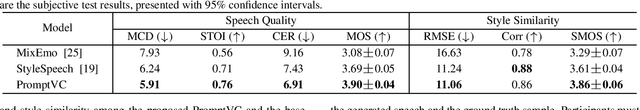

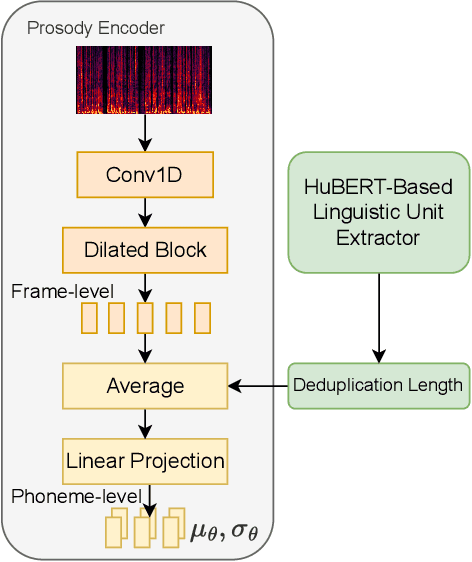

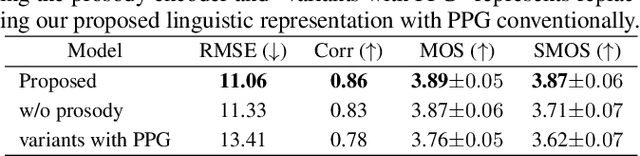

PromptVC: Flexible Stylistic Voice Conversion in Latent Space Driven by Natural Language Prompts

Sep 17, 2023

Abstract: Style voice conversion aims to transform the style of source speech to a desired style according to real-world application demands. However, current style voice conversion approaches rely on pre-defined labels or reference speech to control the conversion process, which limits style diversity and makes the style representation less intuitive and interpretable. In this study, we propose PromptVC, a novel style voice conversion approach that employs a latent diffusion model to generate a style vector driven by natural language prompts. Specifically, the style vector is extracted by a style encoder during training, and the latent diffusion model is then trained independently to sample the style vector from noise, conditioned on natural language prompts. To improve style expressiveness, we leverage HuBERT to extract discrete tokens and replace them with the K-Means center embeddings to serve as the linguistic content, which minimizes residual style information. Additionally, we deduplicate repeated discrete tokens and employ a differentiable duration predictor to re-predict the duration of each token, which adapts the duration of the same linguistic content to different styles. The subjective and objective evaluation results demonstrate the effectiveness of our proposed system.
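
The token deduplication step can be illustrated with a short sketch. It assumes that "deduplicate" means collapsing consecutive runs of the same discrete token, which the abstract does not state explicitly; the function name `deduplicate` and the token values are hypothetical. The run lengths are kept because they are the kind of per-token durations a duration predictor could be trained to re-predict.

```python
from itertools import groupby


def deduplicate(tokens):
    """Collapse consecutive repeats; return unique units and their run lengths."""
    units, durations = [], []
    for token, run in groupby(tokens):
        units.append(token)
        durations.append(len(list(run)))  # number of frames the token spanned
    return units, durations


# Hypothetical frame-level token IDs (e.g. K-Means cluster indices).
frame_tokens = [12, 12, 12, 7, 7, 31, 12]
units, durations = deduplicate(frame_tokens)
print(units)      # [12, 7, 31, 12]
print(durations)  # [3, 2, 1, 1]
```

Note that the final `12` is kept as a separate unit: only adjacent repeats are merged, so the token order of the utterance is preserved.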

HYBRIDFORMER: improving SqueezeFormer with hybrid attention and NSR mechanism

Mar 15, 2023

Abstract: SqueezeFormer has recently shown impressive performance in automatic speech recognition (ASR). However, its inference speed suffers from the quadratic complexity of softmax-attention (SA). In addition, limited by the large convolution kernel size, the local modeling ability of SqueezeFormer is insufficient. In this paper, we propose a novel method, HybridFormer, to improve SqueezeFormer in a fast and efficient way. Specifically, we first incorporate linear attention (LA) and propose a hybrid LASA paradigm to increase the model's inference speed. Second, a hybrid neural architecture search (NAS) guided structural re-parameterization (SRep) mechanism, termed NSR, is proposed to enhance the ability of the model to extract local interactions. Extensive experiments conducted on the LibriSpeech dataset demonstrate that our proposed HybridFormer can achieve a 9.1% relative word error rate (WER) reduction over SqueezeFormer on the test-other dataset. Furthermore, when the input speech is 30 s long, HybridFormer can improve the model's inference speed by up to 18%. Our source code is available online.
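
Structural re-parameterization in general relies on the linearity of convolution: parallel branches used during training can be folded into one kernel for inference. The sketch below shows that generic idea for a 1-D convolution with a size-3 branch and a size-1 branch. It is not the NSR mechanism itself, whose branch layout is not described in the abstract, and the helper names `conv1d_same` and `merge_kernels` are hypothetical.

```python
def conv1d_same(x, kernel):
    """1-D cross-correlation with zero padding so the output length equals len(x)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [
        sum(w * padded[i + j] for j, w in enumerate(kernel))
        for i in range(len(x))
    ]


def merge_kernels(k_large, k_small):
    """Fold a smaller odd-sized kernel into the center of a larger one."""
    offset = (len(k_large) - len(k_small)) // 2
    merged = list(k_large)
    for j, w in enumerate(k_small):
        merged[offset + j] += w
    return merged


x = [1.0, 2.0, -1.0, 0.5]
k3 = [0.5, -1.0, 0.25]   # size-3 branch
k1 = [2.0]               # size-1 branch

# Training-time view: two branches whose outputs are summed.
two_branch = [a + b for a, b in zip(conv1d_same(x, k3), conv1d_same(x, k1))]
# Inference-time view: one convolution with the merged kernel.
single = conv1d_same(x, merge_kernels(k3, k1))
print(two_branch)
print(single)
```

Both lists match, which is why the extra branch adds no cost at inference time once the kernels are merged.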

LMEC: Learnable Multiplicative Absolute Position Embedding Based Conformer for Speech Recognition

Dec 05, 2022

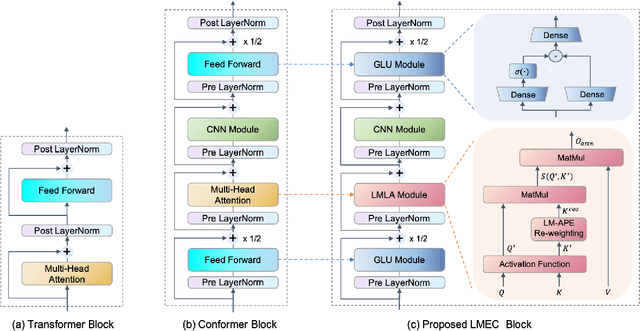

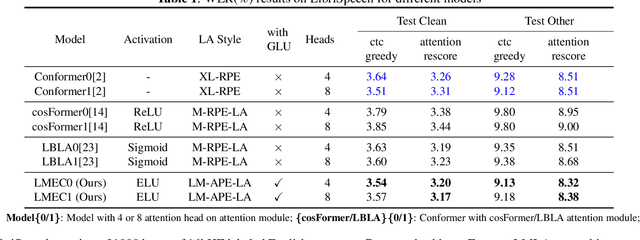

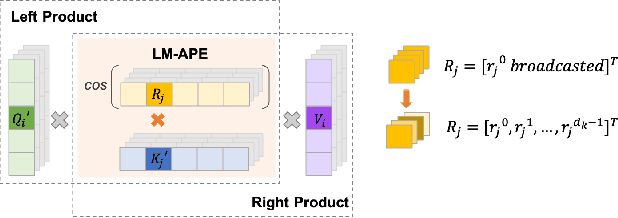

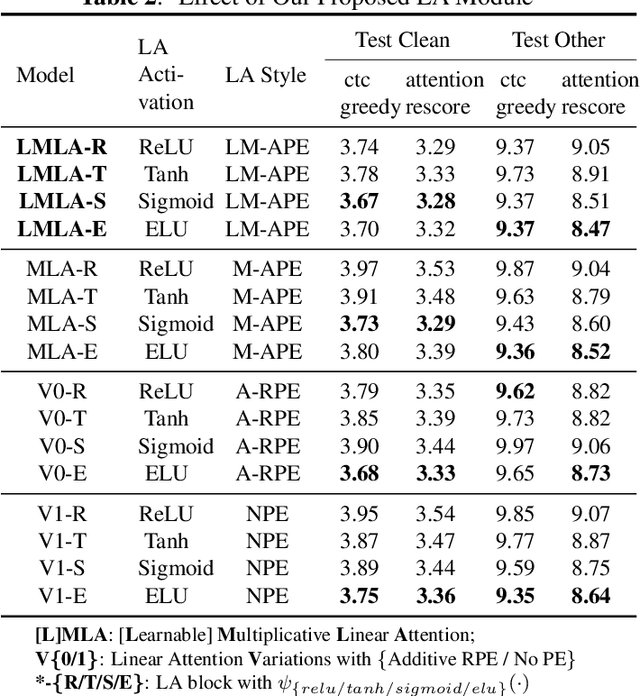

Abstract: This paper proposes a Learnable Multiplicative absolute position Embedding based Conformer (LMEC). It contains a kernelized linear attention (LA) module called LMLA, which reduces the time cost of long-sequence speech recognition, as well as an alternative to the FFN structure. First, the ELU function is adopted as the kernel function of our proposed LA module. Second, we propose a novel Learnable Multiplicative Absolute Position Embedding (LM-APE) based re-weighting mechanism that can reduce the well-known quadratic time and space complexity of softmax self-attention. Third, we use Gated Linear Units (GLU) to substitute the Feed Forward Network (FFN) for better performance. Extensive experiments have been conducted on the public LibriSpeech datasets. Compared to the Conformer model with cosFormer style linear attention, our proposed method can achieve up to 0.63% word-error-rate improvement on test-other and improve the inference speed by up to 13% (left product) and 33% (right product) on the LA module.
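
Kernelized linear attention can be sketched in a few lines to show where the two multiplication orders come from. This is a generic illustration under stated assumptions, not the LMLA module: the feature map `elu(x) + 1` is a common choice that keeps scores positive (the abstract only says ELU is used), the LM-APE re-weighting is omitted, and reading "left product" as `(Q K^T) V` and "right product" as `Q (K^T V)` is an interpretation of the abstract's terms. All function names are hypothetical.

```python
import math


def elu_plus_one(x):
    """elu(x) + 1, which is positive for every real x."""
    return x + 1.0 if x > 0 else math.exp(x)


def feature_map(m):
    return [[elu_plus_one(v) for v in row] for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def attention_left(q, k, v):
    """(phi(Q) phi(K)^T) V: builds an n x n score matrix, quadratic in length."""
    fq, fk = feature_map(q), feature_map(k)
    scores = matmul(fq, transpose(fk))
    out = matmul(scores, v)
    return [[o / sum(s_row) for o in o_row] for o_row, s_row in zip(out, scores)]


def attention_right(q, k, v):
    """phi(Q) (phi(K)^T V): only d x d intermediates, linear in length."""
    fq, fk = feature_map(q), feature_map(k)
    kv = matmul(transpose(fk), v)
    k_sum = [sum(col) for col in zip(*fk)]  # column sums of phi(K)
    out = matmul(fq, kv)
    return [
        [o / sum(a * b for a, b in zip(q_row, k_sum)) for o in o_row]
        for o_row, q_row in zip(out, fq)
    ]


q = [[0.2, -0.5], [1.0, 0.3], [-0.7, 0.8]]
k = [[0.1, 0.4], [-0.3, 0.9], [0.6, -0.2]]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(attention_left(q, k, v))
print(attention_right(q, k, v))
```

The two functions return the same values up to floating-point rounding; they differ only in which intermediate matrix is materialized, which is what drives the speed difference on long sequences.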

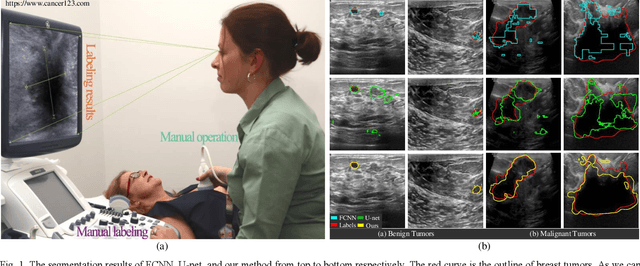

ESKNet-An enhanced adaptive selection kernel convolution for breast tumors segmentation

Nov 05, 2022

Abstract: Breast cancer is one of the common cancers that endanger the health of women globally. Accurate target lesion segmentation is essential for early clinical intervention and postoperative follow-up. Recently, many convolutional neural networks (CNNs) have been proposed to segment breast tumors from ultrasound images. However, the complex ultrasound pattern and the variable tumor shape and size pose challenges to the accurate segmentation of breast lesions. Motivated by the selective kernel convolution, we introduce an enhanced selective kernel convolution for breast tumor segmentation, which integrates multiple feature map region representations and adaptively recalibrates the weights of these feature map regions along the channel and spatial dimensions. This region recalibration strategy enables the network to focus more on high-contributing region features and mitigate the perturbation of less useful regions. Finally, the enhanced selective kernel convolution is integrated into U-net with deep supervision constraints to adaptively capture a robust representation of breast tumors. Extensive experiments against twelve state-of-the-art deep learning segmentation methods on three public breast ultrasound datasets demonstrate that our method achieves more competitive segmentation performance on breast ultrasound images.
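
The selective kernel idea that motivates this work can be sketched as a softmax-weighted fusion of branches computed with different kernel sizes. The sketch below is heavily simplified and is not the enhanced convolution proposed in the paper: it uses each branch's globally averaged channel response directly as the selection logit (an assumption made here for brevity, where real selective kernel layers learn this mapping), and it covers only channel-wise selection, not spatial recalibration. The names `softmax` and `select_branches` are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def select_branches(branches):
    """branches: list of feature maps, each shaped [channels][positions]."""
    n_channels = len(branches[0])
    n_positions = len(branches[0][0])
    fused = []
    for c in range(n_channels):
        # Global average pooling gives one descriptor per branch for this channel.
        logits = [sum(b[c]) / n_positions for b in branches]
        weights = softmax(logits)     # branch weights sum to 1 per channel
        fused.append([
            sum(w * b[c][p] for w, b in zip(weights, branches))
            for p in range(n_positions)
        ])
    return fused


# Two hypothetical branches (e.g. small and large kernel), 2 channels x 3 positions.
small_kernel = [[1.0, 2.0, 3.0], [0.0, 0.0, 0.0]]
large_kernel = [[3.0, 2.0, 1.0], [1.0, 1.0, 1.0]]
print(select_branches([small_kernel, large_kernel]))
```

Because the weights form a convex combination, every fused value lies between the corresponding values of the two branches.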
