Jianxun Zhang

PSFHS Challenge Report: Pubic Symphysis and Fetal Head Segmentation from Intrapartum Ultrasound Images

Sep 17, 2024

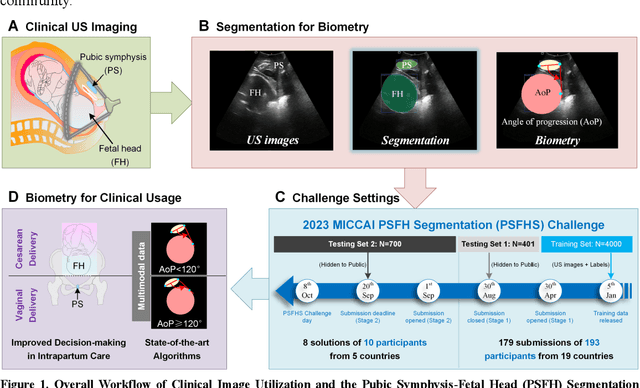

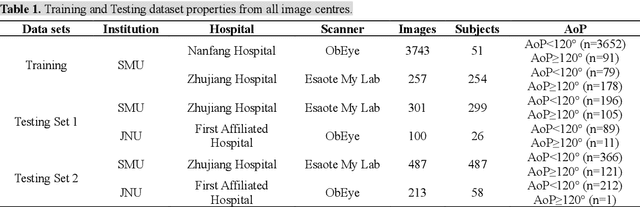

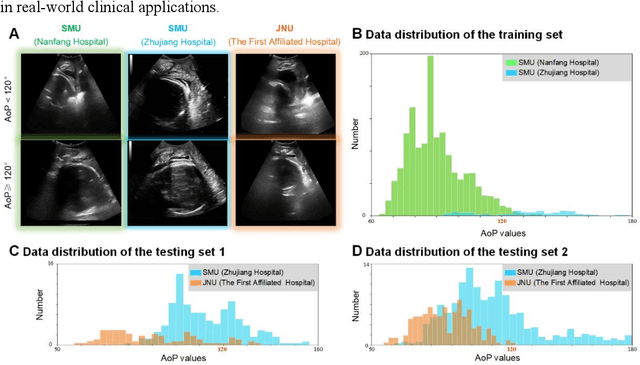

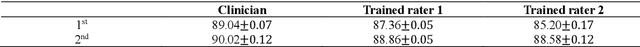

Abstract: Segmentation of fetal and maternal structures, particularly in intrapartum ultrasound imaging, which the International Society of Ultrasound in Obstetrics and Gynecology (ISUOG) advocates for monitoring labor progression, is a crucial first step for quantitative diagnosis and clinical decision-making. This requires specialized analysis by obstetrics professionals, a task that i) is highly time- and cost-consuming and ii) often yields inconsistent results. The utility of automatic segmentation algorithms for biometry has been proven, though existing results remain suboptimal. To push forward advancements in this area, the Grand Challenge on Pubic Symphysis-Fetal Head Segmentation (PSFHS) was held alongside the 26th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2023). This challenge aimed to enhance the development of automatic segmentation algorithms at an international scale, providing the largest dataset to date with 5,101 intrapartum ultrasound images collected from two ultrasound machines across three hospitals from two institutions. The scientific community's enthusiastic participation led to the selection of the top 8 out of 179 entries from 193 registrants in the initial phase to proceed to the competition's second stage. These algorithms have elevated the state-of-the-art in automatic PSFHS from intrapartum ultrasound images. A thorough analysis of the results pinpointed ongoing challenges in the field and outlined recommendations for future work. The top solutions and the complete dataset remain publicly available, fostering further advancements in automatic segmentation and biometry for intrapartum ultrasound imaging.

BAAF: A Benchmark Attention Adaptive Framework for Medical Ultrasound Image Segmentation Tasks

Oct 02, 2023

Abstract: AI-based assisted diagnosis programs have been widely investigated on medical ultrasound images. The complex scenario of ultrasound images, in which the coupled interference of internal and external factors is severe, makes it uniquely challenging to localize the object region automatically and precisely. In this study, we propose a more general and robust Benchmark Attention Adaptive Framework (BAAF) to help doctors segment or diagnose lesions and tissues in ultrasound images more quickly and accurately. Different from existing attention schemes, the BAAF consists of a parallel hybrid attention module (PHAM) and an adaptive calibration mechanism (ACM). Specifically, BAAF first coarsely calibrates the input features from the channel and spatial dimensions, and then adaptively selects more robust lesion or tissue characterizations from the coarse-calibrated feature maps. The design of BAAF further optimizes the "what" and "where" focus and selection problems in CNNs and seeks to improve the segmentation accuracy of lesions or tissues in medical ultrasound images. The method is evaluated on four medical ultrasound segmentation tasks, and the experimental results demonstrate a remarkable performance improvement over existing state-of-the-art methods. In addition, the comparison with existing attention mechanisms also demonstrates the superiority of BAAF. This work opens the possibility of automated medical ultrasound assisted diagnosis and reduces reliance on human accuracy and precision.
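As a rough illustration of what "coarsely calibrating features from the channel and spatial dimensions" can look like, here is a minimal NumPy sketch. It is not the BAAF implementation: the function names (`channel_gate`, `spatial_gate`, `parallel_calibrate`) are hypothetical, the gates are parameter-free (plain average pooling followed by a sigmoid) where a real module would use learned layers, and summing the two branches is an assumption made for simplicity.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_gate(feat):
    # feat has shape (C, H, W). Average each channel over space,
    # then squash to (0, 1): one weight per channel ("what" to emphasize).
    pooled = feat.mean(axis=(1, 2))            # (C,)
    return sigmoid(pooled)[:, None, None]      # (C, 1, 1)

def spatial_gate(feat):
    # Average over channels, then squash to (0, 1):
    # one weight per pixel ("where" to emphasize).
    pooled = feat.mean(axis=0)                 # (H, W)
    return sigmoid(pooled)[None, :, :]         # (1, H, W)

def parallel_calibrate(feat):
    # Hypothetical parallel layout: both gates see the same input,
    # and the two reweighted copies are summed.
    return feat * channel_gate(feat) + feat * spatial_gate(feat)

feat = np.ones((3, 4, 4))
out = parallel_calibrate(feat)
print(out.shape)
```

The two gates run on the same input rather than one after the other, which is the sense of "parallel" assumed here; the adaptive selection step described in the abstract is not modeled.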

ESKNet-An enhanced adaptive selection kernel convolution for breast tumors segmentation

Nov 05, 2022

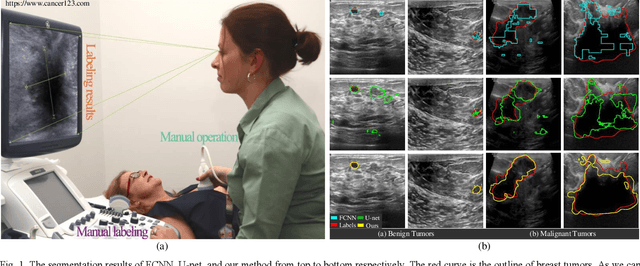

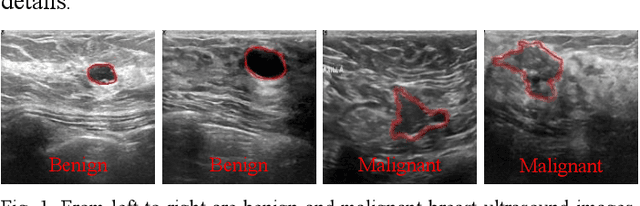

Abstract: Breast cancer is one of the most common cancers endangering the health of women globally. Accurate target lesion segmentation is essential for early clinical intervention and postoperative follow-up. Recently, many convolutional neural networks (CNNs) have been proposed to segment breast tumors from ultrasound images. However, the complex ultrasound pattern and the variable tumor shape and size make accurate segmentation of breast lesions challenging. Motivated by the selective kernel convolution, we introduce an enhanced selective kernel convolution for breast tumor segmentation, which integrates multiple feature map region representations and adaptively recalibrates the weights of these feature map regions from the channel and spatial dimensions. This region recalibration strategy enables the network to focus more on high-contributing region features and mitigate the perturbation of less useful regions. Finally, the enhanced selective kernel convolution is integrated into U-net with deep supervision constraints to adaptively capture the robust representation of breast tumors. Extensive experiments with twelve state-of-the-art deep learning segmentation methods on three public breast ultrasound datasets demonstrate that our method achieves more competitive segmentation performance in breast ultrasound images.
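The selective-kernel idea that motivates this work can be sketched as a softmax-weighted fusion of parallel branches. The code below is a minimal NumPy illustration of that general idea only, not the ESKNet module: `select_branches` is a hypothetical name, the `branches` array stands in for the outputs of convolutions with different kernel sizes, and `proj` is a made-up per-branch projection that would be learned in practice. The spatial recalibration described in the abstract is not modeled.

```python
import numpy as np

def select_branches(branches, proj):
    # branches: (B, C, H, W), one feature map per branch (e.g. per kernel size).
    # proj:     (B, C, C), hypothetical per-branch projection of the descriptor.
    fused = branches.sum(axis=0)                    # (C, H, W)
    desc = fused.mean(axis=(1, 2))                  # (C,) global channel descriptor
    logits = proj @ desc                            # (B, C)
    logits = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)   # softmax across branches
    # Each channel takes a convex combination of the branch outputs.
    out = (weights[:, :, None, None] * branches).sum(axis=0)
    return out, weights

rng = np.random.default_rng(0)
branches = rng.normal(size=(2, 4, 8, 8))
proj = rng.normal(size=(2, 4, 4))
out, weights = select_branches(branches, proj)
print(out.shape, weights.sum(axis=0))
```

Because the weights for each channel sum to one across branches, the output stays on the same scale as the inputs, and identical branches pass through unchanged.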

BAGNet: Bidirectional Aware Guidance Network for Malignant Breast lesions Segmentation

Apr 28, 2022

Abstract: Breast lesion segmentation is an important step in computer-aided diagnosis systems, and it has attracted much attention. However, accurate segmentation of malignant breast lesions is a challenging task due to the effects of heterogeneous structure and similar intensity distributions. In this paper, a novel bidirectional aware guidance network (BAGNet) is proposed to segment the malignant lesion from breast ultrasound images. Specifically, the bidirectional aware guidance network is used to capture the context between global (low-level) and local (high-level) features from the input coarse saliency map. The introduction of the global feature map can reduce the interference of surrounding tissue (background) on the lesion regions. To evaluate the segmentation performance of the network, we compare it with several state-of-the-art medical image segmentation methods on a public breast ultrasound dataset using six commonly used evaluation metrics. Extensive experimental results indicate that our method achieves the most competitive segmentation results on malignant breast ultrasound images.

AAU-net: An Adaptive Attention U-net for Breast Lesions Segmentation in Ultrasound Images

Apr 26, 2022

Abstract: Various deep learning methods have been proposed to segment breast lesions from ultrasound images. However, similar intensity distributions, variable tumor morphology and blurred boundaries present challenges for breast lesion segmentation, especially for malignant tumors with irregular shapes. Considering the complexity of ultrasound images, we develop an adaptive attention U-net (AAU-net) to segment breast lesions automatically and stably from ultrasound images. Specifically, we introduce a hybrid adaptive attention module, which mainly consists of a channel self-attention block and a spatial self-attention block, to replace the traditional convolution operation. Compared with the conventional convolution operation, the hybrid adaptive attention module can capture more features under different receptive fields. Different from existing attention mechanisms, the hybrid adaptive attention module can guide the network to adaptively select more robust representations in the channel and spatial dimensions to cope with more complex breast lesion segmentation. Extensive experiments with several state-of-the-art deep learning segmentation methods on three public breast ultrasound datasets show that our method has better performance on breast lesion segmentation. Furthermore, robustness analysis and external experiments demonstrate that our proposed AAU-net has better generalization performance on the segmentation of breast lesions. Moreover, the hybrid adaptive attention module can be flexibly applied to existing network frameworks.
