Jiande Sun

Machine Learning-Driven Student Performance Prediction for Enhancing Tiered Instruction

Feb 05, 2025

Abstract: Student performance prediction is one of the most important subjects in educational data mining. Machine learning offers powerful capabilities in feature extraction and data modeling, and recent studies confirm its effectiveness in educational data mining. However, despite extensive prediction experiments, machine learning methods have not been effectively integrated into practical teaching strategies, which hinders their application in modern education. In addition, feeding a massive number of features into machine learning algorithms often leads to information redundancy, which can reduce prediction accuracy. How to use machine learning methods effectively to predict student performance, and how to integrate the prediction results with actual teaching scenarios, is therefore a worthwhile research question. To this end, this study integrates the results of machine learning-based student performance prediction with tiered instruction, aiming to enhance student outcomes in the target course, which is significant for the application of educational data mining in contemporary teaching scenarios. Specifically, we collect original educational data and perform feature selection to reduce information redundancy. Then, the performance of five representative machine learning methods is analyzed and discussed, with Random Forest showing the best performance. Furthermore, tiered instruction is applied based on the resulting classification of students, with different teaching objectives and content set for each level of students. A comparison of teaching outcomes between the control and experimental classes, along with an analysis of questionnaire results, demonstrates the effectiveness of the proposed framework.
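The abstract above does not say which feature selection method is used. As a minimal sketch of what "reducing information redundancy" can mean in practice, the following drops any feature that is almost perfectly correlated with one already kept. The column names, the data, and the 0.95 threshold are all hypothetical, and this simple correlation filter is an assumption for illustration, not the study's procedure.

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length numeric lists.
    Assumes neither list is constant (otherwise the denominator is zero)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def drop_redundant(columns, threshold=0.95):
    """Keep a feature only if it is not strongly correlated with a kept one."""
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) < threshold for k in kept):
            kept.append(name)
    return kept

# Hypothetical toy data: the second column is a rescaled copy of the first.
columns = {
    "homework_score": [60, 70, 80, 90, 100],
    "homework_percent": [0.6, 0.7, 0.8, 0.9, 1.0],
    "attendance": [5, 3, 4, 5, 2],
}
selected = drop_redundant(columns)
```

Here `homework_percent` carries no information beyond `homework_score`, so only the other two features would be passed on to a classifier such as a random forest.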

Concept-Aware Latent and Explicit Knowledge Integration for Enhanced Cognitive Diagnosis

Feb 04, 2025

Abstract: Cognitive diagnosis can infer students' mastery of specific knowledge concepts based on historical response logs. However, existing cognitive diagnostic models (CDMs) represent students' proficiency from a unidimensional perspective, which cannot comprehensively assess their mastery of each knowledge concept. Moreover, the Q-matrix binarizes the relationship between exercises and knowledge concepts, so it cannot represent the latent relationship between them. In particular, as the granularity of knowledge attributes becomes finer, the Q-matrix becomes increasingly incomplete, and the sparse binary representation (0/1) fails to capture the intricate relationships among knowledge concepts. To address these issues, we propose a Concept-aware Latent and Explicit Knowledge Integration model for cognitive diagnosis (CLEKI-CD). Specifically, a multidimensional vector is constructed according to the students' mastery and exercise difficulty for each knowledge concept from multiple perspectives, which enhances the representation capabilities of the model. Moreover, a latent Q-matrix is generated by our proposed attention-based knowledge aggregation method, and it can uncover the coverage degree of exercises over latent knowledge. The latent Q-matrix can supplement the sparse explicit Q-matrix with the inherent relationships among knowledge concepts and mitigate the knowledge coverage problem. Furthermore, we employ a combined cognitive diagnosis layer to integrate both latent and explicit knowledge, further enhancing cognitive diagnosis performance. Extensive experiments on real-world datasets demonstrate that CLEKI-CD outperforms the state-of-the-art models. The proposed CLEKI-CD is promising for practical applications in intelligent education, as its diagnostic results exhibit good interpretability.
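To make the idea of a soft latent Q-matrix supplementing a binary explicit one concrete, here is a minimal sketch for a single exercise. It assumes dot-product attention over hypothetical exercise and concept embeddings and an element-wise maximum as the combination rule; both choices are illustrative assumptions, not the CLEKI-CD architecture.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def latent_q_row(exercise_vec, concept_vecs):
    """Attention weights of one exercise over all concepts (dot-product scores)."""
    scores = [sum(e * c for e, c in zip(exercise_vec, vec)) for vec in concept_vecs]
    return softmax(scores)

def combine(explicit_row, latent_row):
    """Hypothetical combination rule: keep explicit 1s, fill 0s with latent weights."""
    return [max(e, l) for e, l in zip(explicit_row, latent_row)]

# Hypothetical embeddings: three concepts, one exercise, two dimensions.
concept_vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
exercise_vec = [2.0, 0.0]
explicit_row = [1, 0, 0]  # binary Q-matrix row: only concept 0 is annotated

latent_row = latent_q_row(exercise_vec, concept_vecs)
combined_row = combine(explicit_row, latent_row)
```

In this toy case the explicit row marks only concept 0, while the latent row also assigns noticeable weight to concept 2, whose embedding overlaps with the exercise. That is the kind of unannotated relationship a binary Q-matrix cannot express.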

Multimodal Inverse Attention Network with Intrinsic Discriminant Feature Exploitation for Fake News Detection

Feb 03, 2025

Abstract: Multimodal fake news detection has garnered significant attention due to its profound implications for social security. While existing approaches have contributed to understanding cross-modal consistency, they often fail to leverage modal-specific representations and explicit discrepant features. To address these limitations, we propose a Multimodal Inverse Attention Network (MIAN), a novel framework that explores intrinsic discriminative features based on news content to advance fake news detection. Specifically, MIAN introduces a hierarchical learning module that captures diverse intra-modal relationships through local-to-global and local-to-local interactions, thereby generating enhanced unimodal representations to improve the identification of fake news at the intra-modal level. Additionally, a cross-modal interaction module employs a co-attention mechanism to establish and model dependencies between the refined unimodal representations, facilitating seamless semantic integration across modalities. To explicitly extract inconsistency features, we propose an inverse attention mechanism that effectively highlights the conflicting patterns and semantic deviations introduced by fake news in both intra- and inter-modality. Extensive experiments on benchmark datasets demonstrate that MIAN significantly outperforms state-of-the-art methods, underscoring its pivotal contribution to advancing social security through enhanced multimodal fake news detection.

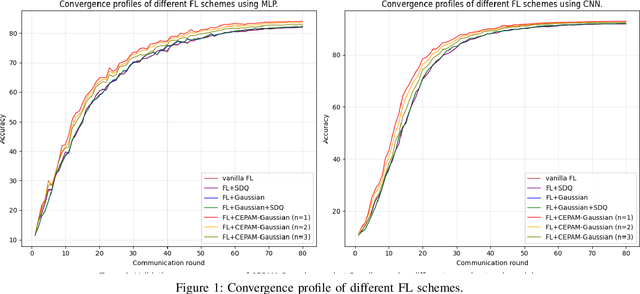

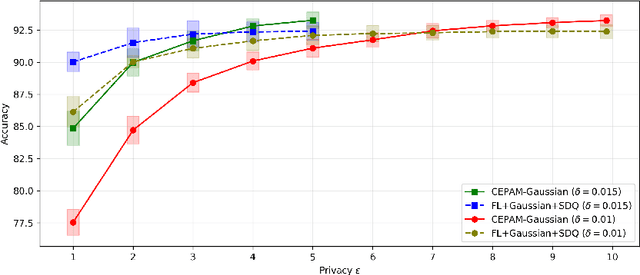

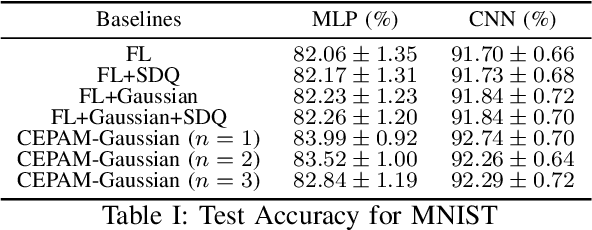

Communication-Efficient and Privacy-Adaptable Mechanism for Federated Learning

Jan 21, 2025

Abstract: Training machine learning models on decentralized private data via federated learning (FL) poses two key challenges: communication efficiency and privacy protection. In this work, we address these challenges within the trusted aggregator model by introducing a novel approach called the Communication-Efficient and Privacy-Adaptable Mechanism (CEPAM), which achieves both objectives simultaneously. In particular, CEPAM leverages the rejection-sampled universal quantizer (RSUQ), a randomized vector quantizer construction whose resulting distortion is equivalent to a prescribed noise, such as Gaussian or Laplace noise, enabling joint differential privacy and compression. Moreover, we analyze the trade-offs among user privacy, global utility, and transmission rate of CEPAM by defining appropriate metrics for FL with differential privacy and compression. CEPAM provides the additional benefit of privacy adaptability, allowing clients and the server to customize privacy protection based on required accuracy and protection. We assess CEPAM's utility performance using the MNIST dataset, demonstrating that CEPAM surpasses baseline models in terms of learning accuracy.
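The statement that a quantizer's distortion can be "equivalent to a prescribed noise" is easiest to see in the simplest relative of RSUQ: a scalar quantizer with subtractive dither. The sketch below implements only that building block, whose reconstruction error is uniform on one quantization cell regardless of the input. It is not RSUQ itself, which uses rejection sampling to reach other noise shapes such as Gaussian or Laplace. The step size, input value, and seed are arbitrary choices for illustration.

```python
import random

def dithered_quantize(x, step, rng):
    """Encoder: add shared uniform dither, then round to the nearest grid index."""
    u = rng.uniform(-step / 2, step / 2)  # dither, assumed known to both sides
    index = round((x + u) / step)         # the integer that would be transmitted
    return index, u

def reconstruct(index, u, step):
    """Decoder: map the index back to the grid and subtract the same dither."""
    return index * step - u

rng = random.Random(0)
step = 0.5
x = 0.3141

errors = []
for _ in range(10000):
    index, u = dithered_quantize(x, step, rng)
    errors.append(reconstruct(index, u, step) - x)

max_abs_error = max(abs(e) for e in errors)
mean_error = sum(errors) / len(errors)
```

Only the integer `index` needs to be sent, which is where the compression comes from, while the receiver ends up with `x` plus noise that never exceeds half a step in magnitude and averages to roughly zero.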

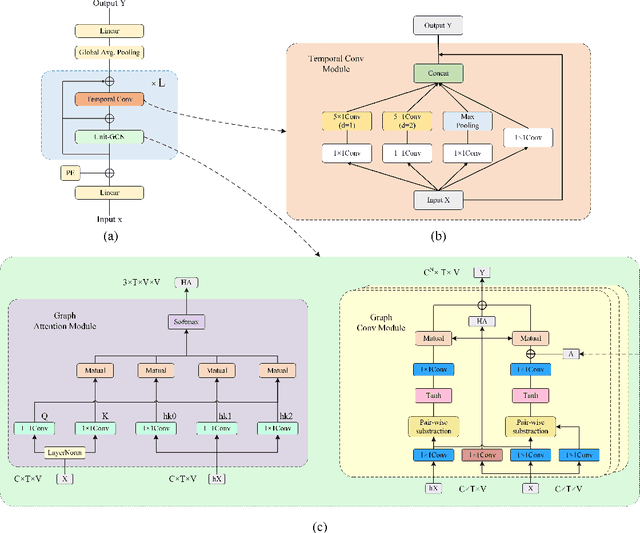

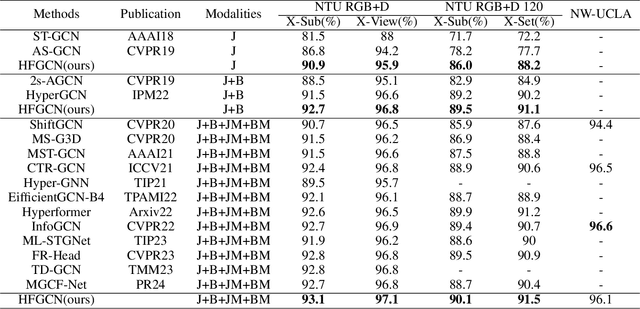

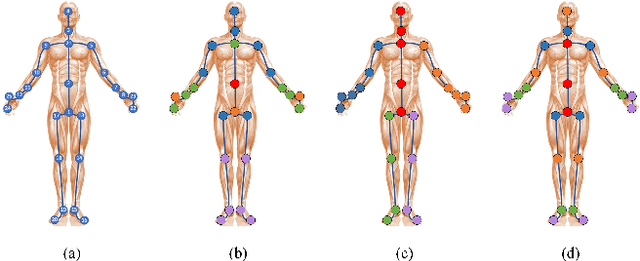

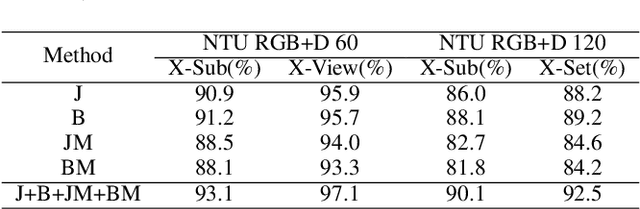

HFGCN: Hypergraph Fusion Graph Convolutional Networks for Skeleton-Based Action Recognition

Jan 19, 2025

Abstract: In recent years, action recognition has received much attention and found wide application due to its important role in video understanding. Most research on action recognition has focused on improving performance via various deep learning methods rather than on the classification of skeleton points, and the topological modeling between skeleton points and body parts has seldom been considered. Although some studies have used a data-driven approach to classify the topology of the skeleton points, the kinematic nature of the skeleton points has not been taken into consideration. Therefore, in this paper, we draw on the theory of kinematics to adapt the topological relations of the skeleton points and propose a topological relation classification based on body parts and distance from the core of the body. To synthesize these topological relations for action recognition, we propose a novel Hypergraph Fusion Graph Convolutional Network (HFGCN). In particular, the proposed model is able to focus on the human skeleton points and the different body parts simultaneously when constructing the topology, which clearly improves recognition accuracy. We use a hypergraph to represent the categorical relationships of these skeleton points and incorporate the hypergraph into a graph convolution network to model the higher-order relationships among the skeleton points and enhance the feature representation of the network. In addition, our proposed hypergraph attention module and hypergraph graph convolution module optimize topology modeling in the temporal and channel dimensions, respectively, to further enhance the feature representation of the network. We conducted extensive experiments on three widely used datasets. The results validate that our proposed method achieves the best performance when compared with state-of-the-art skeleton-based methods.
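A hypergraph differs from an ordinary skeleton graph in that one hyperedge can connect any number of joints, for example all joints of one body part. The following minimal sketch shows one round of feature aggregation over such hyperedges: features are averaged within each hyperedge and then averaged back onto each joint. The five-joint layout, the grouping into hyperedges, and the plain averaging rule are hypothetical simplifications and do not reproduce the HFGCN modules.

```python
def hypergraph_aggregate(features, hyperedges):
    """One aggregation step: vertex -> hyperedge mean -> vertex mean.

    features:   list of per-joint feature vectors (lists of floats)
    hyperedges: list of sets of joint indices
    A joint that belongs to no hyperedge keeps its original features.
    """
    dim = len(features[0])
    edge_means = [
        [sum(features[v][d] for v in edge) / len(edge) for d in range(dim)]
        for edge in hyperedges
    ]
    output = []
    for v in range(len(features)):
        incident = [m for m, edge in zip(edge_means, hyperedges) if v in edge]
        if not incident:
            output.append(list(features[v]))
            continue
        output.append([sum(m[d] for m in incident) / len(incident) for d in range(dim)])
    return output

# Hypothetical joints: 0 torso, 1 left shoulder, 2 left hand,
# 3 right shoulder, 4 right hand.
features = [[0.0], [2.0], [4.0], [6.0], [8.0]]
hyperedges = [
    {1, 2},     # left arm
    {3, 4},     # right arm
    {0, 1, 3},  # joints close to the core of the body
]
aggregated = hypergraph_aggregate(features, hyperedges)
```

The left shoulder (joint 1) belongs to both the left-arm and the core hyperedges, so its new feature mixes information from both groups, which a pairwise bone graph would need several hops to achieve.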

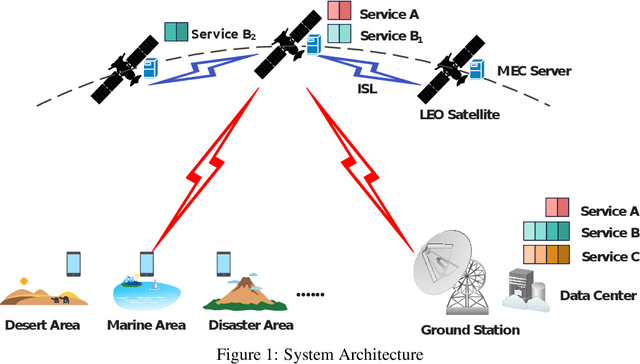

Cost-Efficient Computation Offloading and Service Chain Caching in LEO Satellite Networks

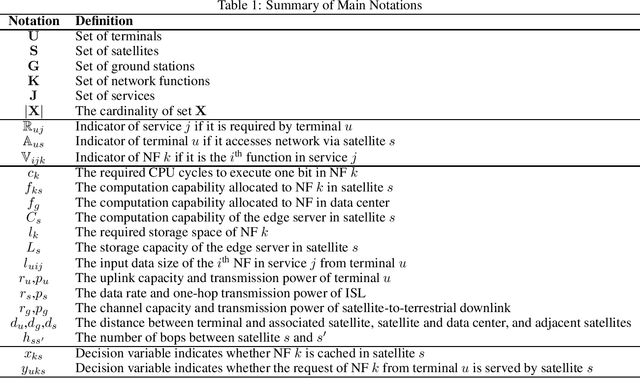

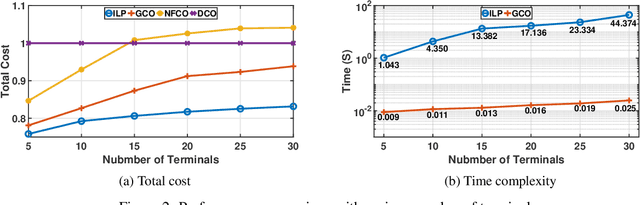

Nov 14, 2023

Abstract: The ever-increasing demand for ubiquitous, continuous, and high-quality services poses a great challenge to the traditional terrestrial network. To mitigate this problem, the mobile-edge-computing-enhanced low earth orbit (LEO) satellite network, which provides both communication connectivity and on-board processing services, has emerged as an effective method. The main issues in LEO satellite networks include finding the optimal locations to host network functions (NFs) and then making offloading decisions. In this article, we jointly consider the problem of service chain caching and computation offloading to minimize the overall cost, which consists of task latency and energy consumption. In particular, the collaboration among satellites, the network resource limitations, and the specific operation order of NFs in service chains are taken into account. Then, the problem is formulated and linearized as an integer linear programming model. Moreover, to accelerate the solution, we provide a greedy algorithm with cubic time complexity. Numerical investigations demonstrate the effectiveness of the proposed scheme, which can reduce the overall cost by around 20% compared to the nominal case where NFs are served in data centers.

CPFES: Physical Fitness Evaluation Based on Canadian Agility and Movement Skill Assessment

Aug 28, 2023

Abstract: In recent years, the assessment of fundamental movement skills integrated with physical education has focused on both teaching practice and the feasibility of assessment. The object of assessment has shifted from multiple ages to subdivided ages, while the content of assessment has changed from complex and time-consuming to concise and efficient. Therefore, we apply deep learning to physical fitness evaluation and propose the CAMSA Physical Fitness Evaluation System (CPFES), which evaluates children's physical fitness based on the Canadian Agility and Movement Skill Assessment (CAMSA) and gives recommendations based on the resulting scores to help children grow. We have designed a landmark detection module and a pose estimation module, as well as a pose evaluation module for the CAMSA criteria that can effectively evaluate the actions of the child being tested. Our experimental results demonstrate the high accuracy of the proposed system.

Robust Single Image Dehazing Based on Consistent and Contrast-Assisted Reconstruction

Mar 29, 2022

Abstract: Single image dehazing, as a fundamental low-level vision task, is essential for the development of robust intelligent surveillance systems. In this paper, we make an early effort to consider dehazing robustness under varying haze density, which is a realistic yet under-studied problem in the research field of single image dehazing. To properly address this problem, we propose a novel density-variational learning framework that improves the robustness of the image dehazing model, assisted by a variety of negative hazy images, to better deal with various complex hazy scenarios. Specifically, the dehazing network is optimized under the consistency-regularized framework with the proposed Contrast-Assisted Reconstruction Loss (CARL). The CARL can fully exploit the negative information to facilitate the traditional positive-oriented dehazing objective function, by squeezing the dehazed image toward its clean target from different directions. Meanwhile, the consistency regularization keeps the outputs consistent given multi-level hazy images, thus improving model robustness. Extensive experimental results on two synthetic and three real-world datasets demonstrate that our method significantly surpasses the state-of-the-art approaches.

GaitStrip: Gait Recognition via Effective Strip-based Feature Representations and Multi-Level Framework

Mar 08, 2022

Abstract: Many gait recognition methods first partition the human gait into N parts and then combine them to establish part-based feature representations. Their gait recognition performance is often affected by partitioning strategies, which are empirically chosen in different datasets. However, we observe that strips, as the basic component of parts, are agnostic to different partitioning strategies. Motivated by this observation, we present a strip-based multi-level gait recognition network, named GaitStrip, to extract comprehensive gait information at different levels. To be specific, our high-level branch explores the context of gait sequences and our low-level one focuses on detailed posture changes. We introduce a novel StriP-Based feature extractor (SPB) to learn the strip-based feature representations by directly taking each strip of the human body as the basic unit. Moreover, we propose a novel multi-branch structure, called Enhanced Convolution Module (ECM), to extract different representations of gaits. ECM consists of the Spatial-Temporal feature extractor (ST), the Frame-Level feature extractor (FL) and SPB, and has two obvious advantages. First, each branch focuses on a specific representation, which can be used to improve the robustness of the network. Specifically, ST aims to extract spatial-temporal features of gait sequences, while FL is used to generate the feature representation of each frame. Second, the parameters of the ECM can be reduced at test time by introducing a structural re-parameterization technique. Extensive experimental results demonstrate that our GaitStrip achieves state-of-the-art performance in both normal walking and complex conditions.
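Structural re-parameterization rests on the linearity of convolution: the summed output of parallel convolution branches equals the output of a single convolution whose kernel is the sum of the (zero-padded) branch kernels. The sketch below checks this in one dimension with a 3-tap branch and a 1-tap branch. The kernels and the signal are arbitrary toy values, and this generic two-branch example is an assumption for illustration rather than the actual ECM branches.

```python
def conv1d(signal, kernel):
    """'Same'-size 1-D cross-correlation with zero padding (odd kernel length)."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]

def merge_branches(kernel3, kernel1):
    """Pad the 1-tap kernel to 3 taps (centered) and add it to the 3-tap kernel."""
    return [kernel3[0], kernel3[1] + kernel1[0], kernel3[2]]

signal = [1.0, 2.0, 3.0, 4.0]
k3 = [0.5, -1.0, 0.25]  # 3-tap branch
k1 = [2.0]              # 1-tap branch

# Training-time view: two branches evaluated separately, outputs summed.
two_branch = [a + b for a, b in zip(conv1d(signal, k3), conv1d(signal, k1))]

# Test-time view: a single convolution with the merged kernel.
merged = conv1d(signal, merge_branches(k3, k1))
```

Both views produce the same output, so the extra branch can be folded away after training, leaving fewer parameters and one convolution to evaluate at test time.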

Single Image Dehazing with An Independent Detail-Recovery Network

Sep 22, 2021

Abstract: Single image dehazing is a prerequisite that affects the performance of many computer vision tasks and has attracted increasing attention in recent years. However, most existing dehazing methods place more emphasis on haze removal than on the detail recovery of the dehazed images. In this paper, we propose a single image dehazing method with an independent Detail Recovery Network (DRN), which captures the details from the input image with a separate network and then integrates them into a coarse dehazed image. The overall network consists of two independent networks, the DRN and the dehazing network. Specifically, the DRN aims to recover the dehazed image details through a local branch and a global branch. The local branch obtains local detail information through a convolution layer, and the global branch captures more global information through the Smooth Dilated Convolution (SDC). The detail feature map is fused into the coarse dehazed image to obtain the dehazed image with rich image details. Besides, we integrate the DRN, the physical-model-based dehazing network and the reconstruction loss into an end-to-end joint learning framework. Extensive experiments on public image dehazing datasets (RESIDE-Indoor, RESIDE-Outdoor and TrainA-TestA) illustrate the effectiveness of the modules in the proposed method and show that our method outperforms the state-of-the-art dehazing methods both quantitatively and qualitatively. The code is released at https://github.com/YanLi-LY/Dehazing-DRN.
