Yantong Wang

Cost-Efficient Computation Offloading and Service Chain Caching in LEO Satellite Networks

Nov 14, 2023

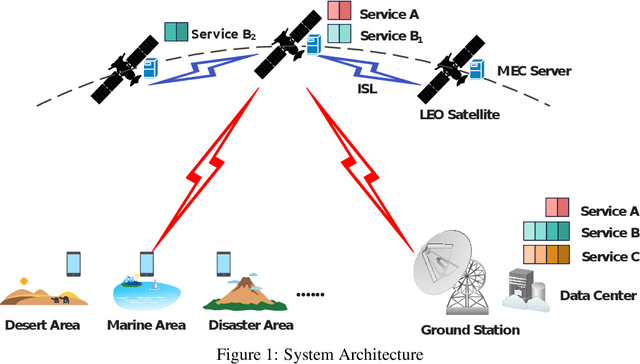

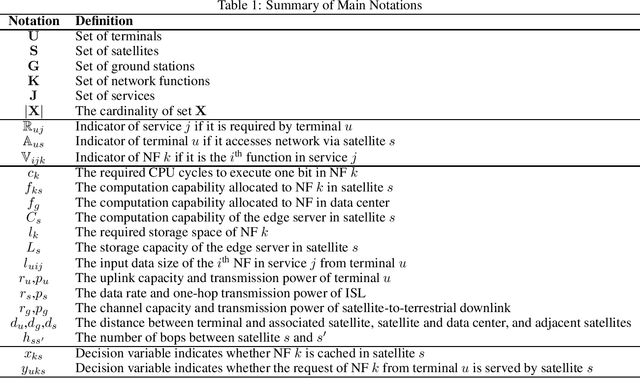

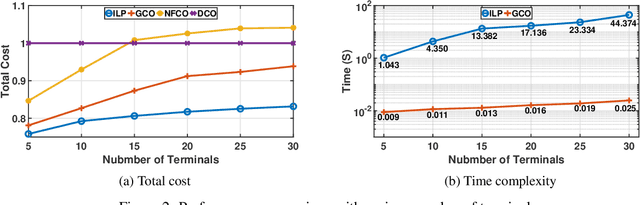

Abstract: The ever-increasing demand for ubiquitous, continuous, and high-quality services poses a great challenge to traditional terrestrial networks. To mitigate this problem, the mobile-edge-computing-enhanced low earth orbit (LEO) satellite network, which provides both communication connectivity and on-board processing services, has emerged as an effective solution. The main issues in LEO satellite networks include finding the optimal locations to host network functions (NFs) and then making offloading decisions. In this article, we jointly consider service chain caching and computation offloading to minimize the overall cost, which consists of task latency and energy consumption. In particular, the collaboration among satellites, the network resource limitations, and the specific operation order of NFs in service chains are taken into account. The problem is then formulated and linearized as an integer linear programming model. Moreover, to accelerate the solution, we provide a greedy algorithm with cubic time complexity. Numerical investigations demonstrate the effectiveness of the proposed scheme, which can reduce the overall cost by around 20% compared to the nominal case where NFs are served in data centers.
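To make the idea of a latency plus energy cost concrete, the following is a minimal sketch of a weighted-sum cost and a capacity-aware greedy placement. It is not the paper's cubic-time algorithm, whose details are not given in the abstract: the `Site` type, the per-unit latency and energy coefficients, the weight `w`, and the largest-task-first order are all hypothetical, and service chains and NF ordering are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A hypothetical place where a task can run (e.g. the access satellite,
    a neighbouring satellite, or a ground data center)."""
    name: str
    capacity: float          # remaining compute capacity (arbitrary units)
    latency_per_unit: float  # latency incurred per unit of load (assumed linear)
    energy_per_unit: float   # energy consumed per unit of load (assumed linear)


def weighted_cost(site: Site, load: float, w: float) -> float:
    """Overall cost as a weighted sum of latency and energy, with w in [0, 1]."""
    return load * (w * site.latency_per_unit + (1 - w) * site.energy_per_unit)


def greedy_offload(loads: list[float], sites: list[Site], w: float = 0.5) -> dict[int, str]:
    """Place the largest tasks first, each on the cheapest site that still fits it."""
    assignment: dict[int, str] = {}
    for i in sorted(range(len(loads)), key=lambda i: -loads[i]):
        feasible = [s for s in sites if s.capacity >= loads[i]]
        if not feasible:
            raise ValueError(f"task {i} does not fit on any site")
        best = min(feasible, key=lambda s: weighted_cost(s, loads[i], w))
        best.capacity -= loads[i]  # consume capacity on the chosen site
        assignment[i] = best.name
    return assignment


sites = [Site("satellite", 5, 1.0, 2.0), Site("data_center", 100, 4.0, 1.0)]
print(greedy_offload([3, 4, 2], sites))
# {1: 'satellite', 0: 'data_center', 2: 'data_center'}
```

The satellite is cheaper per unit here, so the largest task takes it and the remaining tasks overflow to the data center once its capacity runs out. This sketch runs in O(n log n + n·m) for n tasks and m sites; richer models with chained NFs are what push an exact or greedy solver toward higher complexity.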

A Minmax Utilization Algorithm for Network Traffic Scheduling of Industrial Robots

Nov 02, 2021

Abstract: Emerging 5G and beyond wireless industrial virtualized networks are expected to support a significant number of robotic manipulators. Depending on the processes involved, these industrial robots might generate a significant volume of multi-modal traffic that will need to traverse the network all the way to the (public/private) edge cloud, where advanced processing, control, and service orchestration will take place. In this paper, we perform traffic engineering by capitalizing on the underlying pseudo-deterministic nature of the repetitive processes of robotic manipulators in an industrial environment, and we propose an integer linear programming (ILP) model to minimize the maximum aggregate traffic in the network. The task sequence and time gap requirements are also considered in the proposed model. To tackle the curse of dimensionality in ILP, we provide a random search algorithm with quadratic time complexity. Numerical investigations reveal that the proposed scheme can reduce the peak data rate by up to 53.4% compared with the nominal case where robotic manipulators operate in an uncoordinated fashion, resulting in a significant improvement in the utilization of the underlying network resources.
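Because each robot repeats the same traffic pattern, one way to picture "minimize the maximum aggregate traffic" is to choose a start offset per robot so that bursts do not pile up in the same time slot. The sketch below assumes each robot's traffic is a fixed periodic profile over a shared cycle and uses plain random sampling of offsets; the task-sequence and time-gap constraints, and the ILP itself, are omitted, and this is not claimed to be the paper's random search algorithm.

```python
import random


def peak_rate(profiles: list[list[float]], shifts: list[int]) -> float:
    """Peak aggregate rate when robot r's periodic traffic profile is
    cyclically delayed by shifts[r] time slots."""
    period = len(profiles[0])
    total = [0.0] * period
    for profile, shift in zip(profiles, shifts):
        for t, rate in enumerate(profile):
            total[(t + shift) % period] += rate
    return max(total)


def random_search(profiles, iterations=1000, seed=0):
    """Sample random start offsets and keep the set with the lowest peak."""
    rng = random.Random(seed)
    period = len(profiles[0])
    best_shifts = [0] * len(profiles)  # uncoordinated baseline: all start at slot 0
    best_peak = peak_rate(profiles, best_shifts)
    for _ in range(iterations):
        shifts = [rng.randrange(period) for _ in profiles]
        peak = peak_rate(profiles, shifts)
        if peak < best_peak:
            best_shifts, best_peak = shifts, peak
    return best_shifts, best_peak


# Four hypothetical robots, each bursting 4 units in the first slot of a 4-slot cycle.
profiles = [[4, 0, 0, 0]] * 4
print(peak_rate(profiles, [0, 0, 0, 0]))  # uncoordinated peak: 16.0
shifts, peak = random_search(profiles)
print(shifts, peak)
```

In this toy case, staggering the four robots into different slots cuts the peak from 16 to 4. Each evaluation costs O(R·T) for R robots and T slots, which is why sampling is much cheaper than solving the full ILP.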

Learning from Images: Proactive Caching with Parallel Convolutional Neural Networks

Aug 15, 2021

Abstract: With the continuous trend of data explosion, delivering packets from data servers to end users places increasing stress on both the fronthaul and backhaul of mobile networks. To mitigate this problem, caching popular content closer to end users has emerged as an effective method for reducing network congestion and improving user experience. To find the optimal locations for content caching, many conventional approaches construct various mixed integer linear programming (MILP) models. However, such methods may fail to support online decision making due to the inherent curse of dimensionality. In this paper, a novel framework for proactive caching is proposed. This framework merges model-based optimization with data-driven techniques by transforming an optimization problem into a grayscale image. For parallel training and simplicity of design, the proposed MILP model is first decomposed into a number of sub-problems, and convolutional neural networks (CNNs) are then trained to predict the content caching locations of these sub-problems. Furthermore, since the MILP decomposition neglects the interactions among sub-problems, the CNNs' outputs may be infeasible solutions. Therefore, two algorithms are provided: the first uses the CNNs' predictions as an extra constraint to reduce the number of decision variables; the second employs the CNNs' outputs to accelerate local search. Numerical results show that the proposed scheme can reduce computation time by 71.6% with only 0.8% additional performance cost compared to the MILP solution, providing high-quality decisions in real time.
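The second algorithm, using predicted placements to speed up local search, can be illustrated with a small sketch: a possibly infeasible prediction is first repaired to respect cache capacities, then improved by add and swap moves. The cost model (free local hits, cheaper neighbor hits, expensive origin fetches), the cost values `c_nb` and `c_origin`, the repair rule, and the hard-coded "prediction" standing in for CNN output are all hypothetical assumptions, not the paper's formulation.

```python
import itertools


def total_cost(x, demand, neighbors, c_nb=1.0, c_origin=3.0):
    """Assumed serving cost: local hits are free, hits at a neighbouring
    cache cost c_nb per request, and misses cost c_origin per request."""
    cost = 0.0
    for n, row in enumerate(demand):
        for k, d in enumerate(row):
            if x[n][k]:
                continue
            if any(x[m][k] for m in neighbors[n]):
                cost += c_nb * d
            else:
                cost += c_origin * d
    return cost


def repair(x, demand, cap):
    """Make a predicted placement feasible by evicting the lowest-demand
    items at any node that exceeds its cache capacity."""
    x = [row[:] for row in x]
    for n, row in enumerate(x):
        cached = sorted((k for k, v in enumerate(row) if v), key=lambda k: demand[n][k])
        while len(cached) > cap[n]:
            row[cached.pop(0)] = 0
    return x


def local_search(x_pred, demand, neighbors, cap):
    """First-improvement local search over add/swap moves, warm-started
    from a (possibly infeasible) predicted placement."""
    x = repair(x_pred, demand, cap)
    best = total_cost(x, demand, neighbors)
    improved = True
    while improved:
        improved = False
        for n, row in enumerate(x):
            cached = [k for k, v in enumerate(row) if v]
            uncached = [k for k, v in enumerate(row) if not v]
            outs = cached + ([None] if len(cached) < cap[n] else [])  # None = pure add
            for k_out, k_in in itertools.product(outs, uncached):
                if k_out is not None:
                    row[k_out] = 0
                row[k_in] = 1
                cost = total_cost(x, demand, neighbors)
                if cost < best:
                    best, improved = cost, True
                    break  # keep the move and continue with the next node
                row[k_in] = 0  # undo the move
                if k_out is not None:
                    row[k_out] = 1
    return x, best


demand = [[5, 2], [5, 2]]      # requests per (node, content)
neighbors = [[1], [0]]         # the two nodes can serve each other
cap = [1, 1]                   # one cache slot per node
prediction = [[1, 1], [1, 1]]  # infeasible: violates both capacities
print(local_search(prediction, demand, neighbors, cap))  # ([[0, 1], [1, 0]], 7.0)
```

Repair alone yields both nodes caching the popular item (cost 12.0); one swap lets the nodes cooperate and drops the cost to 7.0. The better the starting prediction, the fewer moves the search should need.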

A Survey of Deep Learning for Data Caching in Edge Network

Aug 17, 2020

Abstract: The provision of edge caching in emerging 5G and beyond mobile networks is a promising method both to relieve traffic congestion in the core network and to reduce the latency of accessing popular content. In that respect, end-user demand for popular content can be satisfied by proactively caching it at the network edge, i.e., in close proximity to the users. In addition to model-based caching schemes, learning-based edge caching optimization has recently attracted significant attention, and the aim here is to capture these recent advances for both model-based and data-driven techniques in the area of proactive caching. This paper summarizes the use of deep learning for data caching in edge networks. We first outline the typical research topics in content caching and formulate a taxonomy based on the network hierarchical structure. Then, a number of key types of deep learning algorithms are presented, ranging from supervised learning to unsupervised learning as well as reinforcement learning. Furthermore, a comparison of state-of-the-art literature is provided from the aspects of caching topics and deep learning methods. Finally, we discuss research challenges and future directions of applying deep learning to caching.

Network Orchestration in Mobile Networks via a Synergy of Model-driven and AI-based Techniques

Apr 01, 2020

Abstract: As data traffic volume continues to increase, caching popular content at strategic network locations closer to the end user can not only enhance user experience but also ease the load on highly congested links in the network. A key challenge in proactive caching is finding the optimal locations to host popular content items under various optimization criteria. These problems are combinatorial in nature, and therefore finding optimal or near-optimal decisions is computationally expensive. In this paper, a framework is proposed to reduce the computational complexity of the underlying integer mathematical program by first predicting decision variables related to optimal locations using a deep convolutional neural network (CNN). The CNN is trained offline with optimal solutions and is then used to feed a much smaller optimization problem that is amenable to real-time decision making. Numerical investigations reveal that the proposed approach can provide high-quality decisions online, a feature that is crucially important for real-world implementations.
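The step of feeding predictions into "a much smaller optimization problem" can be sketched as variable fixing: decisions the predictor is confident about are fixed, and only the remaining ones are optimized. The sketch assumes a single knapsack-style cache (hypothetical per-item values and sizes), hypothetical confidence thresholds `lo` and `hi`, and brute-force enumeration in place of an integer programming solver; the probabilities are hard-coded rather than produced by a CNN.

```python
import itertools


def solve_with_fixing(values, sizes, capacity, probs, lo=0.1, hi=0.9):
    """Fix confidently predicted variables, then enumerate only the rest.

    Returns (best_x, best_value, number_of_free_variables); best_x is None
    if the fixed variables already make the problem infeasible.
    """
    fixed = {i: int(p >= hi) for i, p in enumerate(probs) if p >= hi or p <= lo}
    free = [i for i in range(len(values)) if i not in fixed]
    best_x, best_val = None, None
    for bits in itertools.product((0, 1), repeat=len(free)):
        x = [0] * len(values)
        for i, v in fixed.items():
            x[i] = v
        for i, b in zip(free, bits):
            x[i] = b
        if sum(s * xi for s, xi in zip(sizes, x)) > capacity:
            continue  # violates the cache capacity
        val = sum(v * xi for v, xi in zip(values, x))
        if best_val is None or val > best_val:
            best_x, best_val = x, val
    return best_x, best_val, len(free)


# Four candidate items; the predictor is confident only about items 0 and 3.
print(solve_with_fixing(values=[10, 7, 5, 3], sizes=[4, 3, 2, 1],
                        capacity=6, probs=[0.95, 0.5, 0.4, 0.05]))
# ([1, 0, 1, 0], 15, 2)
```

Only 2 of the 4 binary variables remain free, so the search space shrinks from 16 to 4 assignments. If confident predictions are wrong, the reduced problem can become infeasible (returning `None`), in which case a fallback to the full problem would be needed.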

Caching as an Image Characterization Problem using Deep Convolutional Neural Networks

Jul 16, 2019

Abstract: Optimizing the caching locations of popular content has received significant research attention over the last few years. This paper targets the optimization of caching locations by proposing a novel transformation of the optimization problem into a grayscale image that is fed to a deep convolutional neural network (CNN). The rationale for the proposed modeling comes from the superiority of CNNs in capturing features in grayscale images, reaching human-level performance in image recognition problems. The CNN has been trained with optimal solutions, and the numerical investigations and analyses demonstrate the promising performance of the proposed method. Therefore, to enable real-time decision making, we move away from a strictly optimization-based framework toward an amalgamation of optimization with a data-driven approach.
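The abstract does not say which problem parameters become pixels or how they are scaled. As one plausible illustration, the sketch below assumes a single node-by-content demand matrix and maps it to 8-bit gray levels with min-max scaling; the function name and the choice of input are hypothetical.

```python
def to_grayscale(matrix):
    """Min-max scale a 2-D list of numbers to 8-bit gray levels (0..255)."""
    flat = [v for row in matrix for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant input: avoid dividing by zero
        return [[0 for _ in row] for row in matrix]
    return [[round(255 * (v - lo) / (hi - lo)) for v in row] for row in matrix]


# Hypothetical request counts: rows = cache nodes, columns = content items.
demand = [[0, 2], [4, 10]]
print(to_grayscale(demand))  # [[0, 51], [102, 255]]
```

Scaling to a fixed range keeps the image statistics comparable across problem instances with very different traffic volumes, which is convenient when a single trained CNN must handle all of them.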
