Jeremy Irvin

Department of Computer Science, Stanford University, Stanford, CA, USA

Many-Shot In-Context Learning in Multimodal Foundation Models

May 16, 2024

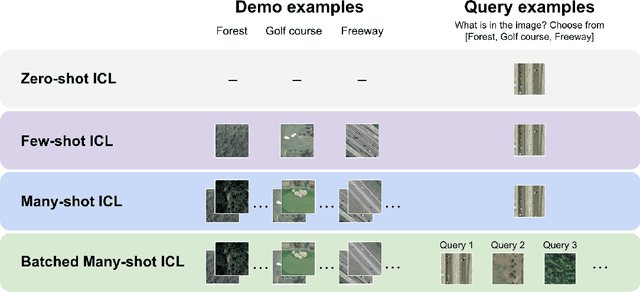

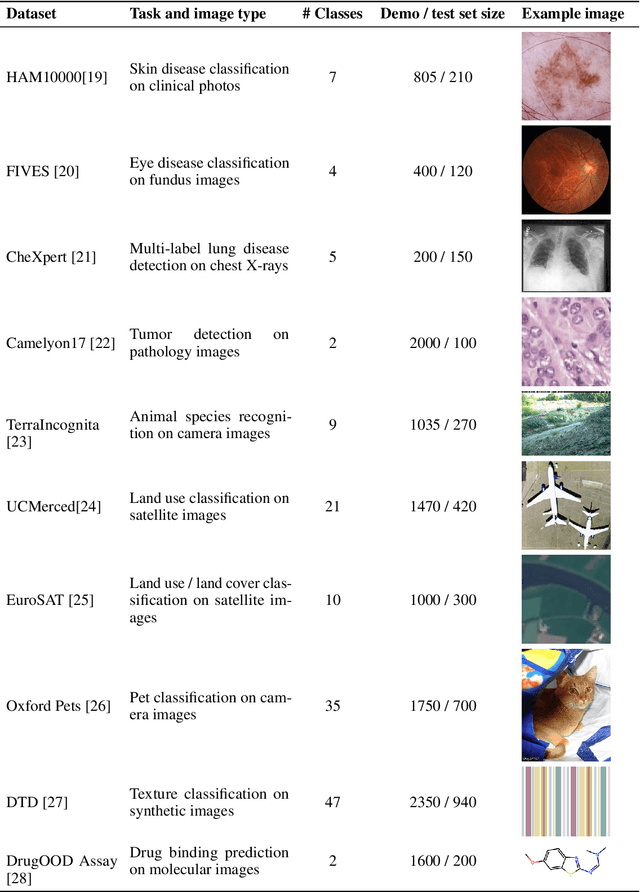

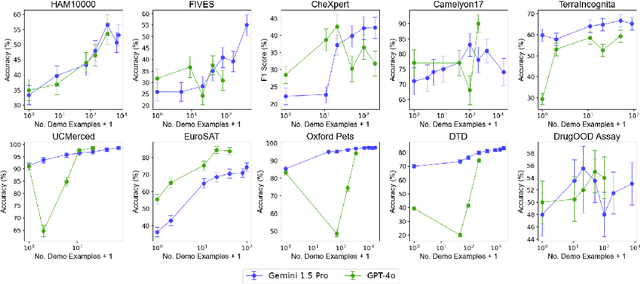

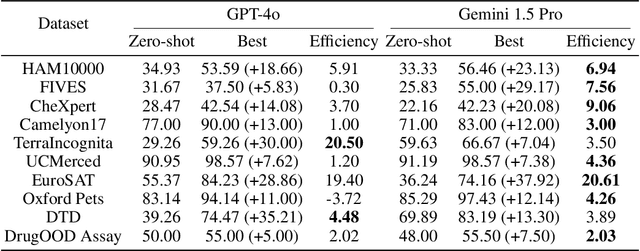

Abstract: Large language models are well-known to be effective at few-shot in-context learning (ICL). Recent advancements in multimodal foundation models have enabled unprecedentedly long context windows, presenting an opportunity to explore their capability to perform ICL with many more demonstrating examples. In this work, we evaluate the performance of multimodal foundation models scaling from few-shot to many-shot ICL. We benchmark GPT-4o and Gemini 1.5 Pro across 10 datasets spanning multiple domains (natural imagery, medical imagery, remote sensing, and molecular imagery) and tasks (multi-class, multi-label, and fine-grained classification). We observe that many-shot ICL, including up to almost 2,000 multimodal demonstrating examples, leads to substantial improvements compared to few-shot (<100 examples) ICL across all of the datasets. Further, Gemini 1.5 Pro performance continues to improve log-linearly up to the maximum number of tested examples on many datasets. Given the high inference costs associated with the long prompts required for many-shot ICL, we also explore the impact of batching multiple queries in a single API call. We show that batching up to 50 queries can lead to performance improvements under zero-shot and many-shot ICL, with substantial gains in the zero-shot setting on multiple datasets, while drastically reducing per-query cost and latency. Finally, we measure ICL data efficiency of the models, or the rate at which the models learn from more demonstrating examples. We find that while GPT-4o and Gemini 1.5 Pro achieve similar zero-shot performance across the datasets, Gemini 1.5 Pro exhibits higher ICL data efficiency than GPT-4o on most datasets. Our results suggest that many-shot ICL could enable users to efficiently adapt multimodal foundation models to new applications and domains. Our codebase is publicly available at https://github.com/stanfordmlgroup/ManyICL .
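
The idea of batching several queries behind one shared block of demonstrations can be sketched as follows. This is a minimal illustration, not the code from the ManyICL repository: the function name `build_batched_prompt`, the `<image:...>` placeholders, the file names, and the answer format are all assumptions made for the example, and a real multimodal request would attach image data rather than reference strings.

```python
from typing import List, Sequence, Tuple


def build_batched_prompt(
    demos: Sequence[Tuple[str, str]],
    queries: Sequence[str],
    class_names: Sequence[str],
) -> str:
    """Assemble one prompt holding many demonstrations and a batch of queries.

    `demos` pairs a hypothetical image reference with its label.
    """
    lines: List[str] = [
        "Classify each image into one of: " + ", ".join(class_names) + ".",
        "",
    ]
    # Demonstrations are written once and shared by every query in the batch,
    # so their token cost is spread over the whole batch.
    for i, (image_ref, label) in enumerate(demos, start=1):
        lines.append(f"Example {i}: <image:{image_ref}> Answer: {label}")
    lines.append("")
    # Queries are numbered so the answers can be matched back to them.
    for j, image_ref in enumerate(queries, start=1):
        lines.append(f"Question {j}: <image:{image_ref}>")
    lines.append("")
    lines.append(
        f"Answer all {len(queries)} questions, one per line, as 'Answer j: <class>'."
    )
    return "\n".join(lines)


# Hypothetical file names, for illustration only.
demos = [("train_001.png", "cat"), ("train_002.png", "dog")]
queries = ["test_001.png", "test_002.png", "test_003.png"]
prompt = build_batched_prompt(demos, queries, ["cat", "dog"])
print(prompt)
```

Under this layout, a demonstration block of D tokens shared by B queries contributes roughly D/B tokens per query, which is the source of the per-query cost reduction the abstract describes.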

CloudTracks: A Dataset for Localizing Ship Tracks in Satellite Images of Clouds

Jan 25, 2024

Abstract: Clouds play a significant role in global temperature regulation through their effect on planetary albedo. Anthropogenic emissions of aerosols can alter the albedo of clouds, but the extent of this effect, and its consequent impact on temperature change, remains uncertain. Human-induced clouds caused by ship aerosol emissions, commonly referred to as ship tracks, provide visible manifestations of this effect distinct from adjacent cloud regions and therefore serve as a useful sandbox to study human-induced clouds. However, the lack of large-scale ship track data makes it difficult to deduce their general effects on cloud formation. Towards developing automated approaches to localize ship tracks at scale, we present CloudTracks, a dataset containing 3,560 satellite images labeled with more than 12,000 ship track instance annotations. We train semantic segmentation and instance segmentation model baselines on our dataset and find that our best model substantially outperforms previous state-of-the-art for ship track localization (61.29 vs. 48.65 IoU). We also find that the best instance segmentation model is able to identify the number of ship tracks in each image more accurately than the previous state-of-the-art (1.64 vs. 4.99 MAE). However, we identify cases where the best model struggles to accurately localize and count ship tracks, so we believe CloudTracks will stimulate novel machine learning approaches to better detect elongated and overlapping features in satellite images. We release our dataset openly at zenodo.org/records/10042922.
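
For readers unfamiliar with the two metrics quoted above, the sketch below computes intersection over union (IoU) for a pair of binary masks and mean absolute error (MAE) for per-image instance counts. It uses the standard definitions only; how the paper aggregates IoU across images is not stated in the abstract, and the empty-mask convention here is an assumption. The function returns a fraction, whereas the abstract reports IoU on a 0-100 scale.

```python
from typing import Sequence


def iou(pred_mask: Sequence[Sequence[int]], true_mask: Sequence[Sequence[int]]) -> float:
    """Intersection over union of two binary masks of equal shape."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            intersection += int(bool(p) and bool(t))
            union += int(bool(p) or bool(t))
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


def count_mae(pred_counts: Sequence[int], true_counts: Sequence[int]) -> float:
    """Mean absolute error between predicted and true per-image counts."""
    errors = [abs(p - t) for p, t in zip(pred_counts, true_counts)]
    return sum(errors) / len(errors)


# Toy masks: 2 pixels overlap, 4 pixels are covered by either mask.
pred = [[1, 1, 0], [0, 1, 0]]
true = [[1, 0, 0], [0, 1, 1]]
mask_iou = iou(pred, true)

# Toy counts: absolute errors are 1, 0 and 2.
mae = count_mae([3, 5, 2], [4, 5, 0])
print(mask_iou, mae)
```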

An Empirical Study of Automated Mislabel Detection in Real World Vision Datasets

Dec 02, 2023

Abstract: Major advancements in computer vision can primarily be attributed to the use of labeled datasets. However, acquiring labels for datasets often results in errors which can harm model performance. Recent works have proposed methods to automatically identify mislabeled images, but developing strategies to effectively implement them in real world datasets has been sparsely explored. Towards improved data-centric methods for cleaning real world vision datasets, we first conduct more than 200 experiments carefully benchmarking recently developed automated mislabel detection methods on multiple datasets under a variety of synthetic and real noise settings with varying noise levels. We compare these methods to a Simple and Efficient Mislabel Detector (SEMD) that we craft, and find that SEMD performs similarly to or outperforms prior mislabel detection approaches. We then apply SEMD to multiple real world computer vision datasets and test how dataset size, mislabel removal strategy, and mislabel removal amount further affect model performance after retraining on the cleaned data. With careful design of the approach, we find that mislabel removal leads to per-class performance improvements of up to 8% for a retrained classifier in smaller data regimes.

USat: A Unified Self-Supervised Encoder for Multi-Sensor Satellite Imagery

Dec 02, 2023

Abstract: Large, self-supervised vision models have led to substantial advancements for automatically interpreting natural images. Recent works have begun tailoring these methods to remote sensing data which has rich structure with multi-sensor, multi-spectral, and temporal information providing massive amounts of self-labeled data that can be used for self-supervised pre-training. In this work, we develop a new encoder architecture called USat that can input multi-spectral data from multiple sensors for self-supervised pre-training. USat is a vision transformer with modified patch projection layers and positional encodings to model spectral bands with varying spatial scales from multiple sensors. We integrate USat into a Masked Autoencoder (MAE) self-supervised pre-training procedure and find that a pre-trained USat outperforms state-of-the-art self-supervised MAE models trained on remote sensing data on multiple remote sensing benchmark datasets (up to 8%) and leads to improvements in low data regimes (up to 7%). Code and pre-trained weights are available at https://github.com/stanfordmlgroup/USat .

Weakly-semi-supervised object detection in remotely sensed imagery

Nov 29, 2023

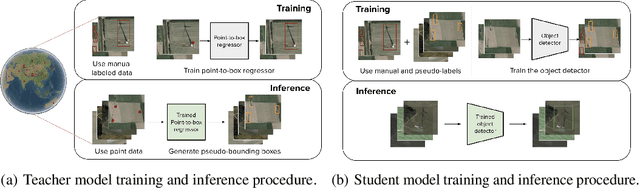

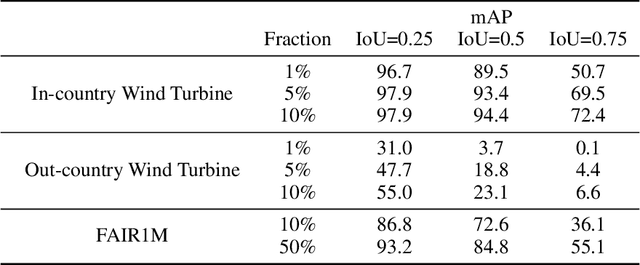

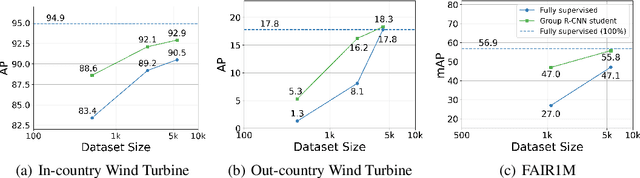

Abstract: Deep learning for detecting objects in remotely sensed imagery can enable new technologies for important applications including mitigating climate change. However, these models often require large datasets labeled with bounding box annotations which are expensive to curate, prohibiting the development of models for new tasks and geographies. To address this challenge, we develop weakly-semi-supervised object detection (WSSOD) models on remotely sensed imagery which can leverage a small number of bounding boxes together with a large number of point labels that are easy to acquire at scale in geospatial data. We train WSSOD models which use large amounts of point-labeled images with varying fractions of bounding box labeled images in FAIR1M and a wind turbine detection dataset, and demonstrate that they substantially outperform fully supervised models trained with the same amount of bounding box labeled images on both datasets. Furthermore, we find that the WSSOD models trained with 2-10x fewer bounding box labeled images can perform similarly to or outperform fully supervised models trained on the full set of bounding-box labeled images. We believe that the approach can be extended to other remote sensing tasks to reduce reliance on bounding box labels and increase development of models for impactful applications.

How to Train Your CheXDragon: Training Chest X-Ray Models for Transfer to Novel Tasks and Healthcare Systems

May 13, 2023

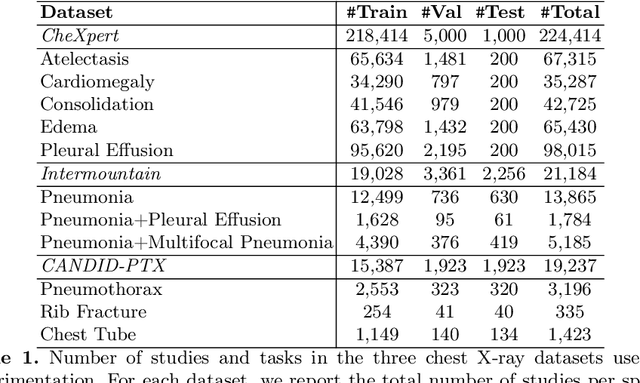

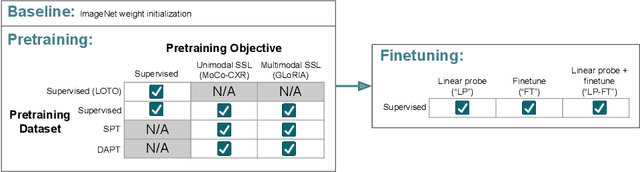

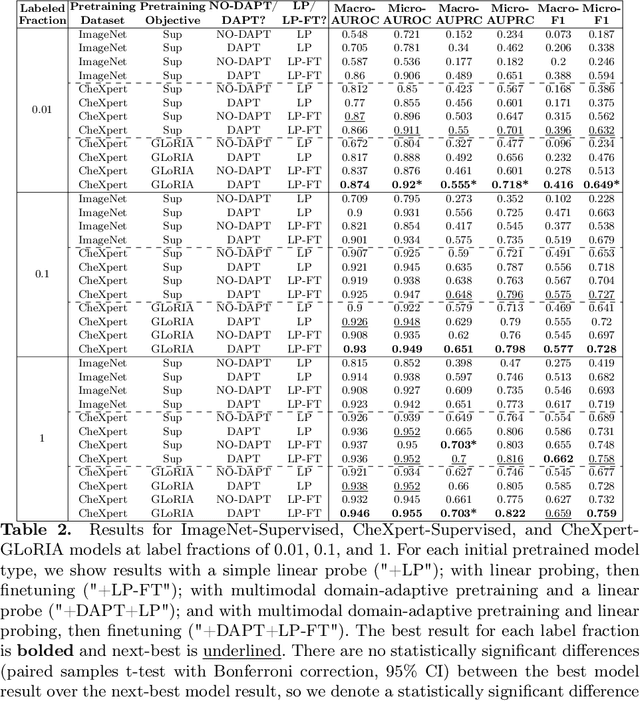

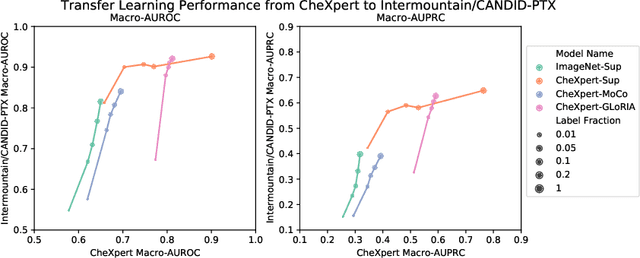

Abstract: Self-supervised learning (SSL) enables label-efficient training for machine learning models. This is essential for domains such as medical imaging, where labels are costly and time-consuming to curate. However, the most effective supervised or SSL strategy for transferring models to different healthcare systems or novel tasks is not well understood. In this work, we systematically experiment with a variety of supervised and self-supervised pretraining strategies using multimodal datasets of medical images (chest X-rays) and text (radiology reports). We then evaluate their performance on data from two external institutions with diverse sets of tasks. In addition, we experiment with different transfer learning strategies to effectively adapt these pretrained models to new tasks and healthcare systems. Our empirical results suggest that multimodal SSL gives substantial gains over unimodal SSL in performance across new healthcare systems and tasks, comparable to models pretrained with full supervision. We demonstrate additional performance gains with models further adapted to the new dataset and task, using multimodal domain-adaptive pretraining (DAPT), linear probing then finetuning (LP-FT), and both methods combined. We offer suggestions for alternative models to use in scenarios where not all of these additions are feasible. Our results provide guidance for improving the generalization of medical image interpretation models to new healthcare systems and novel tasks.

Improving debris flow evacuation alerts in Taiwan using machine learning

Aug 27, 2022

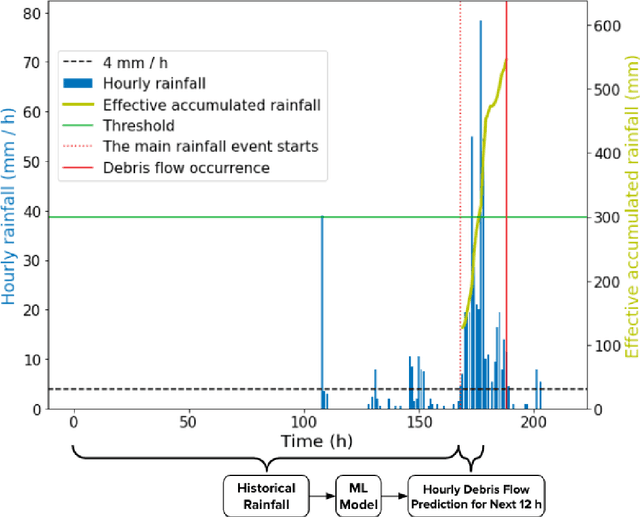

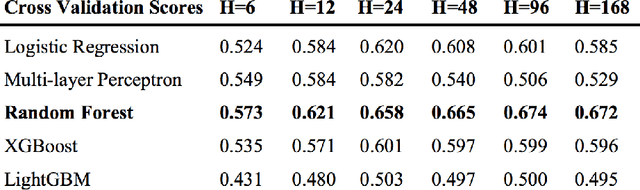

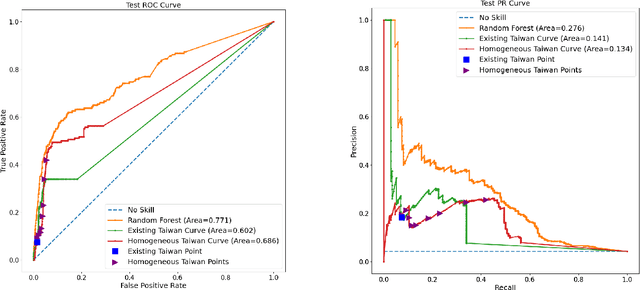

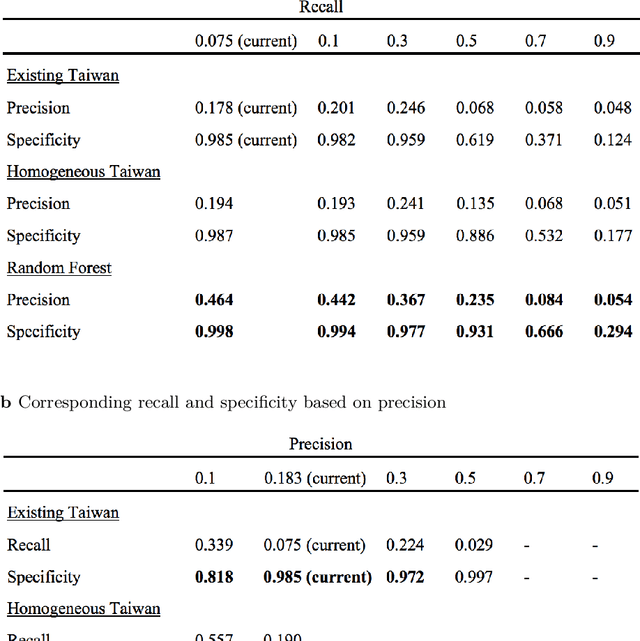

Abstract: Taiwan has the highest susceptibility to and fatalities from debris flows worldwide. The existing debris flow warning system in Taiwan, which uses a time-weighted measure of rainfall, leads to alerts when the measure exceeds a predefined threshold. However, this system generates many false alarms and misses a substantial fraction of the actual debris flows. Towards improving this system, we implemented five machine learning models that input historical rainfall data and predict whether a debris flow will occur within a selected time. We found that a random forest model performed the best among the five models and outperformed the existing system in Taiwan. Furthermore, we identified the rainfall trajectories strongly related to debris flow occurrences and explored trade-offs between the risks of missing debris flows versus frequent false alerts. These results suggest the potential for machine learning models trained on hourly rainfall data alone to save lives while reducing false alerts.

METER-ML: A Multi-sensor Earth Observation Benchmark for Automated Methane Source Mapping

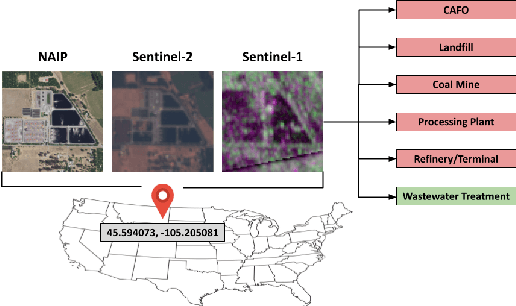

Jul 22, 2022

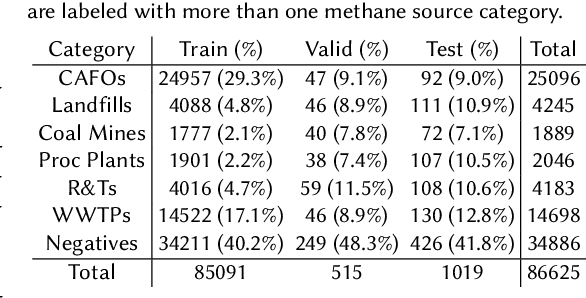

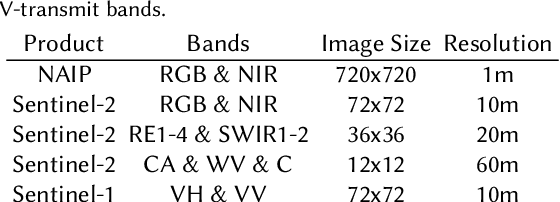

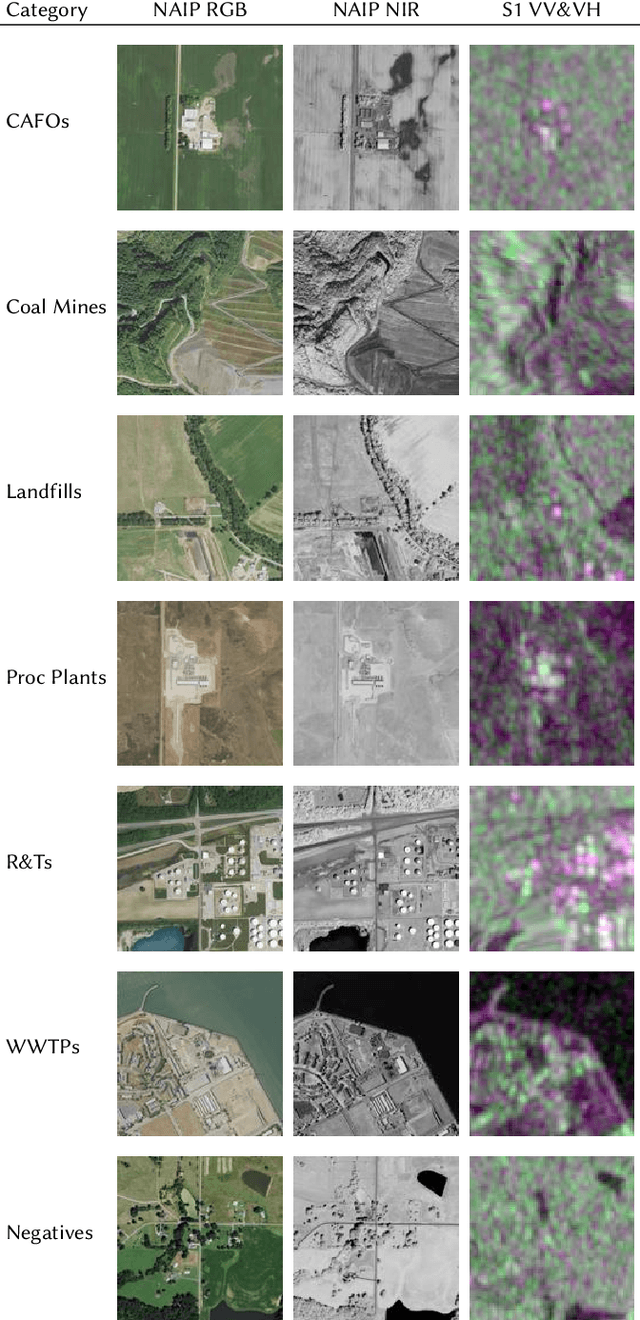

Abstract: Reducing methane emissions is essential for mitigating global warming. To attribute methane emissions to their sources, a comprehensive dataset of methane source infrastructure is necessary. Recent advancements with deep learning on remotely sensed imagery have the potential to identify the locations and characteristics of methane sources, but there is a substantial lack of publicly available data to enable machine learning researchers and practitioners to build automated mapping approaches. To help fill this gap, we construct a multi-sensor dataset called METER-ML containing 86,625 georeferenced NAIP, Sentinel-1, and Sentinel-2 images in the U.S. labeled for the presence or absence of methane source facilities including concentrated animal feeding operations, coal mines, landfills, natural gas processing plants, oil refineries and petroleum terminals, and wastewater treatment plants. We experiment with a variety of models that leverage different spatial resolutions, spatial footprints, image products, and spectral bands. We find that our best model achieves an area under the precision recall curve of 0.915 for identifying concentrated animal feeding operations and 0.821 for oil refineries and petroleum terminals on an expert-labeled test set, suggesting the potential for large-scale mapping. We make METER-ML freely available at https://stanfordmlgroup.github.io/projects/meter-ml/ to support future work on automated methane source mapping.

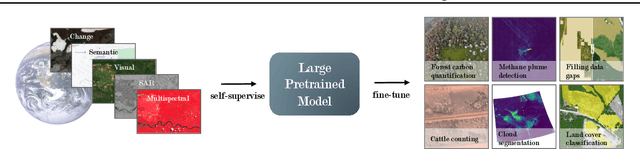

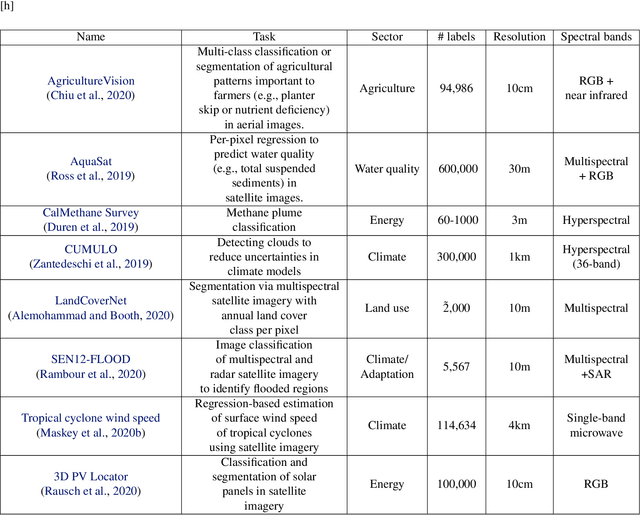

Toward Foundation Models for Earth Monitoring: Proposal for a Climate Change Benchmark

Dec 01, 2021

Abstract: Recent progress in self-supervision shows that pre-training large neural networks on vast amounts of unsupervised data can lead to impressive increases in generalisation for downstream tasks. Such models, recently coined as foundation models, have been transformational to the field of natural language processing. While similar models have also been trained on large corpuses of images, they are not well suited for remote sensing data. To stimulate the development of foundation models for Earth monitoring, we propose to develop a new benchmark comprised of a variety of downstream tasks related to climate change. We believe that this can lead to substantial improvements in many existing applications and facilitate the development of new applications. This proposal is also a call for collaboration with the aim of developing a better evaluation process to mitigate potential downsides of foundation models for Earth monitoring.

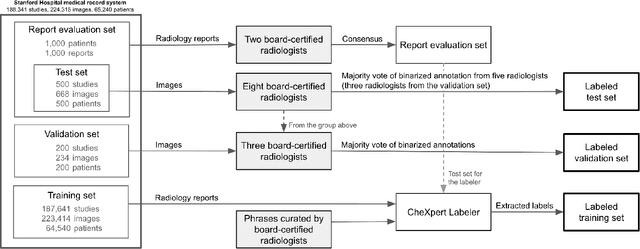

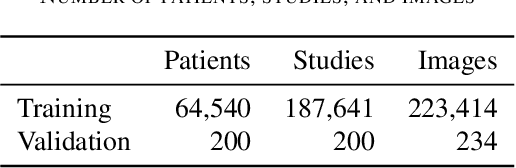

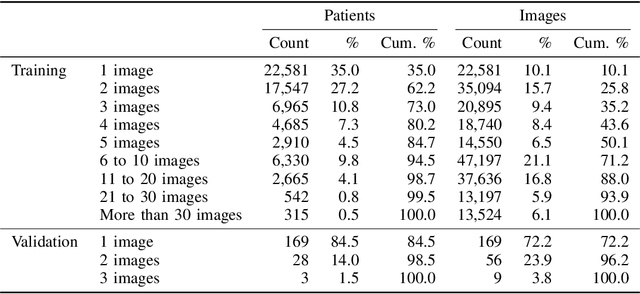

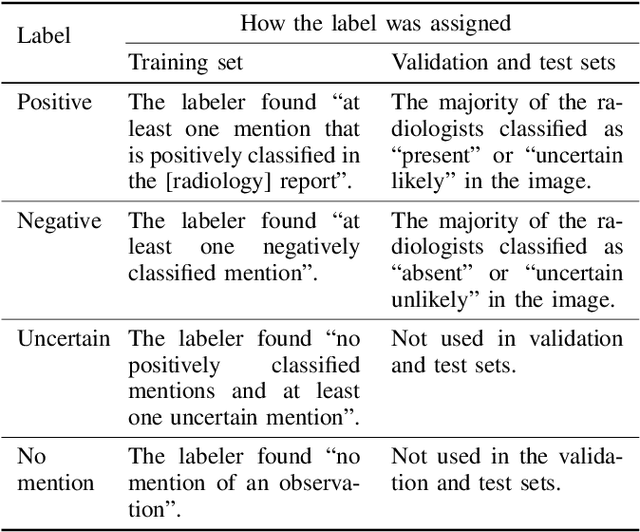

Structured dataset documentation: a datasheet for CheXpert

May 07, 2021

Abstract: Billions of X-ray images are taken worldwide each year. Machine learning, and deep learning in particular, has shown potential to help radiologists triage and diagnose images. However, deep learning requires large datasets with reliable labels. The CheXpert dataset was created with the participation of board-certified radiologists, resulting in the strong ground truth needed to train deep learning networks. Following the structured format of Datasheets for Datasets, this paper expands on the original CheXpert paper and other sources to show the critical role played by radiologists in the creation of reliable labels and to describe the different aspects of the dataset composition in detail. Such structured documentation intends to increase the awareness in the machine learning and medical communities of the strengths, applications, and evolution of CheXpert, thereby advancing the field of medical image analysis. Another objective of this paper is to put forward this dataset datasheet as an example to the community of how to create detailed and structured descriptions of datasets. We believe that clearly documenting the creation process, the contents, and applications of datasets accelerates the creation of useful and reliable models.
