Jan Issac

Autonomous Motion Department at the MPI for Intelligent Systems, Tübingen, Germany; Lula Robotics Inc., Seattle, WA, USA

RMPflow: A Geometric Framework for Generation of Multi-Task Motion Policies

Jul 25, 2020

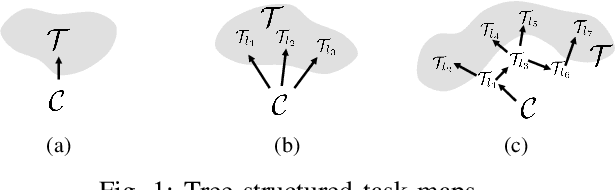

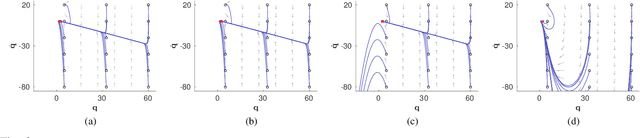

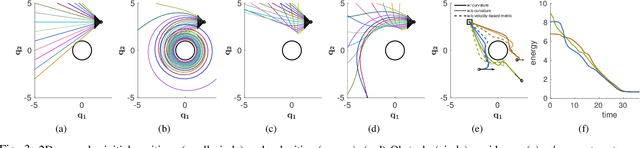

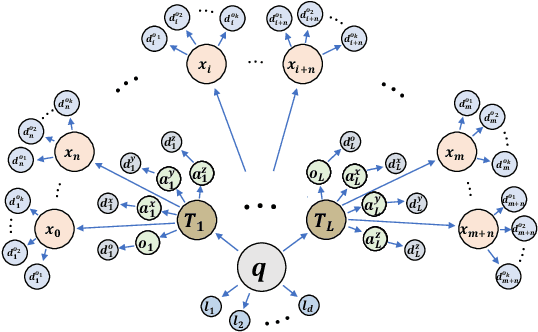

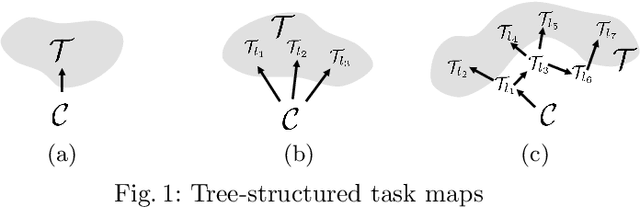

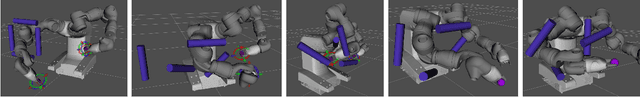

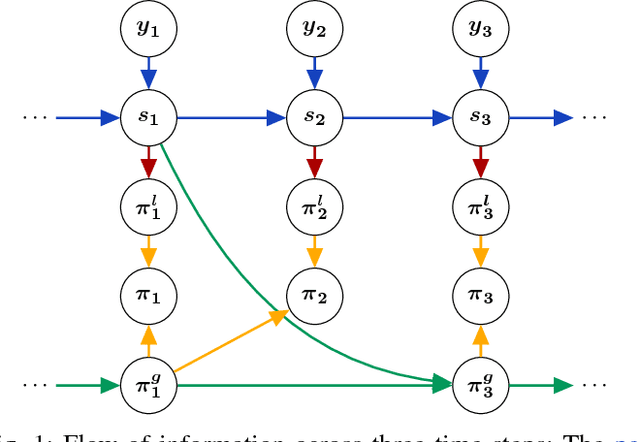

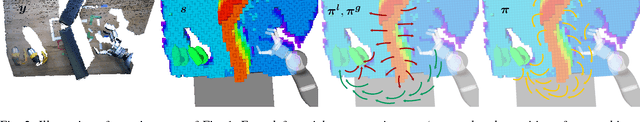

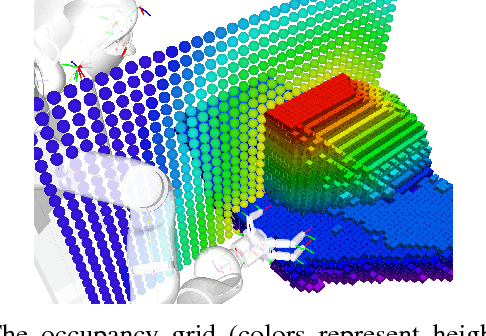

Abstract: Generating robot motion for multiple tasks in dynamic environments is challenging, requiring an algorithm to respond reactively while accounting for complex nonlinear relationships between tasks. In this paper, we develop a novel policy synthesis algorithm, RMPflow, based on geometrically consistent transformations of Riemannian Motion Policies (RMPs). RMPs are a class of reactive motion policies that parameterize non-Euclidean behaviors as dynamical systems in intrinsically nonlinear task spaces. Given a set of RMPs designed for individual tasks, RMPflow can combine these policies to generate an expressive global policy, while simultaneously exploiting sparse structure for computational efficiency. We study the geometric properties of RMPflow and provide sufficient conditions for stability. Finally, we experimentally demonstrate that accounting for the natural Riemannian geometry of task policies can simplify classically difficult problems, such as planning through clutter on high-DOF manipulation systems.

RMPflow: A Computational Graph for Automatic Motion Policy Generation

Apr 05, 2019

Abstract: We develop a novel policy synthesis algorithm, RMPflow, based on geometrically consistent transformations of Riemannian Motion Policies (RMPs). RMPs are a class of reactive motion policies designed to parameterize non-Euclidean behaviors as dynamical systems in intrinsically nonlinear task spaces. Given a set of RMPs designed for individual tasks, RMPflow can consistently combine these local policies to generate an expressive global policy, while simultaneously exploiting sparse structure for computational efficiency. We study the geometric properties of RMPflow and provide sufficient conditions for stability. Finally, we experimentally demonstrate that accounting for the geometry of task policies can simplify classically difficult problems, such as planning through clutter on high-DOF manipulation systems.

Closing the Sim-to-Real Loop: Adapting Simulation Randomization with Real World Experience

Mar 05, 2019

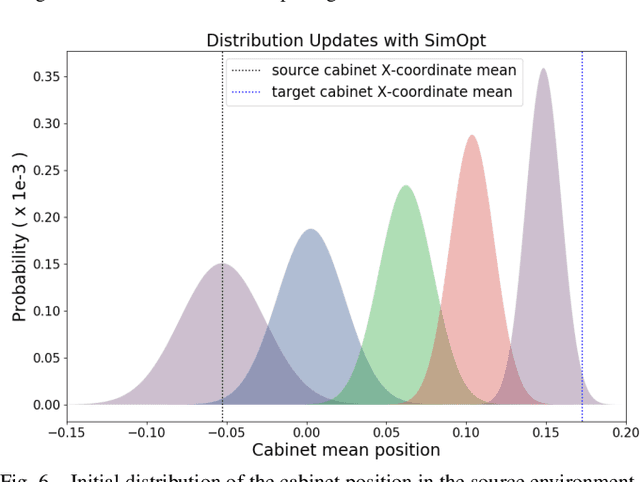

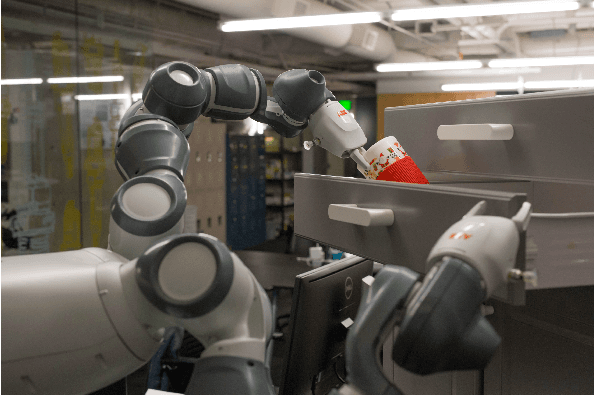

Abstract: We consider the problem of transferring policies to the real world by training on a distribution of simulated scenarios. Rather than manually tuning the randomization of simulations, we adapt the simulation parameter distribution using a few real world roll-outs interleaved with policy training. In doing so, we are able to change the distribution of simulations to improve the policy transfer by matching the policy behavior in simulation and the real world. We show that policies trained with our method are able to reliably transfer to different robots in two real world tasks: swing-peg-in-hole and opening a cabinet drawer. The video of our experiments can be found at https://sites.google.com/view/simopt

Riemannian Motion Policies

Jul 25, 2018

Abstract: We introduce the Riemannian Motion Policy (RMP), a new mathematical object for modular motion generation. An RMP is a second-order dynamical system (acceleration field or motion policy) coupled with a corresponding Riemannian metric. The motion policy maps positions and velocities to accelerations, while the metric captures the directions in the space important to the policy. We show that RMPs provide a straightforward and convenient method for combining multiple motion policies and transforming such policies from one space (such as the task space) to another (such as the configuration space) in geometrically consistent ways. The operators we derive for these combinations and transformations are provably optimal, have linearity properties making them agnostic to the order of application, and are strongly analogous to the covariant transformations of natural gradients popular in the machine learning literature. The RMP framework enables the fusion of motion policies from different motion generation paradigms, such as dynamical systems, dynamic movement primitives (DMPs), optimal control, operational space control, nonlinear reactive controllers, motion optimization, and model predictive control (MPC), thus unifying these disparate techniques from the literature. RMPs are easy to implement and manipulate, facilitate controller design, simplify handling of joint limits, and clarify a number of open questions regarding the proper fusion of motion generation methods (such as incorporating local reactive policies into long-horizon optimizers). We demonstrate the effectiveness of RMPs on both simulation and real robots, including their ability to naturally and efficiently solve complicated collision avoidance problems previously handled by more complex planners.
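The combination and transformation operators mentioned in this abstract can be illustrated with a small NumPy sketch. It assumes the commonly cited forms: a metric-weighted average for combining RMPs on the same space, and a Jacobian pullback for moving an RMP from a task space to a configuration space. The function names `pullback` and `combine`, the two toy policies, and the Jacobian `J` are hypothetical and chosen only for illustration. Curvature terms arising from the time derivative of the Jacobian are ignored, so this is a simplified sketch rather than the authors' implementation.

```python
import numpy as np


def pullback(f, A, J):
    """Pull an RMP (f, A) back through a map whose Jacobian is J.

    Assumption: curvature terms (time derivative of J) are neglected.
    """
    M = J.T @ A @ J                          # metric in the domain space
    a = np.linalg.pinv(M) @ (J.T @ A @ f)    # acceleration in the domain space
    return a, M


def combine(rmps):
    """Combine RMPs on the same space as a metric-weighted average."""
    M = sum(A for _, A in rmps)
    a = np.linalg.pinv(M) @ sum(A @ f for f, A in rmps)
    return a, M


# Two hypothetical 2-D task policies: one only cares about x, the other about y.
rmp_x = (np.array([1.0, 0.0]), np.diag([1.0, 0.0]))
rmp_y = (np.array([0.0, -2.0]), np.diag([0.0, 1.0]))

# Each policy decides the direction its metric weights.
a_task, M_task = combine([rmp_x, rmp_y])

# Hypothetical Jacobian of a map from a 3-D configuration space to the 2-D task space.
J = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0]])

# Order of application: combine then pull back, or pull back then combine.
a1, M1 = pullback(a_task, M_task, J)
a2, M2 = combine([pullback(f, A, J) for f, A in (rmp_x, rmp_y)])
print(np.allclose(a1, a2), np.allclose(M1, M2))
```

In this toy case both orders give the same configuration-space policy, which is the kind of order-independence the abstract attributes to the linearity of the operators.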

Real-time Perception meets Reactive Motion Generation

Oct 06, 2017

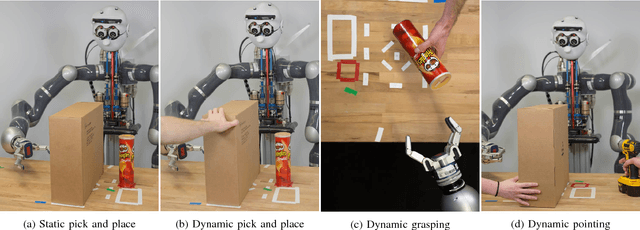

Abstract: We address the challenging problem of robotic grasping and manipulation in the presence of uncertainty. This uncertainty is due to noisy sensing, inaccurate models and hard-to-predict environment dynamics. We quantify the importance of continuous, real-time perception and its tight integration with reactive motion generation methods in dynamic manipulation scenarios. We compare three different systems that are instantiations of the most common architectures in the field: (i) a traditional sense-plan-act approach that is still widely used, (ii) a myopic controller that only reacts to local environment dynamics and (iii) a reactive planner that integrates feedback control and motion optimization. All architectures rely on the same components for real-time perception and reactive motion generation to allow a quantitative evaluation. We extensively evaluate the systems on a real robotic platform in four scenarios that exhibit either a challenging workspace geometry or a dynamic environment. In 333 experiments, we quantify the robustness and accuracy that is due to integrating real-time feedback at different time scales in a reactive motion generation system. We also report on the lessons learned for system building.

Probabilistic Articulated Real-Time Tracking for Robot Manipulation

Nov 25, 2016

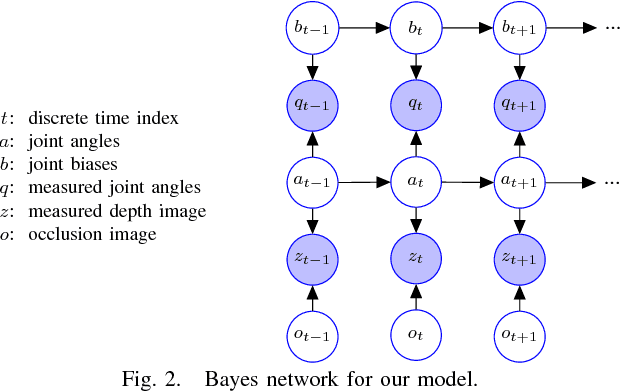

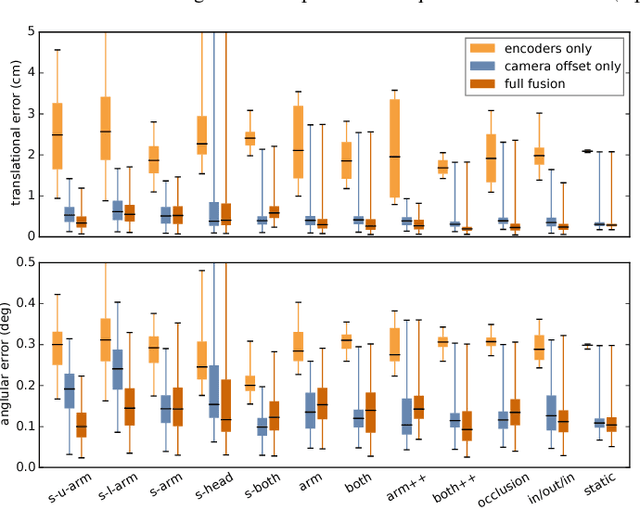

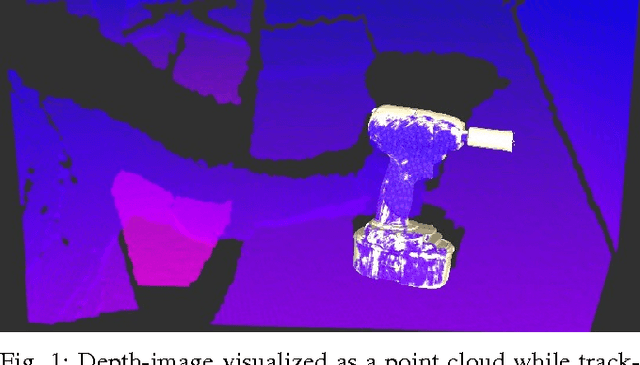

Abstract: We propose a probabilistic filtering method which fuses joint measurements with depth images to yield a precise, real-time estimate of the end-effector pose in the camera frame. This avoids the need for frame transformations when using it in combination with visual object tracking methods. Precision is achieved by modeling and correcting biases in the joint measurements as well as inaccuracies in the robot model, such as poor extrinsic camera calibration. We make our method computationally efficient through a principled combination of Kalman filtering of the joint measurements and asynchronous depth-image updates based on the Coordinate Particle Filter. We quantitatively evaluate our approach on a dataset recorded from a real robotic platform, annotated with ground truth from a motion capture system. We show that our approach is robust and accurate even under challenging conditions such as fast motion, significant and long-term occlusions, and time-varying biases. We release the dataset along with open-source code of our approach to allow for quantitative comparison with alternative approaches.

Robust Gaussian Filtering using a Pseudo Measurement

May 30, 2016

Abstract: Many sensors, such as range, sonar, radar, GPS and visual devices, produce measurements which are contaminated by outliers. This problem can be addressed by using fat-tailed sensor models, which account for the possibility of outliers. Unfortunately, all estimation algorithms belonging to the family of Gaussian filters (such as the widely-used extended Kalman filter and unscented Kalman filter) are inherently incompatible with such fat-tailed sensor models. The contribution of this paper is to show that any Gaussian filter can be made compatible with fat-tailed sensor models by applying one simple change: Instead of filtering with the physical measurement, we propose to filter with a pseudo measurement obtained by applying a feature function to the physical measurement. We derive such a feature function which is optimal under some conditions. Simulation results show that the proposed method can effectively handle measurement outliers and allows for robust filtering in both linear and nonlinear systems.
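To make the motivation concrete, the following sketch shows why a plain Gaussian update is sensitive to outliers. It uses a textbook scalar Kalman measurement update and illustrates the problem only; it does not implement the paper's pseudo measurement or its feature function. The function name `kalman_update` and all numbers are hypothetical.

```python
def kalman_update(mean, var, y, meas_var):
    """Scalar Kalman measurement update for the model y = x + Gaussian noise."""
    gain = var / (var + meas_var)
    new_mean = mean + gain * (y - mean)   # correction is linear in the residual
    new_var = (1.0 - gain) * var
    return new_mean, new_var


prior_mean, prior_var, meas_var = 0.0, 1.0, 1.0

# A typical measurement near the true state moves the estimate slightly.
m_ok, v_ok = kalman_update(prior_mean, prior_var, 0.1, meas_var)

# A single outlier drags the estimate arbitrarily far, because the update
# is linear in the measurement no matter how implausible it is.
m_out, v_out = kalman_update(prior_mean, prior_var, 100.0, meas_var)

print(m_ok, m_out)  # 0.05 50.0
```

Because the posterior mean is linear in the measurement, a Gaussian filter has no mechanism to discount an implausible reading, which is the incompatibility with fat-tailed sensor models that the abstract describes.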

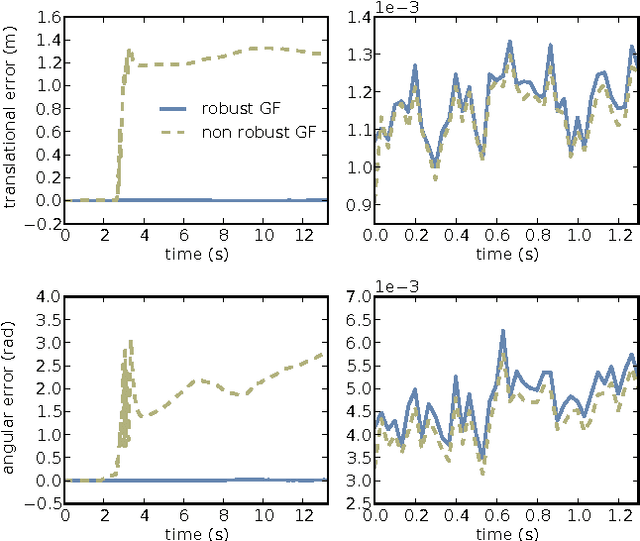

Depth-Based Object Tracking Using a Robust Gaussian Filter

Feb 19, 2016

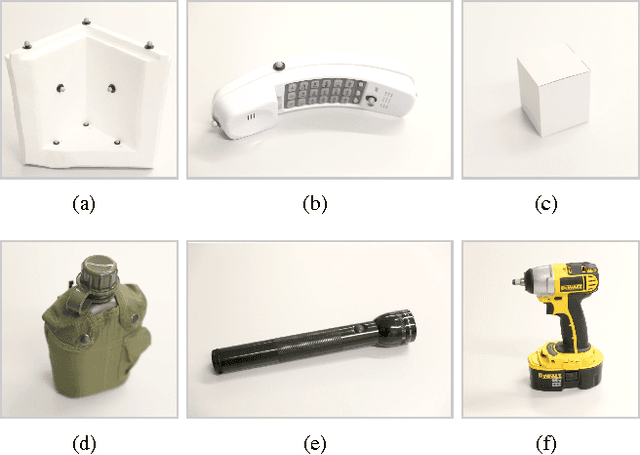

Abstract: We consider the problem of model-based 3D-tracking of objects given dense depth images as input. Two difficulties preclude the application of a standard Gaussian filter to this problem. First of all, depth sensors are characterized by fat-tailed measurement noise. To address this issue, we show how a recently published robustification method for Gaussian filters can be applied to the problem at hand. Thereby, we avoid using heuristic outlier detection methods that simply reject measurements if they do not match the model. Secondly, the computational cost of the standard Gaussian filter is prohibitive due to the high-dimensional measurement, i.e. the depth image. To address this problem, we propose an approximation to reduce the computational complexity of the filter. In quantitative experiments on real data we show how our method clearly outperforms the standard Gaussian filter. Furthermore, we compare its performance to a particle-filter-based tracking method, and observe comparable computational efficiency and improved accuracy and smoothness of the estimates.
