Hongjiang Wei

Improving 2D Diffusion Models for 3D Medical Imaging with Inter-Slice Consistent Stochasticity

Feb 04, 2026

Abstract:3D medical imaging is in high demand and essential for clinical diagnosis and scientific research. Currently, diffusion models (DMs) have become an effective tool for medical imaging reconstruction thanks to their ability to learn rich, high-quality data priors. However, learning the 3D data distribution with DMs in medical imaging is challenging, not only due to the difficulties in data collection but also because of the significant computational burden during model training. A common compromise is to train the DMs on 2D data priors and reconstruct stacked 2D slices to address 3D medical inverse problems. However, the intrinsic randomness of diffusion sampling causes severe inter-slice discontinuities in the reconstructed 3D volumes. Existing methods often enforce continuity regularizations along the z-axis, which introduces sensitive hyper-parameters and may lead to over-smoothed results. In this work, we revisit the origin of stochasticity in diffusion sampling and introduce Inter-Slice Consistent Stochasticity (ISCS), a simple yet effective strategy that encourages inter-slice consistency during diffusion sampling. Our key idea is to control the consistency of stochastic noise components during diffusion sampling, thereby aligning their sampling trajectories without adding any new loss terms or optimization steps. Importantly, the proposed ISCS is plug-and-play and can be dropped into any 2D-trained, diffusion-based 3D reconstruction pipeline without additional computational cost. Experiments on several medical imaging problems show that our method can effectively improve the performance of 3D medical imaging reconstruction based on 2D diffusion models. Our findings suggest that controlling inter-slice stochasticity is a principled and practically attractive route toward high-fidelity 3D medical imaging with 2D diffusion priors. The code is available at: https://github.com/duchenhe/ISCS
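
The idea of controlling the stochastic noise across slices can be illustrated with a toy example. The sketch below is not the ISCS algorithm from the paper: it assumes the simplest possible form of noise control (reusing one noise map for every slice in a single update of the form `x = mean + sigma * z`), and the function names `stochastic_step` and `inter_slice_gap` are hypothetical. It only shows why independent per-slice noise creates discontinuities along z while consistent noise does not.

```python
import numpy as np

def stochastic_step(mean, sigma, rng, share_noise):
    """Toy stochastic update x = mean + sigma * z for a stack of 2D slices.

    mean has shape (num_slices, height, width). With share_noise=True a single
    noise map is broadcast to every slice (an assumed, simplified form of
    noise control); otherwise each slice draws its own independent noise.
    """
    num_slices, height, width = mean.shape
    if share_noise:
        z = rng.standard_normal((1, height, width))
    else:
        z = rng.standard_normal((num_slices, height, width))
    return mean + sigma * z

def inter_slice_gap(volume):
    """Mean absolute difference between adjacent slices along the z-axis."""
    return float(np.mean(np.abs(np.diff(volume, axis=0))))

rng = np.random.default_rng(0)

# Toy volume whose slices change slowly and linearly along z.
z_profile = np.linspace(0.0, 1.0, 8)[:, None, None]
mean = z_profile * np.ones((8, 16, 16))

independent = stochastic_step(mean, sigma=0.5, rng=rng, share_noise=False)
shared = stochastic_step(mean, sigma=0.5, rng=rng, share_noise=True)

gap_independent = inter_slice_gap(independent)
gap_shared = inter_slice_gap(shared)
print("independent noise gap:", gap_independent)
print("shared noise gap:     ", gap_shared)
```

With shared noise the adjacent-slice difference reduces to the difference of the underlying means, so no extra regularization term or hyper-parameter is needed to keep the stack continuous in this toy setting.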

Unsupervised Motion-Compensated Decomposition for Cardiac MRI Reconstruction via Neural Representation

Nov 17, 2025

Abstract:Cardiac magnetic resonance (CMR) imaging is widely used to characterize cardiac morphology and function. To accelerate CMR imaging, various methods have been proposed to recover high-quality spatiotemporal CMR images from highly undersampled k-t space data. However, current CMR reconstruction techniques either fail to achieve satisfactory image quality or are restricted by the scarcity of ground truth data, leading to limited applicability in clinical scenarios. In this work, we propose MoCo-INR, a new unsupervised method that integrates implicit neural representations (INR) with the conventional motion-compensated (MoCo) framework. Using explicit motion modeling and the continuous prior of INRs, MoCo-INR can produce accurate cardiac motion decomposition and high-quality CMR reconstruction. Furthermore, we introduce a new INR network architecture tailored to the CMR problem, which significantly stabilizes model optimization. Experiments on retrospective (simulated) datasets demonstrate the superiority of MoCo-INR over state-of-the-art methods, achieving fast convergence and fine-detailed reconstructions at ultra-high acceleration factors (e.g., 20x in VISTA sampling). Additionally, evaluations on prospective (real-acquired) free-breathing CMR scans highlight the clinical practicality of MoCo-INR for real-time imaging. Several ablation studies further confirm the effectiveness of the critical components of MoCo-INR.

Low-Rank Augmented Implicit Neural Representation for Unsupervised High-Dimensional Quantitative MRI Reconstruction

Jun 10, 2025

Abstract:Quantitative magnetic resonance imaging (qMRI) provides tissue-specific parameters vital for clinical diagnosis. Although simultaneous multi-parametric qMRI (MP-qMRI) technologies enhance imaging efficiency, robustly reconstructing qMRI from highly undersampled, high-dimensional measurements remains a significant challenge. This difficulty arises primarily because current reconstruction methods rely solely on a single prior or physics-informed model to solve the highly ill-posed inverse problem, which often leads to suboptimal results. To overcome this limitation, we propose LoREIN, a novel unsupervised and dual-prior-integrated framework for accelerated 3D MP-qMRI reconstruction. Technically, LoREIN incorporates both low-rank prior and continuity prior via low-rank representation (LRR) and implicit neural representation (INR), respectively, to enhance reconstruction fidelity. The powerful continuous representation of INR enables the estimation of optimal spatial bases within the low-rank subspace, facilitating high-fidelity reconstruction of weighted images. Simultaneously, the predicted multi-contrast weighted images provide essential structural and quantitative guidance, further enhancing the reconstruction accuracy of quantitative parameter maps. Furthermore, our work introduces a zero-shot learning paradigm with broad potential in complex spatiotemporal and high-dimensional image reconstruction tasks, further advancing the field of medical imaging.
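
The low-rank prior mentioned above can be made concrete with a small numerical sketch. This is not LoREIN itself: the abstract says the spatial bases are estimated with an INR, whereas the example below uses a plain truncated SVD, and every quantity in it (voxel count, echo times, decay constants) is a made-up assumption. It only illustrates that a stack of weighted images built from a few signal-evolution curves forms a voxel-by-contrast matrix of low rank, which factorizes into spatial and temporal bases.

```python
import numpy as np

rng = np.random.default_rng(0)
num_voxels, num_contrasts, rank = 200, 12, 2

# Hypothetical spatial coefficient maps, one column per basis.
spatial = rng.standard_normal((num_voxels, rank))

# Hypothetical temporal bases: two exponential decays sampled at 12 echo times.
echo_times = np.linspace(0.01, 0.12, num_contrasts)
temporal = np.stack([np.exp(-echo_times / 0.03), np.exp(-echo_times / 0.08)])

# Voxel-by-contrast (Casorati) matrix of the weighted image series.
casorati = spatial @ temporal

# A rank-2 truncated SVD recovers the series up to numerical precision.
u, s, vt = np.linalg.svd(casorati, full_matrices=False)
approx = (u[:, :rank] * s[:rank]) @ vt[:rank]
rel_error = np.linalg.norm(casorati - approx) / np.linalg.norm(casorati)

print("singular values:", s)
print("relative error of rank-2 approximation:", rel_error)
```

Only `rank` spatial maps need to be estimated instead of one image per contrast, which is what makes the subspace attractive for highly undersampled data.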

SUFFICIENT: A scan-specific unsupervised deep learning framework for high-resolution 3D isotropic fetal brain MRI reconstruction

May 26, 2025

Abstract:High-quality 3D fetal brain MRI reconstruction from motion-corrupted 2D slices is crucial for clinical diagnosis. Reliable slice-to-volume registration (SVR)-based motion correction and super-resolution reconstruction (SRR) methods are essential. Deep learning (DL) has demonstrated potential in enhancing SVR and SRR when compared to conventional methods. However, it requires large-scale external training datasets, which are difficult to obtain for clinical fetal MRI. To address this issue, we propose an unsupervised iterative SVR-SRR framework for isotropic HR volume reconstruction. Specifically, SVR is formulated as a function mapping a 2D slice and a 3D target volume to a rigid transformation matrix, which aligns the slice to the underlying location in the target volume. The function is parameterized by a convolutional neural network, which is trained by minimizing the difference between the volume slicing at the predicted position and the input slice. In SRR, a decoding network embedded within a deep image prior framework is incorporated with a comprehensive image degradation model to produce the high-resolution (HR) volume. The deep image prior framework offers a local consistency prior to guide the reconstruction of HR volumes. By applying a forward degradation model, the HR volume is optimized by minimizing the loss between the predicted slices and the observed slices. Comprehensive experiments conducted on large-magnitude motion-corrupted simulation data and clinical data demonstrate the superior performance of the proposed framework over state-of-the-art fetal brain reconstruction frameworks.

JSover: Joint Spectrum Estimation and Multi-Material Decomposition from Single-Energy CT Projections

May 12, 2025

Abstract:Multi-material decomposition (MMD) enables quantitative reconstruction of tissue compositions in the human body, supporting a wide range of clinical applications. However, traditional MMD typically requires spectral CT scanners and pre-measured X-ray energy spectra, significantly limiting clinical applicability. To this end, various methods have been developed to perform MMD using conventional (i.e., single-energy, SE) CT systems, commonly referred to as SEMMD. Despite promising progress, most SEMMD methods follow a two-step image decomposition pipeline, which first reconstructs monochromatic CT images using algorithms such as FBP, and then performs decomposition on these images. The initial reconstruction step, however, neglects the energy-dependent attenuation of human tissues, introducing severe nonlinear beam hardening artifacts and noise into the subsequent decomposition. This paper proposes JSover, a fundamentally reformulated one-step SEMMD framework that jointly reconstructs multi-material compositions and estimates the energy spectrum directly from SECT projections. By explicitly incorporating physics-informed spectral priors into the SEMMD process, JSover accurately simulates a virtual spectral CT system from SE acquisitions, thereby improving the reliability and accuracy of decomposition. Furthermore, we introduce implicit neural representation (INR) as an unsupervised deep learning solver for representing the underlying material maps. The inductive bias of INR toward continuous image patterns constrains the solution space and further enhances estimation quality. Extensive experiments on both simulated and real CT datasets show that JSover outperforms state-of-the-art SEMMD methods in accuracy and computational efficiency.
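
The energy-dependent attenuation that a monochromatic reconstruction neglects can be sketched with the standard polychromatic Beer-Lambert model. The example below is not the JSover forward model: the four energy bins, the spectrum weights, and the attenuation coefficients are rough illustrative assumptions, and `polychromatic_projection` is a hypothetical helper. It shows that the log-measurement is not proportional to path length, which is the source of beam hardening.

```python
import numpy as np

# Hypothetical four-bin spectrum (weights sum to 1) and illustrative
# linear attenuation coefficients in 1/cm; not measured values.
energies_kev = np.array([40.0, 60.0, 80.0, 100.0])
spectrum = np.array([0.2, 0.4, 0.3, 0.1])
mu_water = np.array([0.27, 0.21, 0.18, 0.17])
mu_bone = np.array([1.28, 0.60, 0.43, 0.36])
mus = np.stack([mu_water, mu_bone])  # shape (materials, energies)

def polychromatic_projection(lengths_cm, mus, spectrum):
    """Return -log(I / I0) for given per-material path lengths.

    I / I0 = sum_E spectrum[E] * exp(-sum_m mus[m, E] * lengths_cm[m])
    """
    line_integrals = lengths_cm @ mus  # one value per energy bin
    transmitted = np.sum(spectrum * np.exp(-line_integrals))
    return -np.log(transmitted)

# Effective attenuation of water (projection divided by thickness).
thin = polychromatic_projection(np.array([1.0, 0.0]), mus, spectrum) / 1.0
thick = polychromatic_projection(np.array([20.0, 0.0]), mus, spectrum) / 20.0

print("effective mu, 1 cm water: ", thin)
print("effective mu, 20 cm water:", thick)
```

The effective attenuation drops as the path gets longer because low-energy photons are absorbed first. A one-step approach that models the spectrum explicitly can account for this effect, whereas a two-step pipeline leaves it in the reconstructed images.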

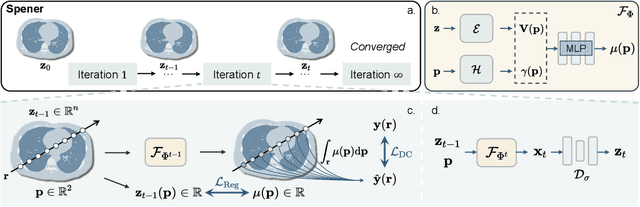

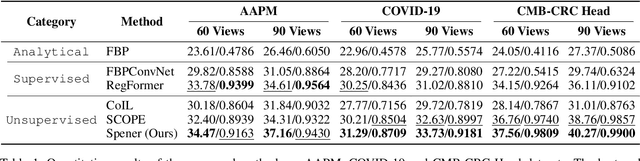

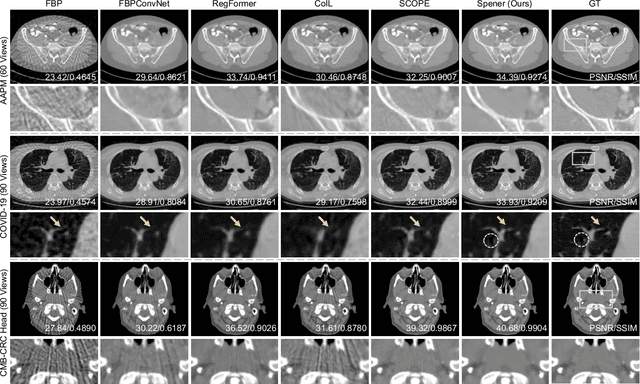

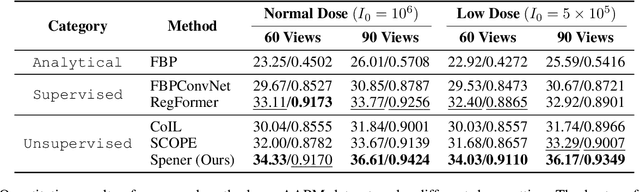

Unsupervised Self-Prior Embedding Neural Representation for Iterative Sparse-View CT Reconstruction

Feb 08, 2025

Abstract:Emerging unsupervised implicit neural representation (INR) methods, such as NeRP, NeAT, and SCOPE, have shown great potential to address sparse-view computed tomography (SVCT) inverse problems. Although these INR-based methods perform well in relatively dense SVCT reconstructions, they struggle to achieve comparable performance to supervised methods in sparser SVCT scenarios. They are prone to being affected by noise, limiting their applicability in real clinical settings. Additionally, current methods have not fully explored the use of image domain priors for solving SVCT inverse problems. In this work, we demonstrate that imperfect reconstruction results can provide effective image domain priors for INRs to enhance performance. To leverage this, we introduce Self-prior embedding neural representation (Spener), a novel unsupervised method for SVCT reconstruction that integrates iterative reconstruction algorithms. During each iteration, Spener extracts local image prior features from the previous iteration and embeds them to constrain the solution space. Experimental results on multiple CT datasets show that our unsupervised Spener method achieves performance comparable to supervised state-of-the-art (SOTA) methods on in-domain data while outperforming them on out-of-domain datasets. Moreover, Spener significantly improves the performance of INR-based methods in handling SVCT with noisy sinograms. Our code is available at https://github.com/MeijiTian/Spener.

Unsupervised Multi-Parameter Inverse Solving for Reducing Ring Artifacts in 3D X-Ray CBCT

Dec 08, 2024

Abstract:Ring artifacts are prevalent in 3D cone-beam computed tomography (CBCT) due to non-ideal responses of X-ray detectors, severely degrading imaging quality and reliability. Current state-of-the-art (SOTA) ring artifact reduction (RAR) algorithms rely on extensive paired CT samples for supervised learning. While effective, these methods do not fully capture the physical characteristics of ring artifacts, leading to pronounced performance drops when applied to out-of-domain data. Moreover, their applications to 3D CBCT are limited by high memory demands. In this work, we introduce Riner, an unsupervised method formulating 3D CBCT RAR as a multi-parameter inverse problem. Our core innovation is parameterizing the X-ray detector responses as solvable variables within a differential physical model. By jointly optimizing a neural field to represent artifact-free CT images and estimating response parameters directly from raw measurements, Riner eliminates the need for external training data. Moreover, it accommodates diverse CT geometries, enhancing practical usability. Empirical results on both simulated and real-world datasets show that Riner surpasses existing SOTA RAR methods in performance.

GESH-Net: Graph-Enhanced Spherical Harmonic Convolutional Networks for Cortical Surface Registration

Oct 18, 2024

Abstract:Currently, cortical surface registration techniques based on classical methods have been well developed. However, a key issue with classical methods is that for each pair of images to be registered, it is necessary to search for the optimal transformation in the deformation space according to a specific optimization algorithm until the similarity measure function converges, which cannot meet the real-time and high-precision requirements of medical image registration. Researching cortical surface registration based on deep learning models has become a new direction. But so far, there are still only a few studies on cortical surface image registration based on deep learning. Moreover, although deep learning methods theoretically have stronger representation capabilities, surpassing the most advanced classical methods in registration accuracy and distortion control remains a challenge. Therefore, to address this challenge, this paper constructs a deep learning model to study the technology of cortical surface image registration. The specific work is as follows: (1) An unsupervised cortical surface registration network based on a multi-scale cascaded structure is designed, and a convolution method based on spherical harmonic transformation is introduced to register cortical surface data. This solves the problem of scale-inflexibility of spherical feature transformation and optimizes the multi-scale registration process. (2) By integrating the attention mechanism, a graph-enhanced module is introduced into the registration network, using the graph attention module to help the network learn global features of cortical surface data, enhancing the learning ability of the network. The results show that the graph attention module effectively enhances the network's ability to extract global features, and its registration results have significant advantages over other methods.

Coordinate-Based Neural Representation Enabling Zero-Shot Learning for 3D Multiparametric Quantitative MRI

Oct 02, 2024

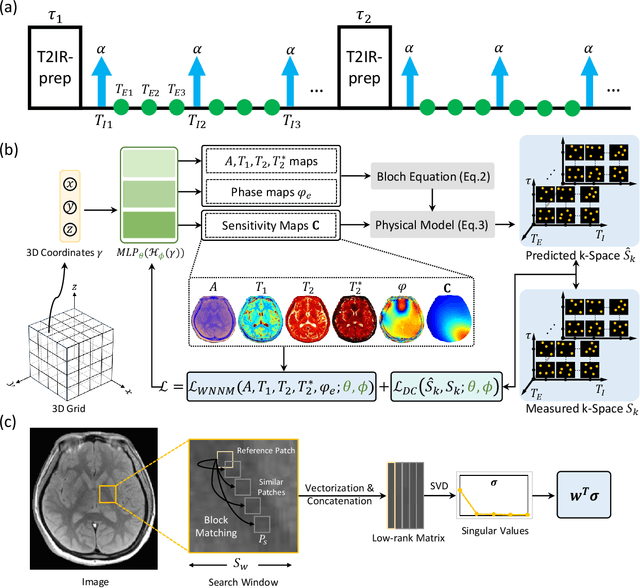

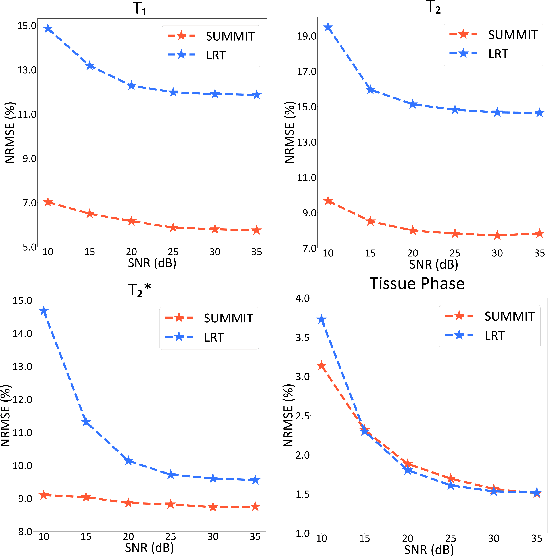

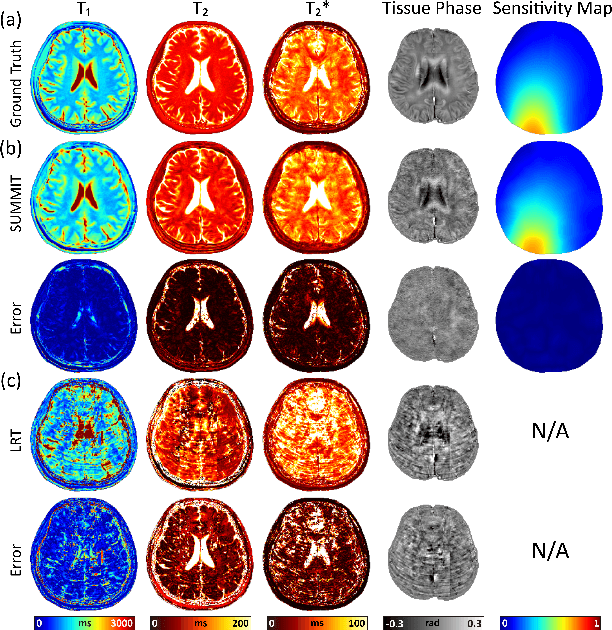

Abstract:Quantitative magnetic resonance imaging (qMRI) offers tissue-specific physical parameters with significant potential for neuroscience research and clinical practice. However, lengthy scan times for 3D multiparametric qMRI acquisition limit its clinical utility. Here, we propose SUMMIT, an innovative imaging methodology that includes data acquisition and an unsupervised reconstruction for simultaneous multiparametric qMRI. SUMMIT first encodes multiple important quantitative properties into highly undersampled k-space. It further leverages implicit neural representation incorporated with a dedicated physics model to reconstruct the desired multiparametric maps without needing external training datasets. SUMMIT delivers co-registered T1, T2, T2*, and quantitative susceptibility mapping. Extensive simulations and phantom imaging demonstrate SUMMIT's high accuracy. Additionally, the proposed unsupervised approach for qMRI reconstruction also introduces a novel zero-shot learning paradigm for multiparametric imaging applicable to various medical imaging modalities.

Moner: Motion Correction in Undersampled Radial MRI with Unsupervised Neural Representation

Sep 25, 2024

Abstract:Motion correction (MoCo) in radial MRI is a challenging problem due to the unpredictability of the subject's motion. Current state-of-the-art (SOTA) MoCo algorithms often use extensive high-quality MR images to pre-train neural networks, obtaining excellent reconstructions. However, the need for large-scale datasets significantly increases costs and limits model generalization. In this work, we propose Moner, an unsupervised MoCo method that jointly solves for artifact-free MR images and accurate motion from undersampled, rigid motion-corrupted k-space data, without requiring training data. Our core idea is to leverage the continuous prior of implicit neural representation (INR) to constrain this ill-posed inverse problem, enabling ideal solutions. Specifically, we incorporate a quasi-static motion model into the INR, granting it the ability to correct the subject's motion. To stabilize model optimization, we reformulate radial MRI as a back-projection problem using the Fourier-slice theorem. Additionally, we propose a novel coarse-to-fine hash encoding strategy, significantly enhancing MoCo accuracy. Experiments on multiple MRI datasets show that Moner achieves performance comparable to SOTA MoCo techniques on in-domain data, while demonstrating significant improvements on out-of-domain data.
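
The Fourier-slice theorem used in this reformulation can be checked numerically for the simplest case. The sketch below is not Moner's pipeline: it uses a random test image and only the axis-aligned projection angle, where the discrete identity holds exactly (other angles would need interpolation or rotation). It verifies that the 1D Fourier transform of a projection equals the central line of the image's 2D Fourier transform, which is why a radial k-space spoke carries the same information as a projection of the image.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32))  # arbitrary test image indexed as [y, x]

# Projection along y (angle 0): sum every column into a 1D profile over x.
projection = image.sum(axis=0)

# 1D spectrum of the projection versus the ky = 0 line of the 2D spectrum.
projection_spectrum = np.fft.fft(projection)
central_line = np.fft.fft2(image)[0, :]

max_abs_diff = float(np.max(np.abs(projection_spectrum - central_line)))
print("max abs difference:", max_abs_diff)
```

Because the two spectra agree, an inverse 1D FFT of each radial spoke yields a projection, and the reconstruction can be posed in the projection domain rather than directly in k-space.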
