Hongfu Sun

Latent Refinement via Flow Matching for Training-free Linear Inverse Problem Solving

Nov 08, 2025

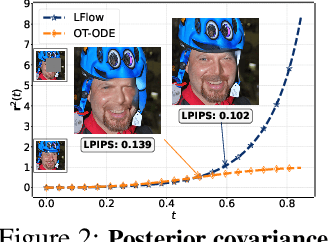

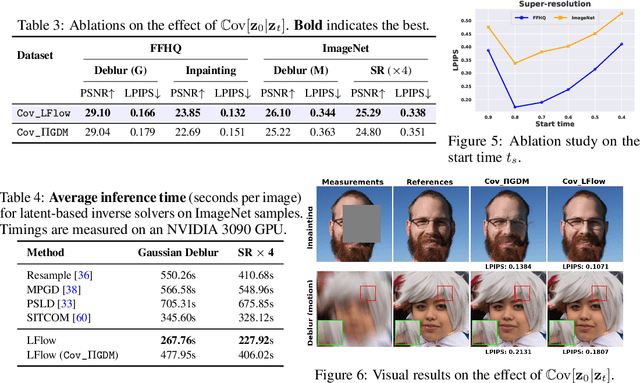

Abstract: Recent advances in inverse problem solving have increasingly adopted flow priors over diffusion models due to their ability to construct straight probability paths from noise to data, thereby enhancing efficiency in both training and inference. However, current flow-based inverse solvers face two primary limitations: (i) they operate directly in pixel space, which demands heavy computational resources for training and restricts scalability to high-resolution images, and (ii) they employ guidance strategies with prior-agnostic posterior covariances, which can weaken alignment with the generative trajectory and degrade posterior coverage. In this paper, we propose LFlow (Latent Refinement via Flows), a training-free framework for solving linear inverse problems via pretrained latent flow priors. LFlow leverages the efficiency of flow matching to perform ODE sampling in latent space along an optimal path. This latent formulation further allows us to introduce a theoretically grounded posterior covariance, derived from the optimal vector field, enabling effective flow guidance. Experimental results demonstrate that our proposed method outperforms state-of-the-art latent diffusion solvers in reconstruction quality across most tasks. The code will be publicly available at https://github.com/hosseinaskari-cs/LFlow .

SAMRI: Segment Anything Model for MRI

Oct 30, 2025

Abstract: Accurate magnetic resonance imaging (MRI) segmentation is crucial for clinical decision-making, but remains labor-intensive when performed manually. Convolutional neural network (CNN)-based methods can be accurate and efficient, but often generalize poorly to MRI's variable contrast, intensity inhomogeneity, and protocols. Although the transformer-based Segment Anything Model (SAM) has demonstrated remarkable generalizability in natural images, existing adaptations often treat MRI as another imaging modality, overlooking these modality-specific challenges. We present SAMRI, an MRI-specialized SAM trained and validated on 1.1 million labeled MR slices spanning whole-body organs and pathologies. We demonstrate that SAM can be effectively adapted to MRI by simply fine-tuning its mask decoder using a two-stage strategy, reducing training time by 94% and trainable parameters by 96% versus full-model retraining. Across diverse MRI segmentation tasks, SAMRI achieves a mean Dice of 0.87, delivering state-of-the-art accuracy across anatomical regions and robust generalization on unseen structures, particularly small and clinically important structures.
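For readers unfamiliar with the Dice score reported in this abstract, the standard definition for two binary masks is 2|A ∩ B| / (|A| + |B|). The sketch below illustrates that definition only; the function name `dice_coefficient` and the convention of returning 1.0 when both masks are empty are assumptions made here, not details taken from SAMRI.

```python
import numpy as np


def dice_coefficient(pred, target):
    """Dice overlap between two binary masks of the same shape.

    Hypothetical helper for illustration; not taken from the SAMRI code.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    if pred.shape != target.shape:
        raise ValueError("masks must have the same shape")

    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return float(2.0 * intersection / total)


if __name__ == "__main__":
    predicted = [[1, 1, 0], [0, 0, 0]]
    reference = [[1, 0, 0], [0, 1, 0]]
    print(dice_coefficient(predicted, reference))
```

A score of 1 means the predicted and reference masks coincide exactly, and 0 means they do not overlap at all.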

GateFuseNet: An Adaptive 3D Multimodal Neuroimaging Fusion Network for Parkinson's Disease Diagnosis

Oct 26, 2025

Abstract: Accurate diagnosis of Parkinson's disease (PD) from MRI remains challenging due to symptom variability and pathological heterogeneity. Most existing methods rely on conventional magnitude-based MRI modalities, such as T1-weighted images (T1w), which are less sensitive to PD pathology than Quantitative Susceptibility Mapping (QSM), a phase-based MRI technique that quantifies iron deposition in deep gray matter nuclei. In this study, we propose GateFuseNet, an adaptive 3D multimodal fusion network that integrates QSM and T1w images for PD diagnosis. The core innovation lies in a gated fusion module that learns modality-specific attention weights and channel-wise gating vectors for selective feature modulation. This hierarchical gating mechanism enhances ROI-aware features while suppressing irrelevant signals. Experimental results show that our method outperforms three existing state-of-the-art approaches, achieving 85.00% accuracy and 92.06% AUC. Ablation studies further validate the contributions of ROI guidance, multimodal integration, and fusion positioning. Grad-CAM visualizations confirm the model's focus on clinically relevant pathological regions. The source code and pretrained models can be found at https://github.com/YangGaoUQ/GateFuseNet

SUSEP-Net: Simulation-Supervised and Contrastive Learning-based Deep Neural Networks for Susceptibility Source Separation

Jun 16, 2025

Abstract: Quantitative susceptibility mapping (QSM) provides a valuable tool for quantifying susceptibility distributions in human brains; however, two types of opposing susceptibility sources (i.e., paramagnetic and diamagnetic) may coexist in a single voxel and cancel each other out in net QSM images. Susceptibility source separation techniques enable the extraction of sub-voxel information from QSM maps. This study proposes a novel SUSEP-Net for susceptibility source separation by training a dual-branch U-net with a simulation-supervised training strategy. In addition, a contrastive learning framework is included to explicitly impose similarity-based constraints between the branch-specific guidance features in specially-designed encoders and the latent features in the decoders. Comprehensive experiments were carried out on both simulated and in vivo data, including healthy subjects and patients with pathological conditions, to compare SUSEP-Net with three state-of-the-art susceptibility source separation methods (i.e., APART-QSM, χ-separation, and χ-sepnet). SUSEP-Net consistently showed improved results compared with the other three methods, with better numerical metrics, improved high-intensity hemorrhage and calcification lesion contrasts, and reduced artifacts in brains with pathological conditions. In addition, experiments on agarose gel phantom data were conducted to validate the accuracy and the generalization capability of SUSEP-Net.

Highly Undersampled MRI Reconstruction via a Single Posterior Sampling of Diffusion Models

May 13, 2025

Abstract: Incoherent k-space under-sampling and deep learning-based reconstruction methods have shown great success in accelerating MRI. However, the performance of most previous methods degrades dramatically under high acceleration factors, e.g., 8× or higher. Recently, denoising diffusion models (DM) have demonstrated promising results in solving this issue; however, one major drawback of the DM methods is the long inference time due to the large number of iterative reverse posterior sampling steps. In this work, a Single Step Diffusion Model-based reconstruction framework, namely SSDM-MRI, is proposed for restoring MRI images from highly undersampled k-space. The proposed method achieves one-step reconstruction by first training a conditional DM and then iteratively distilling this model. Comprehensive experiments were conducted on both publicly available fastMRI images and an in-house multi-echo GRE (QSM) subject. Overall, the results showed that SSDM-MRI outperformed other methods in terms of numerical metrics (PSNR and SSIM), qualitative error maps, image fine details, and latent susceptibility information hidden in MRI phase images. In addition, the reconstruction time of SSDM-MRI for a 320×320 brain slice is only 0.45 seconds, which is comparable to that of a simple U-net, making it a highly effective solution for MRI reconstruction tasks.

IR2QSM: Quantitative Susceptibility Mapping via Deep Neural Networks with Iterative Reverse Concatenations and Recurrent Modules

Jun 18, 2024

Abstract: Quantitative susceptibility mapping (QSM) is an MRI phase-based post-processing technique to extract the distribution of tissue susceptibilities, demonstrating significant potential in studying neurological diseases. However, the ill-conditioned nature of dipole inversion makes QSM reconstruction from the tissue field prone to noise and artifacts. In this work, we propose a novel deep learning-based IR2QSM method for QSM reconstruction. It iterates four times over a U-net enhanced with reverse concatenations and middle recurrent modules, which could dramatically improve the efficiency of latent feature utilization. Simulated and in vivo experiments were conducted to compare IR2QSM with several traditional algorithms (MEDI and iLSQR) and state-of-the-art deep learning methods (U-net, xQSM, and LPCNN). The results indicated that IR2QSM was able to obtain QSM images with significantly higher accuracy and fewer artifacts than the other methods. In particular, IR2QSM demonstrated on average the best NRMSE (27.59%) in simulated experiments, which is 15.48%, 7.86%, 17.24%, 9.26%, and 29.13% lower than iLSQR, MEDI, U-net, xQSM, and LPCNN, respectively, and led to improved QSM results with fewer artifacts for the in vivo data.
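As a reading aid for the NRMSE figures quoted in this abstract, the sketch below computes one common form of the metric: the L2 norm of the error divided by the L2 norm of the reference, expressed as a percentage. The abstract does not state which normalization IR2QSM uses, so both this formula and the function name `nrmse_percent` are assumptions for illustration.

```python
import numpy as np


def nrmse_percent(recon, reference):
    """Normalized root-mean-square error in percent.

    Assumed definition: 100 * ||recon - reference||_2 / ||reference||_2.
    Other normalizations (e.g. by the reference range) also appear in the
    literature; this helper is hypothetical and not taken from IR2QSM.
    """
    recon = np.asarray(recon, dtype=float).ravel()
    reference = np.asarray(reference, dtype=float).ravel()
    if recon.shape != reference.shape:
        raise ValueError("inputs must contain the same number of elements")

    ref_norm = np.linalg.norm(reference)
    if ref_norm == 0:
        raise ValueError("reference must not be all zeros")
    return float(100.0 * np.linalg.norm(recon - reference) / ref_norm)


if __name__ == "__main__":
    reference_map = [3.0, 4.0]
    reconstructed_map = [3.0, 0.0]
    print(nrmse_percent(reconstructed_map, reference_map))
```

Under this definition, lower values indicate a reconstruction closer to the reference, and 0% means the two are identical.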

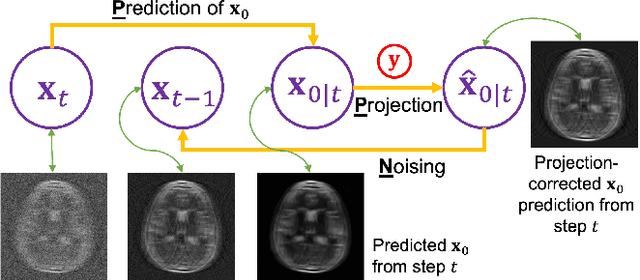

Bi-level Guided Diffusion Models for Zero-Shot Medical Imaging Inverse Problems

Apr 04, 2024

Abstract: In the realm of medical imaging, inverse problems aim to infer high-quality images from incomplete, noisy measurements, with the objective of minimizing expenses and risks to patients in clinical settings. Diffusion models have recently emerged as a promising approach to such practical challenges, proving particularly useful for the zero-shot inference of images from partially acquired measurements in Magnetic Resonance Imaging (MRI) and Computed Tomography (CT). A central challenge in this approach, however, is how to guide an unconditional prediction to conform to the measurement information. Existing methods rely on deficient projection or inefficient posterior score approximation guidance, which often leads to suboptimal performance. In this paper, we propose Bi-level Guided Diffusion Models (BGDM), a zero-shot imaging framework that efficiently steers the initial unconditional prediction through a bi-level guidance strategy. Specifically, BGDM first approximates an inner-level conditional posterior mean as an initial measurement-consistent reference point and then solves an outer-level proximal optimization objective to reinforce the measurement consistency. Our experimental findings, using publicly available MRI and CT medical datasets, reveal that BGDM is more effective and efficient than the baselines, faithfully generating high-fidelity medical images and substantially reducing hallucinatory artifacts in cases of severe degradation.

QSMDiff: Unsupervised 3D Diffusion Models for Quantitative Susceptibility Mapping

Mar 21, 2024

Abstract: Quantitative Susceptibility Mapping (QSM) dipole inversion is an ill-posed inverse problem for quantifying magnetic susceptibility distributions from MRI tissue phases. While supervised deep learning methods have shown success in specific QSM tasks, their generalizability across different acquisition scenarios remains constrained. Recent developments in diffusion models have demonstrated potential for solving 2D medical imaging inverse problems. However, their application to 3D modalities, such as QSM, remains challenging due to high computational demands. In this work, we developed a 3D image patch-based diffusion model, namely QSMDiff, for robust QSM reconstruction across different scan parameters, alongside simultaneous super-resolution and image-denoising tasks. QSMDiff adopts unsupervised 3D image patch training and full-size measurement guidance during inference for controlled image generation. Evaluation on simulated and in-vivo human brains, using gradient-echo and echo-planar imaging sequences across different acquisition parameters, demonstrates superior performance. The method proposed in QSMDiff also holds promise for impacting other 3D medical imaging applications beyond QSM.

Fast Controllable Diffusion Models for Undersampled MRI Reconstruction

Dec 01, 2023

Abstract: Supervised deep learning methods have shown promise in undersampled Magnetic Resonance Imaging (MRI) reconstruction, but their requirement for paired data limits their generalizability to the diverse MRI acquisition parameters. Recently, unsupervised controllable generative diffusion models have been applied to undersampled MRI reconstruction, without paired data or model retraining for different MRI acquisitions. However, diffusion models are generally slow in sampling and state-of-the-art acceleration techniques can lead to sub-optimal results when directly applied to the controllable generation process. This study introduces a new algorithm called Predictor-Projector-Noisor (PPN), which enhances and accelerates controllable generation of diffusion models for undersampled MRI reconstruction. Our results demonstrate that PPN produces high-fidelity MR images that conform to undersampled k-space measurements with significantly shorter reconstruction time than other controllable sampling methods. In addition, the unsupervised PPN accelerated diffusion models are adaptable to different MRI acquisition parameters, making them more practical for clinical use than supervised learning techniques.

Plug-and-Play Latent Feature Editing for Orientation-Adaptive Quantitative Susceptibility Mapping Neural Networks

Nov 14, 2023

Abstract: Quantitative susceptibility mapping (QSM) is a post-processing technique for deriving tissue magnetic susceptibility distribution from MRI phase measurements. Deep learning (DL) algorithms hold great potential for solving the ill-posed QSM reconstruction problem. However, a significant challenge facing current DL-QSM approaches is their limited adaptability to magnetic dipole field orientation variations during training and testing. In this work, we propose a novel Orientation-Adaptive Latent Feature Editing (OA-LFE) module to learn the encoding of acquisition orientation vectors and seamlessly integrate them into the latent features of deep networks. Importantly, it can be directly plugged into various existing DL-QSM architectures in a Plug-and-Play (PnP) manner, enabling reconstructions of QSM from arbitrary magnetic dipole orientations. Its effectiveness is demonstrated by integrating the OA-LFE module into our previously proposed phase-to-susceptibility single-step instant QSM (iQSM) network, which was initially tailored for pure-axial acquisitions. The proposed OA-LFE-empowered iQSM, which we refer to as iQSM+, is trained in a self-supervised manner on a specially-designed simulation brain dataset. Comprehensive experiments are conducted on simulated and in vivo human brain datasets, encompassing subjects ranging from healthy individuals to those with pathological conditions. These experiments involve various MRI platforms (3T and 7T) and aim to compare our proposed iQSM+ against several established QSM reconstruction frameworks, including the original iQSM. The iQSM+ yields QSM images with significantly improved accuracy and fewer artifacts, surpassing other state-of-the-art DL-QSM algorithms.
