Marlon Bran Lorenzana

Single Image Compressed Sensing MRI via a Self-Supervised Deep Denoising Approach

Nov 22, 2023

Abstract: Popular methods in compressed sensing (CS) depend on deep learning (DL), where large amounts of data are used to train non-linear reconstruction models. However, ensuring generalisability across, and access to, multiple datasets is challenging in real-world applications. To address these concerns, this paper proposes a single-image, self-supervised (SS) CS-MRI framework that enables joint deep and sparse regularisation of CS artefacts. The approach effectively dampens structured CS artefacts, which can be difficult to remove when assuming sparse reconstruction alone, or when relying solely on the inductive biases of CNNs to produce noise-free images. Image quality is thereby improved compared to either approach alone. Metrics are evaluated using 1D Cartesian masks on brain and knee datasets, with PSNR improving by 2-4 dB on average.
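The sparse-reconstruction component referred to above can be illustrated with classical ISTA (iterative shrinkage-thresholding). This is a minimal sketch, not the paper's self-supervised method: it assumes a toy image that is sparse in the pixel domain (so no wavelet transform is needed), a unitary FFT as the acquisition model, and a randomly generated 1D Cartesian (phase-encode row) mask. All function names and the test image are hypothetical.

```python
import numpy as np


def forward_op(x, mask):
    """Undersampled Fourier operator A = M F, using a unitary 2D FFT."""
    return mask * np.fft.fft2(x, norm="ortho")


def adjoint_op(y, mask):
    """Adjoint A^H = F^H M; applied to the data it gives the zero-filled image."""
    return np.fft.ifft2(mask * y, norm="ortho")


def soft_threshold(z, lam):
    """Complex soft-thresholding: shrink magnitudes by lam, keep phases."""
    mag = np.abs(z)
    return z * np.maximum(mag - lam, 0.0) / np.maximum(mag, 1e-12)


def ista(y, mask, lam=0.05, n_iter=500):
    """ISTA for min_x 0.5*||A x - y||^2 + lam*||x||_1 (pixel-domain sparsity).

    A step size of 1 is valid because ||A|| <= 1 for a masked unitary FFT.
    """
    x = np.zeros_like(y)
    for _ in range(n_iter):
        # Gradient step on the data-consistency term, then l1 proximal step.
        x = soft_threshold(x + adjoint_op(y - forward_op(x, mask), mask), lam)
    return x


def psnr(ref, est):
    """PSNR in dB, taking the reference's peak magnitude as the data range."""
    mse = np.mean(np.abs(ref - est) ** 2)
    return 20 * np.log10(np.abs(ref).max() / np.sqrt(mse))


def rel_err(ref, est):
    return np.linalg.norm(est - ref) / np.linalg.norm(ref)


# Hypothetical toy image: unit spikes, each in a different column.
N = 32
x_true = np.zeros((N, N))
for r, c in [(3, 5), (10, 12), (20, 7), (27, 25), (14, 18)]:
    x_true[r, c] = 1.0

# 1D Cartesian mask: keep a random half of the phase-encode rows (all k_x in each kept row).
rng = np.random.default_rng(0)
mask = np.zeros((N, 1))
mask[rng.choice(N, N // 2, replace=False)] = 1.0

y = forward_op(x_true, mask)
x_zf = adjoint_op(y, mask)  # zero-filled baseline
x_cs = ista(y, mask)        # sparse (l1) reconstruction

zf_err, cs_err = rel_err(x_true, x_zf), rel_err(x_true, x_cs)
print(f"zero-filled: rel. error {zf_err:.3f}, PSNR {psnr(x_true, x_zf):.1f} dB")
print(f"ISTA (l1):   rel. error {cs_err:.3f}, PSNR {psnr(x_true, x_cs):.1f} dB")
```

On this idealised example the l1 prior removes the aliasing almost entirely. Real MR images are only approximately sparse in a transform domain, which leaves the structured residual artefacts that the abstract proposes to dampen with an additional deep prior.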

Multi-scale MRI reconstruction via dilated ensemble networks

Oct 07, 2023

Abstract: As aliasing artefacts are highly structured and non-local, many MRI reconstruction networks use pooling to enlarge filter coverage and incorporate global context. However, this inadvertently impedes fine-detail recovery, as downsampling creates a resolution bottleneck. Moreover, real and imaginary features are commonly split into separate channels, discarding phase information that is particularly important for high-frequency textures. In this work, we introduce an efficient multi-scale reconstruction network that uses dilated convolutions to preserve resolution, and we experiment with a complex-valued version using complex convolutions. Inspired by parallel dilated filters, we process multiple receptive fields simultaneously, with branches that capture both large structural artefacts and fine local features. We also adopt dense residual connections for feature aggregation, to increase scale efficiently, and the deep cascade global architecture, to reduce overfitting. The real-valued version of this model outperformed common reconstruction architectures, as well as a state-of-the-art multi-scale network, whilst being three times more efficient. The complex-valued network yielded better qualitative results when more phase information was present.
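A minimal NumPy sketch of two of the ingredients named above: dilated convolutions, which enlarge the receptive field without any downsampling, and a complex convolution composed from four real ones. The branch dilations (1, 2, 4), the 3x3 kernels and all function names are illustrative assumptions rather than the paper's configuration; dense residual connections and the cascade are not modelled.

```python
import numpy as np


def dilated_conv2d(img, kernel, dilation=1):
    """'Same'-size 2D dilated cross-correlation (the 'convolution' of CNN layers).

    Zero padding of dilation * (k // 2) keeps the output at the input resolution.
    Assumes a square, odd-sized kernel.
    """
    k = kernel.shape[0]
    pad = dilation * (k // 2)
    padded = np.pad(img, pad)
    H, W = img.shape
    out = np.zeros((H, W), dtype=np.result_type(img, kernel))
    for i in range(k):
        for j in range(k):
            r0, c0 = i * dilation, j * dilation
            out += kernel[i, j] * padded[r0:r0 + H, c0:c0 + W]
    return out


def receptive_field(k, dilation):
    """Spatial extent of a single k x k kernel with the given dilation."""
    return dilation * (k - 1) + 1


def multi_scale_block(img, kernels, dilations=(1, 2, 4)):
    """Parallel branches with different dilations, stacked as channels."""
    return np.stack([dilated_conv2d(img, w, d) for w, d in zip(kernels, dilations)])


def complex_conv2d(x, w, dilation=1):
    """Complex convolution from four real ones:
    (w_r + i w_i) * (x_r + i x_i) = (w_r*x_r - w_i*x_i) + i (w_r*x_i + w_i*x_r)."""
    real = dilated_conv2d(x.real, w.real, dilation) - dilated_conv2d(x.imag, w.imag, dilation)
    imag = dilated_conv2d(x.imag, w.real, dilation) + dilated_conv2d(x.real, w.imag, dilation)
    return real + 1j * imag


# Impulse response: a 3x3 kernel of ones with dilation 2 covers a 5x5 footprint.
impulse = np.zeros((16, 16))
impulse[8, 8] = 1.0
resp = dilated_conv2d(impulse, np.ones((3, 3)), dilation=2)
rows = np.nonzero(resp.any(axis=1))[0]
print(resp.shape, rows.max() - rows.min() + 1, receptive_field(3, 2))  # -> (16, 16) 5 5

rng = np.random.default_rng(1)
feats = multi_scale_block(rng.standard_normal((16, 16)),
                          [rng.standard_normal((3, 3)) for _ in range(3)])
print(feats.shape)  # -> (3, 16, 16): one full-resolution channel per branch
```

Every branch keeps the 16x16 grid, so large and small receptive fields are combined without the resolution bottleneck that pooling introduces.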

Finite Compressive Sensing

Sep 15, 2023

Abstract: This paper introduces a sparse projection matrix composed of discrete (digital) periodic lines that create a pseudo-random (p.frac) sampling scheme. Our approach enables random Cartesian sampling whilst employing deterministic, one-dimensional (1D) trajectories derived from the discrete Radon transform (DRT). Unlike radial trajectories, DRT projections can be back-projected without interpolation. Thus, we also propose a novel reconstruction method based on the exact projections of the DRT, called finite Fourier reconstruction (FFR). We term this combined p.frac and FFR strategy Finite Compressive Sensing (FCS), with image recovery demonstrated on experimental and simulated data; image quality is compared against 1D and two-dimensional (2D) Cartesian random sampling, as well as radial under-sampling in a more constrained experiment. Our experiments indicate that FCS yields a 3-5 dB gain in peak signal-to-noise ratio (PSNR) for 2-, 4- and 8-fold under-sampling compared to 1D Cartesian random sampling. This paper aims to:

- review common sampling strategies for compressed sensing (CS) magnetic resonance imaging (MRI) to motivate a projective, Cartesian sampling scheme;
- compare the incoherence of these sampling strategies and the proposed p.frac;
- compare the reconstruction quality of the sampling schemes under various reconstruction strategies to determine the suitability of p.frac for CS-MRI.

It is hypothesised that, because p.frac is a highly incoherent sampling scheme, its reconstructions will be of higher quality than those obtained with 1D Cartesian phase-encode under-sampling.
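Why DRT projections need no interpolation follows from the discrete Fourier slice theorem: the 1D DFT of a discrete periodic projection equals the 2D DFT of the image sampled exactly on Cartesian grid points along a discrete line through the origin. The sketch below assumes one common finite Radon transform convention (prime side length p, slopes m) and hypothetical function names; it illustrates the principle behind p.frac and FFR, not the paper's actual sampling construction or reconstruction algorithm.

```python
import numpy as np


def frt_projection(f, m):
    """Discrete periodic projection of a p x p image f[x, y] at slope m (p prime).

    R_m(t) = sum_y f[(t + m*y) mod p, y]: each bin sums pixels along a discrete
    line that wraps around the image, so every sample lies on the pixel grid.
    """
    p = f.shape[0]
    y = np.arange(p)
    return np.array([f[(t + m * y) % p, y].sum() for t in range(p)])


def kspace_line(p, m):
    """Cartesian k-space points (u, v) = (k, -m*k mod p) associated with slope m."""
    k = np.arange(p)
    return k, (-m * k) % p


p = 17  # assumed prime side length
rng = np.random.default_rng(0)
f = rng.standard_normal((p, p))
F = np.fft.fft2(f)  # F[u, v] = sum_{x,y} f[x, y] * exp(-2j*pi*(u*x + v*y)/p)

# Discrete Fourier slice theorem: the DFT of each projection matches F exactly
# on a discrete line of Cartesian samples, with no regridding step.
for m in range(p):
    u, v = kspace_line(p, m)
    assert np.allclose(np.fft.fft(frt_projection(f, m)), F[u, v])

# The p lines intersect only at DC; together they cover all points except
# (0, v != 0), which the FRT's extra (p+1)-th projection supplies.
covered = {(int(a), int(b)) for m in range(p) for a, b in zip(*kspace_line(p, m))}
print(len(covered))  # -> 273 of the 289 grid points
```

Selecting a subset of slopes therefore yields a deterministic set of exact Cartesian k-space samples, which is the property that distinguishes these trajectories from radial spokes.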

Transformer Compressed Sensing via Global Image Tokens

Mar 27, 2022

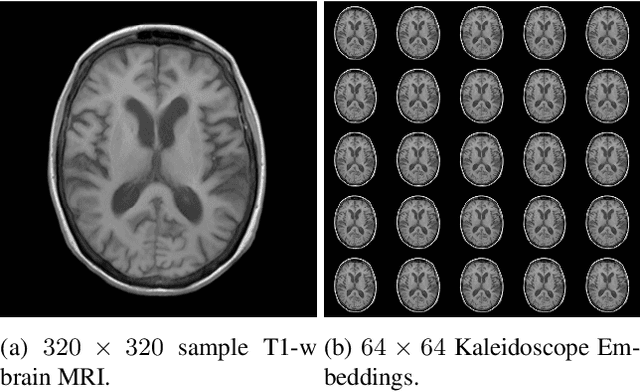

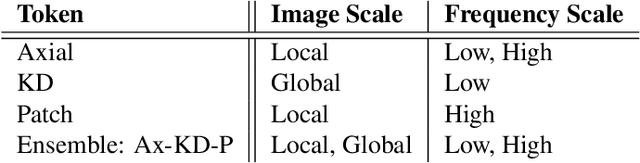

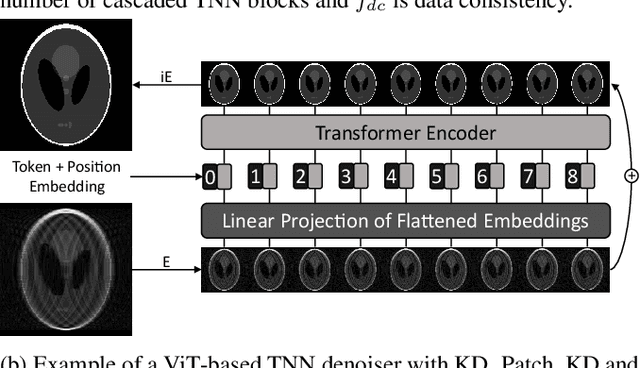

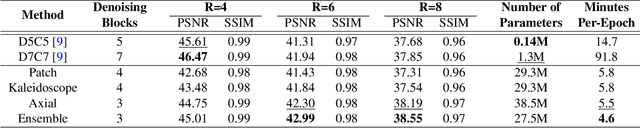

Abstract: Convolutional neural networks (CNNs) have demonstrated outstanding compressed sensing (CS) performance compared to traditional, hand-crafted methods. However, they are broadly limited in terms of generalisability, inductive bias and difficulty in modelling long-distance relationships. Transformer neural networks (TNNs) overcome such issues by implementing an attention mechanism designed to capture dependencies between inputs. However, high-resolution tasks typically require vision Transformers (ViTs) to decompose an image into patch-based tokens, limiting inputs to inherently local contexts. We propose a novel image decomposition that naturally embeds images into low-resolution inputs. These Kaleidoscope tokens (KD) provide a mechanism for global attention at the same computational cost as a patch-based approach. To showcase this development, we replace CNN components in a well-known CS-MRI neural network with TNN blocks and demonstrate the improvements afforded by KD. We also propose an ensemble of image tokens, which enhances overall image quality and reduces model size. Supplementary material is available: https://github.com/uqmarlonbran/TCS.git
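The contrast between local patch tokens and global low-resolution tokens can be illustrated with a simple polyphase (strided) decomposition. This is an assumed stand-in, not the Kaleidoscope transform itself: it shows only that tokens built from interleaved pixels each span the whole image while keeping the same token count and length as patches, and hence the same self-attention cost. The image size, `P` and the function names are hypothetical.

```python
import numpy as np


def patch_tokens(img, P):
    """ViT-style tokens: each token is one contiguous P x P patch (local context)."""
    H, W = img.shape
    return img.reshape(H // P, P, W // P, P).transpose(0, 2, 1, 3).reshape(-1, P * P)


def strided_tokens(img, P):
    """Polyphase tokens: token (b, d) holds img[b::sr, d::sc], a P x P
    low-resolution copy of the whole image (global context)."""
    H, W = img.shape
    sr, sc = H // P, W // P
    return img.reshape(P, sr, P, sc).transpose(1, 3, 0, 2).reshape(sr * sc, P * P)


def strided_tokens_inverse(tokens, P, H, W):
    """Undo strided_tokens; the decomposition is a pure pixel permutation."""
    sr, sc = H // P, W // P
    return tokens.reshape(sr, sc, P, P).transpose(2, 0, 3, 1).reshape(H, W)


def row_span(token):
    """Number of image rows between the first and last row a token touches."""
    return int(token.max() - token.min() + 1)


H = W = 24
P = 4
img = np.arange(H * W, dtype=float).reshape(H, W)
row_of = np.repeat(np.arange(H)[:, None], W, axis=1)  # row index of every pixel

pt, st = patch_tokens(img, P), strided_tokens(img, P)
# Same number and length of tokens => same n_tokens^2 attention scores.
print(pt.shape, st.shape)  # -> (36, 16) (36, 16)

# Vertical extent of the first token of each kind.
print(row_span(patch_tokens(row_of, P)[0]), row_span(strided_tokens(row_of, P)[0]))  # -> 4 19
```

Because both decompositions are permutations of the same pixels, swapping one for the other changes what each attention score relates (neighbouring pixels versus pixels spread across the image) without changing the cost.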
