Hanyu Zhou

RareAlert: Aligning heterogeneous large language model reasoning for early rare disease risk screening

Jan 26, 2026

Abstract: Missed and delayed diagnosis remains a major challenge in rare disease care. At initial clinical encounters, physicians assess rare disease risk using only limited information under high uncertainty. When high-risk patients are not recognised at this stage, targeted diagnostic testing is often not initiated, resulting in missed diagnosis. Existing primary care triage processes are structurally insufficient to reliably identify patients with rare diseases at initial clinical presentation, and universal screening is needed to reduce diagnostic delay. Here we present RareAlert, an early screening system that predicts patient-level rare disease risk from routinely available primary-visit information. RareAlert integrates reasoning generated by ten LLMs, calibrates and weights these signals using machine learning, and distils the aligned reasoning into a single locally deployable model. To develop and evaluate RareAlert, we curated RareBench, a real-world dataset of 158,666 cases covering 33 Orphanet disease categories and more than 7,000 rare conditions, including both rare and non-rare presentations. The results showed that rare disease identification can be reconceptualised as a universal uncertainty resolution process applied to the general patient population. On an independent test set, RareAlert, a Qwen3-4B based model trained with calibrated reasoning signals, achieved an AUC of 0.917, outperforming the best machine learning ensemble and all evaluated LLMs, including GPT-5, DeepSeek-R1, Claude-3.7-Sonnet, o3-mini, Gemini-2.5-Pro, and Qwen3-235B. These findings demonstrate the diversity in LLM medical reasoning and the effectiveness of aligning such reasoning in highly uncertain clinical tasks. By incorporating calibrated reasoning into a single model, RareAlert enables accurate, privacy-preserving, and scalable rare disease risk screening suitable for large-scale local deployment.
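
To make the two quantities in this abstract concrete, the following is a minimal sketch of how several per-model risk scores might be combined with weights and how an AUC can be computed from the resulting predictions. It is not RareAlert's calibration or distillation procedure, which the abstract does not specify; the function names `weighted_risk` and `auc`, the weights, and the toy data are all hypothetical.

```python
from typing import Sequence


def weighted_risk(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of per-model risk scores (hypothetical combiner)."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(scores, weights)) / total


def auc(labels: Sequence[int], preds: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative count as half a correct pair.
    """
    pos = [p for y, p in zip(labels, preds) if y == 1]
    neg = [p for y, p in zip(labels, preds) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Toy example: three models score four patients; weights are illustrative.
    per_patient_scores = [[0.9, 0.8, 0.7], [0.2, 0.1, 0.3],
                          [0.6, 0.9, 0.8], [0.4, 0.2, 0.1]]
    weights = [1.0, 2.0, 1.0]
    labels = [1, 0, 1, 0]
    preds = [weighted_risk(s, weights) for s in per_patient_scores]
    print(auc(labels, preds))
```

The pairwise definition is quadratic in the number of cases, which is fine for illustration; a rank-based implementation would be preferable for a dataset of the size described.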

PRiSM: Benchmarking Phone Realization in Speech Models

Jan 20, 2026

Abstract: Phone recognition (PR) serves as the atomic interface for language-agnostic modeling for cross-lingual speech processing and phonetic analysis. Despite prolonged efforts in developing PR systems, current evaluations only measure surface-level transcription accuracy. We introduce PRiSM, the first open-source benchmark designed to expose blind spots in phonetic perception through intrinsic and extrinsic evaluation of PR systems. PRiSM standardizes transcription-based evaluation and assesses downstream utility in clinical, educational, and multilingual settings with transcription and representation probes. We find that diverse language exposure during training is key to PR performance, encoder-CTC models are the most stable, and specialized PR models still outperform Large Audio Language Models. PRiSM releases code, recipes, and datasets to move the field toward multilingual speech models with robust phonetic ability: https://github.com/changelinglab/prism.

Adapting Depth Anything to Adverse Imaging Conditions with Events

Jan 05, 2026

Abstract: Robust depth estimation under dynamic and adverse lighting conditions is essential for robotic systems. Currently, depth foundation models, such as Depth Anything, achieve great success in ideal scenes but still struggle under adverse imaging conditions such as extreme illumination and motion blur. These degradations corrupt the visual signals of frame cameras, weakening the discriminative features of frame-based depth across the spatial and temporal dimensions. Typically, existing approaches incorporate event cameras to leverage their high dynamic range and temporal resolution, aiming to compensate for corrupted frame features. However, such specialized fusion models are predominantly trained from scratch on domain-specific datasets, thereby failing to inherit the open-world knowledge and robust generalization inherent to foundation models. In this work, we propose ADAE, an event-guided spatiotemporal fusion framework for Depth Anything in degraded scenes. Our design is guided by two key insights: 1) Entropy-Aware Spatial Fusion. We adaptively merge frame-based and event-based features using an information entropy strategy to indicate illumination-induced degradation. 2) Motion-Guided Temporal Correction. We use the event-based motion cue to recalibrate ambiguous features in blurred regions. Under our unified framework, the two components are complementary to each other and jointly enhance Depth Anything under adverse imaging conditions. Extensive experiments have been performed to verify the superiority of the proposed method. Our code will be released upon acceptance.
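
As a rough illustration of the entropy-aware idea, the sketch below computes the Shannon entropy of a frame patch's intensity histogram and uses it as a blending weight between frame and event features. This is only one plausible reading: the abstract does not give ADAE's actual fusion rule, and the assumption that low frame entropy (for example, a saturated patch) should shift weight toward events, along with the names `shannon_entropy` and `fuse`, are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def shannon_entropy(pixels: Sequence[int], bins: int = 16,
                    max_value: int = 255) -> float:
    """Shannon entropy (in bits) of a patch's intensity histogram."""
    if not pixels:
        return 0.0
    counts = Counter(
        min(int(p * bins / (max_value + 1)), bins - 1) for p in pixels
    )
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def fuse(frame_feat: Sequence[float], event_feat: Sequence[float],
         frame_patch: Sequence[int], bins: int = 16) -> List[float]:
    """Blend features with a weight given by normalised frame entropy.

    Assumption: an over- or under-exposed patch has low entropy, so the
    frame weight drops and the event features dominate.
    """
    w = shannon_entropy(frame_patch, bins) / math.log2(bins)  # in [0, 1]
    return [w * f + (1.0 - w) * e for f, e in zip(frame_feat, event_feat)]


if __name__ == "__main__":
    saturated = [255] * 64             # blown-out patch: entropy 0
    textured = list(range(0, 256, 4))  # well-exposed patch: high entropy
    print(fuse([1.0, 1.0], [0.0, 0.0], saturated))
    print(fuse([1.0, 1.0], [0.0, 0.0], textured))
```

A learned fusion module would operate on feature maps rather than raw intensities; the scalar weight here is only meant to show how an entropy signal can gate two modalities.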

Is Nano Banana Pro a Low-Level Vision All-Rounder? A Comprehensive Evaluation on 14 Tasks and 40 Datasets

Dec 19, 2025

Abstract: The rapid evolution of text-to-image generation models has revolutionized visual content creation. While commercial products like Nano Banana Pro have garnered significant attention, their potential as generalist solvers for traditional low-level vision challenges remains largely underexplored. In this study, we investigate the critical question: Is Nano Banana Pro a Low-Level Vision All-Rounder? We conducted a comprehensive zero-shot evaluation across 14 distinct low-level tasks spanning 40 diverse datasets. By utilizing simple textual prompts without fine-tuning, we benchmarked Nano Banana Pro against state-of-the-art specialist models. Our extensive analysis reveals a distinct performance dichotomy: while Nano Banana Pro demonstrates superior subjective visual quality, often hallucinating plausible high-frequency details that surpass specialist models, it lags behind in traditional reference-based quantitative metrics. We attribute this discrepancy to the inherent stochasticity of generative models, which struggle to maintain the strict pixel-level consistency required by conventional metrics. This report identifies Nano Banana Pro as a capable zero-shot contender for low-level vision tasks, while highlighting that achieving the high fidelity of domain specialists remains a significant hurdle.

LLaVA-4D: Embedding SpatioTemporal Prompt into LMMs for 4D Scene Understanding

May 18, 2025

Abstract: Despite achieving significant progress in 2D image understanding, large multimodal models (LMMs) struggle in the physical world due to the lack of spatial representation. Typically, existing 3D LMMs mainly embed 3D positions as fixed spatial prompts within visual features to represent the scene. However, these methods are limited to understanding the static background and fail to capture temporally varying dynamic objects. In this paper, we propose LLaVA-4D, a general LMM framework with a novel spatiotemporal prompt for visual representation in 4D scene understanding. The spatiotemporal prompt is generated by encoding 3D position and 1D time into a dynamic-aware 4D coordinate embedding. Moreover, we demonstrate that spatial and temporal components disentangled from visual features are more effective in distinguishing the background from objects. This motivates embedding the 4D spatiotemporal prompt into these features to enhance the dynamic scene representation. By aligning visual spatiotemporal embeddings with language embeddings, LMMs gain the ability to understand both spatial and temporal characteristics of static background and dynamic objects in the physical world. Additionally, we construct a 4D vision-language dataset with spatiotemporal coordinate annotations for instruction fine-tuning LMMs. Extensive experiments have been conducted to demonstrate the effectiveness of our method across different tasks in 4D scene understanding.
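
One simple way to picture encoding a 3D position and a 1D time into a single 4D coordinate embedding is a sinusoidal encoding applied to each of the four coordinates and concatenated. The sketch below shows that construction only as an illustration: the abstract does not say which encoding LLaVA-4D uses, and the names `sinusoidal` and `embed_4d` and the frequency schedule are hypothetical.

```python
import math
from typing import List


def sinusoidal(value: float, num_freqs: int = 4) -> List[float]:
    """Sine/cosine features of one coordinate at doubling frequencies."""
    out: List[float] = []
    for k in range(num_freqs):
        freq = 2.0 ** k
        out.append(math.sin(freq * value))
        out.append(math.cos(freq * value))
    return out


def embed_4d(x: float, y: float, z: float, t: float,
             num_freqs: int = 4) -> List[float]:
    """Concatenate per-coordinate encodings of (x, y, z, t).

    The result has 4 * 2 * num_freqs entries; the last 2 * num_freqs
    entries depend only on time.
    """
    emb: List[float] = []
    for coord in (x, y, z, t):
        emb.extend(sinusoidal(coord, num_freqs))
    return emb


if __name__ == "__main__":
    static_point = embed_4d(0.5, 0.2, 1.0, t=0.0)
    same_point_later = embed_4d(0.5, 0.2, 1.0, t=0.3)
    # Spatial part is identical; only the temporal slice changes.
    print(static_point[:24] == same_point_later[:24])
    print(static_point[24:] != same_point_later[24:])
```

Keeping the temporal slice separate from the spatial slices mirrors the abstract's point that disentangled spatial and temporal components help tell static background from moving objects, although the paper's embedding may be learned rather than fixed.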

TimeTracker: Event-based Continuous Point Tracking for Video Frame Interpolation with Non-linear Motion

May 06, 2025

Abstract: Video frame interpolation (VFI) that leverages bio-inspired event cameras as guidance has recently shown better performance and memory efficiency than frame-based methods, thanks to the event cameras' advantages, such as high temporal resolution. A hurdle for event-based VFI is how to effectively deal with non-linear motion, caused by dynamic changes in motion direction and speed within the scene. Existing methods either use events to estimate sparse optical flow or fuse events with image features to estimate dense optical flow. Unfortunately, motion errors often degrade the VFI quality, as the continuous motion cues from events do not align with the dense spatial information of images in the temporal dimension. In this paper, we find that object motion is continuous in space, so tracking local regions over continuous time enables more accurate identification of spatiotemporal feature correlations. In light of this, we propose a novel continuous point tracking-based VFI framework, named TimeTracker. Specifically, we first design a Scene-Aware Region Segmentation (SARS) module to divide the scene into similar patches. Then, a Continuous Trajectory guided Motion Estimation (CTME) module is proposed to track the continuous motion trajectory of each patch through events. Finally, intermediate frames at any given time are generated through global motion optimization and frame refinement. Moreover, we collect a real-world dataset that features fast non-linear motion. Extensive experiments show that our method outperforms prior art in both motion estimation and frame interpolation quality.

Bridge Frame and Event: Common Spatiotemporal Fusion for High-Dynamic Scene Optical Flow

Mar 11, 2025

Abstract: High-dynamic scene optical flow is a challenging task: large displacement in frame imaging causes spatial blur and temporally discontinuous motion, which degrades the spatiotemporal features of optical flow. Typically, existing methods introduce event cameras and directly fuse the spatiotemporal features of the two modalities. However, this direct fusion is ineffective, since there is a large gap between the frame and event modalities due to their heterogeneous data representations. To address this issue, we explore a common latent space as an intermediate bridge to mitigate the modality gap. In this work, we propose a novel common spatiotemporal fusion between frame and event modalities for high-dynamic scene optical flow, including visual boundary localization and motion correlation fusion. Specifically, in visual boundary localization, we find that frame and event share similar spatiotemporal gradients, whose similarity distribution is consistent with the extracted boundary distribution. This motivates us to design the common spatiotemporal gradient to constrain the reference boundary localization. In motion correlation fusion, we discover that the frame-based motion possesses spatially dense but temporally discontinuous correlation, while the event-based motion has spatially sparse but temporally continuous correlation. This inspires us to use the reference boundary to guide the complementary motion knowledge fusion between the two modalities. Moreover, common spatiotemporal fusion can not only relieve the cross-modal feature discrepancy, but also make the fusion process interpretable for dense and continuous optical flow. Extensive experiments have been performed to verify the superiority of the proposed method.

LLaFEA: Frame-Event Complementary Fusion for Fine-Grained Spatiotemporal Understanding in LMMs

Mar 10, 2025

Abstract: Large multimodal models (LMMs) excel in scene understanding but struggle with fine-grained spatiotemporal reasoning due to weak alignment between linguistic and visual representations. Existing methods map textual positions and durations into the visual space encoded from frame-based videos, but suffer from temporal sparsity that limits language-vision temporal coordination. To address this issue, we introduce LLaFEA (Large Language and Frame-Event Assistant) to leverage event cameras for temporally dense perception and frame-event fusion. Our approach employs a cross-attention mechanism to integrate complementary spatial and temporal features, followed by self-attention matching for global spatio-temporal associations. We further embed textual position and duration tokens into the fused visual space to enhance fine-grained alignment. This unified framework ensures robust spatio-temporal coordinate alignment, enabling LMMs to interpret scenes at any position and any time. In addition, we construct a dataset of real-world frames-events with coordinate instructions and conduct extensive experiments to validate the effectiveness of the proposed method.

Cross-video Identity Correlating for Person Re-identification Pre-training

Sep 27, 2024

Abstract: Recent studies have shown that pre-training on large-scale person images extracted from internet videos is an effective way to learn better representations for person re-identification. However, these studies are mostly confined to pre-training at the instance level or the single-video tracklet level. They ignore the identity-invariance in images of the same person across different videos, which is a key focus in person re-identification. To address this issue, we propose a Cross-video Identity-cOrrelating pre-traiNing (CION) framework. Defining a noise concept that comprehensively considers both intra-identity consistency and inter-identity discrimination, CION seeks the identity correlation from cross-video images by modeling it as a progressive multi-level denoising problem. Furthermore, an identity-guided self-distillation loss is proposed to implement better large-scale pre-training by mining the identity-invariance within person images. We conduct extensive experiments to verify the superiority of our CION in terms of efficiency and performance. CION achieves significantly leading performance with even fewer training samples. For example, compared with the previous state-of-the-art (ISR), CION with the same ResNet50-IBN achieves higher mAP of 93.3% and 74.3% on Market1501 and MSMT17, while only utilizing 8% of the training samples. Finally, with CION demonstrating superior model-agnostic ability, we contribute a model zoo named ReIDZoo to meet diverse research and application needs in this field. It contains a series of CION pre-trained models spanning a range of structures and parameter counts, totaling 32 models with 10 different structures, including GhostNet, ConvNext, RepViT, FastViT and so on. The code and models will be made publicly available at https://github.com/Zplusdragon/CION_ReIDZoo.

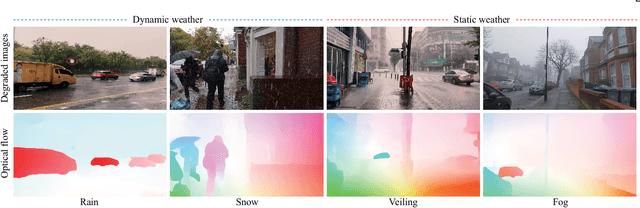

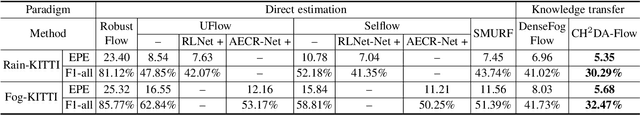

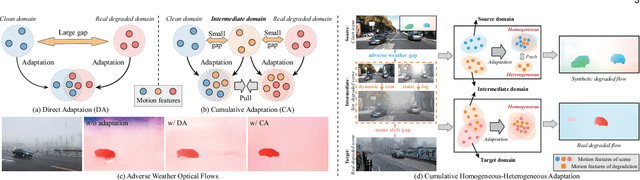

Adverse Weather Optical Flow: Cumulative Homogeneous-Heterogeneous Adaptation

Sep 25, 2024

Abstract: Optical flow has made great progress in clean scenes, but suffers degradation under adverse weather due to the violation of the brightness constancy and gradient continuity assumptions of optical flow. Typically, existing methods mainly adopt domain adaptation to transfer motion knowledge from the clean to the degraded domain through one-stage adaptation. However, this direct adaptation is ineffective, since there exists a large gap due to adverse weather and scene style between clean and real degraded domains. Moreover, even within the degraded domain itself, static weather (e.g., fog) and dynamic weather (e.g., rain) have different impacts on optical flow. To address these issues, we explore the synthetic degraded domain as an intermediate bridge between clean and real degraded domains, and propose a cumulative homogeneous-heterogeneous adaptation framework for real adverse weather optical flow. Specifically, for clean-degraded transfer, our key insight is that static weather possesses the depth-association homogeneous feature, which does not change the intrinsic motion of the scene, while dynamic weather additionally introduces the heterogeneous feature, which results in a significant boundary discrepancy in warp errors between clean and degraded domains. For synthetic-real transfer, we find that cost volume correlation shares a similar statistical histogram between synthetic and real degraded domains, which helps holistically align the homogeneous correlation distribution for synthetic-real knowledge distillation. Under this unified framework, the proposed method can progressively and explicitly transfer knowledge from clean scenes to real adverse weather. In addition, we further collect a real adverse weather dataset with manually annotated optical flow labels and perform extensive experiments to verify the superiority of the proposed method.
