Hansu Gu

Feature-Indexed Federated Recommendation with Residual-Quantized Codebooks

Jan 26, 2026

Abstract: Federated recommendation provides a privacy-preserving solution for training recommender systems without centralizing user interactions. However, existing methods follow an ID-indexed communication paradigm that transmits whole item embeddings between clients and the server, which has three major limitations: 1) it consumes uncontrollable communication resources, 2) the uploaded item information cannot generalize to related non-interacted items, and 3) it is sensitive to noisy client feedback. To solve these problems, it is necessary to fundamentally change the existing ID-indexed communication paradigm. Therefore, we propose a feature-indexed communication paradigm that transmits feature code embeddings as codebooks rather than raw item embeddings. Building on this paradigm, we present RQFedRec, which assigns each item a list of discrete code IDs via Residual Quantization (RQ)-Kmeans. Each client generates and trains code embeddings as codebooks based on discrete code IDs provided by the server, and the server collects and aggregates these codebooks rather than item embeddings. This design makes communication controllable since the codebooks can cover all items, enabling updates to propagate across related items that share the same code ID. In addition, since each code embedding represents many items, it is more robust to a single noisy item. To jointly capture semantic and collaborative information, RQFedRec further adopts a collaborative-semantic dual-channel aggregation with a curriculum strategy that emphasizes semantic codes early and gradually increases the contribution of collaborative codes over training. Extensive experiments on real-world datasets demonstrate that RQFedRec consistently outperforms state-of-the-art federated recommendation baselines while significantly reducing communication overhead.
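The idea of assigning each item a list of discrete code IDs by residual quantization can be illustrated with a minimal sketch. This is not the RQFedRec implementation: the item vectors, the function names (`kmeans`, `rq_kmeans`, `reconstruct`), the seeding, and the iteration count are all assumptions made for illustration. At each level, k-means is run on the current residuals, each item takes the ID of its nearest centroid, and that centroid is subtracted before the next level.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(vec, centroids):
    """Index of the centroid closest to vec."""
    return min(range(len(centroids)), key=lambda k: sq_dist(vec, centroids[k]))

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means; initial centroids are k sampled points (assumed)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        assign = [nearest(p, centroids) for p in points]
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def rq_kmeans(item_vectors, num_levels, codebook_size):
    """Return (codebooks, codes): one codebook per level, one code list per item."""
    residuals = [list(v) for v in item_vectors]
    codebooks = []
    codes = [[] for _ in item_vectors]
    for _ in range(num_levels):
        centroids = kmeans(residuals, codebook_size)
        codebooks.append(centroids)
        for i, r in enumerate(residuals):
            c = nearest(r, centroids)
            codes[i].append(c)
            # The next level quantizes whatever this level failed to explain.
            residuals[i] = [x - y for x, y in zip(r, centroids[c])]
    return codebooks, codes

def reconstruct(code_ids, codebooks):
    """Approximate an item vector as the sum of its selected code embeddings."""
    out = [0.0] * len(codebooks[0][0])
    for level, c in enumerate(code_ids):
        out = [o + x for o, x in zip(out, codebooks[level][c])]
    return out
```

With, say, 3 levels of 4 codes in 4 dimensions, the codebooks hold 3 × 4 × 4 = 48 numbers regardless of how many items exist, which is the sense in which the payload size is set by the codebook configuration rather than by the item count. Items that share a code ID at some level share that code embedding, so an update to it affects all of them.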

Bidirectional Knowledge Distillation for Enhancing Sequential Recommendation with Large Language Models

May 23, 2025

Abstract: Large language models (LLMs) have demonstrated exceptional performance in understanding and generating semantic patterns, making them promising candidates for sequential recommendation tasks. However, when combined with conventional recommendation models (CRMs), LLMs often face challenges related to high inference costs and static knowledge transfer methods. In this paper, we propose a novel mutual distillation framework, LLMD4Rec, that fosters dynamic and bidirectional knowledge exchange between LLM-centric and CRM-based recommendation systems. Unlike traditional unidirectional distillation methods, LLMD4Rec enables iterative optimization by alternately refining both models, enhancing the semantic understanding of CRMs and enriching LLMs with collaborative signals from user-item interactions. By leveraging sample-wise adaptive weighting and aligning output distributions, our approach eliminates the need for additional parameters while ensuring effective knowledge transfer. Extensive experiments on real-world datasets demonstrate that LLMD4Rec significantly improves recommendation accuracy across multiple benchmarks without increasing inference costs. This method provides a scalable and efficient solution for combining the strengths of both LLMs and CRMs in sequential recommendation systems.

EcomScriptBench: A Multi-task Benchmark for E-commerce Script Planning via Step-wise Intention-Driven Product Association

May 21, 2025

Abstract: Goal-oriented script planning, or the ability to devise coherent sequences of actions toward specific goals, is commonly employed by humans to plan for typical activities. In e-commerce, customers increasingly seek LLM-based assistants to generate scripts and recommend products at each step, thereby facilitating convenient and efficient shopping experiences. However, this capability remains underexplored due to several challenges, including the inability of LLMs to simultaneously conduct script planning and product retrieval, difficulties in matching products caused by semantic discrepancies between planned actions and search queries, and a lack of methods and benchmark data for evaluation. In this paper, we step forward by formally defining the task of E-commerce Script Planning (EcomScript) as three sequential subtasks. We propose a novel framework that enables the scalable generation of product-enriched scripts by associating products with each step based on the semantic similarity between the actions and their purchase intentions. By applying our framework to real-world e-commerce data, we construct the very first large-scale EcomScript dataset, EcomScriptBench, which includes 605,229 scripts sourced from 2.4 million products. Human annotations are then conducted to provide gold labels for a sampled subset, forming an evaluation benchmark. Extensive experiments reveal that current (L)LMs face significant challenges with EcomScript tasks, even after fine-tuning, while injecting product purchase intentions improves their performance.

FedCIA: Federated Collaborative Information Aggregation for Privacy-Preserving Recommendation

Apr 19, 2025

Abstract: Recommendation algorithms rely on user historical interactions to deliver personalized suggestions, which raises significant privacy concerns. Federated recommendation algorithms tackle this issue by combining local model training with server-side model aggregation, where most existing algorithms use a uniform weighted summation to aggregate item embeddings from different client models. This approach has three major limitations: 1) information loss during aggregation, 2) failure to retain personalized local features, and 3) incompatibility with parameter-free recommendation algorithms. To address these limitations, we first review the development of recommendation algorithms and recognize that their core function is to share collaborative information, specifically the global relationship between users and items. With this understanding, we propose a novel aggregation paradigm named collaborative information aggregation, which focuses on sharing collaborative information rather than item parameters. Based on this new paradigm, we introduce the federated collaborative information aggregation (FedCIA) method for privacy-preserving recommendation. This method requires each client to upload item similarity matrices for aggregation, which allows clients to align their local models without constraining embeddings to a unified vector space. As a result, it mitigates information loss caused by direct summation, preserves the personalized embedding distributions of individual clients, and supports the aggregation of parameter-free models. Theoretical analysis and experimental results on real-world datasets demonstrate the superior performance of FedCIA compared with the state-of-the-art federated recommendation algorithms. Code is available at https://github.com/Mingzhe-Han/FedCIA.
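The claim that uploading item similarity matrices avoids tying clients to a unified vector space can be illustrated with a small sketch. It is not the FedCIA code: cosine similarity, plain averaging on the server, and the helper names (`cosine_similarity_matrix`, `aggregate_similarities`, `rotate_2d`) are assumptions chosen for illustration. The point it shows is that a client whose embedding space is rotated relative to another client's still produces the same item-item similarities, so the matrices can be combined even though the raw embeddings could not be meaningfully summed.

```python
import math

def cosine_similarity_matrix(item_embeddings):
    """Item-item cosine similarities computed from one client's local embeddings."""
    norms = [math.sqrt(sum(x * x for x in v)) or 1.0 for v in item_embeddings]
    n = len(item_embeddings)
    return [
        [
            sum(a * b for a, b in zip(item_embeddings[i], item_embeddings[j]))
            / (norms[i] * norms[j])
            for j in range(n)
        ]
        for i in range(n)
    ]

def aggregate_similarities(client_matrices):
    """Server-side step (assumed here to be an element-wise mean)."""
    n = len(client_matrices[0])
    m = len(client_matrices)
    return [
        [sum(mat[i][j] for mat in client_matrices) / m for j in range(n)]
        for i in range(n)
    ]

def rotate_2d(vec, angle):
    """Rotate a 2-D vector; stands in for a client-specific embedding space."""
    c, s = math.cos(angle), math.sin(angle)
    return [c * vec[0] - s * vec[1], s * vec[0] + c * vec[1]]

# Two hypothetical clients: same item geometry, differently oriented spaces.
client_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
client_b = [rotate_2d(v, 0.7) for v in client_a]

sim_a = cosine_similarity_matrix(client_a)
sim_b = cosine_similarity_matrix(client_b)
global_sim = aggregate_similarities([sim_a, sim_b])
```

Averaging `client_a` and `client_b` embeddings directly would blur the item vectors because the two spaces are misaligned, whereas `sim_a` and `sim_b` agree up to floating-point error.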

UXAgent: A System for Simulating Usability Testing of Web Design with LLM Agents

Apr 13, 2025

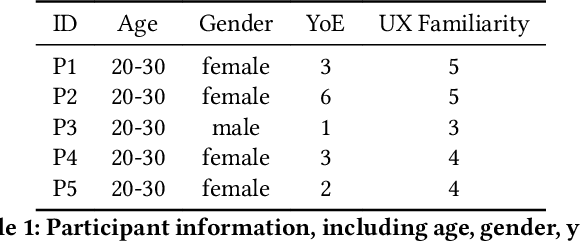

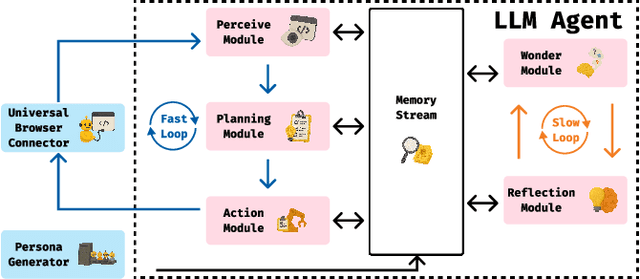

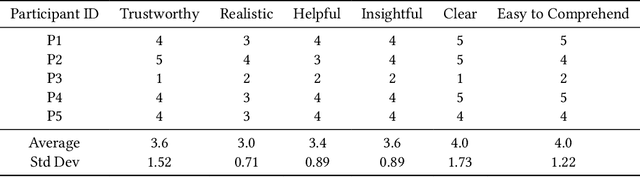

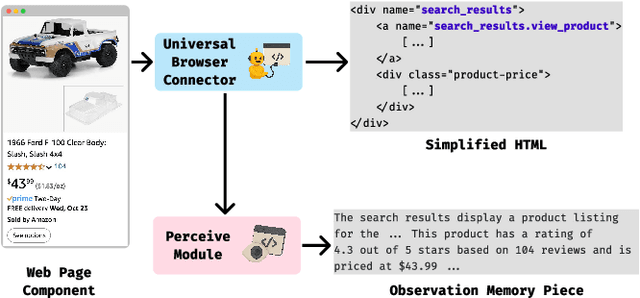

Abstract: Usability testing is a fundamental research method that user experience (UX) researchers use to evaluate and iterate a web design, but how to evaluate and iterate the usability testing study design itself? Recent advances in Large Language Model-simulated Agent (LLM Agent) research inspired us to design UXAgent to support UX researchers in evaluating and reiterating their usability testing study design before they conduct the real human-subject study. Our system features a Persona Generator module, an LLM Agent module, and a Universal Browser Connector module to automatically generate thousands of simulated users to interactively test the target website. The system also provides an Agent Interview Interface and a Video Replay Interface so that the UX researchers can easily review and analyze the generated qualitative and quantitative log data. Through a heuristic evaluation, five UX researcher participants praised the innovation of our system but also expressed concerns about the future of LLM Agent usage in UX studies.

Mitigating Popularity Bias in Collaborative Filtering through Fair Sampling

Feb 19, 2025

Abstract: Recommender systems often suffer from popularity bias, where frequently interacted items are overrepresented in recommendations. This bias stems from propensity factors influencing training data, leading to imbalanced exposure. In this paper, we introduce a Fair Sampling (FS) approach to address this issue by ensuring that both users and items are selected with equal probability as positive and negative instances. Unlike traditional inverse propensity score (IPS) methods, FS does not require propensity estimation, eliminating errors associated with inaccurate calculations. Our theoretical analysis demonstrates that FS effectively neutralizes the influence of propensity factors, achieving unbiased learning. Experimental results validate that FS outperforms state-of-the-art methods in both point-wise and pair-wise recommendation tasks, enhancing recommendation fairness without sacrificing accuracy. The implementation is available at https://anonymous.4open.science/r/Fair-Sampling.

Enhancing Cross-Domain Recommendations with Memory-Optimized LLM-Based User Agents

Feb 19, 2025

Abstract: Large Language Model (LLM)-based user agents have emerged as a powerful tool for improving recommender systems by simulating user interactions. However, existing methods struggle with cross-domain scenarios due to inefficient memory structures, leading to irrelevant information retention and failure to account for social influence factors such as popularity. To address these limitations, we introduce AgentCF++, a novel framework featuring a dual-layer memory architecture and a two-step fusion mechanism to filter domain-specific preferences effectively. Additionally, we propose interest groups with shared memory, allowing the model to capture the impact of popularity trends on users with similar interests. Through extensive experiments on multiple cross-domain datasets, AgentCF++ demonstrates superior performance over baseline models, highlighting its effectiveness in refining user behavior simulation for recommender systems. Our code is available at https://anonymous.4open.science/r/AgentCF-plus.

Enhancing LLM-Based Recommendations Through Personalized Reasoning

Feb 19, 2025

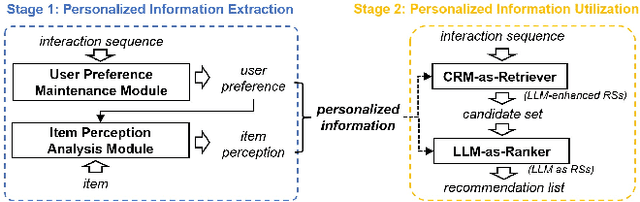

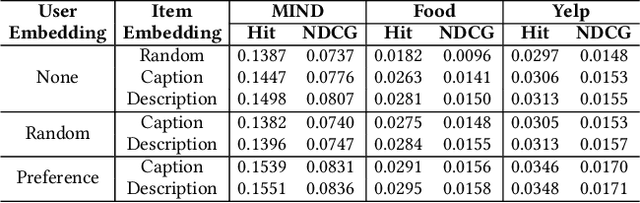

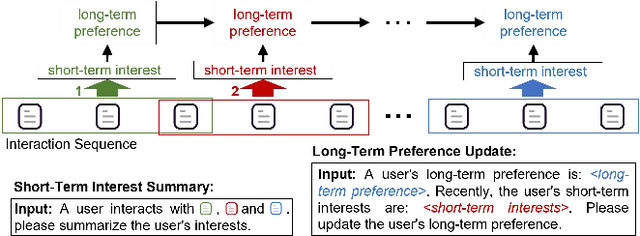

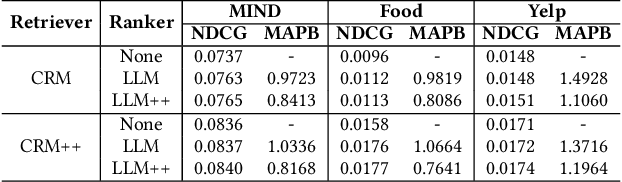

Abstract: Current recommendation systems powered by large language models (LLMs) often underutilize their reasoning capabilities due to a lack of explicit logical structuring. To address this limitation, we introduce CoT-Rec, a framework that integrates Chain-of-Thought (CoT) reasoning into LLM-driven recommendations by incorporating two crucial processes: user preference analysis and item perception evaluation. CoT-Rec operates in two key phases: (1) personalized data extraction, where user preferences and item perceptions are identified, and (2) personalized data application, where this information is leveraged to refine recommendations. Our experimental analysis demonstrates that CoT-Rec improves recommendation accuracy by making better use of LLMs' reasoning potential. The implementation is publicly available at https://anonymous.4open.science/r/CoT-Rec.

UXAgent: An LLM Agent-Based Usability Testing Framework for Web Design

Feb 18, 2025

Abstract: Usability testing is a fundamental yet challenging research method for user experience (UX) researchers to evaluate a web design (e.g., it is hard to iterate on study design flaws and hard to recruit study participants). Recent advances in Large Language Model-simulated Agent (LLM-Agent) research inspired us to design UXAgent to support UX researchers in evaluating and reiterating their usability testing study design before they conduct the real human subject study. Our system features an LLM-Agent module and a universal browser connector module so that UX researchers can automatically generate thousands of simulated users to test the target website. The results are shown in qualitative (e.g., interviewing how an agent thinks), quantitative (e.g., number of actions), and video recording formats for UX researchers to analyze. Through a heuristic user evaluation with five UX researchers, participants praised the innovation of our system but also expressed concerns about the future of LLM Agent-assisted UX study.

Oracle-guided Dynamic User Preference Modeling for Sequential Recommendation

Dec 01, 2024

Abstract: Sequential recommendation methods can capture dynamic user preferences from user historical interactions to achieve better performance. However, most existing methods only use past information extracted from user historical interactions to train the models, leading to deviations in user preference modeling. Besides past information, future information is also available during training; it contains the "oracle" user preferences in the future and is beneficial for modeling dynamic user preferences. Therefore, we propose an oracle-guided dynamic user preference modeling method for sequential recommendation (Oracle4Rec), which leverages future information to guide model training on past information, aiming to learn "forward-looking" models. Specifically, Oracle4Rec first extracts past and future information through two separate encoders, then learns a forward-looking model through an oracle-guiding module which minimizes the discrepancy between past and future information. We also tailor a two-phase model training strategy to make the guiding more effective. Extensive experiments demonstrate that Oracle4Rec is superior to state-of-the-art sequential methods. Further experiments show that Oracle4Rec can be leveraged as a generic module in other sequential recommendation methods to improve their performance by a considerable margin.
