Hakan Bilen

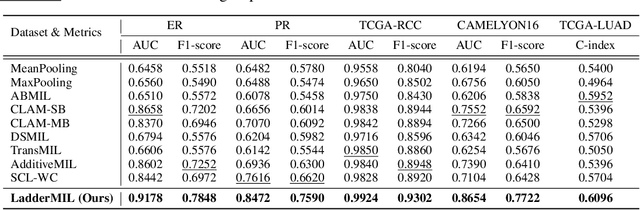

Enabling Progressive Whole-slide Image Analysis with Multi-scale Pyramidal Network

Feb 02, 2026

Abstract: Multiple-instance Learning (MIL) is commonly used to undertake computational pathology (CPath) tasks, and the use of multi-scale patches allows diverse features across scales to be learned. Previous studies using multi-scale features in clinical applications rely on multiple inputs across magnifications with late feature fusion, which does not retain the link between features across scales. Their inputs also depend on arbitrary, manufacturer-defined magnifications, which makes them inflexible and computationally expensive. In this paper, we propose the Multi-scale Pyramidal Network (MSPN), a plug-and-play module for attention-based MIL that introduces progressive multi-scale analysis on whole-slide images (WSI). Our MSPN consists of (1) grid-based remapping that uses high magnification features to derive coarse features and (2) the coarse guidance network (CGN) that learns coarse contexts. We benchmark MSPN as an add-on module to 4 attention-based frameworks using 4 clinically relevant tasks across 3 types of foundation model, as well as the pre-trained MIL framework. We show that MSPN consistently improves MIL across the compared configurations and tasks, while being lightweight and easy to use.
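The abstract does not say how grid-based remapping aggregates fine features, so the following is only a minimal sketch under stated assumptions: patch features are indexed by integer grid coordinates, and a coarse feature is the mean of the fine features that fall into the same coarse cell. The function name `remap_to_coarse_grid` and the parameter `cell_size` are hypothetical and are not taken from the paper.

```python
from collections import defaultdict


def remap_to_coarse_grid(fine_features, cell_size):
    """Average fine-grid patch features into coarser grid cells.

    fine_features: dict mapping (row, col) patch coordinates to feature lists.
    cell_size: number of fine patches per coarse cell along each axis.
    Mean pooling is an assumption made for illustration.
    """
    buckets = defaultdict(list)
    for (row, col), feat in fine_features.items():
        # Integer division assigns each fine patch to its coarse cell.
        buckets[(row // cell_size, col // cell_size)].append(feat)

    coarse = {}
    for cell, feats in buckets.items():
        dim = len(feats[0])
        coarse[cell] = [sum(f[d] for f in feats) / len(feats) for d in range(dim)]
    return coarse


# Three fine patches; the first two share coarse cell (0, 0).
fine = {(0, 0): [1.0, 0.0], (0, 1): [3.0, 2.0], (2, 3): [4.0, 4.0]}
coarse = remap_to_coarse_grid(fine, cell_size=2)
```

Because the coarse features are derived from the fine ones, each coarse cell keeps an explicit link to the patches it covers, which is the property the abstract contrasts with late fusion of separately extracted magnifications.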

MoonSeg3R: Monocular Online Zero-Shot Segment Anything in 3D with Reconstructive Foundation Priors

Dec 17, 2025

Abstract: In this paper, we focus on online zero-shot monocular 3D instance segmentation, a novel practical setting where existing approaches fail to perform because they rely on posed RGB-D sequences. To overcome this limitation, we leverage CUT3R, a recent Reconstructive Foundation Model (RFM), to provide reliable geometric priors from a single RGB stream. We propose MoonSeg3R, which introduces three key components: (1) a self-supervised query refinement module with spatial-semantic distillation that transforms segmentation masks from 2D visual foundation models (VFMs) into discriminative 3D queries; (2) a 3D query index memory that provides temporal consistency by retrieving contextual queries; and (3) a state-distribution token from CUT3R that acts as a mask identity descriptor to strengthen cross-frame fusion. Experiments on ScanNet200 and SceneNN show that MoonSeg3R is the first method to enable online monocular 3D segmentation and achieves performance competitive with state-of-the-art RGB-D-based systems. Code and models will be released.

PAOLI: Pose-free Articulated Object Learning from Sparse-view Images

Sep 04, 2025

Abstract: We present a novel self-supervised framework for learning articulated object representations from sparse-view, unposed images. Unlike prior methods that require dense multi-view observations and ground-truth camera poses, our approach operates with as few as four views per articulation and no camera supervision. To address the inherent challenges, we first reconstruct each articulation independently using recent advances in sparse-view 3D reconstruction, then learn a deformation field that establishes dense correspondences across poses. A progressive disentanglement strategy further separates static from moving parts, enabling robust separation of camera and object motion. Finally, we jointly optimize geometry, appearance, and kinematics with a self-supervised loss that enforces cross-view and cross-pose consistency. Experiments on the standard benchmark and real-world examples demonstrate that our method produces accurate and detailed articulated object representations under significantly weaker input assumptions than existing approaches.

Jamais Vu: Exposing the Generalization Gap in Supervised Semantic Correspondence

Jun 09, 2025

Abstract: Semantic correspondence (SC) aims to establish semantically meaningful matches across different instances of an object category. We illustrate how recent supervised SC methods remain limited in their ability to generalize beyond sparsely annotated training keypoints, effectively acting as keypoint detectors. To address this, we propose a novel approach for learning dense correspondences by lifting 2D keypoints into a canonical 3D space using monocular depth estimation. Our method constructs a continuous canonical manifold that captures object geometry without requiring explicit 3D supervision or camera annotations. Additionally, we introduce SPair-U, an extension of SPair-71k with novel keypoint annotations, to better assess generalization. Experiments demonstrate not only that our model significantly outperforms supervised baselines on unseen keypoints, highlighting its effectiveness in learning robust correspondences, but also that unsupervised baselines outperform supervised counterparts when generalized across different datasets.
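As a rough illustration of what lifting a 2D keypoint with a depth estimate can look like, the sketch below applies standard pinhole back-projection. It is not the paper's method: the abstract states that no camera annotations are required, so the intrinsics `fx`, `fy`, `cx`, `cy` here are placeholder values, and the mapping into a canonical space is not shown. The function name `unproject_keypoint` is hypothetical.

```python
def unproject_keypoint(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a depth value into camera-space 3D.

    Uses the pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    The intrinsics are assumed inputs chosen for illustration only.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# A keypoint 100 pixels right of the principal point, at depth 2.0.
point = unproject_keypoint(u=420.0, v=240.0, depth=2.0,
                           fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

Points lifted this way live in each image's own camera frame; relating them across object instances would still need the canonical alignment that the abstract describes.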

DD-Ranking: Rethinking the Evaluation of Dataset Distillation

May 19, 2025

Abstract: In recent years, dataset distillation has provided a reliable solution for data compression, where models trained on the resulting smaller synthetic datasets achieve performance comparable to those trained on the original datasets. To further improve the performance of synthetic datasets, various training pipelines and optimization objectives have been proposed, greatly advancing the field of dataset distillation. Recent decoupled dataset distillation methods introduce soft labels and stronger data augmentation during the post-evaluation phase and scale dataset distillation up to larger datasets (e.g., ImageNet-1K). However, this raises a question: Is accuracy still a reliable metric to fairly evaluate dataset distillation methods? Our empirical findings suggest that the performance improvements of these methods often stem from additional techniques rather than the inherent quality of the images themselves, with even randomly sampled images achieving superior results. Such misaligned evaluation settings severely hinder the development of dataset distillation. Therefore, we propose DD-Ranking, a unified evaluation framework, along with new general evaluation metrics to uncover the true performance improvements achieved by different methods. By refocusing on the actual information enhancement of distilled datasets, DD-Ranking provides a more comprehensive and fair evaluation standard for future research advancements.

Visually Interpretable Subtask Reasoning for Visual Question Answering

May 12, 2025

Abstract: Answering complex visual questions like 'Which red furniture can be used for sitting?' requires multi-step reasoning, including object recognition, attribute filtering, and relational understanding. Recent work improves interpretability in multimodal large language models (MLLMs) by decomposing tasks into sub-task programs, but these methods are computationally expensive and less accurate due to poor adaptation to target data. To address this, we introduce VISTAR (Visually Interpretable Subtask-Aware Reasoning Model), a subtask-driven training framework that enhances both interpretability and reasoning by generating textual and visual explanations within MLLMs. Instead of relying on external models, VISTAR fine-tunes MLLMs to produce structured Subtask-of-Thought rationales (step-by-step reasoning sequences). Experiments on two benchmarks show that VISTAR consistently improves reasoning accuracy while maintaining interpretability. Our code and dataset will be available at https://github.com/ChengJade/VISTAR.

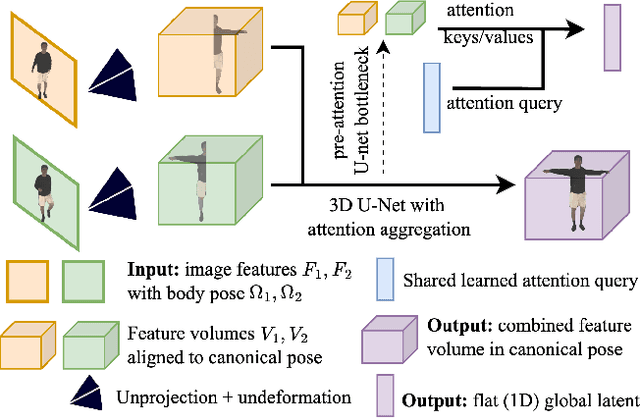

HumMorph: Generalized Dynamic Human Neural Fields from Few Views

Apr 27, 2025

Abstract: We introduce HumMorph, a novel generalized approach to free-viewpoint rendering of dynamic human bodies with explicit pose control. HumMorph renders a human actor in any specified pose given a few observed views (starting from just one) in arbitrary poses. Our method enables fast inference as it relies only on feed-forward passes through the model. We first construct a coarse representation of the actor in the canonical T-pose, which combines visual features from individual partial observations and fills missing information using learned prior knowledge. The coarse representation is complemented by fine-grained pixel-aligned features extracted directly from the observed views, which provide high-resolution appearance information. We show that HumMorph is competitive with the state of the art when only a single input view is available; however, we achieve results with significantly better visual quality given just 2 monocular observations. Moreover, previous generalized methods assume access to accurate body shape and pose parameters obtained using synchronized multi-camera setups. In contrast, we consider a more practical scenario where these body parameters are noisily estimated directly from the observed views. Our experimental results demonstrate that our architecture is more robust to errors in the noisy parameters and clearly outperforms the state of the art in this setting.

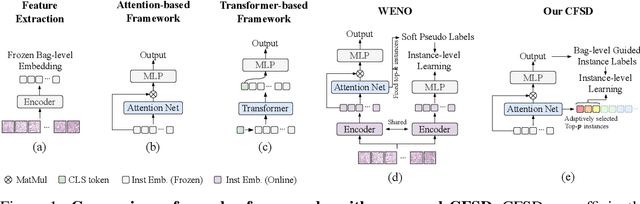

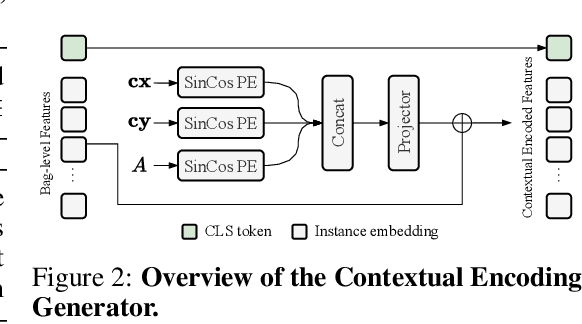

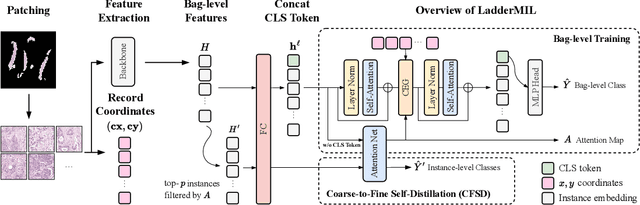

Multiple Instance Learning with Coarse-to-Fine Self-Distillation

Feb 04, 2025

Abstract: Multiple Instance Learning (MIL) for whole slide image (WSI) analysis in computational pathology often neglects instance-level learning as supervision is typically provided only at the bag level. In this work, we present PathMIL, a framework designed to improve MIL from two perspectives: (1) employing instance-level supervision and (2) learning inter-instance contextual information at the bag level. Firstly, we propose a novel Coarse-to-Fine Self-Distillation (CFSD) paradigm that probes and distils a classifier trained with bag-level information to obtain instance-level labels, which can then supervise the same classifier at a finer granularity. Secondly, to capture inter-instance contextual information in WSI, we propose Two-Dimensional Positional Encoding (2DPE), which encodes the spatial appearance of instances within a bag. We also theoretically and empirically prove the instance-level learnability of CFSD. PathMIL is evaluated on multiple benchmarking tasks, including subtype classification (TCGA-NSCLC), tumour classification (CAMELYON16), and an internal benchmark for breast cancer receptor status classification. Our method achieves state-of-the-art performance, with AUC scores of 0.9152 and 0.8524 for estrogen and progesterone receptor status classification, respectively, an AUC of 0.9618 for subtype classification, and 0.8634 for tumour classification, surpassing existing methods.
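The abstract does not specify the form of 2DPE. One common way to encode a 2D grid position, shown here purely as an assumed stand-in, is to concatenate standard sinusoidal encodings of the row and column indices. The names `sinusoidal_1d` and `positional_encoding_2d` are hypothetical.

```python
import math


def sinusoidal_1d(position, dim):
    """Sinusoidal encoding of a scalar position into `dim` values (dim even)."""
    enc = []
    for i in range(0, dim, 2):
        freq = 1.0 / (10000 ** (i / dim))
        enc.append(math.sin(position * freq))
        enc.append(math.cos(position * freq))
    return enc


def positional_encoding_2d(row, col, dim):
    """Concatenate row and column encodings; dim must be divisible by 4."""
    if dim % 4 != 0:
        raise ValueError("dim must be divisible by 4")
    half = dim // 2
    return sinusoidal_1d(row, half) + sinusoidal_1d(col, half)


# Encoding for the patch at grid row 0, column 3, using 8 channels.
pe = positional_encoding_2d(row=0, col=3, dim=8)
```

An encoding of this kind would typically be added to each instance feature before attention, so that patches from neighbouring slide locations can be distinguished from distant ones.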

Spatially-Adaptive Hash Encodings For Neural Surface Reconstruction

Dec 06, 2024

Abstract: Positional encodings are a common component of neural scene reconstruction methods, and provide a way to bias the learning of neural fields towards coarser or finer representations. Current neural surface reconstruction methods use a "one-size-fits-all" approach to encoding, choosing a fixed set of encoding functions, and therefore bias, across all scenes. Current state-of-the-art surface reconstruction approaches leverage grid-based multi-resolution hash encoding in order to recover high-detail geometry. We propose a learned approach which allows the network to choose its encoding basis as a function of space, by masking the contribution of features stored at separate grid resolutions. The resulting spatially adaptive approach allows the network to fit a wider range of frequencies without introducing noise. We test our approach on standard benchmark surface reconstruction datasets and achieve state-of-the-art performance on two benchmark datasets.
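To make the idea of masking per-resolution features concrete, here is a minimal sketch that assumes the features for one query point have already been looked up at each grid level. In the paper the mask is learned as a function of space; the fixed numbers below only stand in for such a prediction, and `masked_multires_encoding` is a hypothetical name.

```python
def masked_multires_encoding(level_features, level_masks):
    """Scale each resolution level's features by its mask, then concatenate.

    level_features: list of per-level feature lists, ordered coarse to fine.
    level_masks: one weight in [0, 1] per level (assumed given here; a
    learned, position-dependent mask would replace these constants).
    """
    if len(level_features) != len(level_masks):
        raise ValueError("need exactly one mask value per level")
    encoding = []
    for feats, mask in zip(level_features, level_masks):
        encoding.extend(mask * f for f in feats)
    return encoding


# Three levels at one query point; the finest level is fully masked out.
features = [[0.5, -1.0], [2.0, 4.0], [1.0, 3.0]]
masks = [1.0, 0.5, 0.0]
encoding = masked_multires_encoding(features, masks)
```

Suppressing fine levels in smooth regions and keeping them where detail is needed is one way to read the abstract's claim of fitting a wider range of frequencies without introducing noise.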

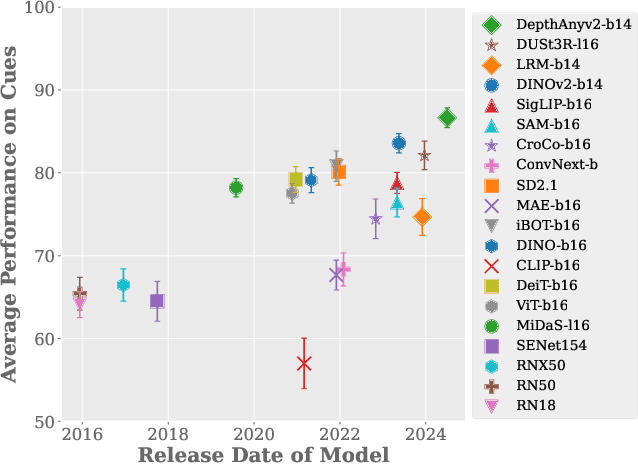

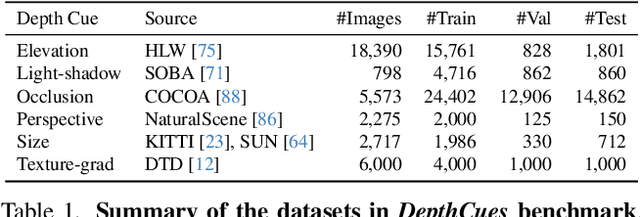

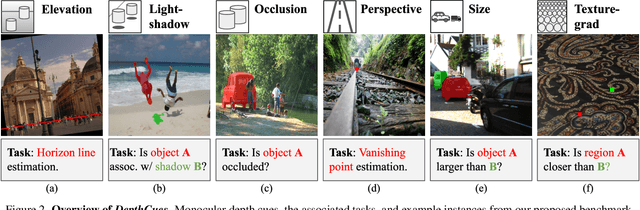

DepthCues: Evaluating Monocular Depth Perception in Large Vision Models

Nov 26, 2024

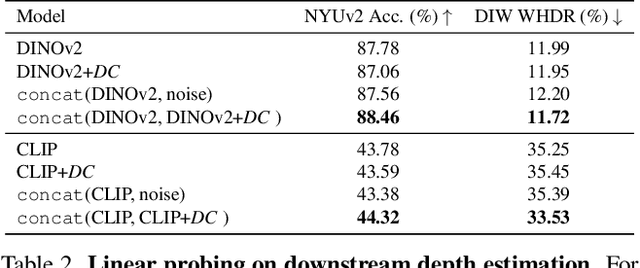

Abstract: Large-scale pre-trained vision models are becoming increasingly prevalent, offering expressive and generalizable visual representations that benefit various downstream tasks. Recent studies on the emergent properties of these models have revealed their high-level geometric understanding, in particular in the context of depth perception. However, it remains unclear how depth perception arises in these models without explicit depth supervision provided during pre-training. To investigate this, we examine whether monocular depth cues similar to those used by the human visual system emerge in these models. We introduce a new benchmark, DepthCues, designed to evaluate depth cue understanding, and present findings across 20 diverse and representative pre-trained vision models. Our analysis shows that human-like depth cues emerge in more recent, larger models. We also explore enhancing depth perception in large vision models by fine-tuning on DepthCues, and find that even without dense depth supervision, this improves depth estimation. To support further research, our benchmark and evaluation code will be made publicly available for studying depth perception in vision models.
