Girish Chowdhary

Visual-Language-Guided Task Planning for Horticultural Robots

Jan 17, 2026

Abstract:Crop monitoring is essential for precision agriculture, but current systems lack high-level reasoning. We introduce a novel, modular framework that uses a Visual Language Model (VLM) to guide robotic task planning, interleaving input queries with action primitives. We contribute a comprehensive benchmark for short- and long-horizon crop monitoring tasks in monoculture and polyculture environments. Our main results show that VLMs perform robustly for short-horizon tasks (comparable to human success), but exhibit significant performance degradation in challenging long-horizon tasks. Critically, the system fails when relying on noisy semantic maps, demonstrating a key limitation in current VLM context grounding for sustained robotic operations. This work offers a deployable framework and critical insights into VLM capabilities and shortcomings for complex agricultural robotics.

Active Semantic Mapping of Horticultural Environments Using Gaussian Splatting

Jan 17, 2026

Abstract:Semantic reconstruction of agricultural scenes plays a vital role in tasks such as phenotyping and yield estimation. However, traditional approaches that rely on manual scanning or fixed camera setups remain a major bottleneck in this process. In this work, we propose an active 3D reconstruction framework for horticultural environments using a mobile manipulator. The proposed system integrates the classical Octomap representation with 3D Gaussian Splatting to enable accurate and efficient target-aware mapping. While a low-resolution Octomap provides probabilistic occupancy information for informative viewpoint selection and collision-free planning, 3D Gaussian Splatting leverages geometric, photometric, and semantic information to optimize a set of 3D Gaussians for high-fidelity scene reconstruction. We further introduce simple yet effective strategies to enhance robustness against segmentation noise and reduce memory consumption. Simulation experiments demonstrate that our method outperforms purely occupancy-based approaches in both runtime efficiency and reconstruction accuracy, enabling precise fruit counting and volume estimation. Compared to a 0.01m-resolution Octomap, our approach achieves an improvement of 6.6% in fruit-level F1 score under noise-free conditions, and up to 28.6% under segmentation noise. Additionally, it achieves a 50% reduction in runtime, highlighting its potential for scalable, real-time semantic reconstruction in agricultural robotics.

ZeST: an LLM-based Zero-Shot Traversability Navigation for Unknown Environments

Aug 26, 2025

Abstract:The advancement of robotics and autonomous navigation systems hinges on the ability to accurately predict terrain traversability. Traditional methods for generating datasets to train these prediction models often involve putting robots into potentially hazardous environments, posing risks to equipment and safety. To solve this problem, we present ZeST, a novel approach leveraging visual reasoning capabilities of Large Language Models (LLMs) to create a traversability map in real-time without exposing robots to danger. Our approach not only performs zero-shot traversability and mitigates the risks associated with real-world data collection but also accelerates the development of advanced navigation systems, offering a cost-effective and scalable solution. To support our findings, we present navigation results in both controlled indoor and unstructured outdoor environments. As shown in the experiments, our method provides safer navigation when compared to other state-of-the-art methods, consistently reaching the final goal.

Towards Efficient Large Scale Spatial-Temporal Time Series Forecasting via Improved Inverted Transformers

Mar 13, 2025

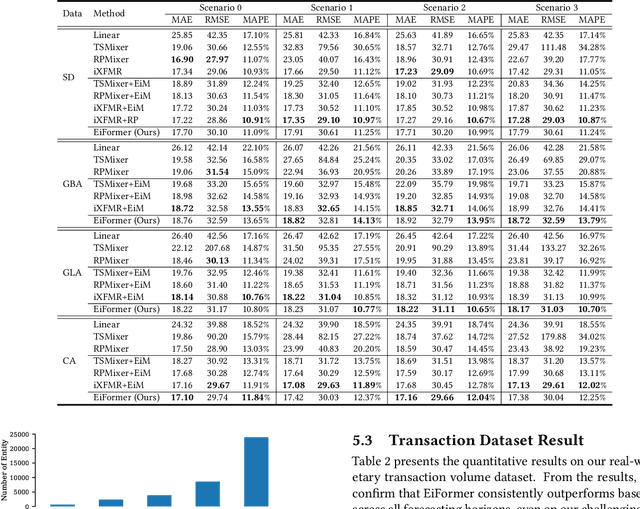

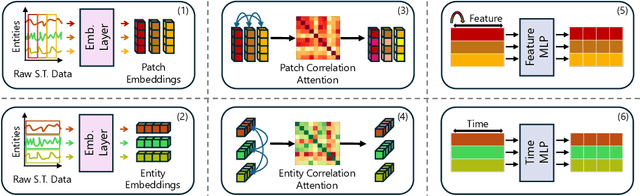

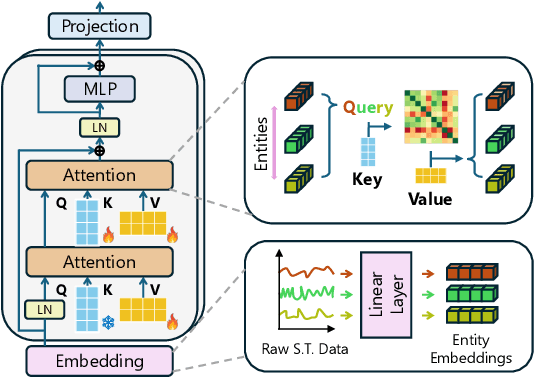

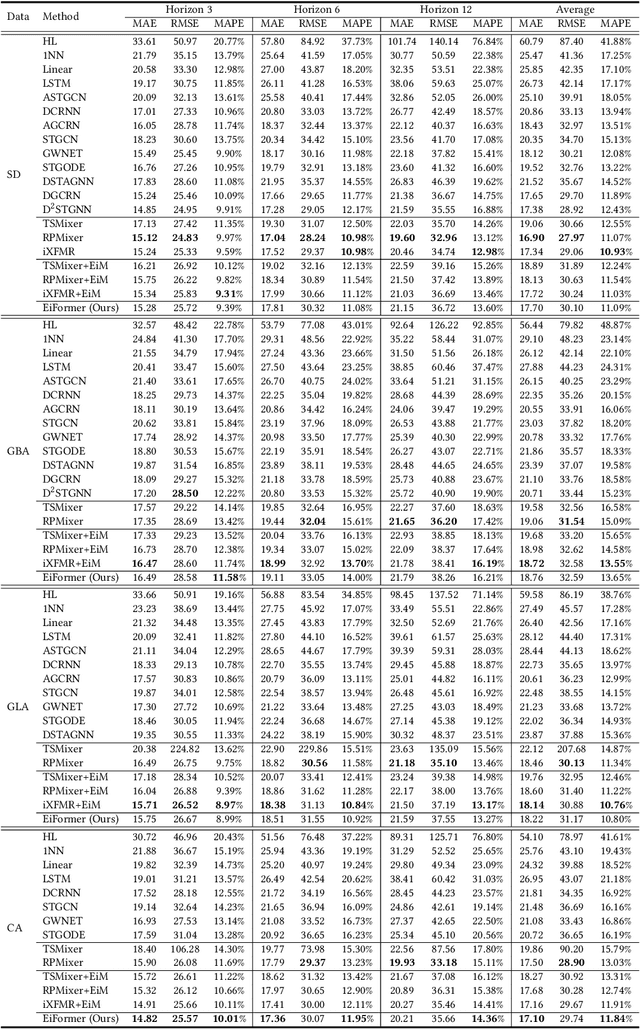

Abstract:Time series forecasting at scale presents significant challenges for modern prediction systems, particularly when dealing with large sets of synchronized series, such as in a global payment network. In such systems, three key challenges must be overcome for accurate and scalable predictions: 1) emergence of new entities, 2) disappearance of existing entities, and 3) the large number of entities present in the data. The recently proposed Inverted Transformer (iTransformer) architecture has shown promising results by effectively handling variable entities. However, its practical application in large-scale settings is limited by quadratic time and space complexity ($O(N^2)$) with respect to the number of entities $N$. In this paper, we introduce EiFormer, an improved inverted transformer architecture that maintains the adaptive capabilities of iTransformer while reducing computational complexity to linear scale ($O(N)$). Our key innovation lies in restructuring the attention mechanism to eliminate redundant computations without sacrificing model expressiveness. Additionally, we incorporate a random projection mechanism that not only enhances efficiency but also improves prediction accuracy through better feature representation. Extensive experiments on the public LargeST benchmark dataset and a proprietary large-scale time series dataset demonstrate that EiFormer significantly outperforms existing methods in both computational efficiency and forecasting accuracy. Our approach enables practical deployment of transformer-based forecasting in industrial applications where handling time series at scale is essential.

BYON: Bring Your Own Networks for Digital Agriculture Applications

Feb 03, 2025

Abstract:Digital agriculture technologies rely on sensors, drones, robots, and autonomous farm equipment to improve farm yields and incorporate sustainability practices. However, the adoption of such technologies is severely limited by the lack of broadband connectivity in rural areas. We argue that farming applications do not require permanent always-on connectivity. Instead, farming activity and digital agriculture applications follow the seasonal rhythms of agriculture. Therefore, the need for connectivity is highly localized in time and space. We introduce BYON, a new connectivity model for high-bandwidth agricultural applications that relies on emerging connectivity solutions like Citizens Broadband Radio Service (CBRS) and satellite networks. BYON creates an agile connectivity solution that can be moved along a farm to create spatio-temporal connectivity bubbles. BYON incorporates a new gateway design that reacts to the presence of crops and optimizes coverage in agricultural settings. We evaluate BYON in a production farm and demonstrate its benefits.

Precision Harvesting in Cluttered Environments: Integrating End Effector Design with Dual Camera Perception

Jan 31, 2025

Abstract:Due to labor shortages in specialty crop industries, a need for robotic automation to increase agricultural efficiency and productivity has arisen. Previous manipulation systems perform well when harvesting in uncluttered and structured environments. High tunnel environments are more compact and cluttered in nature, requiring a rethinking of large-form-factor systems and grippers. We propose a novel codesigned framework incorporating a global detection camera and a local eye-in-hand camera that demonstrates precise localization of small fruits via closed-loop visual feedback and reliable error handling. Field experiments in high tunnels show our system can reach 85.0% of cherry tomato fruit, taking 10.98 s on average.

Active Semantic Mapping with Mobile Manipulator in Horticultural Environments

Dec 13, 2024

Abstract:Semantic maps are fundamental for robotics tasks such as navigation and manipulation. They also enable yield prediction and phenotyping in agricultural settings. In this paper, we introduce an efficient and scalable approach for active semantic mapping in horticultural environments, employing a mobile robot manipulator equipped with an RGB-D camera. Our method leverages probabilistic semantic maps to detect semantic targets, generate candidate viewpoints, and compute corresponding information gain. We present an efficient ray-casting strategy and a novel information utility function that accounts for both semantics and occlusions. The proposed approach reduces total runtime by 8% compared to previous baselines. Furthermore, our information metric surpasses other metrics in reducing multi-class entropy and improving surface coverage, particularly in the presence of segmentation noise. Real-world experiments validate our method's effectiveness but also reveal challenges such as depth sensor noise and varying environmental conditions, requiring further research.
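The multi-class entropy mentioned in this abstract can be illustrated with a minimal Python sketch. It assumes each map cell stores a categorical distribution over semantic classes and uses plain Shannon entropy; the function names and example values are hypothetical, and the paper's actual information utility function (which also accounts for occlusions) is not reproduced here.

```python
import math


def class_entropy(probs):
    """Shannon entropy (in nats) of one cell's class distribution.

    Assumes `probs` is a sequence of non-negative values summing to 1.
    Zero-probability classes contribute nothing to the sum.
    """
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def map_entropy(cells):
    """Total entropy over all cells; lower means a more certain map."""
    return sum(class_entropy(p) for p in cells)


# Hypothetical example: an unobserved cell (uniform over 4 classes)
# versus a cell that has been observed with high confidence.
uniform_cell = [0.25, 0.25, 0.25, 0.25]
confident_cell = [0.97, 0.01, 0.01, 0.01]

if __name__ == "__main__":
    print(class_entropy(uniform_cell))
    print(class_entropy(confident_cell))
    print(map_entropy([uniform_cell, confident_cell]))
```

Under this assumption, a viewpoint that is expected to turn many uniform cells into confident ones lowers the map entropy the most, which is the intuition behind ranking candidate viewpoints by information gain.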

MetaCropFollow: Few-Shot Adaptation with Meta-Learning for Under-Canopy Navigation

Nov 21, 2024

Abstract:Autonomous under-canopy navigation faces additional challenges compared to over-canopy settings, for example the tight spacing between crop rows, degraded GPS accuracy, and excessive clutter. Keypoint-based visual navigation has been shown to perform well in these conditions; however, the differences between agricultural environments in terms of lighting, season, soil, and crop type mean that a domain shift will likely be encountered at some point during robot deployment. In this paper, we explore the use of Meta-Learning to overcome this domain shift using a minimal amount of data. We train a base-learner that can quickly adapt to new conditions, enabling more robust navigation in low-data regimes.

CropNav: a Framework for Autonomous Navigation in Real Farms

Nov 17, 2024

Abstract:Small robots that can operate under the plant canopy can enable new possibilities in agriculture. However, unlike larger autonomous tractors, autonomous navigation for such under-canopy robots remains an open challenge because the Global Navigation Satellite System (GNSS) is unreliable under the plant canopy. We present a hybrid navigation system that autonomously switches between different sets of sensing modalities to enable full-field navigation, both inside and outside of the crop. By choosing the appropriate path reference source, the robot can accommodate loss of GNSS signal quality and leverage row-crop structure to navigate autonomously. However, such switching is difficult to execute at scale. Our system provides a solution by automatically switching between exteroceptive-sensing-based navigation, such as Light Detection And Ranging (LiDAR) row following, and waypoint path tracking. In addition, we show how our system can detect when navigation fails and recover automatically, extending the autonomous time and reducing the need for human intervention. Our system shows an improvement of about 750 m per intervention over GNSS-based navigation and 500 m over row-following navigation.

Fed-EC: Bandwidth-Efficient Clustering-Based Federated Learning For Autonomous Visual Robot Navigation

Nov 06, 2024

Abstract:Centralized learning requires data to be aggregated at a central server, which poses significant challenges in terms of data privacy and bandwidth consumption. Federated learning presents a compelling alternative. However, vanilla federated learning methods deployed in robotics aim to learn a single global model that ideally works for all robots, and in practice one model may not be well suited to robots deployed in varied environments. This paper proposes Federated-EmbedCluster (Fed-EC), a clustering-based federated learning framework that is deployed with vision-based autonomous robot navigation in diverse outdoor environments. The framework addresses the key federated learning challenge of deteriorating performance of a single global model due to the presence of non-IID data across real-world robots. Extensive real-world experiments validate that Fed-EC reduces the communication size by 23x for each robot while matching the performance of centralized learning for goal-oriented navigation and outperforming local learning. Fed-EC can transfer previously learnt models to new robots that join the cluster.
