Vitor Akihiro Hisano Higuti

CropNav: a Framework for Autonomous Navigation in Real Farms

Nov 17, 2024

Abstract: Small robots that can operate under the plant canopy can enable new possibilities in agriculture. However, unlike larger autonomous tractors, under-canopy robots still lack reliable autonomous navigation, because Global Navigation Satellite System (GNSS) signals are unreliable under the plant canopy. We present a hybrid navigation system that autonomously switches between different sets of sensing modalities to enable full-field navigation, both inside and outside the crop. By choosing the appropriate path reference source, the robot can compensate for degraded GNSS signal quality and leverage the row-crop structure to navigate autonomously. However, such switching can be tricky and difficult to execute at scale. Our system provides a solution by automatically switching between an exteroceptive-sensing-based system, such as Light Detection And Ranging (LiDAR) row-following navigation, and waypoint path tracking. In addition, we show how our system can detect when navigation fails and recover automatically, extending autonomous operating time and reducing the need for human intervention. Our system improves the distance traveled per intervention by about 750 m over GNSS-based navigation and by 500 m over row-following navigation.
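The switching and failure-recovery logic described above can be pictured as a small state machine. The sketch below is only an illustration under stated assumptions: the `SensorStatus` fields (GNSS standard deviation, a 0..1 row-detection confidence, measured forward progress), the `ModeSwitcher` class, and every threshold are hypothetical and not taken from CropNav. It uses separate enter and exit thresholds (hysteresis) so the robot does not chatter between modes at the row boundary.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    WAYPOINT = "gnss_waypoint_tracking"
    ROW_FOLLOW = "lidar_row_following"
    RECOVERY = "recovery"


@dataclass
class SensorStatus:
    gnss_std_m: float        # hypothetical: reported GNSS horizontal std. dev. (m)
    row_confidence: float    # hypothetical: 0..1 confidence of a LiDAR row detector
    progress_m_per_s: float  # hypothetical: forward progress over a recent window


@dataclass
class ModeSwitcher:
    # All thresholds are illustrative assumptions, not values from the paper.
    gnss_good_m: float = 0.05
    gnss_bad_m: float = 0.30
    row_enter: float = 0.7
    row_exit: float = 0.4
    stall_speed: float = 0.02
    mode: Mode = Mode.WAYPOINT

    def update(self, s: SensorStatus) -> Mode:
        # Failure detection: no forward progress means the robot is stuck.
        if s.progress_m_per_s < self.stall_speed:
            self.mode = Mode.RECOVERY
            return self.mode
        if self.mode in (Mode.WAYPOINT, Mode.RECOVERY):
            # Enter row following when rows are clearly visible or GNSS is degraded.
            if s.row_confidence >= self.row_enter or s.gnss_std_m > self.gnss_bad_m:
                self.mode = Mode.ROW_FOLLOW
            else:
                self.mode = Mode.WAYPOINT
        elif self.mode is Mode.ROW_FOLLOW:
            # Leave the row only when row evidence is weak AND GNSS is good again;
            # row_exit < row_enter provides the hysteresis band.
            if s.row_confidence < self.row_exit and s.gnss_std_m <= self.gnss_good_m:
                self.mode = Mode.WAYPOINT
        return self.mode
```

For example, a robot in row-following mode that sees a moderate row confidence of 0.5 with good GNSS stays in the row, and only drops back to waypoint tracking once the confidence falls below `row_exit`.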

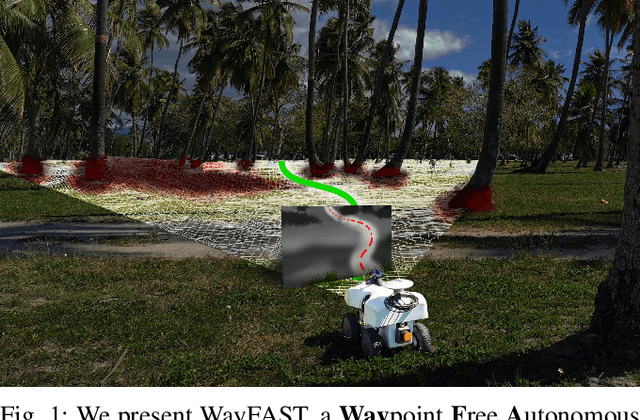

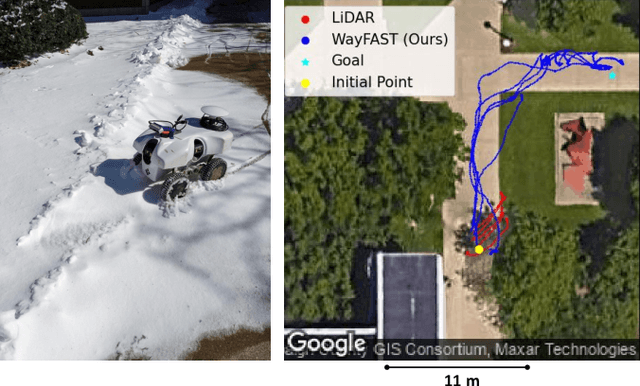

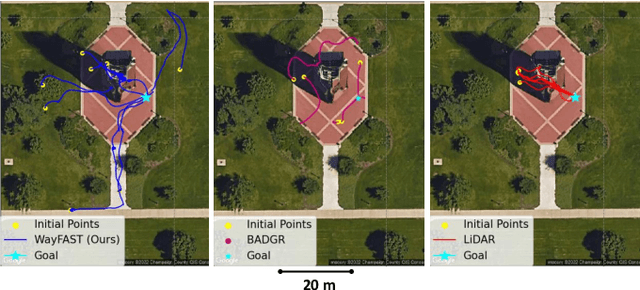

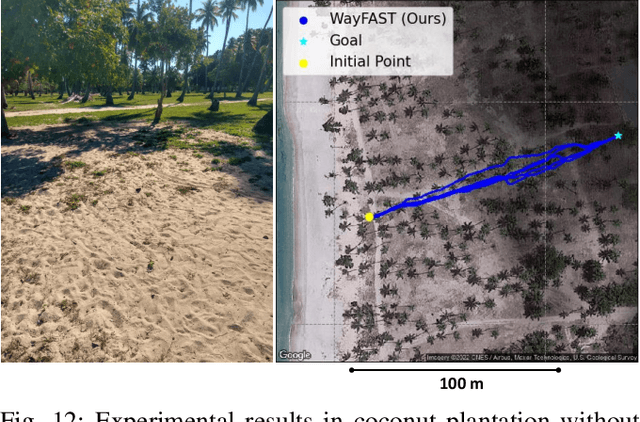

WayFAST: Traversability Predictive Navigation for Field Robots

Mar 22, 2022

Abstract: We present a self-supervised approach for learning to predict traversable paths for wheeled mobile robots that require good traction to navigate. Our algorithm, termed WayFAST (Waypoint Free Autonomous Systems for Traversability), uses RGB and depth data, along with navigation experience, to autonomously generate traversable paths in unstructured outdoor environments. Our key insight is that traction can be estimated for rolling robots using kinodynamic models. Using traction estimates provided by an online receding-horizon estimator, we train a traversability-prediction neural network in a self-supervised manner, without requiring the heuristics used by previous methods. We demonstrate the effectiveness of WayFAST through extensive field testing in varied environments, ranging from dry sandy beaches to forest canopies and snow-covered grass fields. Our results demonstrate that WayFAST can learn to avoid geometric obstacles as well as untraversable terrain, such as snow, which would be difficult to avoid with sensors that provide only geometric data, such as LiDAR. Furthermore, we show that our training pipeline based on online traction estimates is more data-efficient than other heuristic-based methods.
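To make the idea of a traction estimate concrete, the sketch below assumes a unicycle model in which a linear traction coefficient μ scales the commanded forward speed and an angular coefficient ν scales the commanded yaw rate. It is a deliberately simplified stand-in, not WayFAST's online receding-horizon estimator: instead of solving a moving-horizon optimization, it fits each coefficient by closed-form least squares over a sliding window of the most recent samples. The class name `SlidingWindowTractionEstimator` and the window length are hypothetical.

```python
from collections import deque

import numpy as np


class SlidingWindowTractionEstimator:
    """Least-squares traction fit over a receding window (simplified stand-in, not MHE)."""

    def __init__(self, horizon: int = 20) -> None:
        # deque(maxlen=...) keeps only the most recent `horizon` samples.
        self.v_cmd = deque(maxlen=horizon)
        self.v_meas = deque(maxlen=horizon)
        self.w_cmd = deque(maxlen=horizon)
        self.w_meas = deque(maxlen=horizon)

    def add(self, v_cmd: float, v_meas: float, w_cmd: float, w_meas: float) -> None:
        # Commanded vs. measured (e.g. odometry/IMU) linear and angular speeds.
        self.v_cmd.append(v_cmd)
        self.v_meas.append(v_meas)
        self.w_cmd.append(w_cmd)
        self.w_meas.append(w_meas)

    @staticmethod
    def _fit(cmd, meas) -> float:
        c = np.array(list(cmd), dtype=float)
        m = np.array(list(meas), dtype=float)
        denom = float(np.dot(c, c))
        if denom < 1e-9:
            return 1.0  # no excitation in the window: assume full traction
        # argmin_k sum_i (m_i - k * c_i)^2 = <c, m> / <c, c>, clipped to [0, 1].
        return float(np.clip(np.dot(c, m) / denom, 0.0, 1.0))

    def estimate(self) -> tuple[float, float]:
        """Return (mu, nu): linear and angular traction coefficients."""
        return self._fit(self.v_cmd, self.v_meas), self._fit(self.w_cmd, self.w_meas)
```

In a self-supervised pipeline of the kind the abstract describes, such per-timestep traction values would serve as training labels for the image regions the robot actually drove over, so no hand-tuned traversability heuristic is needed.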

Multi-Sensor Fusion based Robust Row Following for Compact Agricultural Robots

Jun 28, 2021

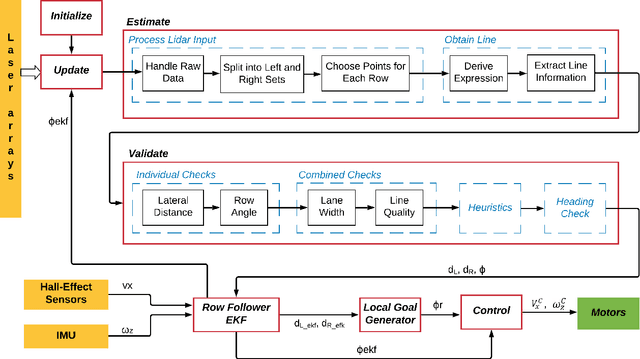

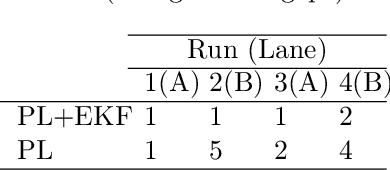

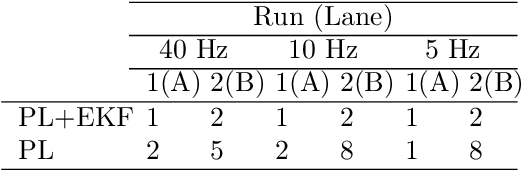

Abstract: This paper presents a state-of-the-art LiDAR-based autonomous navigation system for under-canopy agricultural robots. Under-canopy agricultural navigation has been a challenging problem because GNSS and other positioning sensors are prone to significant errors due to attenuation and multipath caused by crop leaves and stems. Reactive navigation that detects crop rows from LiDAR measurements is a better alternative to GPS, but it is challenged by occlusion from leaves under the canopy. Our system addresses this challenge by fusing IMU and LiDAR measurements in an Extended Kalman Filter framework on low-cost hardware. In addition, a local goal generator provides locally optimal reference trajectories to the onboard controller. Our system is validated extensively in real-world field environments over a distance of 50.88 km on multiple robots in different field conditions across different locations. We report state-of-the-art distance-between-interventions results: our system navigates safely without intervention for 386.9 m on average in fields without significant gaps in the crop rows, 56.1 m in production fields, and 47.5 m in fields with gaps (1 m stretches without plants on both sides of the row).
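A minimal sketch of IMU and LiDAR fusion in an Extended Kalman Filter follows. The state choice is an assumption made for illustration, not the paper's formulation: lateral offset `d` from the row centerline and heading `theta` relative to the row. The IMU gyro yaw rate and the wheel speed drive the nonlinear prediction step, and a LiDAR row-line fit is assumed to observe `d` and `theta` directly. The class name `RowEKF` and all noise values are hypothetical.

```python
import numpy as np


class RowEKF:
    """EKF over x = [d, theta]: lateral offset (m) and heading (rad) relative to the row."""

    def __init__(self, q_d=1e-3, q_theta=1e-3, r_d=0.05**2, r_theta=0.05**2):
        self.x = np.zeros(2)
        self.P = np.eye(2)
        self.Q = np.diag([q_d, q_theta])  # process noise (assumed values)
        self.R = np.diag([r_d, r_theta])  # LiDAR measurement noise (assumed values)

    def predict(self, v: float, yaw_rate: float, dt: float) -> None:
        d, theta = self.x
        # Nonlinear motion model: wheel speed v and IMU gyro yaw rate.
        self.x = np.array([d + v * dt * np.sin(theta), theta + yaw_rate * dt])
        # Jacobian of the motion model with respect to [d, theta].
        F = np.array([[1.0, v * dt * np.cos(theta)], [0.0, 1.0]])
        self.P = F @ self.P @ F.T + self.Q

    def update(self, z_d: float, z_theta: float) -> None:
        # The LiDAR row-line fit observes d and theta directly, so H is identity.
        # theta is small relative to the row, so no angle wrapping is applied.
        H = np.eye(2)
        y = np.array([z_d, z_theta]) - H @ self.x
        S = H @ self.P @ H.T + self.R
        K = self.P @ H.T @ np.linalg.inv(S)
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ H) @ self.P
```

When leaves occlude the rows and no reliable line fit is available, one would call only `predict`, letting the IMU carry the estimate until LiDAR row detections resume. This is the practical benefit of fusion over purely reactive row detection.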
