Emanuël A. P. Habets

Leveraging Discriminative Latent Representations for Conditioning GAN-Based Speech Enhancement

Aug 28, 2025Abstract:Generative speech enhancement methods based on generative adversarial networks (GANs) and diffusion models have shown promising results in various speech enhancement tasks. However, their performance in very low signal-to-noise ratio (SNR) scenarios remains under-explored and limited, as these conditions pose significant challenges to both discriminative and generative state-of-the-art methods. To address this, we propose a method that leverages latent features extracted from discriminative speech enhancement models as generic conditioning features to improve GAN-based speech enhancement. The proposed method, referred to as DisCoGAN, demonstrates performance improvements over baseline models, particularly in low-SNR scenarios, while also maintaining competitive or superior performance in high-SNR conditions and on real-world recordings. We also conduct a comprehensive evaluation of conventional GAN-based architectures, including GANs trained end-to-end, GANs as a first processing stage, and post-filtering GANs, as well as discriminative models under low-SNR conditions. We show that DisCoGAN consistently outperforms existing methods. Finally, we present an ablation study that investigates the contributions of individual components within DisCoGAN and analyzes the impact of the discriminative conditioning method on overall performance.

VoxATtack: A Multimodal Attack on Voice Anonymization Systems

Jul 16, 2025Abstract:Voice anonymization systems aim to protect speaker privacy by obscuring vocal traits while preserving the linguistic content relevant for downstream applications. However, because these linguistic cues remain intact, they can be exploited to identify semantic speech patterns associated with specific speakers. In this work, we present VoxATtack, a novel multimodal de-anonymization model that incorporates both acoustic and textual information to attack anonymization systems. While previous research has focused on refining speaker representations extracted from speech, we show that incorporating textual information with a standard ECAPA-TDNN improves the attacker's performance. Our proposed VoxATtack model employs a dual-branch architecture, with an ECAPA-TDNN processing anonymized speech and a pretrained BERT encoding the transcriptions. Both outputs are projected into embeddings of equal dimensionality and then fused based on confidence weights computed on a per-utterance basis. When evaluating our approach on the VoicePrivacy Attacker Challenge (VPAC) dataset, it outperforms the top-ranking attackers on five out of seven benchmarks, namely B3, B4, B5, T8-5, and T12-5. To further boost performance, we leverage anonymized speech and SpecAugment as augmentation techniques. This enhancement enables VoxATtack to achieve state-of-the-art on all VPAC benchmarks, after scoring 20.6% and 27.2% average equal error rate on T10-2 and T25-1, respectively. Our results demonstrate that incorporating textual information and selective data augmentation reveals critical vulnerabilities in current voice anonymization methods and exposes potential weaknesses in the datasets used to evaluate them.

Dynamic Slimmable Networks for Efficient Speech Separation

Jul 08, 2025Abstract:Recent progress in speech separation has been largely driven by advances in deep neural networks, yet their high computational and memory requirements hinder deployment on resource-constrained devices. A significant inefficiency in conventional systems arises from using static network architectures that maintain constant computational complexity across all input segments, regardless of their characteristics. This approach is sub-optimal for simpler segments that do not require intensive processing, such as silence or non-overlapping speech. To address this limitation, we propose a dynamic slimmable network (DSN) for speech separation that adaptively adjusts its computational complexity based on the input signal. The DSN combines a slimmable network, which can operate at different network widths, with a lightweight gating module that dynamically determines the required width by analyzing the local input characteristics. To balance performance and efficiency, we introduce a signal-dependent complexity loss that penalizes unnecessary computation based on segmental reconstruction error. Experiments on clean and noisy two-speaker mixtures from the WSJ0-2mix and WHAM! datasets show that the DSN achieves a better performance-efficiency trade-off than individually trained static networks of different sizes.

Low-Complexity Neural Wind Noise Reduction for Audio Recordings

Jul 02, 2025Abstract:Wind noise significantly degrades the quality of outdoor audio recordings, yet remains difficult to suppress in real-time on resource-constrained devices. In this work, we propose a low-complexity single-channel deep neural network that leverages the spectral characteristics of wind noise. Experimental results show that our method achieves performance comparable to the state-of-the-art low-complexity ULCNet model. The proposed model, with only 249K parameters and roughly 73 MHz of computational power, is suitable for embedded and mobile audio applications.

You Are What You Say: Exploiting Linguistic Content for VoicePrivacy Attacks

Jun 11, 2025Abstract:Speaker anonymization systems hide the identity of speakers while preserving other information such as linguistic content and emotions. To evaluate their privacy benefits, attacks in the form of automatic speaker verification (ASV) systems are employed. In this study, we assess the impact of intra-speaker linguistic content similarity in the attacker training and evaluation datasets, by adapting BERT, a language model, as an ASV system. On the VoicePrivacy Attacker Challenge datasets, our method achieves a mean equal error rate (EER) of 35%, with certain speakers attaining EERs as low as 2%, based solely on the textual content of their utterances. Our explainability study reveals that the system decisions are linked to semantically similar keywords within utterances, stemming from how LibriSpeech is curated. Our study suggests reworking the VoicePrivacy datasets to ensure a fair and unbiased evaluation and challenge the reliance on global EER for privacy evaluations.

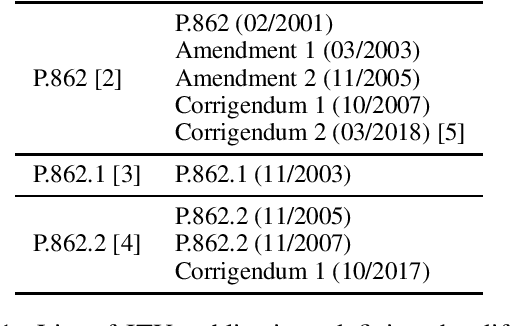

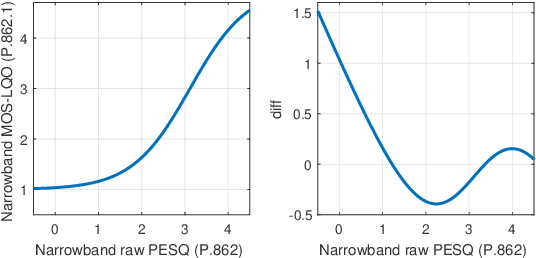

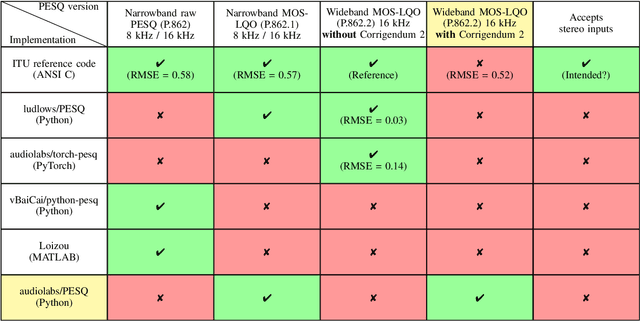

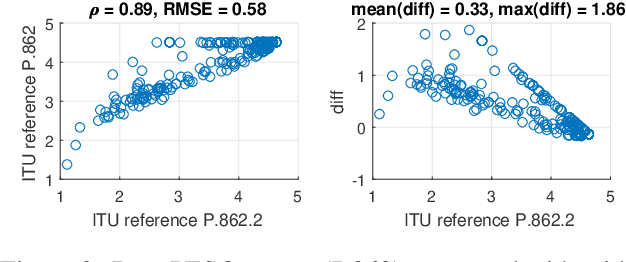

Navigating PESQ: Up-to-Date Versions and Open Implementations

May 26, 2025

Abstract:Perceptual Evaluation of Speech Quality (PESQ) is an objective quality measure that remains widely used despite its withdrawal by the International Telecommunication Union (ITU). PESQ has evolved over two decades, with multiple versions and publicly available implementations emerging during this time. The numerous versions and their updates can be overwhelming, especially for new PESQ users. This work provides practical guidance on the different versions and implementations of PESQ. We show that differences can be significant, especially between PESQ versions. We stress the importance of specifying the exact version and implementation that is used to compute PESQ, and possibly to detail how multi-channel signals are handled. These practices would facilitate the interpretation of results and allow comparisons of PESQ scores between different studies. We also provide a repository that implements the latest corrections to PESQ, i.e., Corrigendum 2, which is not implemented by any other openly available distribution: https://github.com/audiolabs/PESQ.

On the Relation Between Speech Quality and Quantized Latent Representations of Neural Codecs

Mar 05, 2025Abstract:Neural audio signal codecs have attracted significant attention in recent years. In essence, the impressive low bitrate achieved by such encoders is enabled by learning an abstract representation that captures the properties of encoded signals, e.g., speech. In this work, we investigate the relation between the latent representation of the input signal learned by a neural codec and the quality of speech signals. To do so, we introduce Latent-representation-to-Quantization error Ratio (LQR) measures, which quantify the distance from the idealized neural codec's speech signal model for a given speech signal. We compare the proposed metrics to intrusive measures as well as data-driven supervised methods using two subjective speech quality datasets. This analysis shows that the proposed LQR correlates strongly (up to 0.9 Pearson's correlation) with the subjective quality of speech. Despite being a non-intrusive metric, this yields a competitive performance with, or even better than, other pre-trained and intrusive measures. These results show that LQR is a promising basis for more sophisticated speech quality measures.

Transparent NLP: Using RAG and LLM Alignment for Privacy Q&A

Feb 10, 2025

Abstract:The transparency principle of the General Data Protection Regulation (GDPR) requires data processing information to be clear, precise, and accessible. While language models show promise in this context, their probabilistic nature complicates truthfulness and comprehensibility. This paper examines state-of-the-art Retrieval Augmented Generation (RAG) systems enhanced with alignment techniques to fulfill GDPR obligations. We evaluate RAG systems incorporating an alignment module like Rewindable Auto-regressive Inference (RAIN) and our proposed multidimensional extension, MultiRAIN, using a Privacy Q&A dataset. Responses are optimized for preciseness and comprehensibility and are assessed through 21 metrics, including deterministic and large language model-based evaluations. Our results show that RAG systems with an alignment module outperform baseline RAG systems on most metrics, though none fully match human answers. Principal component analysis of the results reveals complex interactions between metrics, highlighting the need to refine metrics. This study provides a foundation for integrating advanced natural language processing systems into legal compliance frameworks.

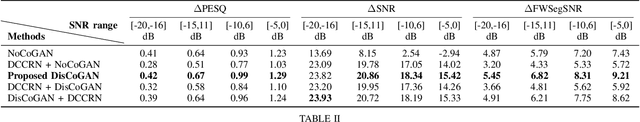

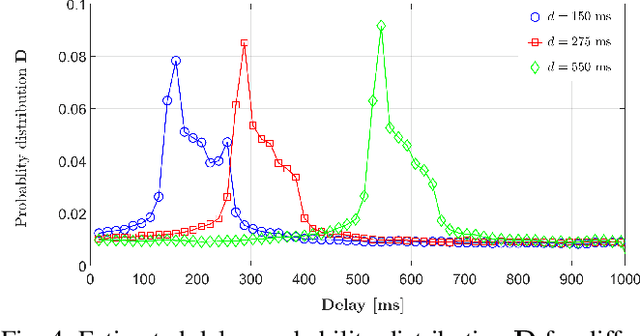

GAN-Based Speech Enhancement for Low SNR Using Latent Feature Conditioning

Oct 17, 2024

Abstract:Enhancing speech quality under adverse SNR conditions remains a significant challenge for discriminative deep neural network (DNN)-based approaches. In this work, we propose DisCoGAN, which is a time-frequency-domain generative adversarial network (GAN) conditioned by the latent features of a discriminative model pre-trained for speech enhancement in low SNR scenarios. Our proposed method achieves superior performance compared to state-of-the-arts discriminative methods and also surpasses end-to-end (E2E) trained GAN models. We also investigate the impact of various configurations for conditioning the proposed GAN model with the discriminative model and assess their influence on enhancing speech quality

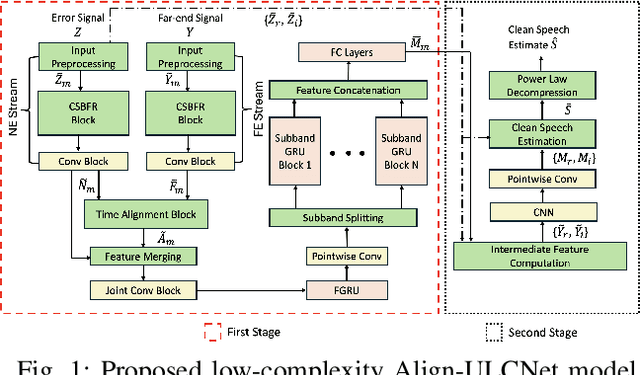

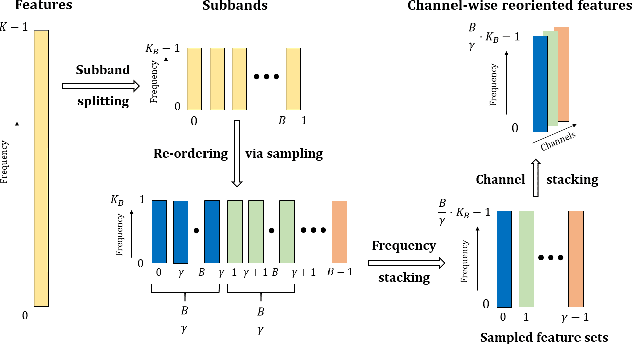

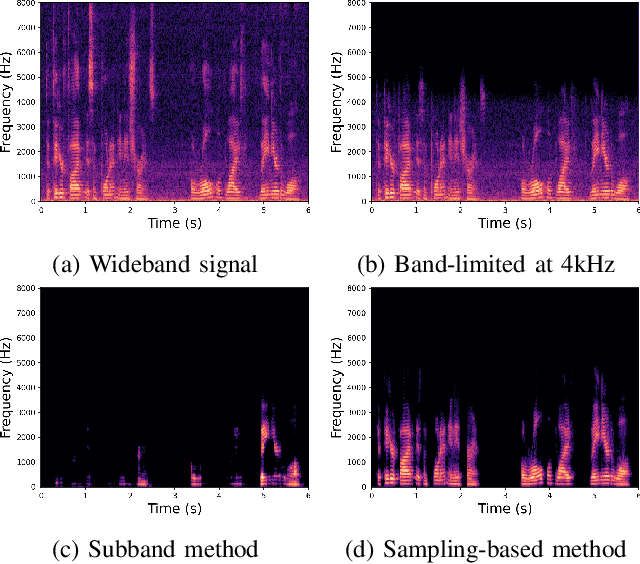

Align-ULCNet: Towards Low-Complexity and Robust Acoustic Echo and Noise Reduction

Oct 17, 2024

Abstract:The successful deployment of deep learning-based acoustic echo and noise reduction (AENR) methods in consumer devices has spurred interest in developing low-complexity solutions, while emphasizing the need for robust performance in real-life applications. In this work, we propose a hybrid approach to enhance the state-of-the-art (SOTA) ULCNet model by integrating time alignment and parallel encoder blocks for the model inputs, resulting in better echo reduction and comparable noise reduction performance to existing SOTA methods. We also propose a channel-wise sampling-based feature reorientation method, ensuring robust performance across many challenging scenarios, while maintaining overall low computational and memory requirements.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge