Ngoc Thang Vu

A Toolbox for Improving Evolutionary Prompt Search

Nov 07, 2025

Abstract: Evolutionary prompt optimization has demonstrated effectiveness in refining prompts for LLMs. However, existing approaches lack robust operators and efficient evaluation mechanisms. In this work, we propose several key improvements to evolutionary prompt optimization that can partially generalize to prompt optimization in general: 1) decomposing evolution into distinct steps to enhance the evolution and its control, 2) introducing an LLM-based judge to verify the evolutions, 3) integrating human feedback to refine the evolutionary operator, and 4) developing more efficient evaluation strategies that maintain performance while reducing computational overhead. Our approach improves both optimization quality and efficiency. We release our code, enabling prompt optimization on new tasks and facilitating further research in this area.

Use Cases for Voice Anonymization

Aug 08, 2025

Abstract: The performance of a voice anonymization system is typically measured according to its ability to hide the speaker's identity and keep the data's utility for downstream tasks. This means that the requirements the anonymization should fulfill depend on the context in which it is used and may differ greatly between use cases. However, these use cases are rarely specified in research papers. In this paper, we study the implications of use case-specific requirements on the design of voice anonymization methods. We perform an extensive literature analysis and user study to collect possible use cases and to understand the expectations of the general public towards such tools. Based on these studies, we propose the first taxonomy of use cases for voice anonymization, and derive a set of requirements and design criteria for method development and evaluation. Using this scheme, we propose to focus more on use case-oriented research and development of voice anonymization systems.

The Risks and Detection of Overestimated Privacy Protection in Voice Anonymisation

Jul 30, 2025

Abstract: Voice anonymisation aims to conceal the voice identity of speakers in speech recordings. Privacy protection is usually estimated from the difficulty of using a speaker verification system to re-identify the speaker post-anonymisation. Performance assessments are therefore dependent on the verification model as well as the anonymisation system. There is hence potential for privacy protection to be overestimated when the verification system is poorly trained, perhaps with mismatched data. In this paper, we demonstrate the insidious risk of overestimating anonymisation performance and show examples of exaggerated performance reported in the literature. For the worst case we identified, performance is overestimated by 74% relative. We then introduce a means to detect when performance assessment might be untrustworthy and show that it can identify all overestimation scenarios presented in the paper. Our solution is openly available as a fork of the 2024 VoicePrivacy Challenge evaluation toolkit.

First Steps Towards Voice Anonymization for Code-Switching Speech

Jul 02, 2025

Abstract: The goal of voice anonymization is to modify an audio recording such that the true identity of its speaker is hidden. Research on this task is typically limited to the same English read speech datasets, so the efficacy of current methods for other types of speech data remains unknown. In this paper, we present the first investigation of voice anonymization for the multilingual phenomenon of code-switching speech. We prepare two corpora for this task and propose adaptations to a multilingual anonymization model to make it applicable to code-switching speech. By testing the anonymization performance of this and two language-independent methods on the datasets, we find that only the multilingual system performs well in terms of privacy and utility preservation. Furthermore, we observe challenges in performing utility evaluations on this data because of its spontaneous character and the limited code-switching support of the multilingual speech recognition model.

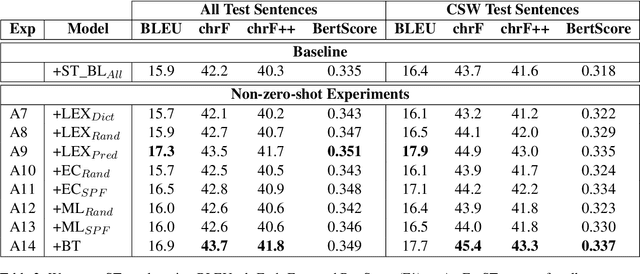

The Impact of Code-switched Synthetic Data Quality is Task Dependent: Insights from MT and ASR

Mar 30, 2025

Abstract: Code-switching, the act of alternating between languages, has emerged as a prevalent global phenomenon that needs to be addressed for building user-friendly language technologies. A main bottleneck in this pursuit is data scarcity, motivating research in the direction of code-switched data augmentation. However, current literature lacks comprehensive studies that enable us to understand the relation between the quality of synthetic data and improvements on NLP tasks. We extend previous research conducted in this direction on machine translation (MT) with results on automatic speech recognition (ASR) and cascaded speech translation (ST) to test the generalizability of findings. Our experiments involve a wide range of augmentation techniques, covering lexical replacements, linguistic theories, and back-translation. Based on the results of MT, ASR, and ST, we draw conclusions and insights regarding the efficacy of various augmentation techniques and the impact of quality on performance.

Discrete Subgraph Sampling for Interpretable Graph based Visual Question Answering

Dec 11, 2024

Abstract: Explainable artificial intelligence (XAI) aims to make machine learning models more transparent. While many approaches focus on generating explanations post-hoc, interpretable approaches, which generate the explanations intrinsically alongside the predictions, are relatively rare. In this work, we integrate different discrete subset sampling methods into a graph-based visual question answering system to compare their effectiveness in generating interpretable explanatory subgraphs intrinsically. We evaluate the methods on the GQA dataset and show that the integrated methods effectively mitigate the performance trade-off between interpretability and answer accuracy, while also achieving strong co-occurrences between answer and question tokens. Furthermore, we conduct a human evaluation to assess the interpretability of the generated subgraphs using a comparative setting with the extended Bradley-Terry model, showing that the answer and question token co-occurrence metrics strongly correlate with human preferences. Our source code is publicly available.

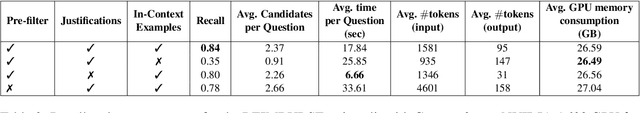

A Zero-Shot approach to the Conversational Tree Search Task

Oct 08, 2024

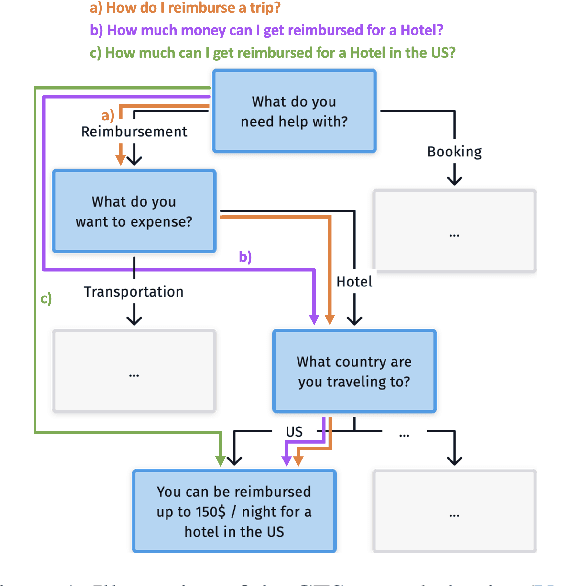

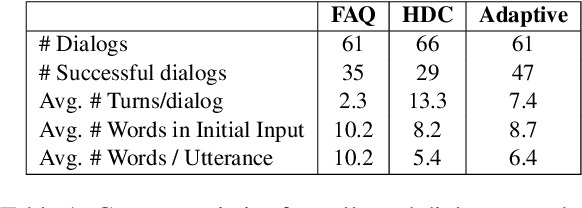

Abstract: In sensitive domains, such as legal or medical domains, the correctness of information given to users is critical. To address this, the recently introduced task Conversational Tree Search (CTS) provides a graph-based framework for controllable task-oriented dialog in sensitive domains. However, a big drawback of state-of-the-art CTS agents is their long training time, which is especially problematic as a new agent must be trained every time the associated domain graph is updated. The goal of this paper is to eliminate the need for training CTS agents altogether. To achieve this, we implement a novel LLM-based method for zero-shot, controllable CTS agents. We show that these agents significantly outperform state-of-the-art CTS agents (p<0.0001; Barnard Exact test) in simulation. This generalizes to all available CTS domains. Finally, we perform user evaluation to test the agent performance in the wild, showing that our policy significantly (p<0.05; Barnard Exact) improves task-success compared to the state-of-the-art Reinforcement Learning-based CTS agent.

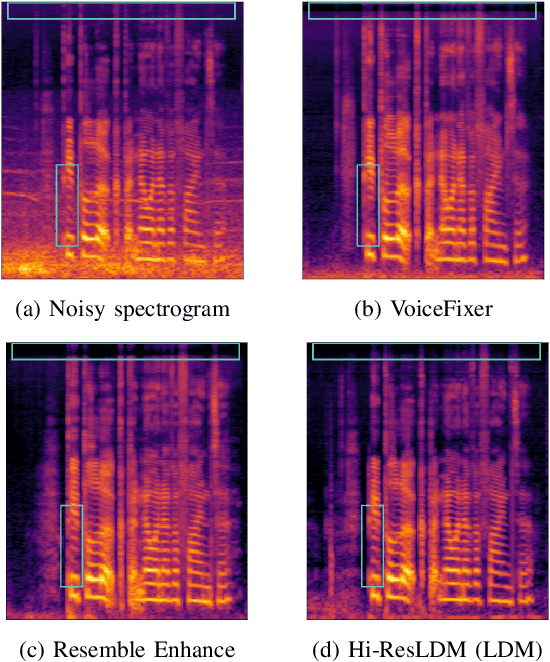

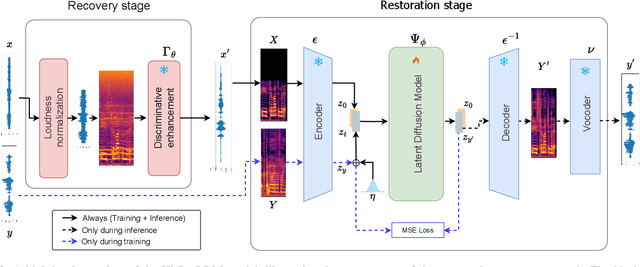

High-Resolution Speech Restoration with Latent Diffusion Model

Sep 17, 2024

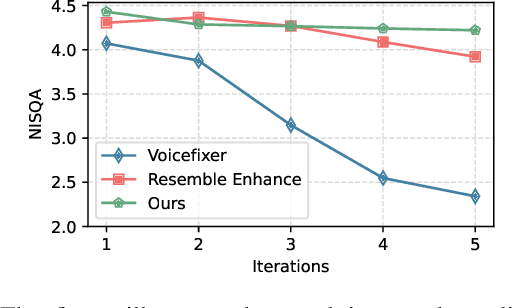

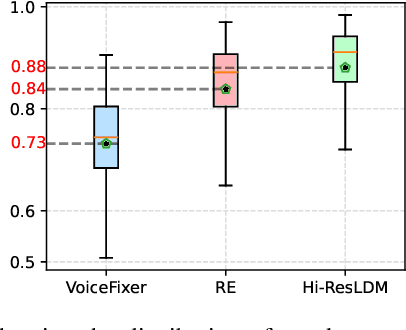

Abstract: Traditional speech enhancement methods often oversimplify the task of restoration by focusing on a single type of distortion. Generative models that handle multiple distortions frequently struggle with phone reconstruction and high-frequency harmonics, leading to breathing and gasping artifacts that reduce the intelligibility of reconstructed speech. These models are also computationally demanding, and many solutions are restricted to producing outputs in the wide-band frequency range, which limits their suitability for professional applications. To address these challenges, we propose Hi-ResLDM, a novel generative model based on latent diffusion designed to remove multiple distortions and restore speech recordings to studio quality, sampled at 48kHz. We benchmark Hi-ResLDM against state-of-the-art methods that leverage GAN and Conditional Flow Matching (CFM) components, demonstrating superior performance in regenerating high-frequency-band details. Hi-ResLDM not only excels in non-intrusive metrics but is also consistently preferred in human evaluation and performs competitively on intrusive evaluations, making it ideal for high-resolution speech restoration.

Explaining Vision-Language Similarities in Dual Encoders with Feature-Pair Attributions

Aug 26, 2024

Abstract: Dual encoder architectures like CLIP models map two types of inputs into a shared embedding space and learn similarities between them. However, it is not understood how such models compare two inputs. Here, we address this research gap with two contributions. First, we derive a method to attribute predictions of any differentiable dual encoder onto feature-pair interactions between its inputs. Second, we apply our method to CLIP-type models and show that they learn fine-grained correspondences between parts of captions and regions in images. They match objects across input modes and also account for mismatches. However, this visual-linguistic grounding ability varies heavily between object classes, depends on the training data distribution, and largely improves after in-domain training. Using our method, we can identify knowledge gaps about specific object classes in individual models and can monitor their improvement upon fine-tuning.

Investigating the effect of Mental Models in User Interaction with an Adaptive Dialog Agent

Aug 26, 2024

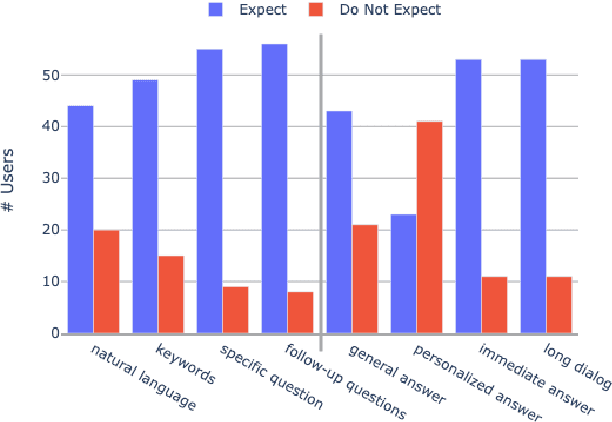

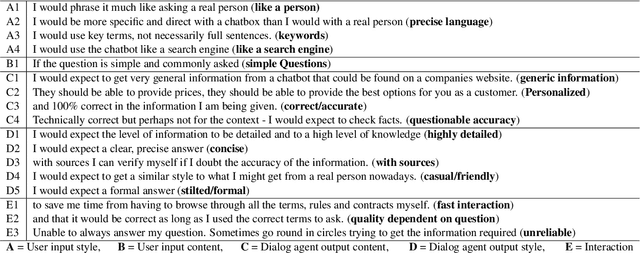

Abstract: Mental models play an important role in whether user interaction with intelligent systems, such as dialog systems, is successful or not. Adaptive dialog systems present the opportunity to align a dialog agent's behavior with heterogeneous user expectations. However, there has been little research into what mental models users form when interacting with a task-oriented dialog system, how these models affect users' interactions, or what role system adaptation can play in this process, making it challenging to avoid damage to human-AI partnership. In this work, we collect a new publicly available dataset for exploring user mental models about information-seeking dialog systems. We demonstrate that users have a variety of conflicting mental models about such systems, the validity of which directly impacts the success of their interactions and the perceived usability of the system. Furthermore, we show that adapting a dialog agent's behavior to better align with users' mental models, even when done implicitly, can improve perceived usability, dialog efficiency, and success. To this end, we argue that implicit adaptation can be a valid strategy for task-oriented dialog systems, so long as developers first have a solid understanding of users' mental models.
