Sebastian Padó

One Persona, Many Cues, Different Results: How Sociodemographic Cues Impact LLM Personalization

Jan 26, 2026

Abstract:Personalization of LLMs by sociodemographic subgroup often improves user experience, but can also introduce or amplify biases and unfair outcomes across groups. Prior work has employed so-called personas, sociodemographic user attributes conveyed to a model, to study bias in LLMs by relying on a single cue to prompt a persona, such as user names or explicit attribute mentions. This disregards LLM sensitivity to prompt variations (robustness) and the rarity of some cues in real interactions (external validity). We compare six commonly used persona cues across seven open and proprietary LLMs on four writing and advice tasks. While cues are overall highly correlated, they produce substantial variance in responses across personas. We therefore caution against claims from a single persona cue and recommend future personalization research to evaluate multiple externally valid cues.

Beyond Marginal Distributions: A Framework to Evaluate the Representativeness of Demographic-Aligned LLMs

Jan 22, 2026

Abstract:Large language models are increasingly used to represent human opinions, values, or beliefs, and their steerability towards these ideals is an active area of research. Existing work focuses predominantly on aligning marginal response distributions, treating each survey item independently. While essential, this may overlook deeper latent structures that characterise real populations and underpin cultural values theories. We propose a framework for evaluating the representativeness of aligned models through multivariate correlation patterns in addition to marginal distributions. We show the value of our evaluation scheme by comparing two model steering techniques (persona prompting and demographic fine-tuning) and evaluating them against human responses from the World Values Survey. While the demographically fine-tuned model better approximates marginal response distributions than persona prompting, both techniques fail to fully capture the gold standard correlation patterns. We conclude that representativeness is a distinct aspect of value alignment and an evaluation focused on marginals can mask structural failures, leading to overly optimistic conclusions about model capabilities.

Do Political Opinions Transfer Between Western Languages? An Analysis of Unaligned and Aligned Multilingual LLMs

Aug 07, 2025

Abstract:Public opinion surveys show cross-cultural differences in political opinions between socio-cultural contexts. However, there is no clear evidence whether these differences translate to cross-lingual differences in multilingual large language models (MLLMs). We analyze whether opinions transfer between languages or whether there are separate opinions for each language in MLLMs of various sizes across five Western languages. We evaluate MLLMs' opinions by prompting them to report their (dis)agreement with political statements from voting advice applications. To better understand the interaction between languages in the models, we evaluate them both before and after aligning them with more left or right views using direct preference optimization and English alignment data only. Our findings reveal that unaligned models show only very few significant cross-lingual differences in the political opinions they reflect. The political alignment shifts opinions almost uniformly across all five languages. We conclude that in Western language contexts, political opinions transfer between languages, demonstrating the challenges in achieving explicit socio-linguistic, cultural, and political alignment of MLLMs.

Computational Analysis of Gender Depiction in the Comedias of Calderón de la Barca

Nov 06, 2024

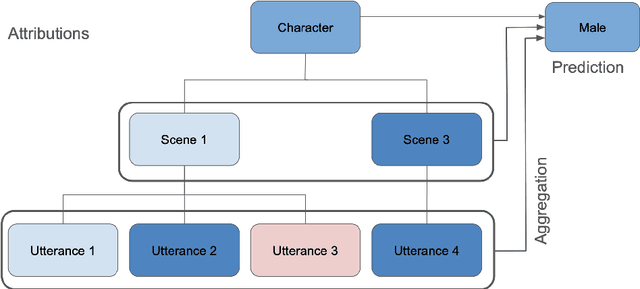

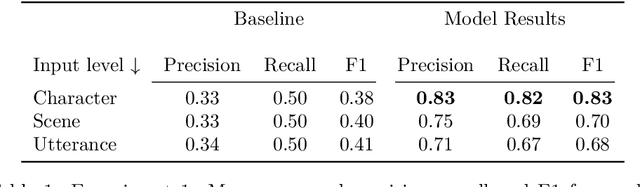

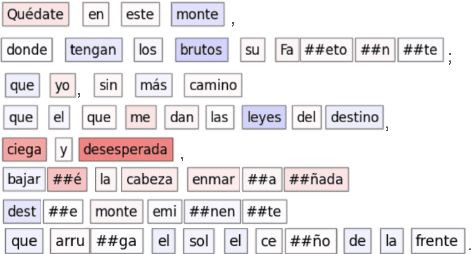

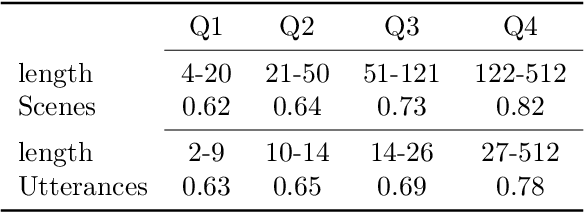

Abstract:In theatre, playwrights use the portrayal of characters to explore culturally based gender norms. In this paper, we develop quantitative methods to study gender depiction in the non-religious works (comedias) of Pedro Calderón de la Barca, a prolific Spanish 17th century author. We gather insights from a corpus of more than 100 plays by using a gender classifier and applying model explainability (attribution) methods to determine which text features are most influential in the model's decision to classify speech as 'male' or 'female', indicating the most gendered elements of dialogue in Calderón's comedias in a human accessible manner. We find that female and male characters are portrayed differently and can be identified by the gender prediction model at practically useful accuracies (up to f=0.83). Analysis reveals semantic aspects of gender portrayal, and demonstrates that the model is even useful in providing a relatively accurate scene-by-scene prediction of cross-dressing characters.

Toeing the Party Line: Election Manifestos as a Key to Understand Political Discourse on Twitter

Oct 21, 2024

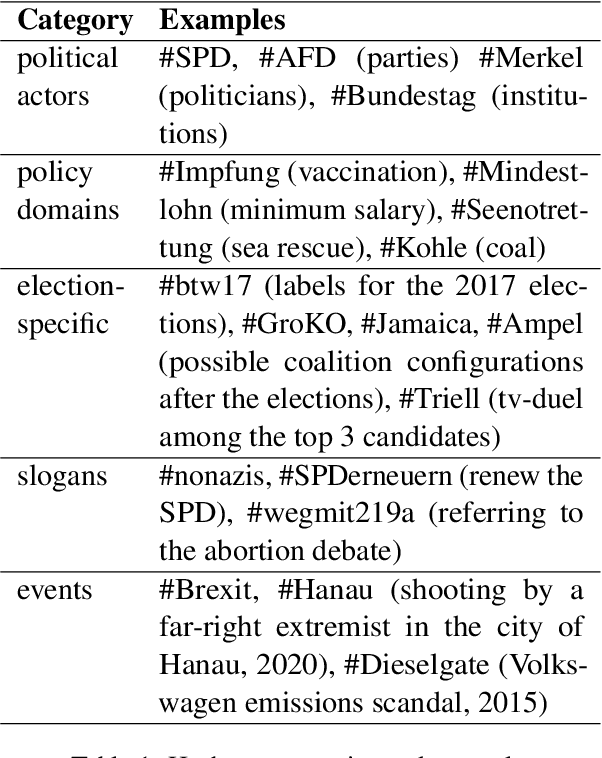

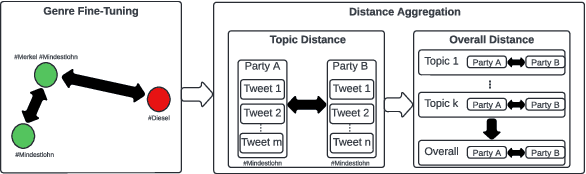

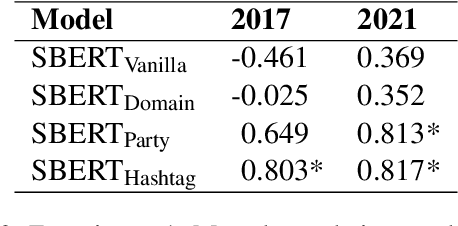

Abstract:Political discourse on Twitter is a moving target: politicians continuously make statements about their positions. It is therefore crucial to track their discourse on social media to understand their ideological positions and goals. However, Twitter data is also challenging to work with since it is ambiguous and often dependent on social context, and consequently, recent work on political positioning has tended to focus strongly on manifestos (parties' electoral programs) rather than social media. In this paper, we extend recently proposed methods to predict pairwise positional similarities between parties from the manifesto case to the Twitter case, using hashtags as a signal to fine-tune text representations, without the need for manual annotation. We verify the efficacy of fine-tuning and conduct a series of experiments that assess the robustness of our method for low-resource scenarios. We find that our method yields stable positioning reflective of manifesto positioning, both in scenarios with all tweets of candidates across years available and when only smaller subsets from shorter time periods are available. This indicates that it is possible to reliably analyze the relative positioning of actors forgoing manual annotation, even in the noisier context of social media.

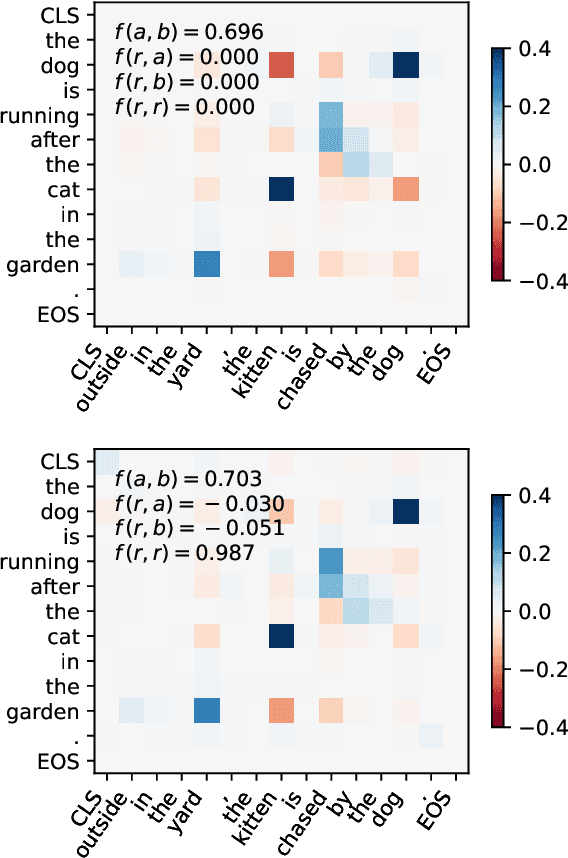

Explaining Vision-Language Similarities in Dual Encoders with Feature-Pair Attributions

Aug 26, 2024

Abstract:Dual encoder architectures like CLIP models map two types of inputs into a shared embedding space and learn similarities between them. However, it is not understood how such models compare two inputs. Here, we address this research gap with two contributions. First, we derive a method to attribute predictions of any differentiable dual encoder onto feature-pair interactions between its inputs. Second, we apply our method to CLIP-type models and show that they learn fine-grained correspondences between parts of captions and regions in images. They match objects across input modes and also account for mismatches. However, this visual-linguistic grounding ability heavily varies between object classes, depends on the training data distribution, and largely improves after in-domain training. Using our method we can identify knowledge gaps about specific object classes in individual models and can monitor their improvement upon fine-tuning.

Multi-Dimensional Machine Translation Evaluation: Model Evaluation and Resource for Korean

Mar 19, 2024

Abstract:Almost all frameworks for the manual or automatic evaluation of machine translation characterize the quality of an MT output with a single number. An exception is the Multidimensional Quality Metrics (MQM) framework which offers a fine-grained ontology of quality dimensions for scoring (such as style, fluency, accuracy, and terminology). Previous studies have demonstrated the feasibility of MQM annotation but there are, to our knowledge, no computational models that predict MQM scores for novel texts, due to a lack of resources. In this paper, we address these shortcomings by (a) providing a 1200-sentence MQM evaluation benchmark for the language pair English-Korean and (b) reframing MT evaluation as the multi-task problem of simultaneously predicting several MQM scores using SOTA language models, both in a reference-based MT evaluation setup and a reference-free quality estimation (QE) setup. We find that reference-free setup outperforms its counterpart in the style dimension while reference-based models retain an edge regarding accuracy. Overall, RemBERT emerges as the most promising model. Through our evaluation, we offer an insight into the translation quality in a more fine-grained, interpretable manner.

Towards Understanding the Relationship between In-context Learning and Compositional Generalization

Mar 18, 2024

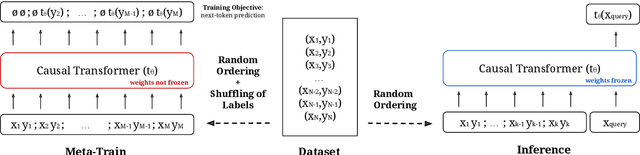

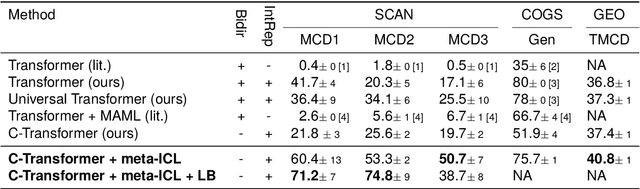

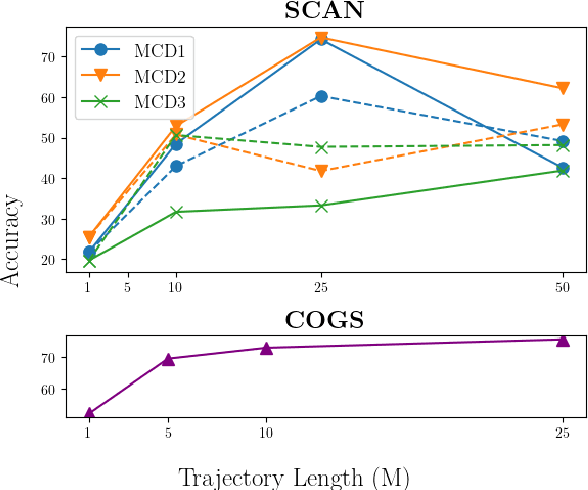

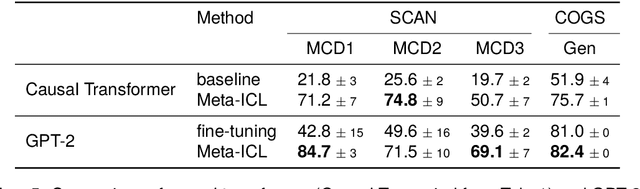

Abstract:According to the principle of compositional generalization, the meaning of a complex expression can be understood as a function of the meaning of its parts and of how they are combined. This principle is crucial for human language processing and also, arguably, for NLP models in the face of out-of-distribution data. However, many neural network models, including Transformers, have been shown to struggle with compositional generalization. In this paper, we hypothesize that forcing models to in-context learn can provide an inductive bias to promote compositional generalization. To test this hypothesis, we train a causal Transformer in a setting that renders ordinary learning very difficult: we present it with different orderings of the training instance and shuffle instance labels. This corresponds to training the model on all possible few-shot learning problems attainable from the dataset. The model can solve the task, however, by utilizing earlier examples to generalize to later ones (i.e. in-context learning). In evaluations on the datasets, SCAN, COGS, and GeoQuery, models trained in this manner indeed show improved compositional generalization. This indicates the usefulness of in-context learning problems as an inductive bias for generalization.

Beyond prompt brittleness: Evaluating the reliability and consistency of political worldviews in LLMs

Feb 27, 2024

Abstract:Due to the widespread use of large language models (LLMs) in ubiquitous systems, we need to understand whether they embed a specific worldview and what these views reflect. Recent studies report that, prompted with political questionnaires, LLMs show left-liberal leanings. However, it is as yet unclear whether these leanings are reliable (robust to prompt variations) and whether the leaning is consistent across policies and political leaning. We propose a series of tests which assess the reliability and consistency of LLMs' stances on political statements based on a dataset of voting-advice questionnaires collected from seven EU countries and annotated for policy domains. We study LLMs ranging in size from 7B to 70B parameters and find that their reliability increases with parameter count. Larger models show overall stronger alignment with left-leaning parties but differ among policy programs: They evince a (left-wing) positive stance towards environment protection, social welfare but also (right-wing) law and order, with no consistent preferences in foreign policy, migration, and economy.

Approximate Attributions for Off-the-Shelf Siamese Transformers

Feb 05, 2024

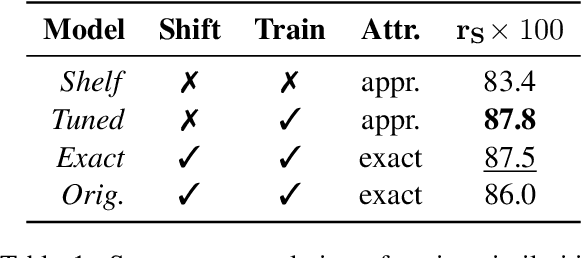

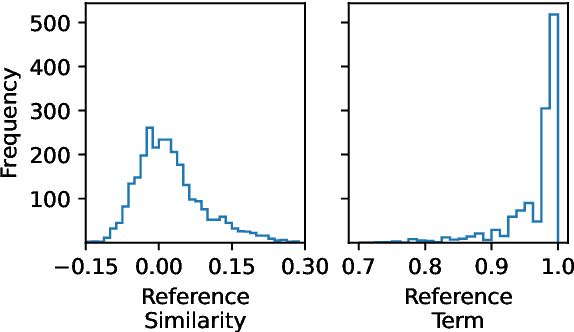

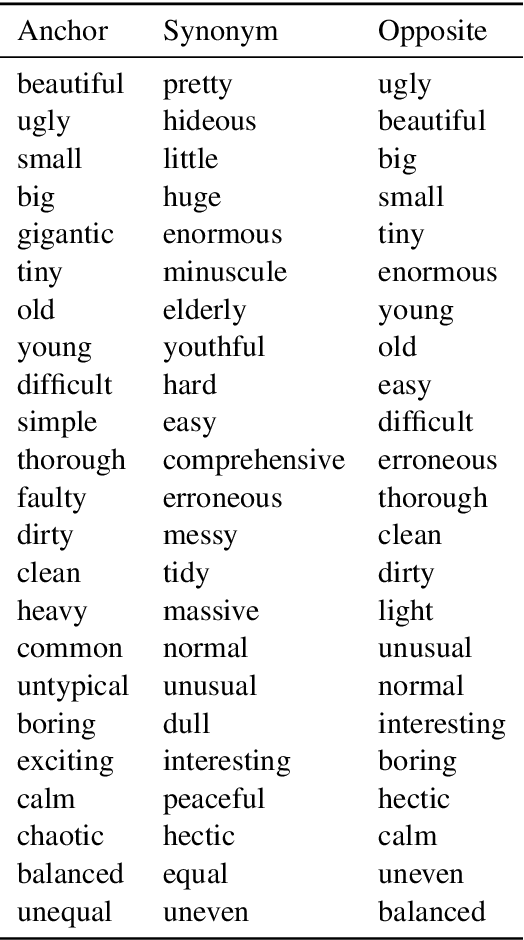

Abstract:Siamese encoders such as sentence transformers are among the least understood deep models. Established attribution methods cannot tackle this model class since it compares two inputs rather than processing a single one. To address this gap, we have recently proposed an attribution method specifically for Siamese encoders (Möller et al., 2023). However, it requires models to be adjusted and fine-tuned and therefore cannot be directly applied to off-the-shelf models. In this work, we reassess these restrictions and propose (i) a model with exact attribution ability that retains the original model's predictive performance and (ii) a way to compute approximate attributions for off-the-shelf models. We extensively compare approximate and exact attributions and use them to analyze the models' attendance to different linguistic aspects. We gain insights into which syntactic roles Siamese transformers attend to, confirm that they mostly ignore negation, explore how they judge semantically opposite adjectives, and find that they exhibit lexical bias.
