Andreas Walther

Blind Acoustic Parameter Estimation Through Task-Agnostic Embeddings Using Latent Approximations

Jul 29, 2024

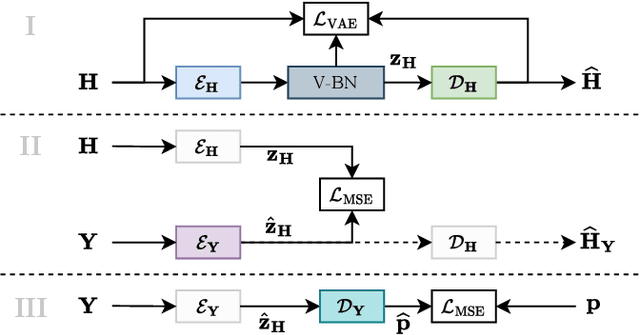

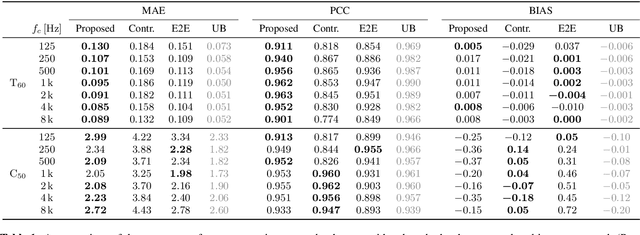

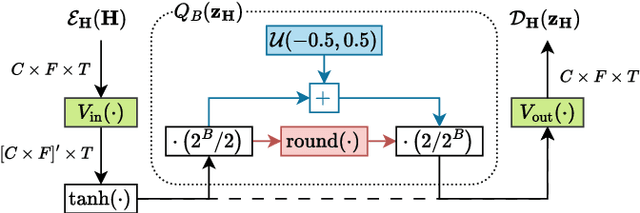

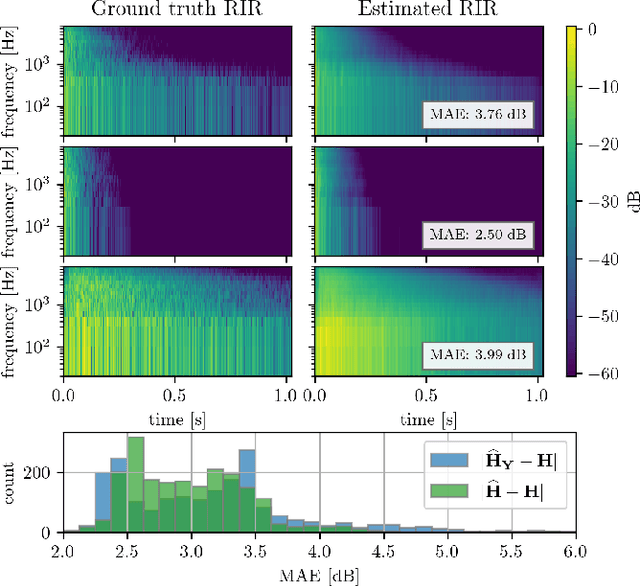

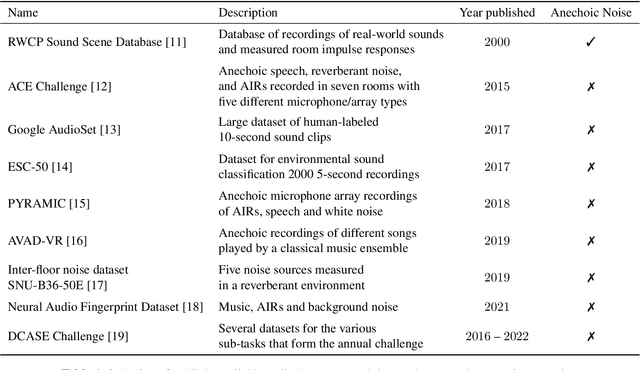

Abstract:We present a method for blind acoustic parameter estimation from single-channel reverberant speech. The method is structured into three stages. In the first stage, a variational auto-encoder is trained to extract latent representations of acoustic impulse responses represented as mel-spectrograms. In the second stage, a separate speech encoder is trained to estimate low-dimensional representations from short segments of reverberant speech. Finally, the pre-trained speech encoder is combined with a small regression model and evaluated on two parameter regression tasks. Experimentally, the proposed method is shown to outperform a fully end-to-end trained baseline model.

Data-driven Joint Detection and Localization of Acoustic Reflectors

Feb 09, 2024Abstract:Room geometry inference algorithms rely on the localization of acoustic reflectors to identify boundary surfaces of an enclosure. Rooms with highly absorptive walls or walls at large distances from the measurement setup pose challenges for such algorithms. As it is not always possible to localize all walls, we present a data-driven method to jointly detect and localize acoustic reflectors that correspond to nearby and/or reflective walls. A multi-branch convolutional recurrent neural network is employed for this purpose. The network's input consists of a time-domain acoustic beamforming map, obtained via Radon transform from multi-channel room impulse responses. A modified loss function is proposed that forces the network to pay more attention to walls that can be estimated with a small error. Simulation results show that the proposed method can detect nearby and/or reflective walls and improve the localization performance for the detected walls.

Data-driven 3D Room Geometry Inference with a Linear Loudspeaker Array and a Single Microphone

Aug 28, 2023

Abstract:Knowing the room geometry may be very beneficial for many audio applications, including sound reproduction, acoustic scene analysis, and sound source localization. Room geometry inference (RGI) deals with the problem of reflector localization (RL) based on a set of room impulse responses (RIRs). Motivated by the increasing popularity of commercially available soundbars, this article presents a data-driven 3D RGI method using RIRs measured from a linear loudspeaker array to a single microphone. A convolutional recurrent neural network (CRNN) is trained using simulated RIRs in a supervised fashion for RL. The Radon transform, which is equivalent to delay-and-sum beamforming, is applied to multi-channel RIRs, and the resulting time-domain acoustic beamforming map is fed into the CRNN. The room geometry is inferred from the microphone position and the reflector locations estimated by the network. The results obtained using measured RIRs show that the proposed data-driven approach generalizes well to unseen RIRs and achieves an accuracy level comparable to a baseline model-driven RGI method that involves intermediate semi-supervised steps, thereby offering a unified and fully automated RGI framework.

Contrastive Representation Learning for Acoustic Parameter Estimation

Mar 13, 2023Abstract:A study is presented in which a contrastive learning approach is used to extract low-dimensional representations of the acoustic environment from single-channel, reverberant speech signals. Convolution of room impulse responses (RIRs) with anechoic source signals is leveraged as a data augmentation technique that offers considerable flexibility in the design of the upstream task. We evaluate the embeddings across three different downstream tasks, which include the regression of acoustic parameters reverberation time RT60 and clarity index C50, and the classification into small and large rooms. We demonstrate that the learned representations generalize well to unseen data and perform similarly to a fully-supervised baseline.

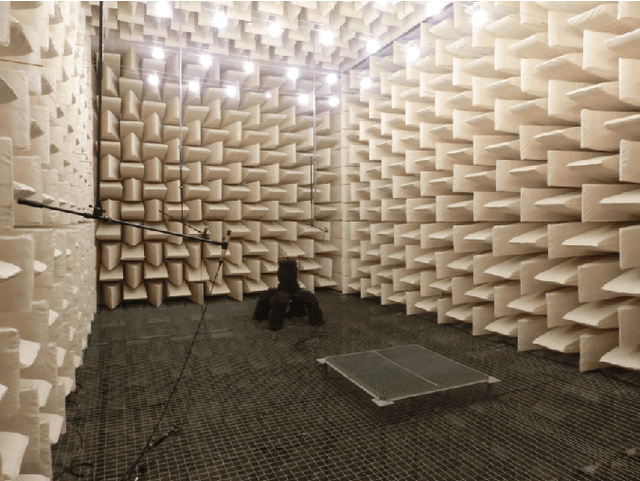

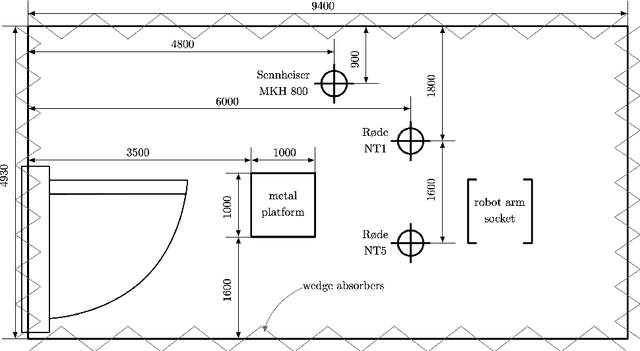

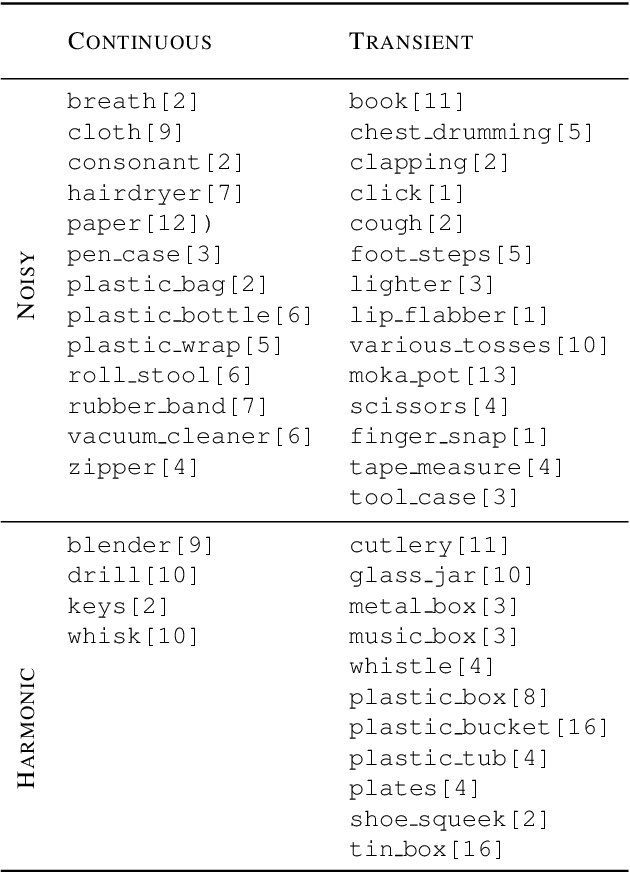

AID: Open-source Anechoic Interferer Dataset

Aug 05, 2022

Abstract:A dataset of anechoic recordings of various sound sources encountered in domestic environments is presented. The dataset is intended to be a resource of non-stationary, environmental noise signals that, when convolved with acoustic impulse responses, can be used to simulate complex acoustic scenes. Additionally, a Python library is provided to generate random mixtures of the recordings in the dataset, which can be used as non-stationary interference signals.

Blind Reverberation Time Estimation in Dynamic Acoustic Conditions

Feb 23, 2022

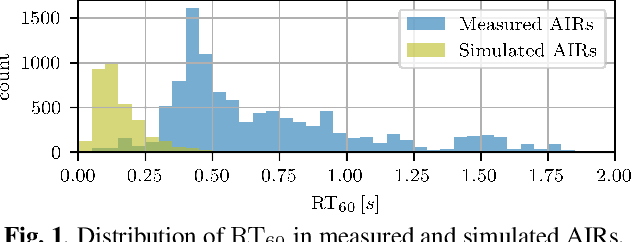

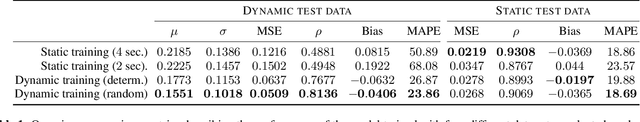

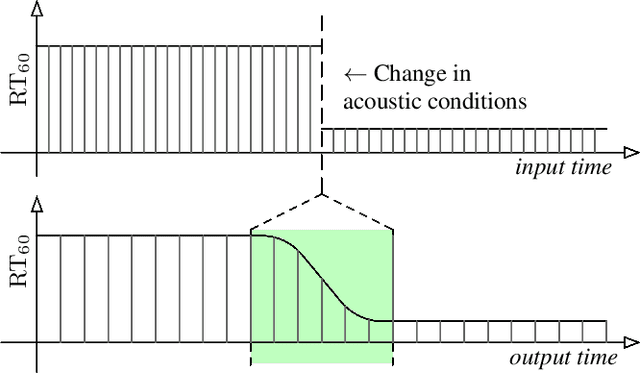

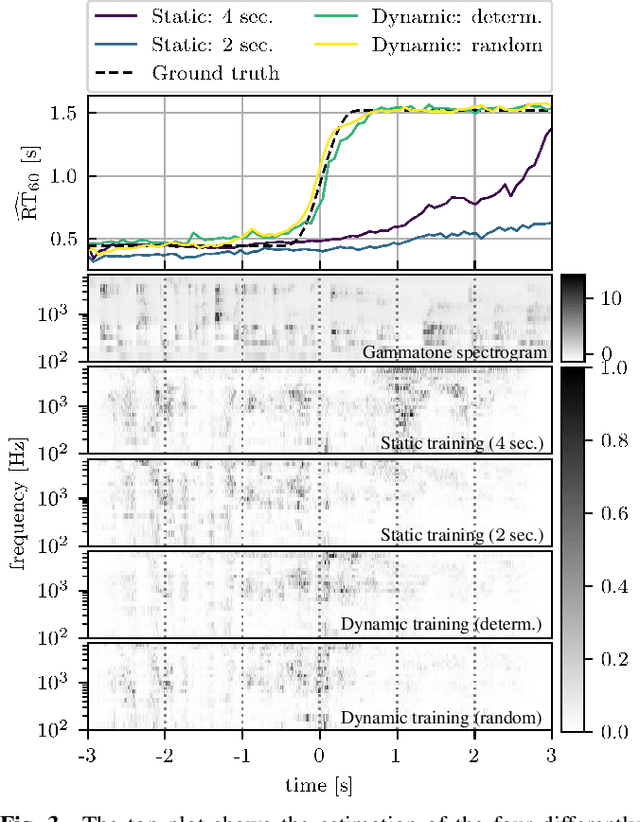

Abstract:The estimation of reverberation time from real-world signals plays a central role in a wide range of applications. In many scenarios, acoustic conditions change over time which in turn requires the estimate to be updated continuously. Previously proposed methods involving deep neural networks were mostly designed and tested under the assumption of static acoustic conditions. In this work, we show that these approaches can perform poorly in dynamically evolving acoustic environments. Motivated by a recent trend towards data-centric approaches in machine learning, we propose a novel way of generating training data and demonstrate, using an existing deep neural network architecture, the considerable improvement in the ability to follow temporal changes in reverberation time.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge